ROS多机通信与话题控制

第一次写博客,写得不好大家见谅

任务描述

背景:师兄在仿真中完成了多机建图,想要在真机上验证一下。具体需求:对于单个机器人,师兄想要得到某个时刻这个机器人的一些状态信息,并且要保存成npy格式;并且实现多机协同(多个机器人的数据获取和控制)。下面是具体的解决方案。

键盘控制获取数据

需要安装 pynput 这个库,如果是python3,直接install即可;如果是python2,需要寻找该库的就版本以支持python2。

#!/usr/bin/env python

# -*-coding:utf-8-*

import rospy

from pynput import keyboard

count = 0

def on_press(key):

global count

if key == keyboard.Key.space:

count += 1

print(count)

def main():

rospy.init_node('keyboard_counter', anonymous=True)

rospy.loginfo("按空格键来增加计数。")

# 收听键盘事件

listener = keyboard.Listener(on_press=on_press)

listener.start()

# 保持节点运行直到被关闭

rospy.spin()

if __name__ == '__main__':

try:

main()

except rospy.ROSInterruptException:

pass

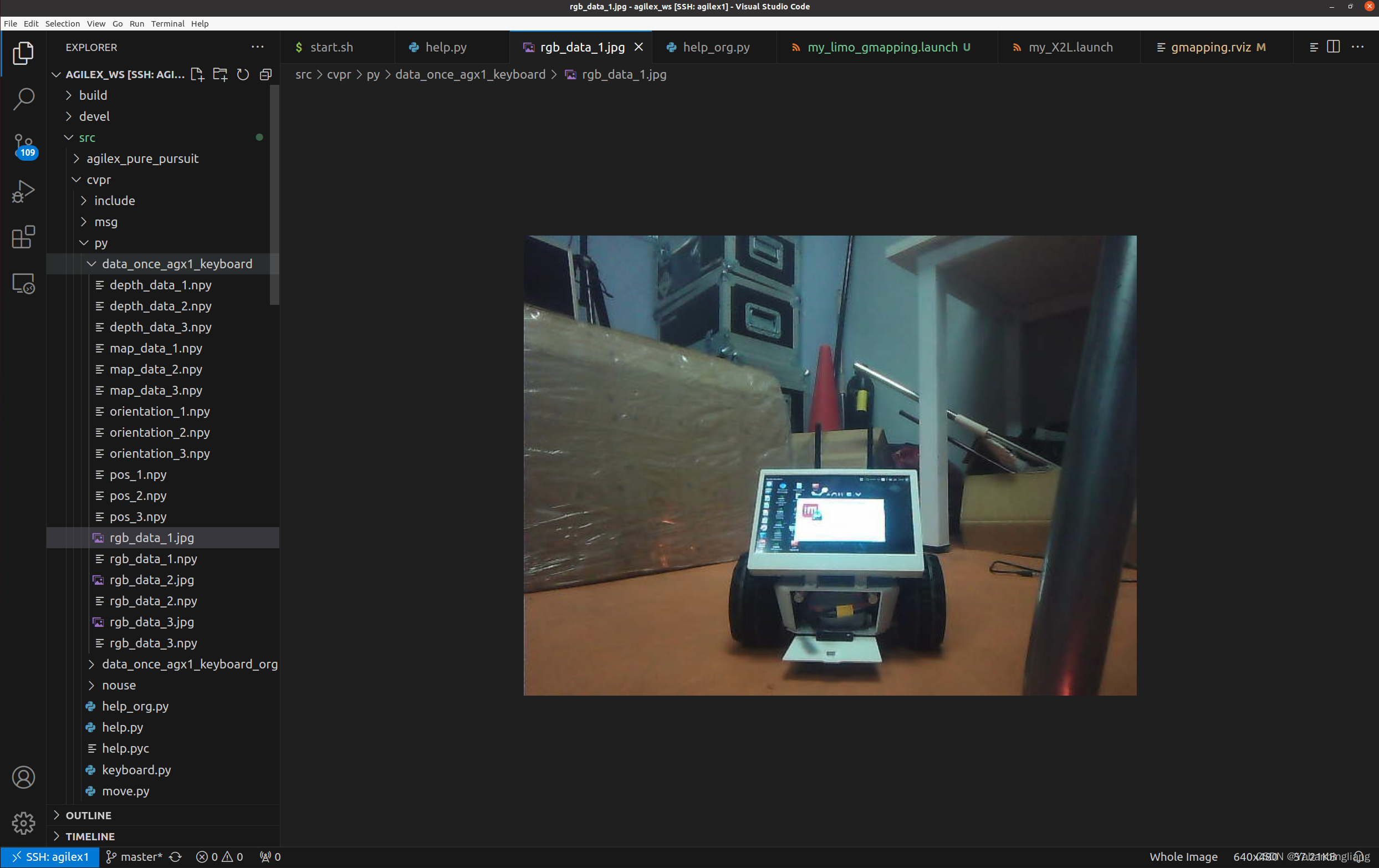

利用键盘保存当前时刻机器人的数据

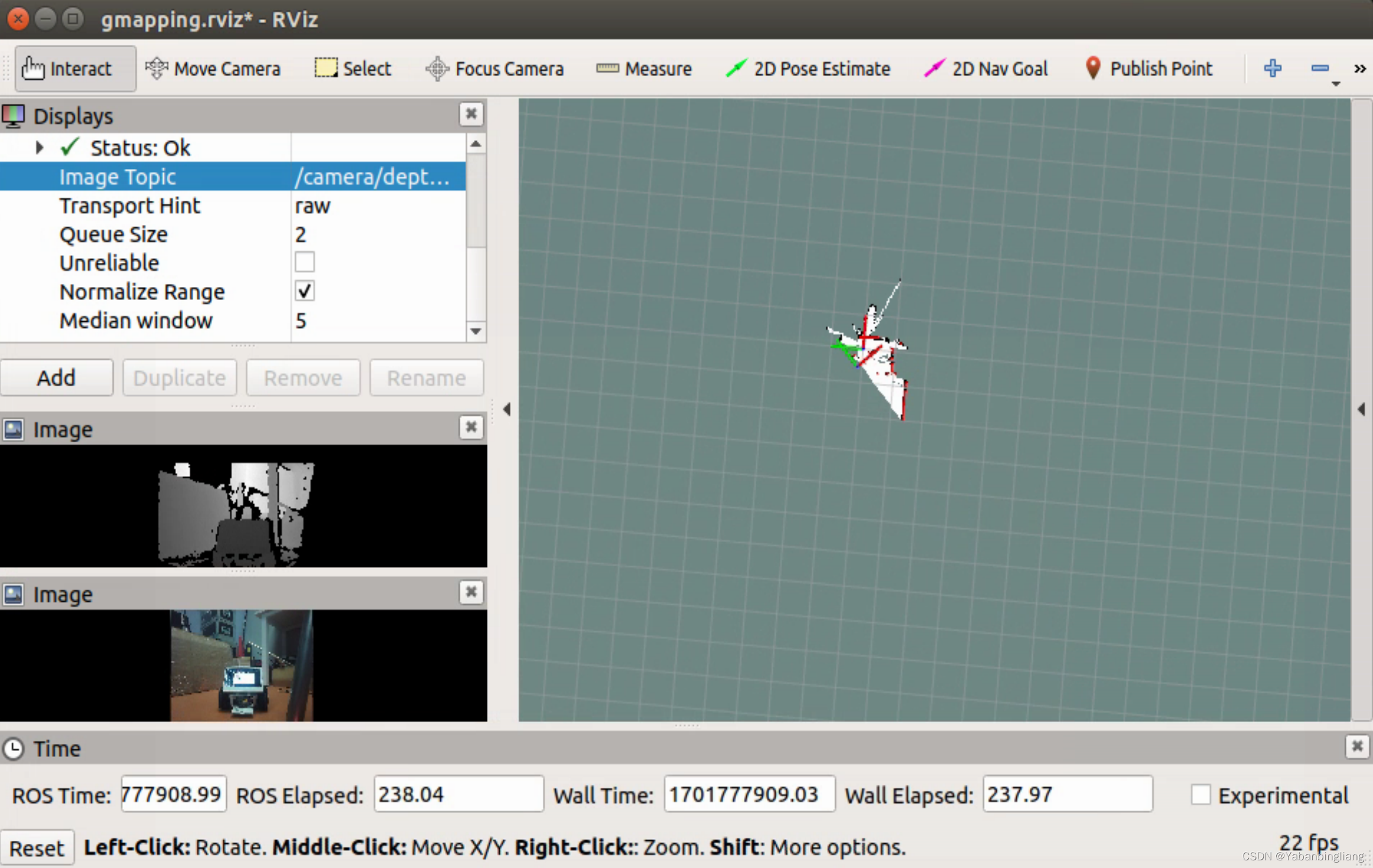

单个机器人启动底盘、相机和 gmapping后效果如图

help.py如下 主要功能是获取小车的odom、rgb、depth 和 map,并且能够通过敲击键盘保存当前小车的这四个信息。

import os

import rospy

import numpy as np

from sensor_msgs.msg import Image

from nav_msgs.msg import Odometry

from nav_msgs.msg import OccupancyGrid

from cv_bridge import CvBridge, CvBridgeError

import cv2

import time

import json

import pickle

from pynput import keyboard

import threading

from cvpr.msg import save

class getMsg(object):

def __init__(self, check_topic=True, saveimg=False):

self.keyNum = 0

self.counts = 0

self.saveNum = 0

self.rgb_callback_count = 0

self.depth_callback_count = 0

self.rgb = None

self.depth = None

self.odom = None

self.map = None

self.saveimg = saveimg

self.rgb_init = False

self.depth_init = False

self.odom_init = False

self.map_init = False

self.map_sub = rospy.Subscriber("/map", OccupancyGrid, self.map_callback)

self.odom_sub = rospy.Subscriber("/odom", Odometry, self.odom_callback)

self.rgb_sub = rospy.Subscriber("/camera/rgb/image_raw", Image, self.rgb_callback)

self.depth_sub = rospy.Subscriber("/camera/depth/image_raw", Image, self.depth_callback)

self.save_sub = rospy.Subscriber("/save", save, self.save_callback)

self.data = None

self.rgb_callback_np = [] # N * 480 * 640 * 3

self.depth_callback_np = [] # N * 480 * 640 * 3

self.pos_np = [] # x y z

self.orientation_np = [] # x y z w

self.map_data_np = [] # 1984 * 1984 array

self.map_meta_data_np = [] # x y z + x y z w

self.data_num = 0

if check_topic:

while not self.rgb_init:

continue

while not self.depth_init:

continue

while not self.odom_init:

continue

while not self.map_init:

continue

rospy.loginfo("getMsg Finish Subscriber Init...")

else:

rospy.loginfo("Jump Subscriber Init...")

def rgb_callback(self, msg):

self.rgb_init = True

self.rgb = msg

def depth_callback(self, msg):

self.depth_init = True

self.depth = msg

def odom_callback(self, msg):

self.odom_init = True

self.odom = msg

self.counts = 1

def map_callback(self, msg):

self.map_init = True

self.map = msg

def save_callback(self, msg):

self.save = msg

print("save = %d", msg)

def msg2npy(self, once=False):

rgb_msg = self.rgb

bridge = CvBridge()

cv_image = bridge.imgmsg_to_cv2(rgb_msg, "bgr8")

rgb = np.array(cv_image)

depth_msg = self.depth

bridge = CvBridge()

cv_image = bridge.imgmsg_to_cv2(depth_msg, desired_encoding="32FC1")

depth = np.array(cv_image)

pos = self.odom.pose.pose.position

orientation = self.odom.pose.pose.orientation

self.pos_np.append([pos.x, pos.y, pos.z])

self.orientation_np.append([orientation.x, orientation.y, orientation.z, orientation.w])

map = self.map

map_meta_data = map.info

map_data = np.array(map.data).reshape(map_meta_data.width, map_meta_data.height)

self.map_data_np.append(map_data)

self.map_meta_data_np = [map_meta_data.origin.position.x, map_meta_data.origin.position.y, map_meta_data.origin.position.z,

map_meta_data.origin.orientation.x, map_meta_data.origin.orientation.y, map_meta_data.origin.orientation.z, map_meta_data.origin.orientation.w

]

self.data_num += 1

if once:

dir_name = "data_once_agx1"

# print(dir_name)

if not os.path.exists(dir_name):

os.makedirs(dir_name)

np.save(dir_name + "/rgb_data_{}.npy".format(self.data_num), rgb)

np.save(dir_name + "/depth_data_{}.npy".format(self.data_num), depth)

# np.save(dir_name + "/" + "pos_" + self.data_num + ".npy", pos)

np.save(dir_name + "/pos_{}.npy".format(self.data_num), [pos.x, pos.y, pos.z])

np.save(dir_name + "/orientation_{}.npy".format(self.data_num), [orientation.x, orientation.y, orientation.z, orientation.w])

np.save(dir_name + "/map_data_{}.npy".format(self.data_num), map_data)

def onPress(self, key):

if key == keyboard.Key.space:

rgb_msg = self.rgb

bridge = CvBridge()

cv_image = bridge.imgmsg_to_cv2(rgb_msg, "bgr8")

rgb = np.array(cv_image)

depth_msg = self.depth

bridge = CvBridge()

cv_image = bridge.imgmsg_to_cv2(depth_msg, desired_encoding="32FC1")

depth = np.array(cv_image)

pos = self.odom.pose.pose.position

orientation = self.odom.pose.pose.orientation

# print(orientation)

map = self.map

map_meta_data = map.info

map_data = np.array(map.data).reshape(map_meta_data.width, map_meta_data.height)

self.keyNum += 1

# rospy.loginfo(f"cur num: {self.keyNum}")

print("cur num %d" % self.keyNum)

dir_name = "data_once_agx1_keyboard"

if not os.path.exists(dir_name):

os.makedirs(dir_name)

np.save(dir_name + "/rgb_data_{}.npy".format(self.keyNum), rgb)

np.save(dir_name + "/depth_data_{}.npy".format(self.keyNum), depth)

np.save(dir_name + "/pos_{}.npy".format(self.keyNum), [pos.x, pos.y, pos.z])

# np.save(dir_name + "/pos_{}.npy".format(self.keyNum), [0, 0, self.keyNum])

np.save(dir_name + "/orientation_{}.npy".format(self.keyNum), [orientation.x, orientation.y, orientation.z, orientation.w])

np.save(dir_name + "/map_data_{}.npy".format(self.keyNum), map_data)

if self.saveimg:

cv2.imwrite(dir_name + "/rgb_data_{}.jpg".format(self.keyNum), rgb)

rospy.sleep(0.5)

else:

print("wait")

def getData(self):

return self.pos_np, self.orientation_np, self.map_data_np, self.map_meta_data_np

def save(self, once=True, vedio=True):

# self.data["pos"] = self.pos_np

# self.data["orientation"] = self.orientation_np

dir_name = "data_agx3"

if not os.path.exists(dir_name):

os.makedirs(dir_name)

if not once:

np.save(dir_name + "/" + "pos.npy", self.pos_np)

np.save(dir_name + "/" + "orientation.npy", self.orientation_np)

np.save(dir_name + "/" + "map_data.npy", self.map_data_np)

np.save(dir_name + "/" + "map_meta_data.npy", self.map_meta_data_np)

if vedio:

np.save(dir_name + "/" + "rgb_callback_np.npy", self.rgb_callback_np)

np.save(dir_name + "/" + "depth_callback_np.npy", self.depth_callback_np)

rospy.loginfo("rgb_callback_np saved %f data", len(self.rgb_callback_np))

rospy.loginfo("depth_callback_np saved %f data", len(self.depth_callback_np))

def visualMap(self):

map = self.map

map_meta_data = map.info

map_data = np.array(map.data).reshape(map_meta_data.width, map_meta_data.height)

row, col = map_data.shape

# print row, col

tmp = np.zeros((row, col))

for i in range(row):

for j in range(col):

if map_data[i][j] == -1:

tmp[i][j] = 255

else:

tmp[i][j] = map_data[i][j]

cv2.imwrite("map_image.png", tmp)

rospy.loginfo("map_image saved")

# cv2.imshow("map", tmp)

# cv2.waitKey(0)

def getMap(self):

return self.map

if __name__ == '__main__':

rospy.init_node('msg2npy', anonymous=True)

data2npy = getMsg()

curNum = 0

rospy.loginfo("Ready to rec msg and save")

data2npy.msg2npy()

keyboard.py如下 运行环境是python2

#!/usr/bin/env python2

# -*-coding:utf-8-*

import rospy

from pynput import keyboard

from help import getMsg

if __name__ == '__main__':

try:

rospy.init_node('keyboard_control', anonymous=True)

print("按空格键来增加计数。")

data2npy = getMsg(check_topic=True, saveimg=True)

# 收听键盘事件

listener = keyboard.Listener(on_press=data2npy.onPress)

listener.start()

# 保持节点运行直到被关闭

rospy.spin()

except rospy.ROSInterruptException:

pass

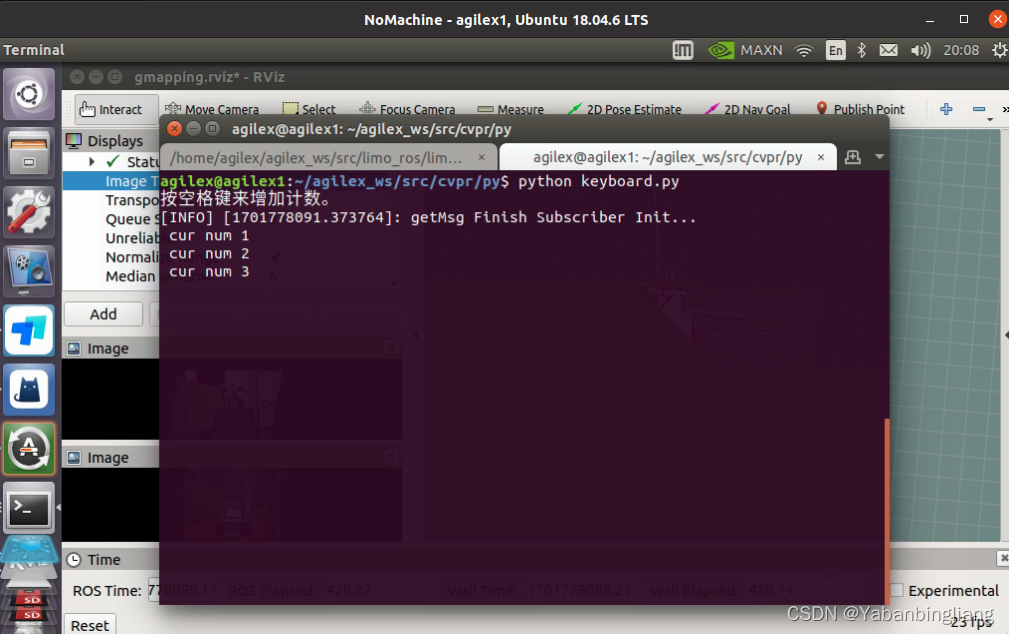

启动后实现的效果如图

多个机器人通信和控制

1. ros的多机通信

我这里的实验硬件配置是三台Agilex的Limo小车(Ubuntu 18.04),一台笔记本(Ubuntu 20.04)和一个无线路由器,四台电脑同时在一个局域网。

ros多机通信参考是赵虚左老师的博客 link

这部分需要做的就是设置好主机和从机的环境变量以及IP,通过修改 .bashrc 和 /etc/hosts 两个文件实现

1.1 主机

在 .bashrc 中添加如下两行, 192.168.1.101 是笔记本(主机)在局域网内的ip地址,通过 ifconfig 命令可以查看到,没有这个命令的话安装一下就好了。

export ROS_MASTER_URI=http://192.168.1.101:11311

export ROS_HOSTNAME=192.168.1.101

第一行的意思是将master设置在主机上(四台电脑都是ros1,是有ros master的,ros2就没有master了)。第二行是把 ROS_HOSTNAME 设置为本机的ip地址就好了。

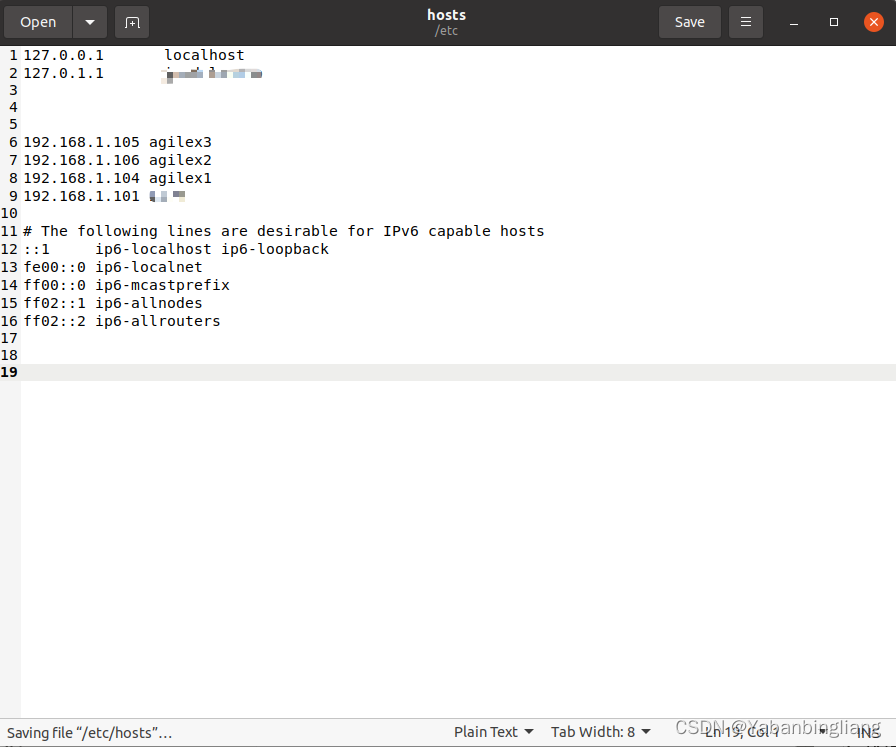

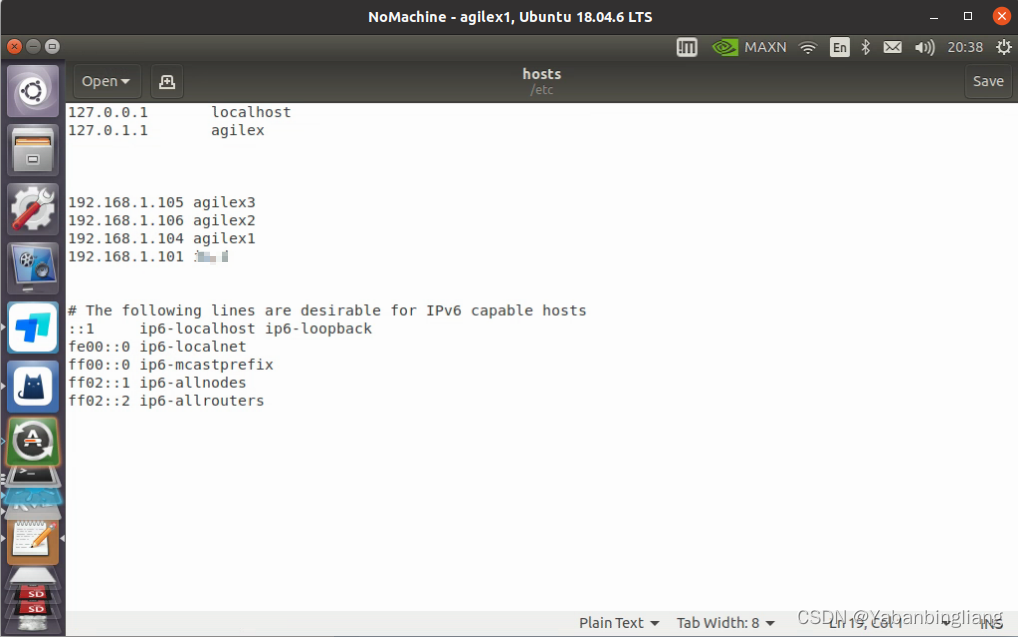

在 /etc/hosts 中添加如下几行

192.168.1.105 agilex3

192.168.1.106 agilex2

192.168.1.104 agilex1

192.168.1.101 xxxx(主机的用户名,就是终端 xx@xxx @前面那个)

这几行的意思就是把局域网内若干主机的ip地址和用户名对应起来,添加后如图。

1.2 从机(以limo1为例)

在 .bashrc 中添加如下两行, 192.168.1.101 是笔记本(主机)在局域网内的ip地址,192.168.1.104 是agilex1(从机)在局域网内的ip地址。

export ROS_MASTER_URI=http://192.168.1.101:11311

export ROS_HOSTNAME=192.168.1.104

在 /etc/hosts 中添加如下几行

192.168.1.105 agilex3

192.168.1.106 agilex2

192.168.1.104 agilex1

192.168.1.101 xxxx

其实无论是主机还是从机,/etc/hosts 中需要添加的内容都是一样的,就是把局域网内若干主机的ip地址和用户名对应起来,添加后如图。

1.3 验证

做好上述准备工作之后,就要检验一下子了。验证的方式也很简单,笔记本(master)启动roscore,如果每个从机都能看到话题,那就说明ros的多机通信是没有问题了。当然也可以用稍微复杂的方式来验证,分别在三台不同的主机上启动如下三行命令移动小乌龟了!

roscore

rosrun turtlesim turtlesim_node

rosrun turtlesim turtle_teleop_key

2. 话题(topic)重映射

话题(topic)重映射指的是将ros中节点的某个话题重新修改一个名字就叫作话题重映射。为什么需要话题重映射呢?因为如果每个机器人都启动完全相同的节点,那么话题肯定会重复的,一定会发生冲突的,无法实现给不同的机器人不同的命令,所以话题重映射是多机协同必须的一步。

在获取单个机器人数据的时候,为了方便启动机器人的底盘、gmapping和相机,我把 roslaunch 启动都放到一个sh脚本中了。

启动文件 single.sh

gnome-terminal --window -e "roslaunch limo_bringup limo_start.launch pub_odom_tf:=false" & sleep 7s

gnome-terminal --window -e "roslaunch limo_bringup limo_gmapping.launch" & sleep 3s

gnome-terminal --window -e "roslaunch astra_camera dabai_u3.launch"

而在多机协同的时候,每一个roslaunch 的启动都需要修改里面节点的话题,下面来详细说明每一个launch文件是怎么修改的。

2.1 limo_start.launch → my_limo_start.launch

原limo_start.launch如下

<?xml version="1.0"?>

<launch>

<!-- ttyTHS1 for NVIDIA nano serial port-->

<!-- ttyUSB0 for IPC USB serial port -->

<arg name="port_name" default="ttyTHS1" />

<arg name="use_mcnamu" default="false" />

<arg name="pub_odom_tf" default="" />

<include file="$(find limo_base)/launch/limo_base.launch">

<arg name="port_name" default="$(arg port_name)" />

<arg name="use_mcnamu" default="$(arg use_mcnamu)" />

<arg name="pub_odom_tf" default="$(arg pub_odom_tf)" />

</include>

<include file="$(find ydlidar_ros)/launch/X2L.launch" />

<node pkg="tf" type="static_transform_publisher" name="base_link_to_camera_link" args="0.105 0 0.1 0.0 0.0 0.0 /base_link /camera_link 10" />

<node pkg="tf" type="static_transform_publisher" name="base_link_to_imu_link" args="0.0 0.0 0.0 0.0 0.0 0.0 /base_link /imu_link 10" />

<node pkg="tf" type="static_transform_publisher" name="base_link_to_laser_link" args="0.105 0.0 0.08 0.0 0.0 0.0 /base_link /laser_link 10" />

</launch>

简单分析以下,这个launch文件包含了 limo_base.launch X2L.launch 并作了三个静态的坐标变化。这三个部分就都需要作相应的修改,my_limo_start.launch具体如下:

<?xml version="1.0"?>

<launch>

<!-- ttyTHS1 for NVIDIA nano serial port-->

<!-- ttyUSB0 for IPC USB serial port -->

<arg name="port_name" default="ttyTHS1" />

<arg name="use_mcnamu" default="false" />

<arg name="pub_odom_tf" default="" />

<arg name="rb_name" default="limo_1" />

<arg name="odom_frame" default="odom" />

<arg name="base_frame" default="base_link" />

<!-- <group ns="$(arg second_tb3)/map_merge"> -->

<group ns="$(arg rb_name)">

<node name="limo_base_node" pkg="limo_base" type="limo_base_node" output="screen" >

<param name="port_name" value="$(arg port_name)" />

<param name="odom_frame" value="$(arg odom_frame)" />

<param name="base_frame" value="$(arg base_frame)" />

<param name="use_mcnamu" value="$(arg use_mcnamu)" />

<param name="pub_odom_tf" value="$(arg pub_odom_tf)" />

<!-- <param name="rb_name" value="$(arg rb_name)" /> -->

<remap from="/odom" to="/$(arg rb_name)/odom" />

<remap from="/cmd_vel" to="/$(arg rb_name)/cmd_vel" />

<remap from="/imu" to="/$(arg rb_name)/imu" />

<remap from="/limo_status" to="/$(arg rb_name)/limo_status" />

</node>

<include file="$(find ydlidar_ros)/launch/my_X2L.launch" >

<arg name="rb_name" value="$(arg rb_name)" />

</include>

<node pkg="tf" type="static_transform_publisher" name="base_link_to_camera_link" args="0.105 0 0.1 0.0 0.0 0.0 $(arg rb_name)/base_link $(arg rb_name)/camera_link 10" />

<node pkg="tf" type="static_transform_publisher" name="base_link_to_imu_link" args="0.0 0.0 0.0 0.0 0.0 0.0 $(arg rb_name)/base_link $(arg rb_name)/imu_link 10" />

<node pkg="tf" type="static_transform_publisher" name="base_link_to_laser_link" args="0.105 0.0 0.08 0.0 0.0 0.0 $(arg rb_name)/base_link $(arg rb_name)/laser_link 10" />

</group>

</launch>

<group ns="$(arg rb_name)">标签是添加命名空间,这样这个标签内启动的所有的节点名字签名都会有rb_name这个前缀

<remap from="/odom" to="/$(arg rb_name)/odom" /> 是将节点的话题重新映射,注意不要忽略to里面第一个 \ ,否则会出现重复的前缀。(这部分涉及到的知识点好像是ros中的全局命名和私有命令,有点忘记了)

其它的话题也是同理

关于静态坐标变化也是同样的添加若干个变量前缀即可

最后是 my_X2L.launch 中主要是添加了一个变量,然后通过传递修改一下 frame_id , 别的就没有了

<launch>

<!-- <arg name="rb_name" default="limo_3" /> -->

<arg name="rb_name"/>

<node name="ydlidar_node" pkg="ydlidar_ros" type="ydlidar_node" output="screen" respawn="false" >

<param name="port" type="string" value="/dev/ydlidar"/>

<param name="baudrate" type="int" value="115200"/>

<param name="frame_id" type="string" value="/$(arg rb_name)/laser_link"/>

<param name="resolution_fixed" type="bool" value="true"/>

<param name="auto_reconnect" type="bool" value="true"/>

<param name="reversion" type="bool" value="true"/>

<param name="angle_min" type="double" value="-90" />

<param name="angle_max" type="double" value="90" />

<param name="range_min" type="double" value="0.1" />

<param name="range_max" type="double" value="12.0" />

<param name="ignore_array" type="string" value="" />

<param name="frequency" type="double" value="8"/>

<param name="samp_rate" type="int" value="3"/>

<param name="isSingleChannel" type="bool" value="true"/>

</node>

</launch>

2.2 limo_gmapping.launch →my_limo_gmapping.launch

原limo_gmapping.launch如下

<?xml version="1.0"?>

<launch>

<!-- use robot pose ekf to provide odometry-->

<node pkg="robot_pose_ekf" name="robot_pose_ekf" type="robot_pose_ekf">

<param name="output_frame" value="odom" />

<param name="base_footprint_frame" value="base_link"/>

<!--<remap from="imu_data" to="imu" />-->

</node>

<node pkg="gmapping" type="slam_gmapping" name="slam_gmapping" output="screen">

<param name="map_update_interval" value="5.0"/>

<param name="maxUrange" value="16.0"/>

<param name="sigma" value="0.05"/>

<param name="kernelSize" value="1"/>

<param name="lstep" value="0.05"/>

<param name="astep" value="0.05"/>

<param name="iterations" value="5"/>

<param name="lsigma" value="0.075"/>

<param name="ogain" value="3.0"/>

<param name="lskip" value="0"/>

<param name="srr" value="0.1"/>

<param name="srt" value="0.2"/>

<param name="str" value="0.1"/>

<param name="stt" value="0.2"/>

<param name="linearUpdate" value="1.0"/>

<param name="angularUpdate" value="0.5"/>

<param name="temporalUpdate" value="3.0"/>

<param name="resampleThreshold" value="0.5"/>

<param name="particles" value="30"/>

<param name="xmin" value="-50.0"/>

<param name="ymin" value="-50.0"/>

<param name="xmax" value="50.0"/>

<param name="ymax" value="50.0"/>

<param name="delta" value="0.05"/>

<param name="llsamplerange" value="0.01"/>

<param name="llsamplestep" value="0.01"/>

<param name="lasamplerange" value="0.005"/>

<param name="lasamplestep" value="0.005"/>

</node>

<node pkg="rviz" type="rviz" name="rviz" args="-d $(find limo_bringup)/rviz/gmapping.rviz" />

</launch>

修改后的my_limo_gmapping.launch如下

<?xml version="1.0"?>

<launch>

<arg name="rb_name" default="limo_1" />

<!-- <arg name="set_base_frame" default="$(arg rb_name)/base_link"/>

<arg name="set_odom_frame" default="$(arg rb_name)/odom"/>

<arg name="set_map_frame" default="$(arg rb_name)/map"/> -->

<!-- use robot pose ekf to provide odometry-->

<group ns="$(arg rb_name)">

<node pkg="robot_pose_ekf" name="robot_pose_ekf" type="robot_pose_ekf">

<param name="output_frame" value="/$(arg rb_name)/odom" />

<param name="base_footprint_frame" value="/$(arg rb_name)/base_link"/>

<!--<remap from="imu_data" to="imu" />-->

</node>

<node pkg="gmapping" type="slam_gmapping" name="slam_gmapping" output="screen">

<param name="base_frame" value="$(arg rb_name)/base_link"/>

<param name="odom_frame" value="$(arg rb_name)/odom"/>

<param name="map_frame" value="$(arg rb_name)/map"/>

<remap from="scan" to="/$(arg rb_name)/scan"/>

<param name="map_update_interval" value="5.0"/>

<param name="maxUrange" value="16.0"/>

<param name="sigma" value="0.05"/>

<param name="kernelSize" value="1"/>

<param name="lstep" value="0.05"/>

<param name="astep" value="0.05"/>

<param name="iterations" value="5"/>

<param name="lsigma" value="0.075"/>

<param name="ogain" value="3.0"/>

<param name="lskip" value="0"/>

<param name="srr" value="0.1"/>

<param name="srt" value="0.2"/>

<param name="str" value="0.1"/>

<param name="stt" value="0.2"/>

<param name="linearUpdate" value="1.0"/>

<param name="angularUpdate" value="0.5"/>

<param name="temporalUpdate" value="3.0"/>

<param name="resampleThreshold" value="0.5"/>

<param name="particles" value="30"/>

<param name="xmin" value="-50.0"/>

<param name="ymin" value="-50.0"/>

<param name="xmax" value="50.0"/>

<param name="ymax" value="50.0"/>

<param name="delta" value="0.05"/>

<param name="llsamplerange" value="0.01"/>

<param name="llsamplestep" value="0.01"/>

<param name="lasamplerange" value="0.005"/>

<param name="lasamplestep" value="0.005"/>

</node>

</group>

<node pkg="rviz" type="rviz" name="rviz_1" args="-d $(find limo_bringup)/rviz/multi_gmapping.rviz" />

</launch>

主要修改就是给一些参考系加个前缀,还有就是话题重映射,修改的内容如下

<param name="output_frame" value="/$(arg rb_name)/odom" />

<param name="base_footprint_frame" value="/$(arg rb_name)/base_link"/>

<param name="base_frame" value="$(arg rb_name)/base_link"/>

<param name="odom_frame" value="$(arg rb_name)/odom"/>

<param name="map_frame" value="$(arg rb_name)/map"/>

<remap from="scan" to="/$(arg rb_name)/scan"/>

2.3 dabai_u3.launch

这部分最省事了,因为小车来的时候本身安装好了相机的驱动,然后里面考虑到了相机前缀的问题了,所以就改个名字就好了,哈哈哈哈和。

<arg name="camera" default="camera" />

启动文件 multi.sh

为了能够保留原来所有single的功能,修改的launch文件基本上都是复制个副本,然后在副本里面进行修改,所以最后启动的sh就变成下面这个样子了

gnome-terminal --window -e "roslaunch limo_bringup my_limo_start.launch pub_odom_tf:=false" & sleep 7s

gnome-terminal --window -e "roslaunch limo_bringup my_limo_gmapping.launch" & sleep 3s

gnome-terminal --window -e "roslaunch astra_camera dabai_u3.launch"

2.4 验证

完成了上述工作之后,也需要做个简单的检验。验证的方式和之前也是类似的,在笔记本上启动 roscore,三台小车都启动 multi.sh ,然后各个小车都有独立的话题和节点就可以了,还可以通过 rostopic pub 小车cmd 看看能不能单独控制小车。

好了,到此为止,终于算是完成了ros多机协同的基本配置了,接下来就是写代码获取机器人的数据并控制了~

3. 编程

以下所有代码均在笔记本(python3)的环境下完成的

multi_robot.py

#!/usr/bin/env python3

import rospy

import tf

import math

import numpy as np

import cv2

import os

# from cv2 import CvBridge

from cv_bridge import CvBridge

from sensor_msgs.msg import Image

from geometry_msgs.msg import Twist

from geometry_msgs.msg import TwistStamped

from nav_msgs.msg import Odometry

from nav_msgs.msg import OccupancyGrid

from cvpr.msg import control

from pynput import keyboard

# from cvpr.msg import control

# from help import getMsg

class Robot():

def __init__(self, rb_name="limo_1", check_topic=False):

self.rb_name = rb_name

self.rgb_count = 0

self.depth_count = 0

self.rgb = None

self.depth = None

self.odom = None

self.pos = None

self.orientation = None

self.map =None

self.rgb_init = False

self.depth_init = False

self.odom_init = False

self.map_init = False

self.moveMsg = Twist()

self.moveMsg.linear.x = 0

self.moveMsg.linear.y = 0

self.moveMsg.linear.z = 0

self.moveMsg.angular.x = 0

self.moveMsg.angular.y = 0

self.moveMsg.angular.z = 0

self.curYaw = 0

self.cv_bridge = CvBridge()

camera_prefix = "camera" + self.rb_name[-1]

# print(camera_prefix)

self.rgb_sub = rospy.Subscriber(camera_prefix + "/rgb/image_raw", Image, self.rgb_callback)

self.depth_sub = rospy.Subscriber(camera_prefix + "/depth/image_raw", Image, self.depth_callback)

self.odom_sub = rospy.Subscriber(self.rb_name + "/odom", Odometry, self.odom_callback)

self.map_sub = rospy.Subscriber(self.rb_name + "/map", OccupancyGrid, self.map_callback)

self.type_sub = rospy.Subscriber(self.rb_name + "/type", control, self.type_rec_callback)

self.type_pub = rospy.Publisher(self.rb_name + '/cmd_vel', Twist, queue_size = 1)

if check_topic:

rospy.loginfo("%s Start Subscriber Init...", rb_name)

while not self.rgb_init:

continue

while not self.depth_init:

continue

while not self.odom_init:

continue

while not self.map_init:

continue

rospy.loginfo("%s Finish Subscriber Init...", rb_name)

else:

rospy.loginfo("%s Jump Subscriber Init...", rb_name)

def rgb_callback(self, msg):

self.rgb_init = True

tmp = msg

# self.rgb = msg

# self.rgb_count += 1

# dir_name = "rgb_" + self.rb_name

# if not os.path.exists(dir_name):

# os.makedirs(dir_name)

# way1

cv_image = self.cv_bridge.imgmsg_to_cv2(tmp, "bgr8")

self.rgb = np.array(cv_image)

# way2

# cv_img = np.frombuffer(tmp.data, dtype=np.uint8).reshape(tmp.height, tmp.width, -1)

# cv_img = cv2.cvtColor(cv_img, cv2.COLOR_RGB2BGR)

# self.rgb = np.array(cv_img)

# print(self.rgb.shape)

# way1

# np.save(dir_name + "/rgb_data_{}.npy".format(self.rgb_count), self.rgb)

# way2

# cv2.imwrite(dir_name + "/rgb_data_{}.jpg".format(self.rgb_count), self.rgb)

# rospy.loginfo("%s rgb count: %d", self.rb_name, self.rgb_count)

def depth_callback(self, msg):

self.depth_init = True

tmp = msg

self.depth_count += 1

# self.depth = msg

cv_image = self.cv_bridge.imgmsg_to_cv2(tmp, desired_encoding="32FC1")

self.depth = np.array(cv_image)

# dir_name = "depth_" + self.rb_name

# if not os.path.exists(dir_name):

# os.makedirs(dir_name)

# print(self.depth.shape)

# cv2.imwrite(dir_name + "/depth_data_{}.jpg".format(self.depth_count), self.depth)

# self.depth_count += 1

# rospy.loginfo("%s depth count: %d", self.rb_name, self.depth_count)

def odom_callback(self, msg):

self.odom_init = True

self.odom = msg

self.pos = [self.odom.pose.pose.position.x, self.odom.pose.pose.position.y, self.odom.pose.pose.position.z]

self.orientation = [self.odom.pose.pose.orientation.x, self.odom.pose.pose.orientation.y,

self.odom.pose.pose.orientation.z, self.odom.pose.pose.orientation.w]

# print(self.orientation)

# print(self.pos)

(_, _, yaw) = tf.transformations.euler_from_quaternion(self.orientation)

if yaw < 0:

yaw += 2 * math.pi

self.curYaw = yaw

def map_callback(self, msg):

self.map_init = True

tmp = msg

self.map = np.array(tmp.data).reshape(tmp.info.width, tmp.info.height)

# print(self.map.shape)

# print(self.map)

def type_rec_callback(self, data):

# rospy.loginfo("Received control message: %d", data.type)

if data.type == 8:

self.moveMsg.linear.x = 0

self.moveMsg.linear.y = 0

self.moveMsg.linear.z = 0

self.moveMsg.angular.x = 0

self.moveMsg.angular.y = 0

self.moveMsg.angular.z = 0

self.moveMsg.linear.x = 0.1

rospy.loginfo("%s Received control message: %d forward", self.rb_name, data.type)

elif data.type == 2:

self.moveMsg.linear.x = 0

self.moveMsg.linear.y = 0

self.moveMsg.linear.z = 0

self.moveMsg.angular.x = 0

self.moveMsg.angular.y = 0

self.moveMsg.angular.z = 0

self.moveMsg.linear.x = -0.1

rospy.loginfo("%s Received control message: %d backward", self.rb_name, data.type)

elif data.type == 4:

self.moveMsg.linear.x = 0

self.moveMsg.linear.y = 0

self.moveMsg.linear.z = 0

self.moveMsg.angular.x = 0

self.moveMsg.angular.y = 0

self.moveMsg.angular.z = 0

self.moveMsg.angular.z = 0.3

rospy.loginfo("%s Received control message: %d left turn", self.rb_name, data.type)

elif data.type == 6:

self.moveMsg.linear.x = 0

self.moveMsg.linear.y = 0

self.moveMsg.linear.z = 0

self.moveMsg.angular.x = 0

self.moveMsg.angular.y = 0

self.moveMsg.angular.z = 0

self.moveMsg.angular.z = -0.3

rospy.loginfo("%s Received control message: %d right turn", self.rb_name, data.type)

else:

rospy.loginfo("%s Received control message: %d STOP", self.rb_name, data.type)

self.moveMsg.linear.x = 0

self.moveMsg.angular.z = 0

self.type_pub.publish(self.moveMsg)

def getData(self):

return [self.pos, self.orientation, self.rgb, self.depth, self.map]

def getAngle(self):

return math.degrees(self.curYaw)

class MultiRobot():

def __init__(self, all_check_topic=True):

self.rb3 = Robot(rb_name="limo_3", check_topic=all_check_topic)

self.rb2 = Robot(rb_name="limo_2", check_topic=all_check_topic)

self.rb1 = Robot(rb_name="limo_1", check_topic=all_check_topic)

self.data_count = 0

self.merge_map = None

self.merge_map_init = False

self.merge_map_sub = rospy.Subscriber("/map", OccupancyGrid, self.merge_map_callback)

def merge_map_callback(self, msg):

self.merge_map_init = True

tmp ~~= msg

self.merge_map = np.array(tmp.data).reshape(tmp.info.width, tmp.info.height)~~

def onPress(self, key):

# print(key)

# return [self.pos, self.orientation, self.rgb, self.depth, self.map]

dir_name = "data"

if not os.path.exists(dir_name):

os.makedirs(dir_name)

rb1_dir = dir_name + "/" + self.rb1.rb_name

if not os.path.exists(rb1_dir):

os.makedirs(rb1_dir)

rb2_dir = dir_name + "/" + self.rb2.rb_name

if not os.path.exists(rb2_dir):

os.makedirs(rb2_dir)

rb3_dir = dir_name + "/" + self.rb3.rb_name

if not os.path.exists(rb3_dir):

os.makedirs(rb3_dir)

if key == keyboard.Key.space:

self.data_count += 1

print(self.data_count)

data_rb1 = self.rb1.getData()

data_rb2 = self.rb2.getData()

data_rb3 = self.rb3.getData()

# print(data_rb1[0])

# np.save(rb1_dir + "/pos_{}.npy".format(self.data_count), [0, 0, 0])

np.save(rb1_dir + "/pos_{}.npy".format(self.data_count), data_rb1[0])

np.save(rb1_dir + "/orientation_{}.npy".format(self.data_count), data_rb1[1])

np.save(rb1_dir + "/map_data_{}.npy".format(self.data_count), data_rb1[4])

np.save(rb2_dir + "/pos_{}.npy".format(self.data_count), data_rb2[0])

np.save(rb2_dir + "/orientation_{}.npy".format(self.data_count), data_rb2[1])

np.save(rb2_dir + "/map_data_{}.npy".format(self.data_count), data_rb2[4])

np.save(rb3_dir + "/pos_{}.npy".format(self.data_count), data_rb3[0])

np.save(rb3_dir + "/orientation_{}.npy".format(self.data_count), data_rb3[1])

np.save(rb3_dir + "/map_data_{}.npy".format(self.data_count), data_rb3[4])

else:

print("wait")

if __name__ == '__main__':

try:

rospy.init_node('multi_robot', anonymous=True)

multi_robot = MultiRobot()

# pos, orientation, rgb, depth, map_data = multi_robot.rb2.getData()

# print(pos) #list

# print(orientation) #list

# print(rgb.shape) #numpy

# print(depth.shape) #numpy

# print(map_data.shape) #numpy

rospy.spin()

except rospy.ROSInterruptException:

pass

其实就是定义了 Robot 和 MultiRobot 两个类,在Robot 里面实现简单的话题数据获取和话题控制,然后在MultiRobot 里面实例化三个Robot ,Robot的具体实现细节和单个机器人几乎每什么差,就是注意一下各种命名。

然后有个自定义消息 control ,这个也很简单,就定义了一个八字节的变量,因为师兄想要的就是能前后左右动就可以了,比较简单。

int8 type

里面有很多测试的代码注释了,还有其他的一些相同功能的替代方法,懒得整理了,各位观众老爷看个乐子,不要喷俺

其中有一行 self.merge_map_sub = rospy.Subscriber("/map", OccupancyGrid, self.merge_map_callback) ,这块是师兄一开始想用multi_map_merge,我也跑通了。但是后来吧,我用的无初始点的方式测试了好多遍,效果不是很显示,最重要的是师兄发现这个功能包给不了他想要的东西(简直是白弄了阿qaq),也就拉倒了。

验证

然后写完代码,当然是要验证拉~

这部分就是通过敲击一次空格,然后能够保存三个机器人的odom、rgb、depth和map这么几组信息就说明是没有问题了~

multi_keyboard.py

#!/usr/bin/env python3

# -*-coding:utf-8-*

import rospy

from pynput import keyboard

from multi_robot import MultiRobot

count = 0

def on_press(key):

global count

if key == keyboard.Key.space:

count += 1

print(count)

if __name__ == '__main__':

try:

rospy.init_node('keyboard_counter', anonymous=True)

rospy.loginfo("按空格键来增加计数。")

multi_robot = MultiRobot(all_check_topic=True)

# 收听键盘事件

# listener = keyboard.Listener(on_press=on_press)

listener = keyboard.Listener(on_press=multi_robot.onPress)

listener.start()

# 保持节点运行直到被关闭

rospy.spin()

except rospy.ROSInterruptException:

pass

还有就是需要验证机器人确实能够被独立的控制,这里我是让三个机器人作左转和右转,然后转的角速度不同,这样就能验证三个机器人是不是同时被独立控制了。

multi_control.py

import rospy

from multi_robot import MultiRobot

from cvpr.msg import control

from pynput import keyboard

if __name__ == "__main__":

rospy.init_node('test_msg', anonymous=True)

multi_limo = MultiRobot(all_check_topic=True)

# return [self.pos, self.orientation, self.rgb, self.depth, self.map]

[rb1_pos, rb1_orientation, rb1_rgb, rb1_depth, rb1_map] = multi_limo.rb1.getData()

[rb2_pos, rb2_orientation, rb2_rgb, rb2_depth, rb2_map] = multi_limo.rb2.getData()

[rb3_pos, rb3_orientation, rb3_rgb, rb3_depth, rb3_map] = multi_limo.rb3.getData()

n = 100

multi_limo.rb1.rgb_save = True

multi_limo.rb2.rgb_save = True

multi_limo.rb3.rgb_save = True

multi_limo.rb1.depth_save = True

multi_limo.rb2.depth_save = True

multi_limo.rb3.depth_save = True

while not rospy.is_shutdown():

multi_limo.rb1.move(n=1, type=6, angular=0.3)

multi_limo.rb2.move(n=1, type=4, angular=0.5)

multi_limo.rb3.move(n=1, type=6, angular=1.0)

rospy.sleep(1)

if n <= 0:

multi_limo.rb1.rgb_save = False

n -= 1

# print(rb1_pos)

# print(rb2_orientation)

# print(rb3_map)

rospy.spin()

至此,为了能够完成多机协同需要做的多机通信和控制就全部完成了,如果有不合理或者不合适的地方,欢迎批评指正,希望我的学习过程对你能够有所帮助~

900

900

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?