1、主要参考

(1)最主要参考,官方的blog

Make fragments — Open3D 0.16.0 documentation

(2)操作流程参考

(3)blog

Open3d利用彩色图和深度图生成点云进行室内三维重建_两车面包人的博客-CSDN博客_生成的点云没有颜色

(4)数据集来源

ICL-NUIM RGB-D Benchmark Dataset

2、使用open3d提供的官方例子重建三维

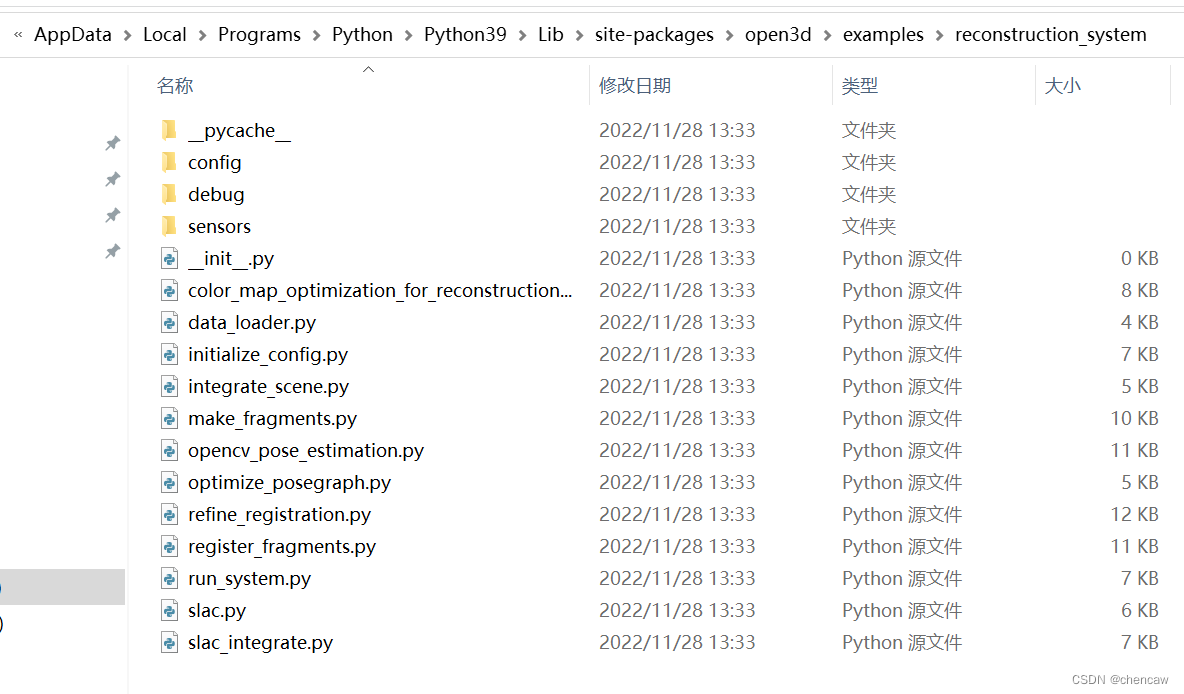

2.1进入如下目录

C:\Users\Administrator\AppData\Local\Programs\Python\Python39\Lib\site-packages\open3d\examples\reconstruction_system

2.2运行如下指令

python run_system.py --default_dataset lounge --make --register --refine --integrate

或者

python run_system.py --default_dataset bedroom --make --register --refine --integrate

或者

python run_system.py --default_dataset jack_jack --make --register --refine --integrate(2)其中bedroom可以手动下载,地址

https://github.com/isl-org/open3d_downloads/releases/download/20220301-data/bedroom01.zip

2.3实际操作注意

(3)注意,操作方法和下面的相同

注意:(1)先按照4.2.1步骤修改好json文件

然后操作以下四步即可!!!:

python run_system.py --config config/tutorial_chen_iclnuim.json --make python run_system.py --config config/tutorial_chen_iclnuim.json --register python run_system.py --config config/tutorial_chen_iclnuim.json --refine python run_system.py --config config/tutorial_chen_iclnuim.json --integrate

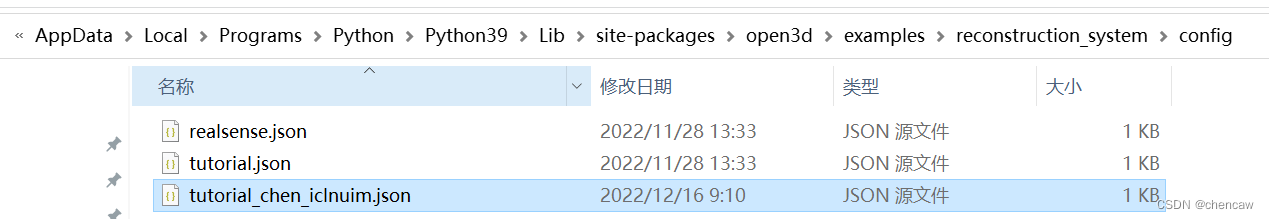

该tutorial_chen_iclnuim.json的内容如下:

{

"name": "Open3D reconstruction tutorial http://open3d.org/docs/release/tutorial/reconstruction_system/system_overview.html",

"path_dataset": "D:/RGBD_CAMERA/bedroom01",

"path_intrinsic": "",

"depth_max": 3.0,

"voxel_size": 0.05,

"depth_diff_max": 0.07,

"preference_loop_closure_odometry": 0.1,

"preference_loop_closure_registration": 5.0,

"tsdf_cubic_size": 3.0,

"icp_method": "color",

"global_registration": "ransac",

"python_multi_threading": true

}

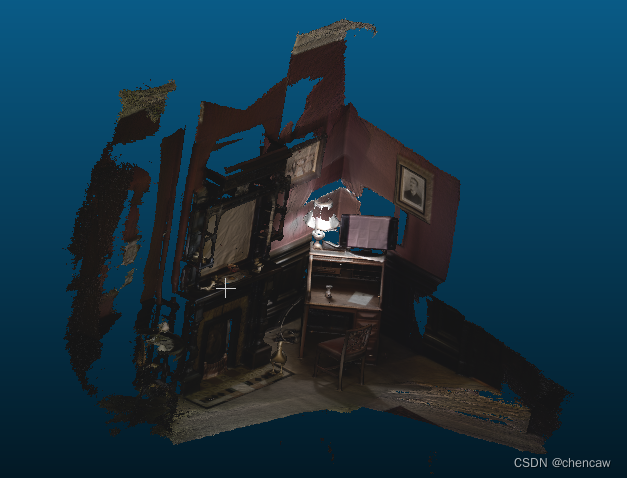

(4)拼接结果

2.4试试自己的数据

(1)使用前面教程的方法获取自己的数据集

(17)深度点云学习--python安装openni打开奥比中光RGBD_chencaw的博客-CSDN博客

(2)具体保存例子如下

---------------------------------------------

-------下面的步骤都是OK的,就是使用该数据集运算过程中出了问题!

--------------------------------------------------------------------

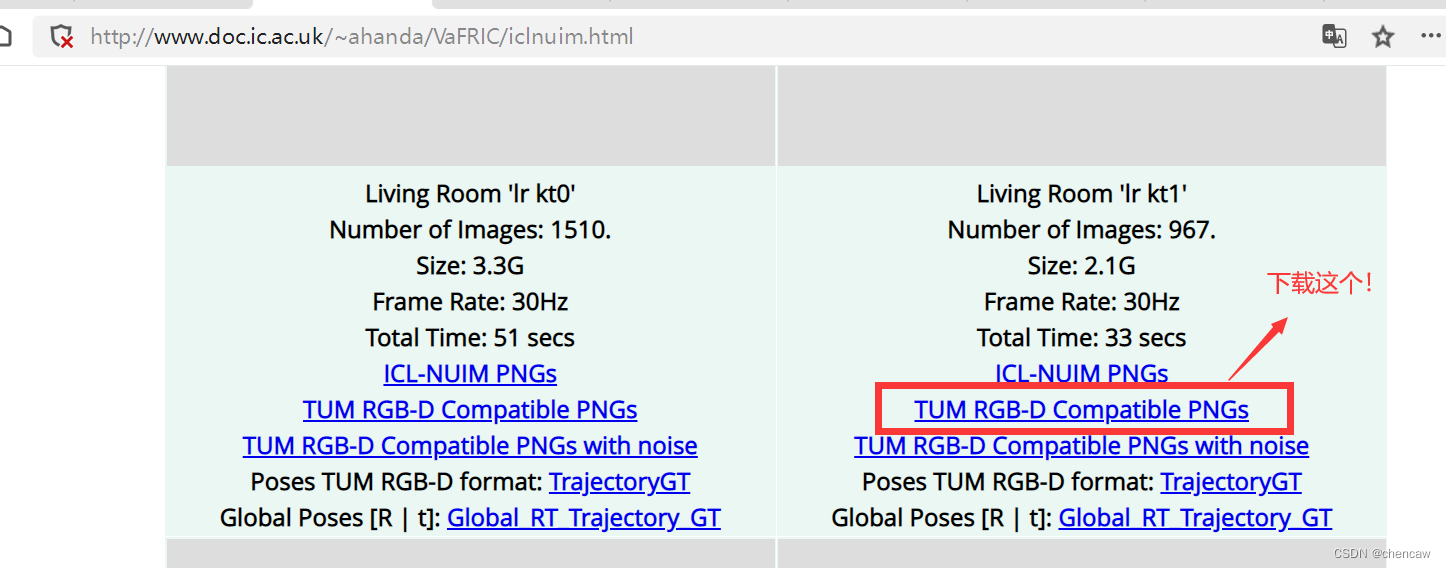

3、其它详细操作--下载指定数据集

3.1 下载数据

(1)网址

ICL-NUIM RGB-D Benchmark Dataset

(2)下载的位置

(3)下载后解压文件

(3)下载后解压文件

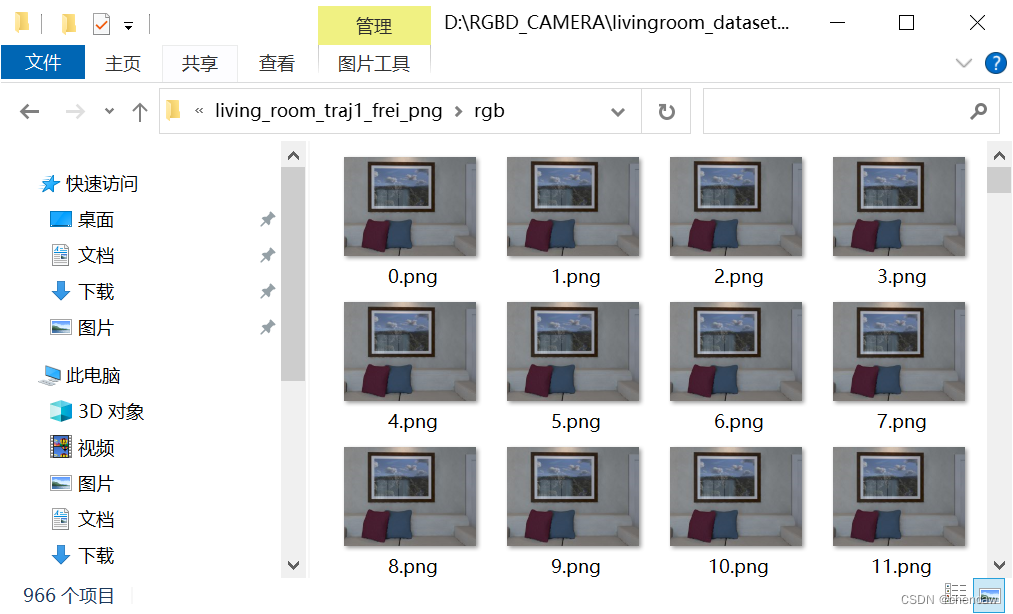

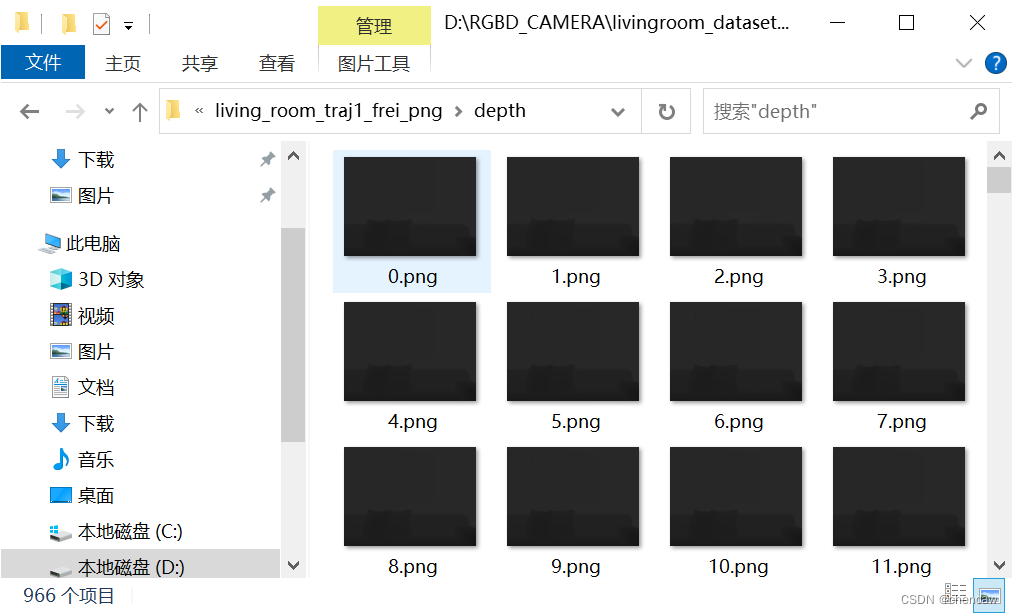

1)发现主要由2个文件夹构成

2)总文件数996

3)RGB图的内容如下

4)深度图的内容如下

3.2相机参数

(1)网址

ICL-NUIM RGB-D Benchmark Dataset

(2)参数

| 481.20, | 0, | 319.50 |

| 0, | -480.00, | 239.50 |

| 0, | 0, | 1 |

4、基于open3d的RGBD图三维重建

4.1到你安装的open3d的目录下

(1)到安装目录的examples\reconstruction_system文件下

我的是默认安装,位置如下:

C:\Users\Administrator\AppData\Local\Programs\Python\Python39\Lib\site-packages\open3d\examples\reconstruction_system

4.2Make fragments(创建片段)

注意:(1)先按照3.2.1步骤修改好json文件

然后操作以下四步即可!!!:

python run_system.py --config config/tutorial_chen_iclnuim.json --make python run_system.py --config config/tutorial_chen_iclnuim.json --register python run_system.py --config config/tutorial_chen_iclnuim.json --refine python run_system.py --config config/tutorial_chen_iclnuim.json --integrate

4.2.1操作

场景重建系统的第一步是从短RGBD序列中创建片段。

(1)输入参数

该脚本使用python run_system.py [config]——make运行。在[config]中,["path_dataset"]应该有image和depth子文件夹,分别存储彩色图像和深度图像。我们假设彩色图像和深度图像是同步和配准的。在[config]中,可选参数["path_intrinsic"]指定了存储相机固有矩阵的json文件的路径(详情请参阅Read camera intrinsic)。如果没有给出,则使用PrimeSense工厂设置。

(2)具体操作

复制修改config下面的tutorial.json为你的新的config

(3)具体内容修改了数据集的路径

{

"name": "Open3D reconstruction tutorial http://open3d.org/docs/release/tutorial/reconstruction_system/system_overview.html",

"path_dataset": "D:/RGBD_CAMERA/livingroom_dataset/living_room_traj1_frei_png",

"path_intrinsic": "",

"depth_max": 3.0,

"voxel_size": 0.05,

"depth_diff_max": 0.07,

"preference_loop_closure_odometry": 0.1,

"preference_loop_closure_registration": 5.0,

"tsdf_cubic_size": 3.0,

"icp_method": "color",

"global_registration": "ransac",

"python_multi_threading": true

}

(4)运行如下命令

python run_system.py --config config/tutorial_chen_iclnuim.json ——make4.2.2 涉及的主要函数

(1)Register RGBD image pairs

# examples/python/reconstruction_system/make_fragments.py

def register_one_rgbd_pair(s, t, color_files, depth_files, intrinsic,

with_opencv, config):

source_rgbd_image = read_rgbd_image(color_files[s], depth_files[s], True,

config)

target_rgbd_image = read_rgbd_image(color_files[t], depth_files[t], True,

config)

option = o3d.pipelines.odometry.OdometryOption()

option.depth_diff_max = config["depth_diff_max"]

if abs(s - t) != 1:

if with_opencv:

success_5pt, odo_init = pose_estimation(source_rgbd_image,

target_rgbd_image,

intrinsic, False)

if success_5pt:

[success, trans, info

] = o3d.pipelines.odometry.compute_rgbd_odometry(

source_rgbd_image, target_rgbd_image, intrinsic, odo_init,

o3d.pipelines.odometry.RGBDOdometryJacobianFromHybridTerm(),

option)

return [success, trans, info]

return [False, np.identity(4), np.identity(6)]

else:

odo_init = np.identity(4)

[success, trans, info] = o3d.pipelines.odometry.compute_rgbd_odometry(

source_rgbd_image, target_rgbd_image, intrinsic, odo_init,

o3d.pipelines.odometry.RGBDOdometryJacobianFromHybridTerm(), option)

return [success, trans, info]该函数读取一对RGBD图像,并将source_rgbd_image注册到target_rgbd_image。Open3D函数compute_rgbd_odometry被调用来对齐RGBD图像。对于相邻的RGBD图像,使用单位矩阵作为初始化。对于非相邻的RGBD图像,采用宽基线匹配作为初始化。特别地,函数pose_estimate计算OpenCV ORB特征来匹配宽基线图像上的稀疏特征,然后执行5点RANSAC来估计一个粗略的对齐,这被用作compute_rgbd_odometry的初始化。

(2)Multiway registration

# examples/python/reconstruction_system/make_fragments.py

def make_posegraph_for_fragment(path_dataset, sid, eid, color_files,

depth_files, fragment_id, n_fragments,

intrinsic, with_opencv, config):

o3d.utility.set_verbosity_level(o3d.utility.VerbosityLevel.Error)

pose_graph = o3d.pipelines.registration.PoseGraph()

trans_odometry = np.identity(4)

pose_graph.nodes.append(

o3d.pipelines.registration.PoseGraphNode(trans_odometry))

for s in range(sid, eid):

for t in range(s + 1, eid):

# odometry

if t == s + 1:

print(

"Fragment %03d / %03d :: RGBD matching between frame : %d and %d"

% (fragment_id, n_fragments - 1, s, t))

[success, trans,

info] = register_one_rgbd_pair(s, t, color_files, depth_files,

intrinsic, with_opencv, config)

trans_odometry = np.dot(trans, trans_odometry)

trans_odometry_inv = np.linalg.inv(trans_odometry)

pose_graph.nodes.append(

o3d.pipelines.registration.PoseGraphNode(

trans_odometry_inv))

pose_graph.edges.append(

o3d.pipelines.registration.PoseGraphEdge(s - sid,

t - sid,

trans,

info,

uncertain=False))

# keyframe loop closure

if s % config['n_keyframes_per_n_frame'] == 0 \

and t % config['n_keyframes_per_n_frame'] == 0:

print(

"Fragment %03d / %03d :: RGBD matching between frame : %d and %d"

% (fragment_id, n_fragments - 1, s, t))

[success, trans,

info] = register_one_rgbd_pair(s, t, color_files, depth_files,

intrinsic, with_opencv, config)

if success:

pose_graph.edges.append(

o3d.pipelines.registration.PoseGraphEdge(

s - sid, t - sid, trans, info, uncertain=True))

o3d.io.write_pose_graph(

join(path_dataset, config["template_fragment_posegraph"] % fragment_id),

pose_graph)此脚本使用Multi way注册中演示的技术。函数make_posegraph_for_fragment构建了一个用于该序列中所有RGBD图像的多路配准的姿态图。每个图节点代表一个RGBD图像及其姿态,它将几何图形转换为全局片段空间。为了提高效率,只使用关键帧。

一旦创建了一个姿态图,通过调用函数optimize_posegraph_for_fragment来执行多路注册

(3) optimize_posegraph_for_fragment

# examples/python/reconstruction_system/optimize_posegraph.py

def optimize_posegraph_for_fragment(path_dataset, fragment_id, config):

pose_graph_name = join(path_dataset,

config["template_fragment_posegraph"] % fragment_id)

pose_graph_optimized_name = join(

path_dataset,

config["template_fragment_posegraph_optimized"] % fragment_id)

run_posegraph_optimization(pose_graph_name, pose_graph_optimized_name,

max_correspondence_distance = config["depth_diff_max"],

preference_loop_closure = \

config["preference_loop_closure_odometry"])这个函数调用global_optimization来估计RGBD图像的姿态。

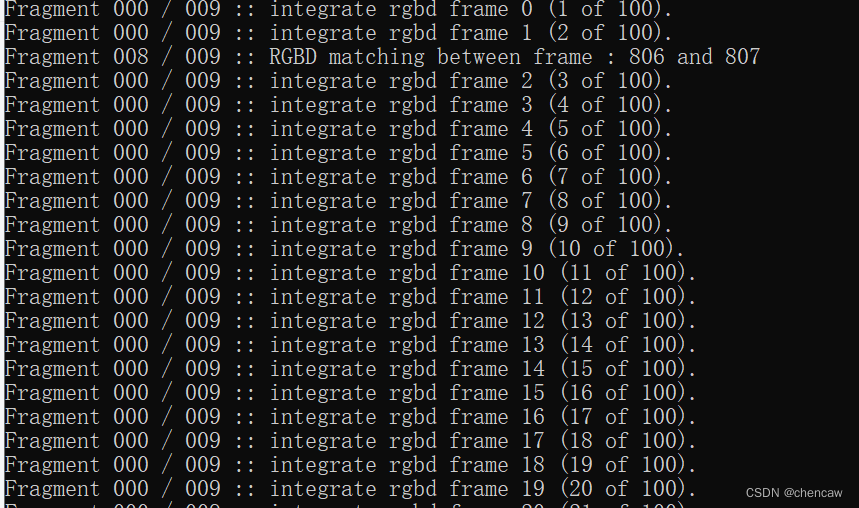

(4)Make a fragment

# examples/python/reconstruction_system/make_fragments.py

def integrate_rgb_frames_for_fragment(color_files, depth_files, fragment_id,

n_fragments, pose_graph_name, intrinsic,

config):

pose_graph = o3d.io.read_pose_graph(pose_graph_name)

volume = o3d.pipelines.integration.ScalableTSDFVolume(

voxel_length=config["tsdf_cubic_size"] / 512.0,

sdf_trunc=0.04,

color_type=o3d.pipelines.integration.TSDFVolumeColorType.RGB8)

for i in range(len(pose_graph.nodes)):

i_abs = fragment_id * config['n_frames_per_fragment'] + i

print(

"Fragment %03d / %03d :: integrate rgbd frame %d (%d of %d)." %

(fragment_id, n_fragments - 1, i_abs, i + 1, len(pose_graph.nodes)))

rgbd = read_rgbd_image(color_files[i_abs], depth_files[i_abs], False,

config)

pose = pose_graph.nodes[i].pose

volume.integrate(rgbd, intrinsic, np.linalg.inv(pose))

mesh = volume.extract_triangle_mesh()

mesh.compute_vertex_normals()

return mesh

一旦姿态估计出来,使用RGBD集成从每个RGBD序列中重建一个彩色片段。

(5)Batch processing

# examples/python/reconstruction_system/make_fragments.py

def run(config):

print("making fragments from RGBD sequence.")

make_clean_folder(join(config["path_dataset"], config["folder_fragment"]))

[color_files, depth_files] = get_rgbd_file_lists(config["path_dataset"])

n_files = len(color_files)

n_fragments = int(

math.ceil(float(n_files) / config['n_frames_per_fragment']))

if config["python_multi_threading"] is True:

from joblib import Parallel, delayed

import multiprocessing

import subprocess

MAX_THREAD = min(multiprocessing.cpu_count(), n_fragments)

Parallel(n_jobs=MAX_THREAD)(delayed(process_single_fragment)(

fragment_id, color_files, depth_files, n_files, n_fragments, config)

for fragment_id in range(n_fragments))

else:

for fragment_id in range(n_fragments):

process_single_fragment(fragment_id, color_files, depth_files,

n_files, n_fragments, config)main函数调用上面解释的每个单独的函数。

(6)结果

(7)以下是来自optimize_posegraph_for_fragment的日志

(8)以下是来自integrate_rgb_frames_for_fragment 的日志

3.3 Register fragments

一旦场景的片段(fragments)被创建,下一步就是在全局空间中对齐它们。

(1)主要操作方法

1748

1748

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?