```python
# Called from conv2d() in PointNet's tf_util.py when bn=True
outputs = batch_norm_for_conv2d(outputs, is_training, bn_decay=bn_decay, scope='bn')
```

```python
def batch_norm_for_conv2d(inputs, is_training, bn_decay, scope):
    """ Batch normalization on 2D convolutional maps.

    Args:
        inputs:      Tensor, 4D BHWC input maps
        is_training: boolean tf.Variable, true indicates training phase
        bn_decay:    float or float tensor variable, controlling moving average weight
        scope:       string, variable scope
    Return:
        normed:      batch-normalized maps
    """
    return batch_norm_template(inputs, is_training, scope, [0, 1, 2], bn_decay)
```

According to the author's docstring, batch_norm_for_conv2d applies batch normalization to 2D convolutional feature maps, with statistics computed over each batch. The actual work is done in batch_norm_template, shown below:

```python
import tensorflow as tf  # TensorFlow 1.x graph-mode API


def batch_norm_template(inputs, is_training, scope, moments_dims, bn_decay):
    """ Batch normalization on convolutional maps and beyond...
    Ref.: http://stackoverflow.com/questions/33949786/how-could-i-use-batch-normalization-in-tensorflow

    Args:
        inputs:       Tensor, k-D input ... x C could be BC or BHWC or BDHWC
        is_training:  boolean tf.Variable, true indicates training phase
        scope:        string, variable scope
        moments_dims: a list of ints, indicating dimensions for moments calculation
        bn_decay:     float or float tensor variable, controlling moving average weight
    Return:
        normed:       batch-normalized maps
    """
    with tf.variable_scope(scope) as sc:
        num_channels = inputs.get_shape()[-1].value
        beta = tf.Variable(tf.constant(0.0, shape=[num_channels]),
                           name='beta', trainable=True)
        gamma = tf.Variable(tf.constant(1.0, shape=[num_channels]),
                            name='gamma', trainable=True)
        batch_mean, batch_var = tf.nn.moments(inputs, moments_dims, name='moments')
        decay = bn_decay if bn_decay is not None else 0.9
        ema = tf.train.ExponentialMovingAverage(decay=decay)
        # Operator that maintains moving averages of variables.
        ema_apply_op = tf.cond(is_training,
                               lambda: ema.apply([batch_mean, batch_var]),
                               lambda: tf.no_op())

        # Update moving average and return current batch's avg and var.
        def mean_var_with_update():
            with tf.control_dependencies([ema_apply_op]):
                return tf.identity(batch_mean), tf.identity(batch_var)

        # ema.average returns the Variable holding the average of var.
        mean, var = tf.cond(is_training,
                            mean_var_with_update,
                            lambda: (ema.average(batch_mean), ema.average(batch_var)))
        normed = tf.nn.batch_normalization(inputs, mean, var, beta, gamma, 1e-3)
    return normed
```

In the PointNet project, take as input the output of 'tconv1' in "transformnet1", which has shape [32, 1024, 1, 64] (batch size 32, 1024 points, width 1, 64 channels). Then:

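num_channels = 64 (the size of the last axis), and

```python
beta = tf.Variable(tf.constant(0.0, shape=[num_channels]),
                   name='beta', trainable=True)
gamma = tf.Variable(tf.constant(1.0, shape=[num_channels]),
                    name='gamma', trainable=True)
```

create the tensors 'beta' and 'gamma', each a float vector of shape [64]. Because they are marked trainable=True, the optimizer updates them during training. beta is initialized to 0 and gamma to 1.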

moments_dims is [0, 1, 2], a list of the axes to reduce over: batch (0), height (1) and width (2). Only the channel axis (3) is kept.

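```python
batch_mean, batch_var = tf.nn.moments(inputs, moments_dims, name='moments')
```

This computes the batch mean and variance tensors. The batch size is clearly 32, so why are the mean and variance tensors of length 64? Because the reduction runs over axes 0, 1 and 2, every axis except the channel axis is averaged away. Each of the 64 channels therefore gets one mean and one variance, computed from 32 × 1024 × 1 = 32768 values.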
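To make the per-channel reduction concrete, here is a minimal pure-Python sketch of what reducing a BHWC tensor over axes [0, 1, 2] means. It does not use TensorFlow. The helper name `channel_moments` and the toy shape [2, 3, 1, 4] are illustrative assumptions. The variance is the population variance (divide by the count), which is how `tf.nn.moments` computes it.

```python
from itertools import product


def channel_moments(x, shape):
    """Per-channel mean and population variance of a nested-list BHWC tensor.

    Mirrors a reduction over axes [0, 1, 2]: every axis except the last
    (channel) axis is averaged away, leaving one value per channel.
    """
    b, h, w, c = shape
    count = b * h * w  # number of values pooled for each channel
    means, variances = [], []
    for ch in range(c):
        vals = [x[i][j][k][ch] for i, j, k in product(range(b), range(h), range(w))]
        mean = sum(vals) / count
        var = sum((v - mean) ** 2 for v in vals) / count  # divide by count, not count - 1
        means.append(mean)
        variances.append(var)
    return means, variances


# Toy BHWC tensor of shape [2, 3, 1, 4]; each value encodes its batch, row and channel.
b, h, w, c = 2, 3, 1, 4
x = [[[[float(100 * i + 10 * j + ch) for ch in range(c)] for _ in range(w)]
      for j in range(h)] for i in range(b)]

means, variances = channel_moments(x, (b, h, w, c))
print(len(means), len(variances))  # 4 4: one value per channel, not per batch element
```

The result length is the channel count, and the batch size does not affect it. For the [32, 1024, 1, 64] tensor in the text, the same reduction pools 32768 values per channel and returns 64 means and 64 variances.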

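`decay = bn_decay` (falling back to 0.9 when bn_decay is None). Note that bn_decay is not the learning rate. PointNet's training script builds it as a separate schedule using the same exponential-decay mechanism as the learning rate. Because it is computed as 1 minus a decaying momentum, it increases over training until it reaches a clip value.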
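The schedule can be sketched in plain Python as follows. The constants (initial momentum 0.5, rate 0.5, step 200000 samples, clip 0.99) are assumptions modeled on PointNet's train.py defaults and should be checked against the repository. The function name `bn_decay_at` is hypothetical. The staircase formula is the one used by `tf.train.exponential_decay(..., staircase=True)`.

```python
import math

# Assumed constants, modeled on PointNet's train.py defaults (verify in the repo).
BN_INIT_DECAY = 0.5
BN_DECAY_DECAY_RATE = 0.5
BN_DECAY_DECAY_STEP = 200000.0
BN_DECAY_CLIP = 0.99


def bn_decay_at(batch, batch_size=32):
    """Hypothetical helper: bn_decay after `batch` training steps."""
    samples_seen = batch * batch_size
    # Staircase exponential decay of the BN momentum.
    bn_momentum = BN_INIT_DECAY * BN_DECAY_DECAY_RATE ** math.floor(
        samples_seen / BN_DECAY_DECAY_STEP)
    # decay = 1 - momentum grows during training, capped at the clip value.
    return min(BN_DECAY_CLIP, 1 - bn_momentum)


for step in (0, 6250, 12500, 50000):
    print(step, bn_decay_at(step))
```

Under these assumptions the printed decay goes 0.5, 0.75, 0.875 and then stays at the 0.99 cap. Early in training the moving averages therefore follow the batch statistics closely. Later they change slowly.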

```python
ema = tf.train.ExponentialMovingAverage(decay=decay)
```

This creates an object that maintains exponential moving averages.

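```python
ema_apply_op = tf.cond(is_training,
                       lambda: ema.apply([batch_mean, batch_var]),
                       lambda: tf.no_op())
```

This uses ema to maintain moving averages of batch_mean and batch_var. The update only runs when is_training is true. Otherwise tf.no_op() leaves the averages unchanged.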
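Taken together, the two `tf.cond` calls in batch_norm_template (`ema_apply_op`, and the mean/var selection through `mean_var_with_update`) follow the control flow below. This is a plain-Python sketch for a single channel. The class name `MovingStats` is hypothetical, and starting the shadow values at 0.0 is an assumption made for illustration. The update line is the standard moving-average rule `shadow = decay * shadow + (1 - decay) * value`.

```python
class MovingStats:
    """Hypothetical stand-in for the ema / tf.cond logic in batch_norm_template."""

    def __init__(self, decay=0.9):
        self.decay = decay
        self.mean = 0.0  # shadow (moving-average) mean; initial value assumed
        self.var = 0.0   # shadow (moving-average) variance; initial value assumed

    def select(self, batch_mean, batch_var, is_training):
        if is_training:
            # ema_apply_op: fold the current batch statistics into the shadows ...
            self.mean = self.decay * self.mean + (1 - self.decay) * batch_mean
            self.var = self.decay * self.var + (1 - self.decay) * batch_var
            # ... then mean_var_with_update returns the current batch statistics.
            return batch_mean, batch_var
        # Inference: no update; use the accumulated moving averages instead.
        return self.mean, self.var


stats = MovingStats(decay=0.9)
for _ in range(50):
    stats.select(batch_mean=2.0, batch_var=4.0, is_training=True)

print(stats.select(batch_mean=100.0, batch_var=100.0, is_training=False))
```

At inference the batch statistics passed in (100.0 here) are ignored. The returned values are close to 2.0 and 4.0, so a test sample's output does not depend on the rest of its batch.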

```python
normed = tf.nn.batch_normalization(inputs, mean, var, beta, gamma, 1e-3)
```

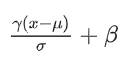

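The formula is:

$$\text{normed} = \gamma \cdot \frac{x - \mu}{\sqrt{\sigma^2 + \epsilon}} + \beta, \qquad \epsilon = 10^{-3}$$

where μ is the mean and σ² the variance. Both gamma and beta enter the normalization. Since gamma is initialized to 1 and beta to 0, the default computation is (x − mean) / √(variance + ε). That is, x is divided by the standard deviation, not by the variance.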
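The formula can be checked on a single channel with a short pure-Python sketch. The function name `batch_norm` and the sample values are illustrative assumptions. The arithmetic is the formula above with ε = 1e-3, as passed in batch_norm_template.

```python
import math


def batch_norm(values, mean, var, beta=0.0, gamma=1.0, eps=1e-3):
    """gamma * (x - mean) / sqrt(var + eps) + beta, applied to one channel."""
    inv_std = 1.0 / math.sqrt(var + eps)  # divide by the standard deviation, not var
    return [gamma * (x - mean) * inv_std + beta for x in values]


xs = [1.0, 2.0, 3.0, 4.0]
mu = sum(xs) / len(xs)                          # 2.5
var = sum((x - mu) ** 2 for x in xs) / len(xs)  # 1.25 (population variance)
ys = batch_norm(xs, mu, var)                    # default gamma = 1, beta = 0

print([round(y, 3) for y in ys])
```

With γ = 1 and β = 0 the output has zero mean and variance var / (var + ε), slightly below 1 because of ε.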
