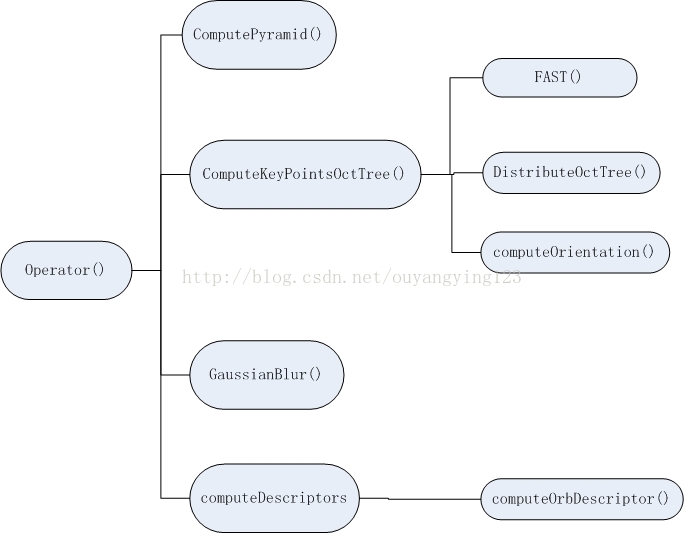

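The overall call structure is as follows.

The overloaded operator() performs four steps:

1. Compute the image pyramid

2. Extract keypoints

3. Apply Gaussian blur

4. Compute descriptors

operator()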

void ORBextractor::operator()( InputArray _image, InputArray _mask, vector<KeyPoint>& _keypoints,

OutputArray _descriptors)

{

if(_image.empty())

return;

Mat image = _image.getMat();

assert(image.type() == CV_8UC1 );

/*

Build the image pyramid. Every level is derived from the same input image by

repeated downsampling, giving a set of images at different resolutions.

*/

ComputePyramid(image);

/*

Compute keypoint positions

*/

vector < vector<KeyPoint> > allKeypoints;

ComputeKeyPointsOctTree(allKeypoints);

Mat descriptors;

int nkeypoints = 0;

for (int level = 0; level < nlevels; ++level)

nkeypoints += (int)allKeypoints[level].size();

if( nkeypoints == 0 )

_descriptors.release();

else

{

_descriptors.create(nkeypoints, 32, CV_8U);

descriptors = _descriptors.getMat();

}

_keypoints.clear();

_keypoints.reserve(nkeypoints);

int offset = 0;

for (int level = 0; level < nlevels; ++level)

{

vector<KeyPoint>& keypoints = allKeypoints[level];

int nkeypointsLevel = (int)keypoints.size();

if(nkeypointsLevel==0)

continue;

/*

Blur the level image with a Gaussian to suppress noise before computing descriptors

*/

Mat workingMat = mvImagePyramid[level].clone();

GaussianBlur(workingMat, workingMat, Size(7, 7), 2, 2, BORDER_REFLECT_101);

/*

Compute descriptors

*/

Mat desc = descriptors.rowRange(offset, offset + nkeypointsLevel);

computeDescriptors(workingMat, keypoints, desc, pattern);

offset += nkeypointsLevel;

// Scale keypoint coordinates back to the original image

if (level != 0)

{

float scale = mvScaleFactor[level]; //getScale(level, firstLevel, scaleFactor);

for (vector<KeyPoint>::iterator keypoint = keypoints.begin(),

keypointEnd = keypoints.end(); keypoint != keypointEnd; ++keypoint)

keypoint->pt *= scale;

}

// And add the keypoints to the output

_keypoints.insert(_keypoints.end(), keypoints.begin(), keypoints.end());

}

}

ComputePyramid()

For a detailed explanation of image pyramids, see:

http://www.opencv.org.cn/opencvdoc/2.3.2/html/doc/tutorials/imgproc/pyramids/pyramids.html
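Before reading the function, it can help to see which sizes the pyramid levels end up with. The following is a minimal sketch, assuming the per-level factor is the cumulative product of one constant `scaleFactor` and that `mvInvScaleFactor[level]` is its reciprocal. `LevelInfo` and `pyramidLevels` are hypothetical names used only for illustration, and `std::lround` stands in for `cvRound` (the two can differ on exact .5 values).

```cpp
#include <cmath>
#include <vector>

// Hypothetical record describing one pyramid level.
struct LevelInfo {
    float scale;     // cumulative scale relative to level 0
    float invScale;  // 1 / scale, the factor applied to the image size
    int width;
    int height;
};

// Lists the scale and image size of each level for a cols x rows input.
std::vector<LevelInfo> pyramidLevels(int cols, int rows, int nlevels, float scaleFactor) {
    std::vector<LevelInfo> levels(nlevels);
    float scale = 1.0f;
    for (int level = 0; level < nlevels; ++level) {
        if (level > 0) scale *= scaleFactor;  // level 0 keeps the input resolution
        const float inv = 1.0f / scale;
        levels[level] = {scale, inv,
                         static_cast<int>(std::lround(cols * inv)),
                         static_cast<int>(std::lround(rows * inv))};
    }
    return levels;
}
```

For example, `pyramidLevels(640, 480, 8, 1.2f)` (illustrative values) yields 640x480 at level 0 and 533x400 at level 1.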

void ORBextractor::ComputePyramid(cv::Mat image)

{

// Build nlevels images at different resolutions

for (int level = 0; level < nlevels; ++level)

{

float scale = mvInvScaleFactor[level];

Size sz(cvRound((float)image.cols*scale), cvRound((float)image.rows*scale));

Size wholeSize(sz.width + EDGE_THRESHOLD*2, sz.height + EDGE_THRESHOLD*2);

Mat temp(wholeSize, image.type()), masktemp;

mvImagePyramid[level] = temp(Rect(EDGE_THRESHOLD, EDGE_THRESHOLD, sz.width, sz.height));

// Compute the resized image

if( level != 0 )

{

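// Resize the previous pyramid level to size sz

resize(mvImagePyramid[level-1], mvImagePyramid[level], sz, 0, 0, INTER_LINEAR);

// Pad the image with a mirrored border of width EDGE_THRESHOLD on every side,

// so later stages need no bounds checks near the edges (the padding could be dropped if those stages checked bounds instead)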

copyMakeBorder(mvImagePyramid[level], temp, EDGE_THRESHOLD, EDGE_THRESHOLD, EDGE_THRESHOLD, EDGE_THRESHOLD,

BORDER_REFLECT_101+BORDER_ISOLATED);

}

else // level 0 keeps the original resolution, so no resize is needed

{

copyMakeBorder(image, temp, EDGE_THRESHOLD, EDGE_THRESHOLD, EDGE_THRESHOLD, EDGE_THRESHOLD,

BORDER_REFLECT_101);

}

}

}

ComputeKeyPointsOctTree()

For the grid partitioning, see this article: http://www.cnblogs.com/JingeTU/p/6438968.html

For the FAST code, see: http://blog.csdn.net/ouyangying123/article/details/71576390
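The grid arithmetic in the function below is easier to follow in isolation. This sketch mirrors the cell computation using plain integers; `Cell` and `gridCells` are hypothetical names, and the windows are returned as half-open ranges like the ones passed to `rowRange`/`colRange`. The 6-pixel overlap between neighbouring windows presumably exists because FAST examines a circle of radius 3 and cannot evaluate pixels closer than that to a window edge.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Hypothetical window type: half-open ranges [x0, x1) x [y0, y1).
struct Cell { int x0, y0, x1, y1; };

// Splits the region [minX, maxX) x [minY, maxY) into cells of roughly W pixels,
// using the same rounding and skip rules as the listing.
std::vector<Cell> gridCells(int minX, int minY, int maxX, int maxY, float W = 30.0f) {
    const float width = static_cast<float>(maxX - minX);
    const float height = static_cast<float>(maxY - minY);
    const int nCols = static_cast<int>(width / W);
    const int nRows = static_cast<int>(height / W);
    std::vector<Cell> cells;
    if (nCols <= 0 || nRows <= 0) return cells;  // region smaller than one cell
    const int wCell = static_cast<int>(std::ceil(width / nCols));
    const int hCell = static_cast<int>(std::ceil(height / nRows));
    for (int i = 0; i < nRows; ++i) {
        const int iniY = minY + i * hCell;
        if (iniY >= maxY - 3) continue;  // window would be too thin
        const int endY = std::min(iniY + hCell + 6, maxY);
        for (int j = 0; j < nCols; ++j) {
            const int iniX = minX + j * wCell;
            if (iniX >= maxX - 6) continue;
            const int endX = std::min(iniX + wCell + 6, maxX);
            cells.push_back({iniX, iniY, endX, endY});
        }
    }
    return cells;
}
```

With the illustrative bounds `gridCells(16, 16, 624, 464)` the region is cut into 20 x 14 cells, and the last column and row are clipped to the region border.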

void ORBextractor::ComputeKeyPointsOctTree(vector<vector<KeyPoint> >& allKeypoints)

{

allKeypoints.resize(nlevels);

const float W = 30;

// Detect keypoints on each level

for (int level = 0; level < nlevels; ++level)

{

// The values below split the image into grid cells; keypoints are detected in each cell separately

const int minBorderX = EDGE_THRESHOLD-3;

const int minBorderY = minBorderX;

const int maxBorderX = mvImagePyramid[level].cols-EDGE_THRESHOLD+3;

const int maxBorderY = mvImagePyramid[level].rows-EDGE_THRESHOLD+3;

vector<cv::KeyPoint> vToDistributeKeys;

vToDistributeKeys.reserve(nfeatures*10);

const float width = (maxBorderX-minBorderX);

const float height = (maxBorderY-minBorderY);

const int nCols = width/W;

const int nRows = height/W;

const int wCell = ceil(width/nCols);

const int hCell = ceil(height/nRows);

// Split the image into nRows * nCols cells

for(int i=0; i<nRows; i++)

{

const float iniY =minBorderY+i*hCell;

float maxY = iniY+hCell+6;

if(iniY>=maxBorderY-3)

continue;

if(maxY>maxBorderY)

maxY = maxBorderY;

for(int j=0; j<nCols; j++)

{

const float iniX =minBorderX+j*wCell;

float maxX = iniX+wCell+6;

if(iniX>=maxBorderX-6)

continue;

if(maxX>maxBorderX)

maxX = maxBorderX;

vector<cv::KeyPoint> vKeysCell;

// Run FAST on the pixels of this cell

FAST(mvImagePyramid[level].rowRange(iniY,maxY).colRange(iniX,maxX),

vKeysCell,iniThFAST,true);

if(vKeysCell.empty()) // nothing found in this cell: retry with the lower threshold

{

FAST(mvImagePyramid[level].rowRange(iniY,maxY).colRange(iniX,maxX),

vKeysCell,minThFAST,true);

}

if(!vKeysCell.empty())

{

for(vector<cv::KeyPoint>::iterator vit=vKeysCell.begin(); vit!=vKeysCell.end();vit++)

{

(*vit).pt.x+=j*wCell;

(*vit).pt.y+=i*hCell;

vToDistributeKeys.push_back(*vit);

}

}

}

}

vector<KeyPoint> & keypoints = allKeypoints[level];

keypoints.reserve(nfeatures);

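// Spread the keypoints evenly over the image with DistributeOctTree (each node splits into four children, so it is a quadtree despite the name)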

keypoints = DistributeOctTree(vToDistributeKeys, minBorderX, maxBorderX,

minBorderY, maxBorderY,mnFeaturesPerLevel[level], level);

const int scaledPatchSize = PATCH_SIZE * mvScaleFactor[level];

// Convert to coordinates in the pyramid image (up to here the keypoints are relative to the origin (minBorderX, minBorderY))

const int nkps = keypoints.size();

for(int i=0; i<nkps ; i++)

{

keypoints[i].pt.x+=minBorderX;

keypoints[i].pt.y+=minBorderY;

keypoints[i].octave=level;

keypoints[i].size = scaledPatchSize;

}

}

// Compute keypoint orientations

for (int level = 0; level < nlevels; ++level)

computeOrientation(mvImagePyramid[level], allKeypoints[level], umax);

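}

GaussianBlur()

OpenCV implements image filtering through a FilterEngine class. In short, Gaussian blur convolves the image with a matrix sampled from a normal distribution (3x3 is used here as an example). For the visual effect, see these two articles: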

http://blog.sina.com.cn/s/blog_c936dba00102vzhu.html

http://blog.csdn.net/chentravelling/article/details/48985561

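Convolving directly with a full 2D kernel (3x3 in the example above) is expensive. One cheaper option is a cross-shaped kernel that samples only the row and the column through each pixel; this approximates the full Gaussian rather than reproducing it. A further optimization is the recursive algorithm described in "Recursive implementation of the Gaussian filter"; for details see this article: http://www.cnblogs.com/Imageshop/p/6376028.html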
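The cost argument rests on the fact that a 2D Gaussian is the outer product of two 1D Gaussians, so a 7x7 blur can be done as two 7-tap passes (14 multiplications per pixel instead of 49). As a small sketch, the 1D weights for a given size and sigma can be generated as follows; `gaussianKernel1D` is a hypothetical helper name, not an OpenCV function.

```cpp
#include <cmath>
#include <vector>

// Returns a normalized 1D Gaussian kernel with an odd number of taps.
std::vector<double> gaussianKernel1D(int taps, double sigma) {
    const int radius = taps / 2;
    std::vector<double> k(taps);
    double sum = 0.0;
    for (int i = -radius; i <= radius; ++i) {
        k[i + radius] = std::exp(-(i * i) / (2.0 * sigma * sigma));
        sum += k[i + radius];
    }
    for (double& v : k) v /= sum;  // weights sum to 1, so brightness is preserved
    return k;
}
```

Calling `gaussianKernel1D(7, 2.0)` gives weights close to 0.0702, 0.1311, 0.1907, 0.2161 and their mirror, which matches the 7-tap kernel used in the pseudocode in this section.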

Cross-shaped kernel pseudocode:

void GaussianBlur(image &src, image &dst) // input is a single-channel grayscale image

{

/*

1D Gaussian kernel, 7 taps, sigma = 2

*/

double kernel[7] = {

0.0702, 0.1311, 0.1907, 0.2161, 0.1907, 0.1311, 0.0702

};

/*

Weighted sum

*/

int length = 3; // 7/2

double tmp;

for (int y = 0; y < src.Size().y; ++y){

for (int x = 0; x < src.Size().x; ++x){

tmp = 0;

for (int i = -length; i <= length; ++i){ // realx and realy are the border-mirrored indices

int realx = x;

if (x + i < 0) realx = -(x + i);

else if (x + i > src.Size().x - 1) realx = 2 * (src.Size().x - 1) - (x + i);

else realx = x + i;

tmp += kernel[i + length] * (double)(*(src.Buffer() + y * src.Size().x + realx)); // horizontal

int realy = y;

if (y + i < 0) realy = -(y + i);

else if (y + i > src.Size().y - 1) realy = 2 * (src.Size().y - 1) - (y + i);

else realy = y + i;

tmp += kernel[i + length] * (double)(*(src.Buffer() + realy * src.Size().x + x)); // vertical

}

// The horizontal and vertical sums each carry a total weight of 1, so halve the result to keep the brightness unchanged

*(dst.Buffer() + y * src.Size().x + x) = (uchar)(tmp * 0.5 + 0.5);

}

}

}
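For comparison with the cross-shaped approximation, here is a sketch of the exact separable form: one horizontal pass into a temporary buffer, then one vertical pass over that buffer. It assumes a row-major 8-bit grayscale image stored in a `std::vector`; `reflect101` and `separableBlur` are hypothetical helper names, and the mirroring imitates the `BORDER_REFLECT_101` behaviour used in the `GaussianBlur` call earlier.

```cpp
#include <cmath>
#include <cstdint>
#include <vector>

// Mirrors an out-of-range index without repeating the edge pixel.
// Valid while the overshoot is smaller than n - 1.
static int reflect101(int i, int n) {
    if (n == 1) return 0;
    if (i < 0) i = -i;
    if (i >= n) i = 2 * (n - 1) - i;
    return i;
}

// Separable blur of a row-major grayscale image with a normalized 1D kernel.
std::vector<std::uint8_t> separableBlur(const std::vector<std::uint8_t>& src,
                                        int width, int height,
                                        const std::vector<double>& kernel) {
    const int radius = static_cast<int>(kernel.size()) / 2;
    std::vector<double> tmp(src.size());
    std::vector<std::uint8_t> dst(src.size());
    // Horizontal pass: filter each row into the temporary buffer.
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x) {
            double acc = 0.0;
            for (int i = -radius; i <= radius; ++i)
                acc += kernel[i + radius] * src[y * width + reflect101(x + i, width)];
            tmp[y * width + x] = acc;
        }
    // Vertical pass: filter each column of the horizontally blurred data.
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x) {
            double acc = 0.0;
            for (int i = -radius; i <= radius; ++i)
                acc += kernel[i + radius] * tmp[reflect101(y + i, height) * width + x];
            double v = std::round(acc);
            v = v < 0.0 ? 0.0 : (v > 255.0 ? 255.0 : v);  // clamp to the 8-bit range
            dst[y * width + x] = static_cast<std::uint8_t>(v);
        }
    return dst;
}
```

Keeping the intermediate buffer in floating point avoids rounding twice; a constant image comes out unchanged because the kernel weights sum to 1.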

Recursive implementation pseudocode:

void GaussianBlur(image &img){

/*

Recursive filter coefficients, intended to approximate the 7x7, sigma = 2 Gaussian

*/

double B0 = 0.3554, B1 = 0.3136, B2 = 0.2156, B3 = 0.1154;

/*

Weighted sum

*/

int Width = img.Size().x, Height = img.Size().y;

for (int Y = 0; Y < Height; ++Y){ // horizontal blur

uchar *LinePD = img.Buffer() + Y * Width;

double S1 = LinePD[0], S2 = LinePD[0], S3 = LinePD[0]; // replicate the edge pixel

for (int X = 0; X < Width - 3; X++, LinePD++){

LinePD[0] = (double)LinePD[0] * B0 + S1 * B1 + S2 * B2 + S3 * B3;

S3 = S2, S2 = S1, S1 = LinePD[0];

}

}

for (int Y = 0; Y < Height - 3; Y++){ // vertical blur

uchar *LinePD3 = img.Buffer() + (Y + 0) * Width;

uchar *LinePD2 = img.Buffer() + (Y + 1) * Width;

uchar *LinePD1 = img.Buffer() + (Y + 2) * Width;

uchar *LinePD0 = img.Buffer() + (Y + 3) * Width;

for (int X = 0; X < Width; X++, LinePD0++, LinePD1++, LinePD2++, LinePD3++){

LinePD0[0] = (double)LinePD0[0] * B0 + (double)LinePD1[0] * B1 + (double)LinePD2[0] * B2 + (double)LinePD3[0] * B3;

}

}

}

static void computeDescriptors(const Mat& image, vector<KeyPoint>& keypoints, Mat& descriptors,

const vector<Point>& pattern)

{

descriptors = Mat::zeros((int)keypoints.size(), 32, CV_8UC1);

for (size_t i = 0; i < keypoints.size(); i++)

computeOrbDescriptor(keypoints[i], image, &pattern[0], descriptors.ptr((int)i));

}
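computeOrbDescriptor, listed next, does two things for each of the 32 descriptor bytes: it rotates 16 pattern points by the keypoint angle (`factorPI` converts degrees to radians), then packs eight intensity comparisons into one byte, for 256 bits in total. The sketch below separates those two steps; `Offset`, `rotatePatternPoint` and `packComparisons` are hypothetical names, and `std::lround` stands in for `cvRound`.

```cpp
#include <cmath>
#include <cstdint>

// Pixel offset relative to the keypoint centre.
struct Offset { int dx; int dy; };

// Rotates a pattern point by angleRad and rounds to the nearest pixel,
// matching the index arithmetic inside the GET_VALUE macro.
Offset rotatePatternPoint(int px, int py, float angleRad) {
    const float a = std::cos(angleRad), b = std::sin(angleRad);
    return {static_cast<int>(std::lround(px * a - py * b)),
            static_cast<int>(std::lround(px * b + py * a))};
}

// Packs eight "first < second" comparisons into one byte; pair k sets bit k.
std::uint8_t packComparisons(const int (&values)[16]) {
    std::uint8_t byte = 0;
    for (int k = 0; k < 8; ++k)
        if (values[2 * k] < values[2 * k + 1])
            byte |= static_cast<std::uint8_t>(1u << k);
    return byte;
}
```

Because the pattern is rotated with the keypoint orientation before sampling, the same physical patch produces similar bits after an in-plane rotation of the image.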

static void computeOrbDescriptor(const KeyPoint& kpt,

const Mat& img, const Point* pattern,

uchar* desc)

{

float angle = (float)kpt.angle*factorPI;

float a = (float)cos(angle), b = (float)sin(angle);

const uchar* center = &img.at<uchar>(cvRound(kpt.pt.y), cvRound(kpt.pt.x));

const int step = (int)img.step;

#define GET_VALUE(idx) \

center[cvRound(pattern[idx].x*b + pattern[idx].y*a)*step + \

cvRound(pattern[idx].x*a - pattern[idx].y*b)]

for (int i = 0; i < 32; ++i, pattern += 16)

{

int t0, t1, val;

t0 = GET_VALUE(0); t1 = GET_VALUE(1);

val = t0 < t1;

t0 = GET_VALUE(2); t1 = GET_VALUE(3);

val |= (t0 < t1) << 1;

t0 = GET_VALUE(4); t1 = GET_VALUE(5);

val |= (t0 < t1) << 2;

t0 = GET_VALUE(6); t1 = GET_VALUE(7);

val |= (t0 < t1) << 3;

t0 = GET_VALUE(8); t1 = GET_VALUE(9);

val |= (t0 < t1) << 4;

t0 = GET_VALUE(10); t1 = GET_VALUE(11);

val |= (t0 < t1) << 5;

t0 = GET_VALUE(12); t1 = GET_VALUE(13);

val |= (t0 < t1) << 6;

t0 = GET_VALUE(14); t1 = GET_VALUE(15);

val |= (t0 < t1) << 7;

desc[i] = (uchar)val;

}

#undef GET_VALUE

}

A few recommended articles:

http://blog.csdn.net/qq_30356613/article/details/71106605
