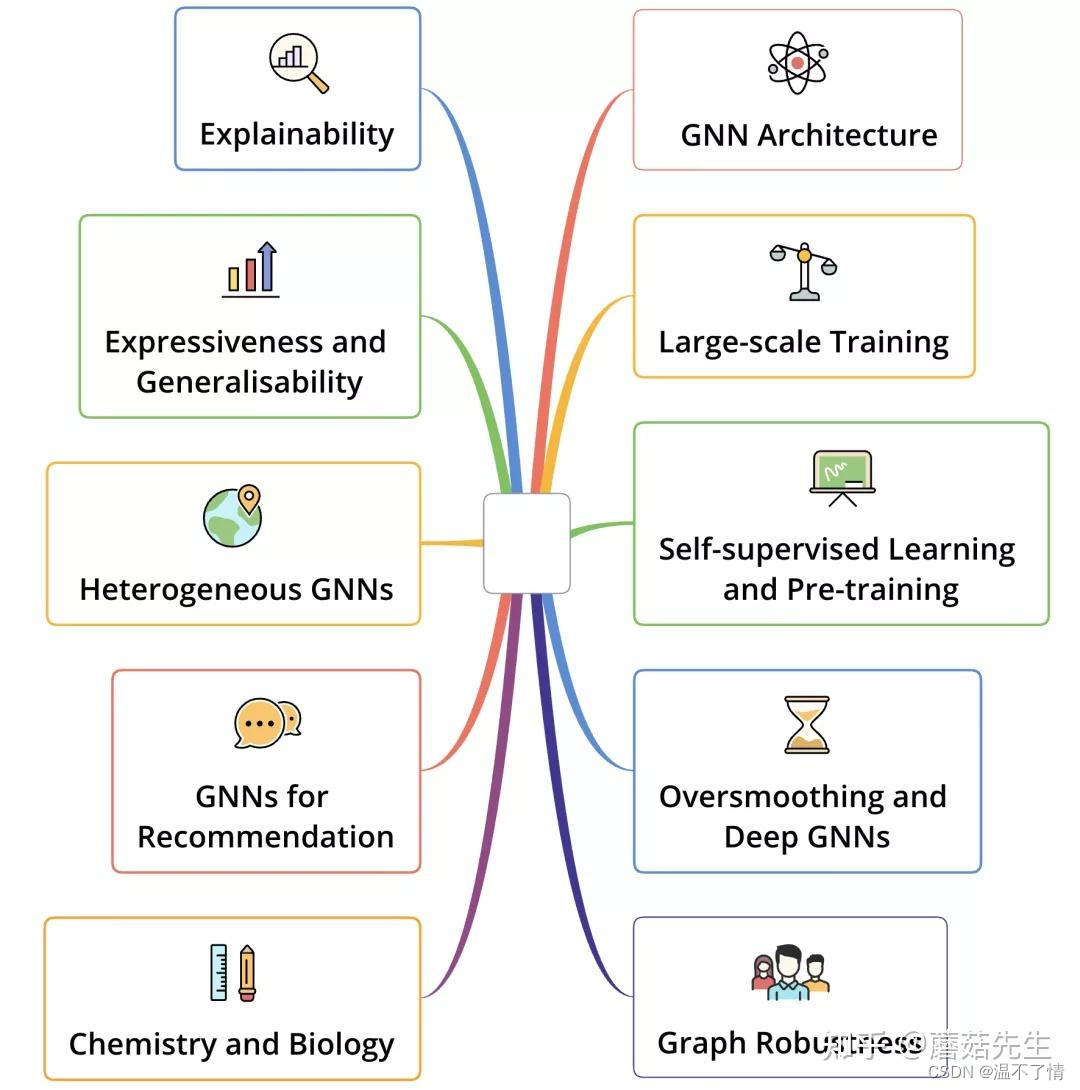

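Here is a roundup of ten major research directions in graph neural networks (GNNs). It covers 10 categories of GNN research, with 10 papers selected for each. Let's preview the 10 directions.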

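- GNN Architecture: the design of GNN architectures. This category covers most of the classic, must-read GNN papers, such as the GCN trilogy, GAT, and GGNN.

- Large-scale Training: training on large-scale graphs, e.g., the neighbor-sampling methods used in the classic GraphSAGE/PinSAGE.

- Self-supervised Learning and Pre-training: self-supervised learning and pre-training on graphs, a direction that has emerged over the past couple of years.

- Oversmoothing and Deep GNNs: the oversmoothing problem and deep GNNs. When a GNN stacks too many convolution layers, each node ends up indirectly aggregating information from almost every other node, so all node representations converge toward the same value. This has also been a popular research direction in recent years.

- Graph Robustness: the robustness of graph models, e.g., adversarial attacks and defenses on graphs.

- Explainability: the explainability of graph models.

- Expressiveness and Generalisability: the expressive power and generalization ability of graph models. This direction is tied to the classic graph isomorphism test and is also very popular; GIN is the classic example.

- Heterogeneous GNNs: GNNs on heterogeneous graphs, a very popular direction, e.g., HAN.

- GNNs for Recommendation: applications of GNNs to recommendation. This line of work is highly practical, e.g., GCMC, SRGNN, and LightGCN.

- Chemistry and Biology: applications of GNNs in chemistry and biology. The best-known example is AlphaFold, and the classic message-passing framework MPNN was itself first proposed in a chemistry application.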

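The oversmoothing effect described in the list above can be illustrated with a small sketch. It is not taken from any of the listed papers: it assumes a toy 5-node path graph and a simplified propagation step (plain mean aggregation with self-loops, no learned weights or nonlinearity), and the helper names `propagate` and `spread` are hypothetical. Under those assumptions, the gap between node features shrinks as more layers are stacked.

```python
def propagate(adj, feats):
    """One simplified GNN layer: each node averages itself and its neighbours."""
    out = {}
    for node, neighbours in adj.items():
        group = [node] + list(neighbours)  # self-loop plus neighbours
        dim = len(feats[node])
        out[node] = [sum(feats[g][d] for g in group) / len(group) for d in range(dim)]
    return out


def spread(feats):
    """Largest per-dimension gap between any two nodes' features."""
    vectors = list(feats.values())
    dim = len(vectors[0])
    return max(
        max(v[d] for v in vectors) - min(v[d] for v in vectors) for d in range(dim)
    )


# Toy path graph 0-1-2-3-4 (an assumption for illustration only).
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
# One scalar feature per node, initially equal to the node index.
feats = {node: [float(node)] for node in adj}

spreads = []  # (number of layers, feature spread) pairs
h = feats
for layer in range(1, 33):
    h = propagate(adj, h)
    if layer in (1, 2, 4, 8, 16, 32):
        spreads.append((layer, spread(h)))

for layer, gap in spreads:
    print(f"layers={layer:2d}  spread={gap:.4f}")
```

Real GNN layers also apply learned weights and nonlinearities, so this only shows the averaging component that drives representations together.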

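Of course, some classic papers and directions are not included, such as PinSAGE under GNNs for Recommendation, and work on disentangled representation learning under Explainability, e.g., DisenGCN. In my view, these papers and directions could also be considered for inclusion.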

GNN Architecture

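This direction studies GNN architectures and covers most of the classic, must-read GNN papers, such as the GCN trilogy based on spectral graph convolution, the gated sequence model GGNN, and the attention-based GAT.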
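As a rough illustration of the spectral-style graph convolution mentioned above, here is a minimal sketch of a single GCN layer in the form popularized by Kipf and Welling, H' = ReLU(Â H W) with Â = D̃^(-1/2) (A + I) D̃^(-1/2). The toy graph, the fixed weight matrix, and the helper names (`normalized_adjacency`, `matmul`, `gcn_layer`) are assumptions made for this example; a real implementation would use a framework with sparse operations and learned weights.

```python
import math


def normalized_adjacency(adj_matrix):
    """Return D^-1/2 (A + I) D^-1/2, the symmetric normalization with self-loops."""
    n = len(adj_matrix)
    a_tilde = [
        [adj_matrix[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]
    deg = [sum(row) for row in a_tilde]  # degrees including the self-loop
    return [
        [a_tilde[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
        for i in range(n)
    ]


def matmul(a, b):
    """Dense matrix product of two lists of lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def gcn_layer(adj_matrix, feats, weight):
    """One GCN layer: aggregate over the normalized graph, project, apply ReLU."""
    a_hat = normalized_adjacency(adj_matrix)
    z = matmul(matmul(a_hat, feats), weight)
    return [[max(0.0, v) for v in row] for row in z]


# Toy 3-node path graph 0-1-2 (illustrative only).
A = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 2 input features per node
W = [[1.0, -1.0], [0.5, 2.0]]  # hypothetical fixed weights, not learned

H = gcn_layer(A, X, W)
for row in H:
    print([round(v, 4) for v in row])
```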

- Semi-Supervised Classification with Graph Convolutional Networks. Thomas N. Kipf, Max Welling. ICLR'17.

- Graph Attention Networks. Petar Veličković, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Liò, Yoshua Bengio. ICLR'18.

- Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering. Michaël Defferrard, Xavier Bresson, Pierre Vandergheynst. NeurIPS'16.

- Predict then Propagate: Graph Neural Networks meet Personalized PageRank. Johannes Klicpera, Aleksandar Bojchevski, Stephan Günnemann. ICLR'19.

- Gated Graph Sequence Neural Networks. Yujia Li, Daniel Tarlow, Marc Brockschmidt, Richard Zemel. ICLR'16.

- Inductive Representation Learning on Large Graphs. William L. Hamilton, Rex Ying, Jure Leskovec. NeurIPS'17.

- Deep Graph Infomax. Petar Veličković, William Fedus, William L. Hamilton, Pietro Liò, Yoshua Bengio, R Devon Hjelm. ICLR'19.

- Representation Learning on Graphs with Jumping Knowledge Networks. Keyulu Xu, Chengtao Li, Yonglong Tian, Tomohiro Sonobe, Ken-ichi Kawarabayashi, Stefanie Jegelka. ICML'18.

- DeepGCNs: Can GCNs Go as Deep as CNNs? Guohao Li, Matthias Müller, Ali Thabet, Bernard Ghanem. ICCV'19.

- DropEdge: Towards Deep Graph Convolutional Networks on Node Classification. Yu Rong, Wenbing Huang, Tingyang Xu, Junzhou Huang. ICLR'20.

Large-scale Training

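This direction studies training on large-scale graphs, e.g., the large-scale neighbor sampling used in the classic GraphSAGE/PinSAGE. As a side note, this kind of work also appears in graph learning frameworks such as DGL, PyG, and Euler. On one hand, the training procedure itself can be improved, for example through neighbor sampling or subgraph sampling. On the other hand, the training environment can be made distributed: the original large graph is partitioned into subgraphs placed on different machines, and how to communicate between subgraphs and perform convolution across partitions is a challenging problem.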
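The neighbor-sampling idea can be sketched as follows. This is a simplified illustration in the spirit of GraphSAGE-style sampling, not the exact procedure from any paper or framework: it assumes uniform sampling without replacement, keeps all neighbors when a node has fewer than the fanout, and uses hypothetical helper names (`sample_neighbors`, `sample_computation_graph`) and a toy adjacency list.

```python
import random


def sample_neighbors(adj, node, fanout, rng):
    """Uniformly pick at most `fanout` neighbours of `node`."""
    neighbours = adj[node]
    if len(neighbours) <= fanout:
        return list(neighbours)
    return rng.sample(neighbours, fanout)


def sample_computation_graph(adj, seeds, fanouts, rng):
    """Expand the seed nodes hop by hop, sampling a bounded set of neighbours.

    Returns one dict per hop mapping each target node to its sampled neighbours.
    """
    layers = []
    frontier = list(seeds)
    for fanout in fanouts:
        block = {node: sample_neighbors(adj, node, fanout, rng) for node in frontier}
        layers.append(block)
        # Next frontier: current targets plus everything they sampled (deduplicated).
        seen = dict.fromkeys(frontier)
        for sampled in block.values():
            seen.update(dict.fromkeys(sampled))
        frontier = list(seen)
    return layers


# Toy graph as an adjacency list (illustrative only).
adj = {0: [1, 2, 3, 4], 1: [0, 2], 2: [0, 1, 3], 3: [0, 2], 4: [0]}
rng = random.Random(0)  # fixed seed so runs are repeatable

layers = sample_computation_graph(adj, seeds=[0], fanouts=[2, 2], rng=rng)
for hop, block in enumerate(layers, start=1):
    print(f"hop {hop}: {block}")
```

Bounding the fanout per hop caps the cost of a mini-batch at roughly the product of the fanouts per seed node, instead of growing with the full neighborhood size.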

- Inductive Representation Learning on Large Graphs. William L. Hamilton, Rex Ying, Jure Leskovec. NeurIPS'17.

- FastGCN: Fast Learning with Graph Convolutional Networks via Importance Sampling. Jie Chen, Tengfei Ma, Cao Xiao. ICLR'18.

- Cluster-GCN: An Efficient Algorithm for Training Deep and Large Graph Convolutional Networks. Wei-Lin Chiang, Xuanqing Liu, Si Si, Yang Li, Samy Bengio, Cho-Jui Hsieh. KDD'19.

- GraphSAINT: Graph Sampling Based Inductive Learning Method. Hanqing Zeng, Hongkuan Zhou, Ajitesh Srivastava, Rajgopal Kannan, Viktor Prasanna. ICLR'20.

- GNNAutoScale: Scalable and Expressive Graph Neural Networks via Historical Embeddings. Matthias Fey, Jan E. Lenssen, Frank Weichert, Jure Leskovec. ICML'21.

- Scaling Graph Neural Networks with Approximate PageRank. Aleksandar Bojchevski, Johannes Klicpera, Bryan Perozzi, Amol Kapoor, Mart

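This article summarizes ten major research directions in graph neural networks (GNNs): GNN architecture, large-scale training, self-supervised learning and pre-training, oversmoothing, graph robustness, explainability, expressiveness and generalisability, heterogeneous GNNs, recommendation applications, and chemistry and biology applications. Classic papers are selected for each direction, spanning basic theory through practical applications, as a guide to understanding GNNs in depth.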
