在YOLOv8模型进行训练之后,会在

/runs目录下生成训练结果,其中会生成相应的权重文件、图表、图像等。

这里以一次分割训练为例,生成的文件如下:

$ tree train3/

train3/

├── args.yaml

├── BoxF1_curve.png

├── BoxP_curve.png

├── BoxPR_curve.png

├── BoxR_curve.png

├── confusion_matrix_normalized.png

├── confusion_matrix.png

├── labels_correlogram.jpg

├── labels.jpg

├── MaskF1_curve.png

├── MaskP_curve.png

├── MaskPR_curve.png

├── MaskR_curve.png

├── results.csv

├── results.png

├── train_batch0.jpg

├── train_batch1.jpg

├── train_batch2.jpg

├── train_batch4480.jpg

├── train_batch4481.jpg

├── train_batch4482.jpg

├── val_batch0_labels.jpg

├── val_batch0_pred.jpg

├── val_batch1_labels.jpg

├── val_batch1_pred.jpg

├── val_batch2_labels.jpg

├── val_batch2_pred.jpg

└── weights

├── best.pt

└── last.pt

2 directories, 29 files

文件分析

- 1.weights

- 2.args.yaml

- 3.BoxF1_curve.png

- 4.BoxP_curve.png

- 5.BoxPR_curve.png

- 6.BoxR_curve.png

- 7.confusion_matrix_normalized.png、confusion_matrix.png

- 8.labels_correlogram.jpg

- 9.labels.jpg

- 10.MaskF1_curve.png

- 11.MaskP_curve.png

- 12.MaskPR_curve.png

- 13.MaskR_curve.png

- 14.results.csv

- 15.results.png

- 16.train_batch(N).jpg

- 17.val_batch(N)_labels.jpg 和 val_batch(N)_pred.jpg

1.weights

该目录下保存了两个训练时的权重:

1.1 best.pt

best.pt 则通常指代在验证集或测试集上表现最好的模型权重文件。在训练过程中,会通过监视模型在验证集上的性能,并在性能提升时保存模型的权重文件。best.pt 可以被用于得到在验证集上表现最好的模型,以避免模型在训练集上过拟合的问题。

1.2 last.pt

last.pt 一般指代模型训练过程中最后一个保存的权重文件。在训练过程中,模型的权重可能会定期保存,而 last.pt 就是最新的一次保存的模型权重文件。这样的文件通常用于从上一次训练的断点继续训练,或者用于模型的推理和评估。

PS:正常模型训练的过程中我们肯定选择

best.pt,但如果训练的数据集较少,则选择last.pt。

2.args.yaml

训练时的超参数:

task: segment

mode: train

model: pre_model_yolov8x_seg.pt

data: fruit_seg_train/dataset.yaml

epochs: 150

time: null

patience: 100

batch: 4

imgsz: 640

save: true

save_period: -1

cache: false

device: null

workers: 8

project: null

name: train3

exist_ok: false

pretrained: true

optimizer: auto

verbose: true

seed: 0

deterministic: true

single_cls: false

rect: false

cos_lr: false

close_mosaic: 10

resume: false

amp: true

fraction: 1.0

profile: false

freeze: null

multi_scale: false

overlap_mask: true

mask_ratio: 4

dropout: 0.0

val: true

split: val

save_json: false

save_hybrid: false

conf: null

iou: 0.7

max_det: 300

half: false

dnn: false

plots: true

source: null

vid_stride: 1

stream_buffer: false

visualize: false

augment: false

agnostic_nms: false

classes: null

retina_masks: false

embed: null

show: false

save_frames: false

save_txt: false

save_conf: false

save_crop: false

show_labels: true

show_conf: true

show_boxes: true

line_width: null

format: torchscript

keras: false

optimize: false

int8: false

dynamic: false

simplify: false

opset: null

workspace: 4

nms: false

lr0: 0.01

lrf: 0.01

momentum: 0.937

weight_decay: 0.0005

warmup_epochs: 3.0

warmup_momentum: 0.8

warmup_bias_lr: 0.1

box: 7.5

cls: 0.5

dfl: 1.5

pose: 12.0

kobj: 1.0

label_smoothing: 0.0

nbs: 64

hsv_h: 0.015

hsv_s: 0.7

hsv_v: 0.4

degrees: 0.0

translate: 0.1

scale: 0.5

shear: 0.0

perspective: 0.0

flipud: 0.0

fliplr: 0.5

bgr: 0.0

mosaic: 1.0

mixup: 0.0

copy_paste: 0.0

auto_augment: randaugment

erasing: 0.4

crop_fraction: 1.0

cfg: null

tracker: botsort.yaml

save_dir: runs/segment/train3

关于精确率和召回率

3.BoxF1_curve.png

为了能够评价不同算法的优劣,在

为了能够评价不同算法的优劣,在Precision和Recall的基础上提出了F1值的概念,来对Precision和Recall进行整体评价。

F1的定义如下:

F1曲线是一种多分类问题中常用的性能评估工具,尤其在竞赛中得到广泛应用。它基于F1分数,这是精确率和召回率的调和平均数,取值范围介于0和1之间。1代表最佳性能,而0代表最差性能。

通常情况下,通过调整置信度阈值(判定为某一类的概率阈值),可以观察到F1曲线在不同阈值下的变化。在阈值较低时,模型可能将许多置信度较低的样本判定为真,从而提高召回率但降低精确率。而在阈值较高时,只有置信度很高的样本才被判定为真,使得模型的类别判定更为准确,进而提高精确率。

理想状态下,从上图可知,F1曲线显示在置信度为0.6-0.8的区间内取得了较好的F1分数。表明在这个范围内,模型在平衡精确率和召回率方面表现较为理想。

4.BoxP_curve.png

PCC图的横坐标表示检测器的置信度,纵坐标表示精度(或召回率)。曲线的形状和位置反映了检测器在不同置信度水平下的性能。

PCC图的横坐标表示检测器的置信度,纵坐标表示精度(或召回率)。曲线的形状和位置反映了检测器在不同置信度水平下的性能。

在PCC图中,当曲线向上并向左弯曲时,表示在较低置信度下仍能保持较高的精度,说明检测器在高召回率的同时能够保持低误报率,即对目标的识别准确性较高。

相反,当曲线向下并向右弯曲时,说明在较高置信度下才能获得较高的精度,这可能导致漏检率的增加,表示检测器的性能较差。

因此,PCC图对于评估检测器在不同信心水平下的表现提供了有用的信息。在图中,曲线向上并向左弯曲是期望的效果,而曲线向下并向右弯曲则表示改进的空间。

5.BoxPR_curve.png

PR_curve是精确率(Precision)和召回率(Recall)之间的关系。精确率表示预测为正例的样本中真正为正例的比例,而召回率表示真正为正例的样本中被正确预测为正例的比例。

PR_curve是精确率(Precision)和召回率(Recall)之间的关系。精确率表示预测为正例的样本中真正为正例的比例,而召回率表示真正为正例的样本中被正确预测为正例的比例。

在PR Curve中,横坐标表示召回率,纵坐标表示精确率。通常情况下,当召回率升高时,精确率会降低,反之亦然。PR Curve反映了这种取舍关系。曲线越靠近右上角,表示模型在预测时能够同时保证高的精确率和高的召回率,即预测结果较为准确。相反,曲线越靠近左下角,表示模型在预测时难以同时保证高的精确率和高的召回率,即预测结果较为不准确。

通常,PR Curve与ROC Curve一同使用,以更全面地评估分类模型的性能。 PR Curve提供了对模型在不同任务下性能表现的更详细的洞察。

6.BoxR_curve.png

在理想情况下,希望算法在保持高召回率的同时能够保持较高的精度。

在RCC图中,当曲线在较高置信度水平下呈现较高召回率时,说明算法在目标检测时能够准确地预测目标的存在,并在过滤掉低置信度的预测框后依然能够维持高召回率。这反映了算法在目标检测任务中的良好性能。

值得注意的是,RCC图中曲线的斜率越陡峭,表示在过滤掉低置信度的预测框后,获得的召回率提升越大,从而提高模型的检测性能。

在图表中,曲线越接近右上角,表示模型性能越好。当曲线靠近图表的右上角时,说明模型在保持高召回率的同时能够维持较高的精度。因此,RCC图可用于全面评估模型性能,帮助找到平衡模型召回率和精度的合适阈值。

7.confusion_matrix_normalized.png、confusion_matrix.png

混淆矩阵是对分类问题预测结果的总结,通过计数值汇总正确和不正确预测的数量,并按每个类别进行细分,展示了分类模型在进行预测时对哪些部分产生混淆。该矩阵以行表示预测的类别(y轴),列表示真实的类别(x轴),具体内容如下:

混淆矩阵的使用有助于直观了解分类模型的错误类型,特别是了解模型是否将两个不同的类别混淆,将一个类别错误地预测为另一个类别。这种详细的分析有助于克服仅使用分类准确率带来的局限性。

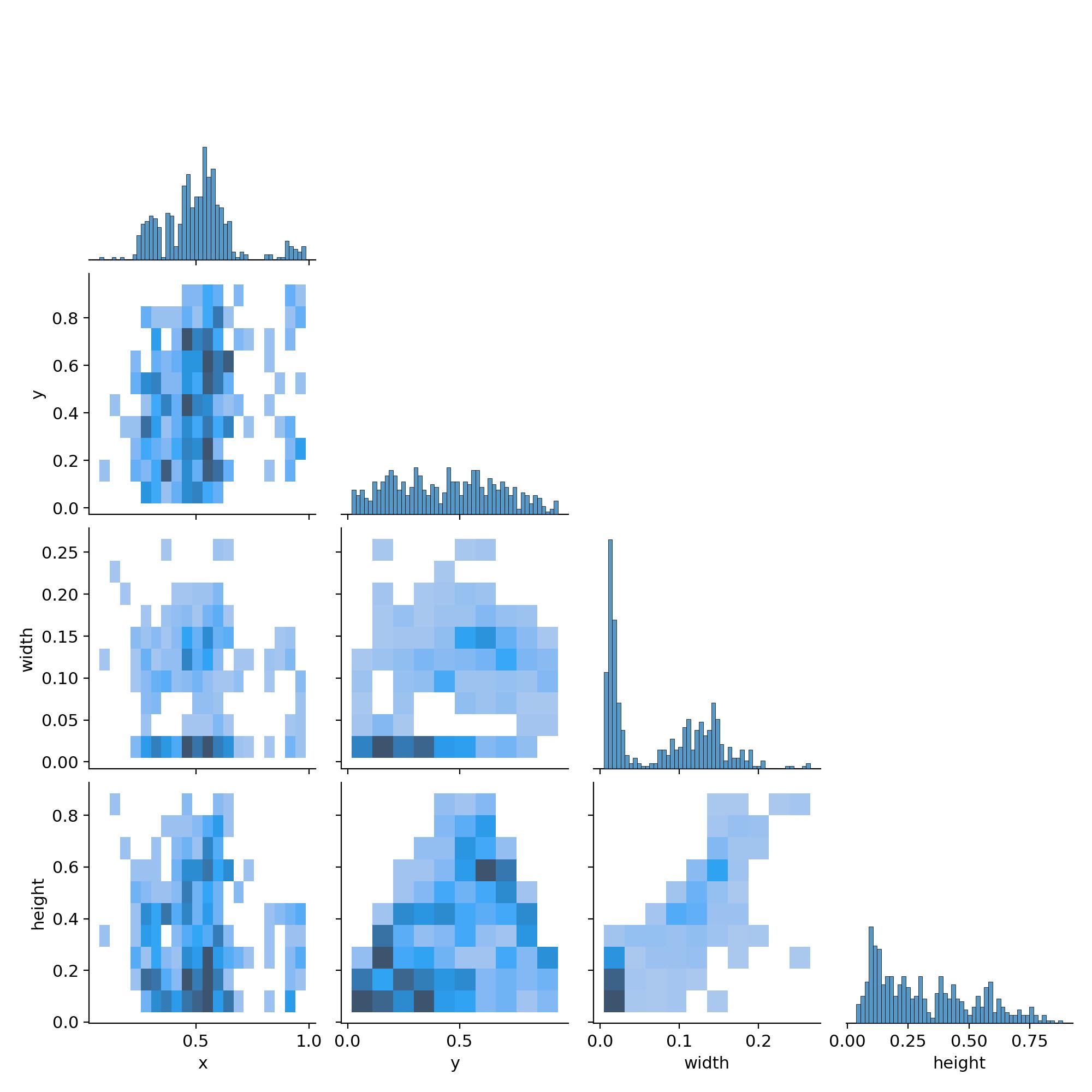

8.labels_correlogram.jpg

标签对应图展示了目标检测算法在训练过程中对标签之间相关性的建模情况。每个矩阵单元代表模型训练时使用的标签,而单元格的颜色深浅反映了对应标签之间的相关性。

- 深色单元格表示模型更强烈地学习了这两个标签之间的关联性。

- 浅色单元格则表示相关性较弱。

对角线上的颜色代表每个标签自身的相关性,通常是最深的,因为模型更容易学习标签与自身的关系。

可以直观识别到哪些标签之间存在较强的相关性,这对于优化训练和预测效果至关重要。如果发现某些标签之间的相关性过强,可能需要考虑合并它们,以简化模型并提高效率。最上面的图(0,0)至(3,3)分别表示中心点横坐标x、中心点纵坐标y、框的宽和框的高的分布情况。

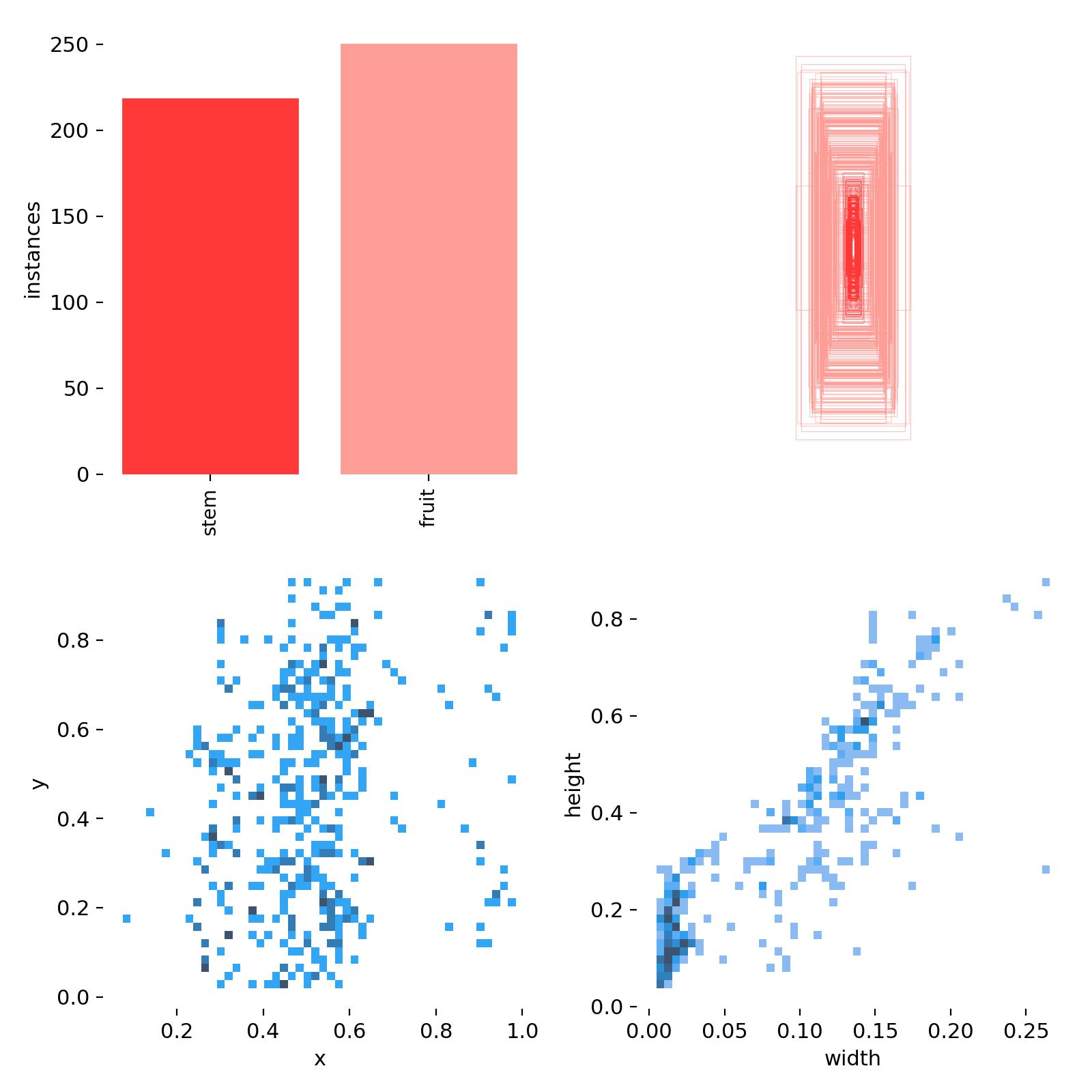

9.labels.jpg

将上面整张图从左往右按顺序排列:

- 左上角:训练集的数据量,显示每个类别包含的样本数量,每个类别有多少个。

- 右上角:框的尺寸和数量,展示了训练集中边界框的大小分布以及相应数量。

- 左下角:中心点相对于整幅图的位置,描述了边界框中心点在图像中的位置分布情况。

- 右下角:图中目标相对于整幅图的高宽比例,反映了训练集中目标高宽比例的分布状况。

10.MaskF1_curve.png

和 3.BoxF1_curve.png 一样,一个表示目标检测框,一个表示实例分割。

11.MaskP_curve.png

和 4.BoxP_curve.png 一样,一个表示目标检测框,一个表示实例分割。

12.MaskPR_curve.png

和 5.BoxPR_curve.png 一样,一个表示目标检测框,一个表示实例分割。

13.MaskR_curve.png

和 6.BoxR_curve.png 一样,一个表示目标检测框,一个表示实例分割。

14.results.csv

box_loss :用于监督检测框的回归,预测框与标定框之间的误差(CIoU)。

cls_loss :用于监督类别分类,计算锚框与对应的标定分类是否正确。

dfl_loss(Distribution Focal Loss):这个函数与GIOU loss一样,都是用来优化bbox的。

Precision:精度(找对的正类/所有找到的正类);

Recall:真实为positive的准确率;

Precision:精度(找对的正类/所有找到的正类);

Recall:真实为positive的准确率;

mAP50-95:表示在不同IoU阈值(从0.5到0.95,步长0.05)(0.5、0.55、0.6、0.65、0.7、0.75、0.8、0.85、0.9、0.95)上的平均mAP;

mAP50:表示在IoU阈值为0.5时的mAP值变化曲线

模型训练时每次迭代结果:

epoch, train/box_loss, train/seg_loss, train/cls_loss, train/dfl_loss, metrics/precision(B), metrics/recall(B), metrics/mAP50(B), metrics/mAP50-95(B), metrics/precision(M), metrics/recall(M), metrics/mAP50(M), metrics/mAP50-95(M), val/box_loss, val/seg_loss, val/cls_loss, val/dfl_loss, lr/pg0, lr/pg1, lr/pg2

1, 1.3905, 2.8952, 2.9987, 1.6217, 0.55828, 0.62831, 0.62205, 0.39159, 0.49441, 0.58197, 0.54595, 0.37887, 1.1457, 1.3463, 1.4268, 1.2844, 0.00051677, 0.00051677, 0.00051677

2, 0.9107, 1.5213, 1.393, 1.1263, 0.83275, 0.86066, 0.93174, 0.69089, 0.81145, 0.83607, 0.86218, 0.59954, 0.78845, 0.9602, 0.94093, 0.92841, 0.0010433, 0.0010433, 0.0010433

3, 0.90378, 1.3998, 1.127, 1.0615, 0.89906, 0.96702, 0.9265, 0.62451, 0.71517, 0.81967, 0.71468, 0.55867, 0.88574, 0.87691, 0.752, 0.93684, 0.0015627, 0.0015627, 0.0015627

4, 0.91934, 1.4125, 1.0465, 1.049, 0.97305, 0.93191, 0.9829, 0.74389, 0.86446, 0.82599, 0.83689, 0.58294, 0.79499, 1.0134, 0.81907, 0.90467, 0.001634, 0.001634, 0.001634

5, 0.91213, 1.3639, 0.97003, 1.0576, 0.93908, 0.97752, 0.99328, 0.78941, 0.86162, 0.90389, 0.93055, 0.69134, 0.64619, 0.85164, 0.70329, 0.87146, 0.001623, 0.001623, 0.001623

6, 0.8047, 1.2713, 0.7866, 1.0008, 0.98702, 0.96721, 0.99204, 0.81761, 0.88201, 0.86885, 0.90312, 0.68932, 0.62139, 0.79069, 0.5322, 0.86725, 0.001612, 0.001612, 0.001612

7, 0.80128, 1.1921, 0.70582, 0.98063, 0.99665, 0.95, 0.98813, 0.80992, 0.8636, 0.86687, 0.89915, 0.66123, 0.63634, 0.85926, 0.50215, 0.85787, 0.001601, 0.001601, 0.001601

8, 0.74747, 1.1747, 0.65377, 0.96134, 0.98316, 0.94908, 0.98352, 0.81673, 0.92059, 0.89322, 0.92994, 0.71352, 0.56024, 0.78355, 0.47911, 0.8475, 0.00159, 0.00159, 0.00159

9, 0.79645, 1.2018, 0.67284, 0.97947, 0.95937, 0.97541, 0.99053, 0.81369, 0.91282, 0.92623, 0.946, 0.73121, 0.54981, 0.80933, 0.46551, 0.85427, 0.001579, 0.001579, 0.001579

10, 0.76401, 1.2142, 0.64003, 0.98513, 0.9853, 0.95889, 0.99108, 0.8059, 0.91475, 0.88955, 0.92256, 0.69098, 0.60967, 0.81387, 0.46915, 0.85835, 0.001568, 0.001568, 0.001568

11, 0.73328, 1.1837, 0.57221, 0.95689, 0.96866, 0.96721, 0.9864, 0.8155, 0.83883, 0.84426, 0.87924, 0.68306, 0.56597, 0.83987, 0.42742, 0.85039, 0.001557, 0.001557, 0.001557

12, 0.75548, 1.1859, 0.57661, 0.9537, 0.97104, 0.97627, 0.9876, 0.82508, 0.87581, 0.90164, 0.91331, 0.7188, 0.55579, 0.77994, 0.40878, 0.84424, 0.001546, 0.001546, 0.001546

13, 0.71544, 1.1828, 0.58885, 0.95644, 0.98374, 0.94991, 0.98701, 0.84598, 0.93433, 0.89344, 0.92465, 0.68881, 0.54616, 0.78841, 0.40163, 0.84476, 0.001535, 0.001535, 0.001535

14, 0.66318, 1.1478, 0.52364, 0.92578, 0.98572, 0.98361, 0.99416, 0.84069, 0.95246, 0.95082, 0.96595, 0.72295, 0.5113, 0.74403, 0.39351, 0.83794, 0.001524, 0.001524, 0.001524

15, 0.66882, 1.04, 0.51066, 0.92058, 0.99517, 0.98361, 0.99394, 0.82933, 0.97799, 0.96721, 0.97769, 0.74009, 0.56943, 0.75888, 0.36879, 0.84087, 0.001513, 0.001513, 0.001513

16, 0.70082, 1.0505, 0.51578, 0.92955, 0.96941, 0.9918, 0.99224, 0.85182, 0.91267, 0.93443, 0.95528, 0.71269, 0.53596, 0.80003, 0.37164, 0.83449, 0.001502, 0.001502, 0.001502

17, 0.65994, 1.0546, 0.49957, 0.90034, 0.96934, 0.97541, 0.9905, 0.83685, 0.89195, 0.90984, 0.93168, 0.70042, 0.53431, 0.79292, 0.36666, 0.83415, 0.001491, 0.001491, 0.001491

18, 0.66491, 1.0503, 0.48127, 0.91513, 0.99486, 0.98995, 0.99455, 0.84758, 0.96138, 0.95726, 0.96539, 0.72202, 0.51517, 0.77033, 0.3487, 0.83365, 0.00148, 0.00148, 0.00148

19, 0.69192, 1.0705, 0.49377, 0.94406, 0.99476, 0.97264, 0.99359, 0.8408, 0.94188, 0.93443, 0.95094, 0.71782, 0.52468, 0.72809, 0.36064, 0.84283, 0.001469, 0.001469, 0.001469

20, 0.70958, 1.0839, 0.4956, 0.93258, 0.99107, 0.95902, 0.99187, 0.84039, 0.8727, 0.91371, 0.9165, 0.69934, 0.56161, 0.75109, 0.3653, 0.8364, 0.001458, 0.001458, 0.001458

21, 0.68955, 1.0831, 0.48774, 0.94272, 0.98423, 0.98338, 0.99349, 0.84155, 0.94243, 0.94262, 0.96133, 0.73147, 0.5121, 0.77284, 0.35173, 0.83371, 0.001447, 0.001447, 0.001447

22, 0.67123, 1.066, 0.48467, 0.94614, 0.96019, 0.98719, 0.99298, 0.82807, 0.89403, 0.93359, 0.95675, 0.74306, 0.55329, 0.77381, 0.38104, 0.83446, 0.001436, 0.001436, 0.001436

23, 0.63138, 1.0263, 0.47072, 0.92604, 0.97334, 0.98213, 0.9934, 0.83406, 0.9161, 0.88525, 0.93223, 0.70288, 0.54336, 0.79075, 0.37798, 0.83504, 0.001425, 0.001425, 0.001425

24, 0.65385, 1.0507, 0.48208, 0.91955, 0.99403, 0.95902, 0.9891, 0.78203, 0.8262, 0.91182, 0.88294, 0.65797, 0.58875, 0.84304, 0.37948, 0.83029, 0.0014139, 0.0014139, 0.0014139

25, 0.65294, 1.0199, 0.46565, 0.91978, 0.99589, 0.97614, 0.99289, 0.81189, 0.87534, 0.94935, 0.90957, 0.68186, 0.51303, 0.73105, 0.35875, 0.82708, 0.0014029, 0.0014029, 0.0014029

26, 0.63885, 0.99248, 0.45, 0.90291, 0.99294, 0.99066, 0.995, 0.82704, 0.92009, 0.93443, 0.93424, 0.66593, 0.55407, 0.73406, 0.37135, 0.83298, 0.0013919, 0.0013919, 0.0013919

27, 0.64142, 1.0386, 0.44193, 0.90204, 0.99376, 0.96577, 0.9933, 0.85427, 0.92335, 0.90026, 0.92861, 0.69094, 0.50707, 0.68302, 0.35305, 0.82332, 0.0013809, 0.0013809, 0.0013809

28, 0.64525, 1.0097, 0.44896, 0.91466, 0.99485, 0.99835, 0.995, 0.86411, 0.9343, 0.90164, 0.93188, 0.71963, 0.49406, 0.74977, 0.33998, 0.81652, 0.0013699, 0.0013699, 0.0013699

29, 0.62253, 1.0182, 0.46345, 0.90354, 0.97624, 0.95902, 0.98659, 0.83591, 0.96885, 0.95082, 0.98111, 0.7431, 0.52632, 0.73716, 0.34148, 0.81925, 0.0013589, 0.0013589, 0.0013589

30, 0.6416, 0.99652, 0.4452, 0.90639, 0.99113, 0.95902, 0.99008, 0.83772, 0.97272, 0.94262, 0.97428, 0.73416, 0.52307, 0.72786, 0.32913, 0.82895, 0.0013479, 0.0013479, 0.0013479

31, 0.6345, 0.97221, 0.4529, 0.92996, 0.9933, 0.99962, 0.995, 0.85576, 0.91053, 0.91803, 0.91953, 0.69794, 0.50718, 0.68386, 0.31531, 0.81852, 0.0013369, 0.0013369, 0.0013369

32, 0.65567, 1, 0.45369, 0.90019, 0.99223, 1, 0.995, 0.82994, 0.86919, 0.87705, 0.87362, 0.67755, 0.54355, 0.69775, 0.33486, 0.82024, 0.0013259, 0.0013259, 0.0013259

33, 0.63265, 0.94348, 0.43047, 0.88804, 0.98152, 1, 0.99476, 0.87235, 0.94919, 0.96721, 0.95105, 0.72719, 0.47511, 0.67439, 0.3025, 0.81888, 0.0013149, 0.0013149, 0.0013149

34, 0.59882, 0.92828, 0.42084, 0.88809, 0.98268, 1, 0.99476, 0.87533, 0.95042, 0.96721, 0.96726, 0.74082, 0.47751, 0.65686, 0.31657, 0.82256, 0.0013039, 0.0013039, 0.0013039

35, 0.59478, 0.89694, 0.41045, 0.88428, 0.99321, 0.98256, 0.99476, 0.85686, 0.876, 0.91742, 0.92081, 0.69197, 0.4861, 0.69288, 0.32578, 0.82049, 0.0012929, 0.0012929, 0.0012929

36, 0.58791, 0.89244, 0.38729, 0.88763, 0.99297, 0.98961, 0.99492, 0.88068, 0.9041, 0.92623, 0.93918, 0.72815, 0.47147, 0.69573, 0.31121, 0.82088, 0.0012819, 0.0012819, 0.0012819

37, 0.5711, 0.89621, 0.38578, 0.88447, 0.99306, 0.99039, 0.99484, 0.88711, 0.94182, 0.91674, 0.95402, 0.72715, 0.45697, 0.68214, 0.29949, 0.82095, 0.0012709, 0.0012709, 0.0012709

38, 0.56485, 0.8913, 0.381, 0.8698, 0.99009, 0.97541, 0.99413, 0.88756, 0.92139, 0.94509, 0.95941, 0.73811, 0.45795, 0.62164, 0.31032, 0.82125, 0.0012599, 0.0012599, 0.0012599

39, 0.60508, 0.95977, 0.40415, 0.8964, 0.99239, 0.95902, 0.98942, 0.86842, 0.88171, 0.93443, 0.93226, 0.74746, 0.47476, 0.61886, 0.32513, 0.81709, 0.0012489, 0.0012489, 0.0012489

40, 0.584, 0.88982, 0.39122, 0.88901, 0.99444, 0.98361, 0.99453, 0.878, 0.89923, 0.92798, 0.91733, 0.76257, 0.47239, 0.62578, 0.3035, 0.81051, 0.0012379, 0.0012379, 0.0012379

41, 0.59486, 0.90701, 0.40209, 0.9015, 0.99336, 0.99987, 0.995, 0.87072, 0.92753, 0.93443, 0.91931, 0.74505, 0.47131, 0.62923, 0.29953, 0.8165, 0.0012269, 0.0012269, 0.0012269

42, 0.57981, 0.89605, 0.3884, 0.89331, 0.99347, 0.99895, 0.995, 0.87756, 0.91132, 0.91736, 0.92355, 0.72736, 0.49157, 0.65136, 0.30576, 0.82455, 0.0012159, 0.0012159, 0.0012159

43, 0.56892, 0.90118, 0.39688, 0.89113, 0.9937, 0.99883, 0.995, 0.87339, 0.93617, 0.94166, 0.94459, 0.73014, 0.44668, 0.67604, 0.28614, 0.81505, 0.0012049, 0.0012049, 0.0012049

44, 0.5682, 0.89289, 0.38081, 0.87862, 0.99412, 0.9918, 0.99492, 0.87786, 0.9357, 0.93443, 0.95198, 0.74688, 0.46909, 0.66507, 0.28843, 0.81864, 0.0011939, 0.0011939, 0.0011939

45, 0.56932, 0.89318, 0.38795, 0.88281, 0.99642, 0.974, 0.99406, 0.84824, 0.89908, 0.92497, 0.93357, 0.71659, 0.53374, 0.66138, 0.31442, 0.82559, 0.0011829, 0.0011829, 0.0011829

46, 0.57828, 0.92268, 0.38964, 0.89365, 0.99745, 0.97333, 0.99406, 0.87028, 0.89796, 0.92623, 0.92724, 0.71643, 0.49014, 0.70499, 0.30997, 0.81752, 0.0011719, 0.0011719, 0.0011719

47, 0.5741, 0.87585, 0.37728, 0.87917, 0.9849, 1, 0.99468, 0.88217, 0.96865, 0.98361, 0.98213, 0.75796, 0.43867, 0.65592, 0.29864, 0.81128, 0.0011609, 0.0011609, 0.0011609

48, 0.53781, 0.87689, 0.37193, 0.85733, 0.99553, 0.99078, 0.99492, 0.88782, 0.95376, 0.95021, 0.95483, 0.7604, 0.44237, 0.61751, 0.27544, 0.80777, 0.0011499, 0.0011499, 0.0011499

49, 0.56544, 0.88507, 0.37652, 0.88556, 0.9956, 0.9918, 0.99492, 0.8993, 0.96231, 0.95902, 0.97815, 0.76927, 0.43114, 0.60045, 0.28439, 0.8094, 0.0011389, 0.0011389, 0.0011389

50, 0.56133, 0.87988, 0.3835, 0.87392, 0.99379, 0.9918, 0.99492, 0.88764, 0.92128, 0.93299, 0.92684, 0.73812, 0.44163, 0.69348, 0.28845, 0.81137, 0.0011279, 0.0011279, 0.0011279

51, 0.57344, 0.88227, 0.37909, 0.89454, 0.99314, 0.99984, 0.995, 0.87671, 0.91886, 0.91715, 0.91912, 0.72323, 0.44843, 0.61987, 0.2908, 0.81266, 0.0011169, 0.0011169, 0.0011169

52, 0.54244, 0.85103, 0.36518, 0.86738, 0.97691, 0.99142, 0.99437, 0.87566, 0.94409, 0.91504, 0.93341, 0.75412, 0.43571, 0.64451, 0.28852, 0.81201, 0.0011059, 0.0011059, 0.0011059

53, 0.56126, 0.87236, 0.38151, 0.8677, 0.98875, 0.96686, 0.99329, 0.86631, 0.91847, 0.90164, 0.90572, 0.72555, 0.47136, 0.69786, 0.29761, 0.8177, 0.0010949, 0.0010949, 0.0010949

54, 0.55352, 0.85783, 0.3635, 0.88096, 0.98545, 0.98223, 0.99388, 0.86044, 0.91661, 0.90164, 0.88706, 0.70082, 0.45083, 0.6695, 0.29178, 0.81506, 0.0010839, 0.0010839, 0.0010839

55, 0.5364, 0.82946, 0.36068, 0.85155, 0.99513, 0.96721, 0.99328, 0.85057, 0.91612, 0.89344, 0.86848, 0.68093, 0.47906, 0.63858, 0.30619, 0.81837, 0.0010729, 0.0010729, 0.0010729

56, 0.55444, 0.87824, 0.3562, 0.87422, 0.98391, 0.99587, 0.99452, 0.888, 0.90242, 0.91196, 0.86881, 0.6718, 0.41929, 0.65002, 0.27808, 0.80868, 0.0010619, 0.0010619, 0.0010619

57, 0.57167, 0.84159, 0.36067, 0.87627, 0.9833, 0.99609, 0.99468, 0.87656, 0.91015, 0.92232, 0.90001, 0.6944, 0.466, 0.66246, 0.29777, 0.81034, 0.0010509, 0.0010509, 0.0010509

58, 0.54235, 0.85904, 0.36132, 0.86228, 0.98488, 0.98095, 0.99376, 0.87682, 0.92529, 0.90164, 0.91184, 0.69069, 0.41977, 0.74609, 0.28723, 0.80793, 0.0010399, 0.0010399, 0.0010399

59, 0.53129, 0.84959, 0.36349, 0.88032, 0.98645, 0.9918, 0.9946, 0.85753, 0.90792, 0.88525, 0.89047, 0.67611, 0.43915, 0.70887, 0.29537, 0.80498, 0.0010289, 0.0010289, 0.0010289

60, 0.52715, 0.81784, 0.3561, 0.86037, 0.99519, 0.97407, 0.99391, 0.85748, 0.8996, 0.87705, 0.90202, 0.68531, 0.46921, 0.69393, 0.29628, 0.80508, 0.0010179, 0.0010179, 0.0010179

61, 0.54388, 0.82199, 0.36753, 0.87818, 0.99324, 0.99817, 0.995, 0.87103, 0.90277, 0.90847, 0.90409, 0.70172, 0.44887, 0.66793, 0.28583, 0.80495, 0.0010069, 0.0010069, 0.0010069

62, 0.50407, 0.80333, 0.35046, 0.86303, 0.99377, 0.99771, 0.995, 0.87858, 0.9344, 0.95082, 0.94252, 0.73622, 0.45014, 0.65972, 0.2867, 0.80574, 0.00099587, 0.00099587, 0.00099587

63, 0.52693, 0.80241, 0.36421, 0.88089, 0.99164, 1, 0.995, 0.88904, 0.9201, 0.93443, 0.94033, 0.73032, 0.42418, 0.66698, 0.28158, 0.80794, 0.00098486, 0.00098486, 0.00098486

64, 0.50586, 0.80266, 0.35546, 0.8636, 0.99215, 1, 0.995, 0.89973, 0.95119, 0.95902, 0.96786, 0.76493, 0.42018, 0.63028, 0.28316, 0.81096, 0.00097386, 0.00097386, 0.00097386

65, 0.52634, 0.84481, 0.36214, 0.87209, 0.99232, 1, 0.995, 0.89434, 0.96099, 0.94951, 0.95941, 0.77711, 0.43037, 0.61703, 0.28001, 0.81159, 0.00096286, 0.00096286, 0.00096286

66, 0.53054, 0.822, 0.34754, 0.86991, 0.97807, 0.9918, 0.9946, 0.90126, 0.94289, 0.92623, 0.95152, 0.76651, 0.41591, 0.62948, 0.27975, 0.80397, 0.00095186, 0.00095186, 0.00095186

67, 0.52418, 0.85647, 0.34315, 0.86191, 0.99217, 0.99269, 0.995, 0.8999, 0.93463, 0.91803, 0.93287, 0.73357, 0.39979, 0.60939, 0.27347, 0.80086, 0.00094085, 0.00094085, 0.00094085

68, 0.53324, 0.82976, 0.35568, 0.86644, 0.99239, 0.98335, 0.99492, 0.90327, 0.95028, 0.94165, 0.95332, 0.75478, 0.4085, 0.62378, 0.27542, 0.80293, 0.00092985, 0.00092985, 0.00092985

69, 0.53115, 0.75997, 0.34781, 0.86763, 0.9841, 0.99483, 0.99468, 0.88702, 0.95213, 0.93443, 0.94841, 0.76229, 0.43461, 0.60293, 0.27994, 0.80518, 0.00091885, 0.00091885, 0.00091885

70, 0.526, 0.82016, 0.34888, 0.86263, 0.9934, 0.98281, 0.99476, 0.87986, 0.94272, 0.92571, 0.93836, 0.74529, 0.45097, 0.58342, 0.2931, 0.81038, 0.00090785, 0.00090785, 0.00090785

71, 0.53749, 0.83496, 0.35846, 0.86971, 0.99206, 0.98931, 0.995, 0.86993, 0.94183, 0.94055, 0.94918, 0.72368, 0.47205, 0.60356, 0.29092, 0.80785, 0.00089685, 0.00089685, 0.00089685

72, 0.54631, 0.7863, 0.35471, 0.8646, 0.98988, 1, 0.995, 0.87302, 0.9156, 0.92623, 0.92335, 0.72737, 0.45951, 0.61332, 0.2946, 0.80229, 0.00088584, 0.00088584, 0.00088584

73, 0.53411, 0.78431, 0.36218, 0.86938, 0.98489, 0.99509, 0.99492, 0.88537, 0.94284, 0.93626, 0.93957, 0.71939, 0.42682, 0.63563, 0.27247, 0.80249, 0.00087484, 0.00087484, 0.00087484

74, 0.51131, 0.75663, 0.34802, 0.86084, 0.9915, 0.99168, 0.99492, 0.89063, 0.96334, 0.95902, 0.96788, 0.74337, 0.4172, 0.66825, 0.27049, 0.80375, 0.00086384, 0.00086384, 0.00086384

75, 0.49274, 0.75514, 0.32385, 0.8434, 0.99351, 0.99852, 0.995, 0.90733, 0.95238, 0.95774, 0.95248, 0.75094, 0.39467, 0.61963, 0.25691, 0.80182, 0.00085284, 0.00085284, 0.00085284

76, 0.5105, 0.76651, 0.33562, 0.86911, 0.99277, 0.99871, 0.995, 0.9017, 0.92701, 0.93337, 0.93248, 0.73305, 0.40061, 0.59443, 0.25792, 0.80534, 0.00084184, 0.00084184, 0.00084184

77, 0.48464, 0.78173, 0.32266, 0.85218, 0.99376, 0.99036, 0.99492, 0.8981, 0.95083, 0.94262, 0.95504, 0.75764, 0.39427, 0.59708, 0.25147, 0.80274, 0.00083083, 0.00083083, 0.00083083

78, 0.48016, 0.74713, 0.31543, 0.84968, 0.99321, 0.98361, 0.99468, 0.90371, 0.94432, 0.94993, 0.95114, 0.75101, 0.38557, 0.60096, 0.24419, 0.79802, 0.00081983, 0.00081983, 0.00081983

79, 0.49464, 0.7429, 0.31877, 0.85275, 0.98495, 0.99058, 0.99468, 0.88972, 0.94387, 0.95002, 0.95663, 0.74389, 0.42732, 0.5955, 0.25661, 0.79905, 0.00080883, 0.00080883, 0.00080883

80, 0.49703, 0.76088, 0.33324, 0.85951, 0.9934, 0.98992, 0.99492, 0.89206, 0.95993, 0.95724, 0.96689, 0.73808, 0.43981, 0.59671, 0.26221, 0.80057, 0.00079783, 0.00079783, 0.00079783

81, 0.50835, 0.73724, 0.32828, 0.86083, 0.99331, 0.98924, 0.99492, 0.89331, 0.9598, 0.95651, 0.96941, 0.745, 0.43375, 0.58971, 0.2587, 0.80172, 0.00078682, 0.00078682, 0.00078682

82, 0.51318, 0.76387, 0.33139, 0.86378, 0.99167, 0.9918, 0.99492, 0.88483, 0.94865, 0.94262, 0.95668, 0.75753, 0.42563, 0.59294, 0.26377, 0.79974, 0.00077582, 0.00077582, 0.00077582

83, 0.51419, 0.81424, 0.33818, 0.87987, 0.98747, 0.9918, 0.99492, 0.88806, 0.92505, 0.94661, 0.94107, 0.7356, 0.42184, 0.62775, 0.26518, 0.79773, 0.00076482, 0.00076482, 0.00076482

84, 0.50549, 0.78554, 0.32831, 0.86063, 0.99334, 0.99824, 0.995, 0.90001, 0.92753, 0.93309, 0.923, 0.70456, 0.39291, 0.61839, 0.25388, 0.79387, 0.00075382, 0.00075382, 0.00075382

85, 0.4813, 0.76696, 0.33029, 0.84741, 0.99378, 0.99987, 0.995, 0.9079, 0.93619, 0.94262, 0.93837, 0.70622, 0.39948, 0.61958, 0.25202, 0.79552, 0.00074282, 0.00074282, 0.00074282

86, 0.47927, 0.74144, 0.31899, 0.85054, 0.99358, 1, 0.995, 0.90599, 0.92789, 0.93443, 0.92893, 0.71721, 0.39957, 0.6344, 0.25058, 0.79669, 0.00073181, 0.00073181, 0.00073181

87, 0.45797, 0.71496, 0.30763, 0.84343, 0.99289, 1, 0.995, 0.91198, 0.91926, 0.92623, 0.9221, 0.7322, 0.38337, 0.63706, 0.24917, 0.79772, 0.00072081, 0.00072081, 0.00072081

88, 0.47121, 0.73585, 0.31173, 0.84161, 0.98376, 1, 0.99468, 0.90451, 0.91132, 0.92623, 0.9232, 0.72954, 0.38732, 0.61386, 0.25398, 0.79965, 0.00070981, 0.00070981, 0.00070981

89, 0.48209, 0.76384, 0.31623, 0.85552, 0.98247, 1, 0.99468, 0.90055, 0.90207, 0.91803, 0.91434, 0.72889, 0.39506, 0.61794, 0.25017, 0.80414, 0.00069881, 0.00069881, 0.00069881

90, 0.48072, 0.85716, 0.31611, 0.86938, 0.99277, 1, 0.995, 0.91273, 0.95161, 0.95902, 0.95462, 0.76972, 0.37302, 0.60709, 0.23653, 0.80102, 0.0006878, 0.0006878, 0.0006878

91, 0.44969, 0.69409, 0.29463, 0.83908, 0.99368, 0.9998, 0.995, 0.90846, 0.95264, 0.95902, 0.96004, 0.77333, 0.37746, 0.62963, 0.23484, 0.79897, 0.0006768, 0.0006768, 0.0006768

92, 0.45362, 0.7412, 0.3009, 0.84783, 0.9942, 1, 0.995, 0.91573, 0.9448, 0.95082, 0.94998, 0.75041, 0.37572, 0.64233, 0.22932, 0.79888, 0.0006658, 0.0006658, 0.0006658

93, 0.46973, 0.75954, 0.30756, 0.84612, 0.99455, 1, 0.995, 0.92145, 0.95355, 0.95902, 0.95132, 0.76764, 0.37958, 0.60757, 0.23618, 0.79814, 0.0006548, 0.0006548, 0.0006548

94, 0.45368, 0.72953, 0.30207, 0.84845, 0.99388, 1, 0.995, 0.92263, 0.96929, 0.97541, 0.97241, 0.77965, 0.37618, 0.59154, 0.23763, 0.79689, 0.0006438, 0.0006438, 0.0006438

95, 0.45438, 0.79169, 0.29796, 0.84332, 0.99253, 1, 0.995, 0.92885, 0.95149, 0.95902, 0.95447, 0.75137, 0.36245, 0.58836, 0.2362, 0.79621, 0.00063279, 0.00063279, 0.00063279

96, 0.45539, 0.72519, 0.29444, 0.83772, 0.99275, 1, 0.995, 0.92858, 0.97634, 0.98361, 0.98094, 0.76541, 0.35058, 0.58058, 0.22581, 0.79501, 0.00062179, 0.00062179, 0.00062179

97, 0.46532, 0.71952, 0.30706, 0.84346, 0.99321, 1, 0.995, 0.92183, 0.96868, 0.97541, 0.97447, 0.77103, 0.36454, 0.57825, 0.22817, 0.79503, 0.00061079, 0.00061079, 0.00061079

98, 0.4634, 0.75797, 0.30198, 0.84253, 0.99349, 1, 0.995, 0.92373, 0.94434, 0.95082, 0.95062, 0.72778, 0.3825, 0.5839, 0.23593, 0.79903, 0.00059979, 0.00059979, 0.00059979

99, 0.45978, 0.73694, 0.2911, 0.83786, 0.99258, 1, 0.995, 0.92003, 0.95161, 0.95902, 0.96076, 0.72288, 0.38636, 0.60784, 0.23819, 0.79789, 0.00058878, 0.00058878, 0.00058878

100, 0.45982, 0.72692, 0.30612, 0.84651, 0.99265, 1, 0.995, 0.92714, 0.93509, 0.94262, 0.94487, 0.74094, 0.35984, 0.60772, 0.22535, 0.79389, 0.00057778, 0.00057778, 0.00057778

101, 0.45487, 0.73902, 0.29993, 0.84944, 0.99223, 1, 0.995, 0.92287, 0.95942, 0.96721, 0.9668, 0.76055, 0.35657, 0.58958, 0.22793, 0.79327, 0.00056678, 0.00056678, 0.00056678

102, 0.45561, 0.70695, 0.29775, 0.83618, 0.99295, 0.99944, 0.995, 0.91794, 0.9601, 0.96679, 0.96243, 0.75928, 0.35718, 0.61387, 0.23115, 0.79325, 0.00055578, 0.00055578, 0.00055578

103, 0.44562, 0.71273, 0.28594, 0.84192, 0.99309, 0.99937, 0.995, 0.92489, 0.96983, 0.96679, 0.96654, 0.74462, 0.35999, 0.58504, 0.23084, 0.79395, 0.00054478, 0.00054478, 0.00054478

104, 0.44344, 0.74941, 0.28662, 0.8279, 0.99414, 0.99951, 0.995, 0.92108, 0.96951, 0.97499, 0.97144, 0.77039, 0.36643, 0.58723, 0.23532, 0.7955, 0.00053377, 0.00053377, 0.00053377

105, 0.45057, 0.70289, 0.29097, 0.835, 0.99211, 1, 0.995, 0.91511, 0.96762, 0.97541, 0.97061, 0.76966, 0.3857, 0.64677, 0.2301, 0.79367, 0.00052277, 0.00052277, 0.00052277

106, 0.45902, 0.70389, 0.2992, 0.85038, 0.99161, 1, 0.995, 0.91969, 0.9589, 0.96721, 0.95966, 0.75002, 0.36394, 0.65928, 0.22896, 0.79132, 0.00051177, 0.00051177, 0.00051177

107, 0.44914, 0.70843, 0.292, 0.83229, 0.99364, 0.99823, 0.995, 0.91925, 0.9525, 0.95729, 0.9523, 0.74376, 0.3555, 0.64319, 0.23057, 0.79206, 0.00050077, 0.00050077, 0.00050077

108, 0.43956, 0.68764, 0.29229, 0.83512, 0.99416, 0.991, 0.99492, 0.92371, 0.92736, 0.92579, 0.92573, 0.73184, 0.34942, 0.65154, 0.23502, 0.79123, 0.00048976, 0.00048976, 0.00048976

109, 0.41627, 0.69546, 0.27477, 0.83016, 0.99291, 0.99877, 0.995, 0.92195, 0.92719, 0.9338, 0.92586, 0.71505, 0.34907, 0.66716, 0.22683, 0.78991, 0.00047876, 0.00047876, 0.00047876

110, 0.43757, 0.6661, 0.28918, 0.84288, 0.99301, 1, 0.995, 0.91401, 0.94358, 0.95082, 0.94563, 0.73865, 0.37311, 0.6476, 0.22238, 0.79185, 0.00046776, 0.00046776, 0.00046776

111, 0.42813, 0.74211, 0.28437, 0.83242, 0.99371, 1, 0.995, 0.91166, 0.92804, 0.93443, 0.93153, 0.72562, 0.38971, 0.59336, 0.22717, 0.79306, 0.00045676, 0.00045676, 0.00045676

112, 0.42815, 0.662, 0.27297, 0.82636, 0.99351, 1, 0.995, 0.92133, 0.96075, 0.96721, 0.96757, 0.76473, 0.35413, 0.57381, 0.2192, 0.79268, 0.00044576, 0.00044576, 0.00044576

113, 0.41605, 0.69908, 0.27479, 0.83956, 0.99269, 1, 0.995, 0.92058, 0.91059, 0.91803, 0.9254, 0.73206, 0.36123, 0.5735, 0.21968, 0.79245, 0.00043475, 0.00043475, 0.00043475

114, 0.41612, 0.70673, 0.27907, 0.8237, 0.99229, 1, 0.995, 0.91269, 0.91037, 0.91803, 0.92957, 0.73497, 0.37372, 0.56592, 0.21701, 0.79105, 0.00042375, 0.00042375, 0.00042375

115, 0.41127, 0.70335, 0.2723, 0.82646, 0.99333, 1, 0.995, 0.92246, 0.92783, 0.93443, 0.94217, 0.73893, 0.36762, 0.55255, 0.21824, 0.79213, 0.00041275, 0.00041275, 0.00041275

116, 0.40918, 0.65935, 0.2727, 0.83261, 0.99449, 1, 0.995, 0.92589, 0.95346, 0.95902, 0.9623, 0.75523, 0.35108, 0.54882, 0.21409, 0.79289, 0.00040175, 0.00040175, 0.00040175

117, 0.41562, 0.70598, 0.28245, 0.82885, 0.99461, 1, 0.995, 0.92908, 0.95358, 0.95902, 0.96138, 0.76171, 0.34242, 0.55364, 0.21487, 0.79117, 0.00039074, 0.00039074, 0.00039074

118, 0.41745, 0.68207, 0.27904, 0.83769, 0.99385, 1, 0.995, 0.91931, 0.95287, 0.95902, 0.95887, 0.75064, 0.33583, 0.55208, 0.21822, 0.78967, 0.00037974, 0.00037974, 0.00037974

119, 0.41603, 0.70187, 0.27842, 0.83059, 0.9926, 1, 0.995, 0.92081, 0.9435, 0.95082, 0.95046, 0.75362, 0.33905, 0.54759, 0.21387, 0.78944, 0.00036874, 0.00036874, 0.00036874

120, 0.40378, 0.68643, 0.26398, 0.83051, 0.99193, 1, 0.995, 0.93043, 0.9429, 0.95082, 0.9521, 0.76182, 0.32203, 0.54068, 0.21858, 0.78842, 0.00035774, 0.00035774, 0.00035774

121, 0.41997, 0.66423, 0.28015, 0.8344, 0.99191, 1, 0.995, 0.933, 0.95922, 0.96721, 0.96795, 0.77824, 0.33187, 0.54511, 0.2298, 0.79038, 0.00034674, 0.00034674, 0.00034674

122, 0.41775, 0.66789, 0.27401, 0.82077, 0.99259, 1, 0.995, 0.93408, 0.9616, 0.95003, 0.96194, 0.77414, 0.34596, 0.54746, 0.22545, 0.7913, 0.00033573, 0.00033573, 0.00033573

123, 0.40407, 0.68235, 0.26019, 0.83061, 0.99328, 1, 0.995, 0.93783, 0.95222, 0.95902, 0.95434, 0.7696, 0.33434, 0.54152, 0.21774, 0.79008, 0.00032473, 0.00032473, 0.00032473

124, 0.40653, 0.66737, 0.2669, 0.82085, 0.99297, 0.99983, 0.995, 0.9237, 0.93549, 0.94262, 0.93727, 0.75481, 0.33936, 0.54897, 0.21549, 0.78919, 0.00031373, 0.00031373, 0.00031373

125, 0.40439, 0.669, 0.26104, 0.82496, 0.99226, 0.99888, 0.995, 0.91523, 0.93473, 0.9416, 0.93943, 0.74624, 0.34282, 0.56006, 0.21817, 0.7887, 0.00030273, 0.00030273, 0.00030273

126, 0.40482, 0.66549, 0.26258, 0.834, 0.99171, 0.99827, 0.995, 0.91652, 0.94218, 0.94925, 0.95096, 0.75169, 0.33964, 0.56686, 0.21283, 0.78855, 0.00029172, 0.00029172, 0.00029172

127, 0.42567, 0.69893, 0.27031, 0.84044, 0.99086, 0.99824, 0.995, 0.9219, 0.94136, 0.94924, 0.95395, 0.75408, 0.33562, 0.56239, 0.21541, 0.7878, 0.00028072, 0.00028072, 0.00028072

128, 0.39795, 0.6567, 0.26584, 0.81067, 0.99195, 0.99905, 0.995, 0.91935, 0.92615, 0.93372, 0.93978, 0.77321, 0.34061, 0.55892, 0.21622, 0.78728, 0.00026972, 0.00026972, 0.00026972

129, 0.4239, 0.68173, 0.27368, 0.83477, 0.9921, 0.99942, 0.995, 0.93027, 0.92643, 0.93412, 0.94282, 0.77579, 0.32603, 0.54331, 0.20922, 0.78643, 0.00025872, 0.00025872, 0.00025872

130, 0.39117, 0.68029, 0.26017, 0.8203, 0.99238, 1, 0.995, 0.93462, 0.91841, 0.92623, 0.93294, 0.75863, 0.31398, 0.53387, 0.20246, 0.78635, 0.00024772, 0.00024772, 0.00024772

131, 0.3795, 0.65045, 0.25729, 0.83237, 0.99282, 1, 0.995, 0.93766, 0.93526, 0.94262, 0.94503, 0.77142, 0.30989, 0.52892, 0.20046, 0.78633, 0.00023671, 0.00023671, 0.00023671

132, 0.39827, 0.6868, 0.26045, 0.82531, 0.99311, 1, 0.995, 0.93918, 0.93556, 0.94262, 0.94958, 0.78757, 0.31754, 0.52856, 0.19939, 0.78713, 0.00022571, 0.00022571, 0.00022571

133, 0.38967, 0.62062, 0.25842, 0.82234, 0.99318, 0.99979, 0.995, 0.93772, 0.95216, 0.95899, 0.96231, 0.78373, 0.31538, 0.52554, 0.19806, 0.78744, 0.00021471, 0.00021471, 0.00021471

134, 0.38896, 0.6629, 0.2565, 0.82739, 0.99359, 0.99975, 0.995, 0.94179, 0.96076, 0.96708, 0.96812, 0.79162, 0.31534, 0.52828, 0.19648, 0.78677, 0.00020371, 0.00020371, 0.00020371

135, 0.39411, 0.63873, 0.26164, 0.81631, 0.99389, 0.99979, 0.995, 0.93927, 0.96107, 0.96713, 0.96568, 0.77388, 0.31173, 0.5314, 0.19761, 0.78708, 0.00019271, 0.00019271, 0.00019271

136, 0.3866, 0.66188, 0.26229, 0.82533, 0.99437, 0.99997, 0.995, 0.93719, 0.96151, 0.96721, 0.96451, 0.7705, 0.304, 0.53313, 0.19509, 0.78607, 0.0001817, 0.0001817, 0.0001817

137, 0.39051, 0.6547, 0.25469, 0.83435, 0.99439, 0.99987, 0.995, 0.93557, 0.95334, 0.95902, 0.95675, 0.77016, 0.29923, 0.53446, 0.19502, 0.78622, 0.0001707, 0.0001707, 0.0001707

138, 0.38438, 0.63143, 0.25649, 0.83129, 0.99435, 1, 0.995, 0.93747, 0.9368, 0.94262, 0.94036, 0.7574, 0.29801, 0.5346, 0.19163, 0.78596, 0.0001597, 0.0001597, 0.0001597

139, 0.36798, 0.62587, 0.24103, 0.83531, 0.9943, 0.99995, 0.995, 0.93688, 0.93675, 0.94262, 0.94101, 0.76059, 0.29363, 0.53466, 0.19086, 0.78513, 0.0001487, 0.0001487, 0.0001487

140, 0.38333, 0.66509, 0.25159, 0.82811, 0.99407, 0.99998, 0.995, 0.93722, 0.94473, 0.95082, 0.94877, 0.7574, 0.29267, 0.53047, 0.18981, 0.78471, 0.00013769, 0.00013769, 0.00013769

141, 0.3702, 0.71226, 0.24245, 0.80544, 0.99315, 0.99948, 0.995, 0.93565, 0.94389, 0.95051, 0.94732, 0.75533, 0.29669, 0.53, 0.19356, 0.78569, 0.00012669, 0.00012669, 0.00012669

142, 0.36059, 0.64947, 0.23873, 0.79385, 0.99275, 0.99944, 0.995, 0.93843, 0.9599, 0.96679, 0.96131, 0.76143, 0.29868, 0.52674, 0.19178, 0.78617, 0.00011569, 0.00011569, 0.00011569

143, 0.35999, 0.65547, 0.24062, 0.7918, 0.99298, 0.99972, 0.995, 0.93664, 0.96014, 0.96714, 0.96102, 0.76989, 0.30173, 0.52395, 0.19073, 0.78663, 0.00010469, 0.00010469, 0.00010469

144, 0.37324, 0.70191, 0.2354, 0.81332, 0.99352, 0.99997, 0.995, 0.93601, 0.95235, 0.95902, 0.95198, 0.76974, 0.30419, 0.52123, 0.19405, 0.78663, 9.3685e-05, 9.3685e-05, 9.3685e-05

145, 0.38671, 0.63598, 0.24397, 0.80163, 0.99346, 1, 0.995, 0.93918, 0.95232, 0.95902, 0.9527, 0.77035, 0.30159, 0.51605, 0.1936, 0.78633, 8.2683e-05, 8.2683e-05, 8.2683e-05

146, 0.34948, 0.63909, 0.23791, 0.80419, 0.99316, 1, 0.995, 0.93714, 0.94383, 0.95082, 0.94306, 0.76542, 0.29906, 0.51237, 0.19375, 0.786, 7.1681e-05, 7.1681e-05, 7.1681e-05

147, 0.35161, 0.65631, 0.23166, 0.79369, 0.99341, 1, 0.995, 0.93773, 0.95225, 0.95902, 0.95252, 0.75927, 0.29859, 0.51517, 0.19602, 0.78593, 6.0679e-05, 6.0679e-05, 6.0679e-05

148, 0.35401, 0.6422, 0.23434, 0.80021, 0.99352, 1, 0.995, 0.93492, 0.94412, 0.95082, 0.94552, 0.75397, 0.30047, 0.52069, 0.19621, 0.78553, 4.9677e-05, 4.9677e-05, 4.9677e-05

149, 0.33434, 0.62328, 0.23624, 0.79421, 0.99378, 0.99975, 0.995, 0.9348, 0.94454, 0.95082, 0.94595, 0.75298, 0.30142, 0.52289, 0.19363, 0.78548, 3.8674e-05, 3.8674e-05, 3.8674e-05

150, 0.34916, 0.63646, 0.22777, 0.79437, 0.99385, 0.99975, 0.995, 0.93086, 0.94461, 0.95082, 0.94613, 0.75062, 0.29889, 0.52373, 0.19261, 0.78553, 2.7672e-05, 2.7672e-05, 2.7672e-05

15.results.png

损失函数在目标检测任务中扮演关键角色,它用于衡量模型的预测值与真实值之间的差异,直接影响模型性能。以下是一些与目标检测相关的损失函数和性能评价指标的解释:

-

定位损失(box_loss):

定义: 衡量预测框与标注框之间的误差,通常使用 GIoU(Generalized Intersection over Union)来度量,其值越小表示定位越准确。

目的: 通过最小化定位损失,使模型能够准确地定位目标。 -

置信度损失(obj_loss):

定义: 计算网络对目标的置信度,通常使用二元交叉熵损失函数,其值越小表示模型判断目标的能力越准确。

目的: 通过最小化置信度损失,使模型能够准确判断目标是否存在。 -

分类损失(cls_loss):

定义: 计算锚框对应的分类是否正确,通常使用交叉熵损失函数,其值越小表示分类越准确。

目的: 通过最小化分类损失,使模型能够准确分类目标。 -

Precision(精度):

定义: 正确预测为正类别的样本数量占所有预测为正类别的样本数量的比例。

目的: 衡量模型在所有预测为正例的样本中有多少是正确的。 -

Recall(召回率):

定义: 正确预测为正类别的样本数量占所有真实正类别的样本数量的比例。

目的: 衡量模型能够找出真实正例的能力。 -

mAP(平均精度):

定义: 使用 Precision-Recall 曲线计算的面积,mAP50-95 表示在不同 IoU 阈值下的平均 mAP。

目的: 综合考虑了模型在不同精度和召回率条件下的性能,是目标检测任务中常用的评价指标。

在训练过程中,通常需要关注精度和召回率的波动情况,以及 mAP50 和 mAP50-95评估训练结果。这些指标可以提供关于模型性能和泛化能力的有用信息。

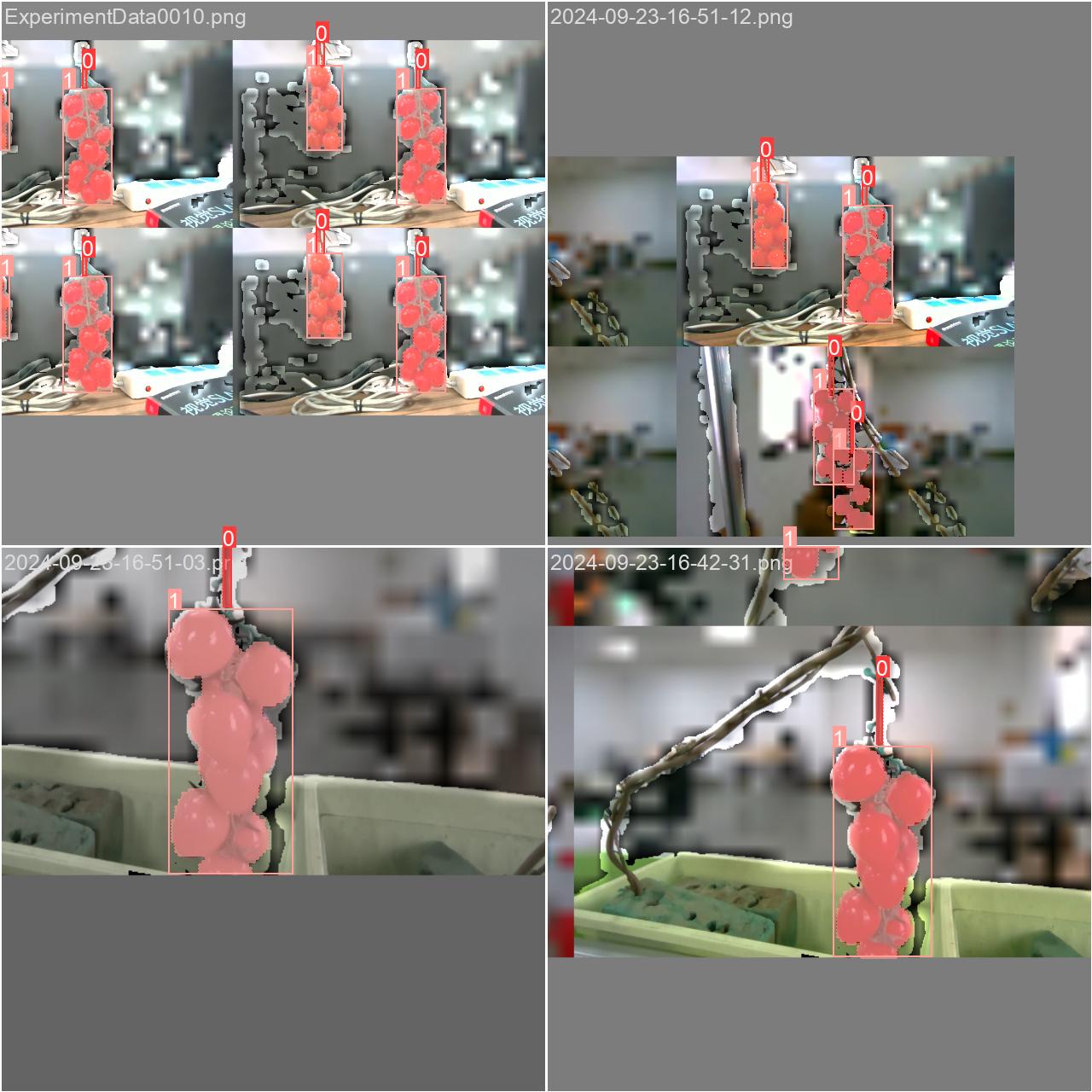

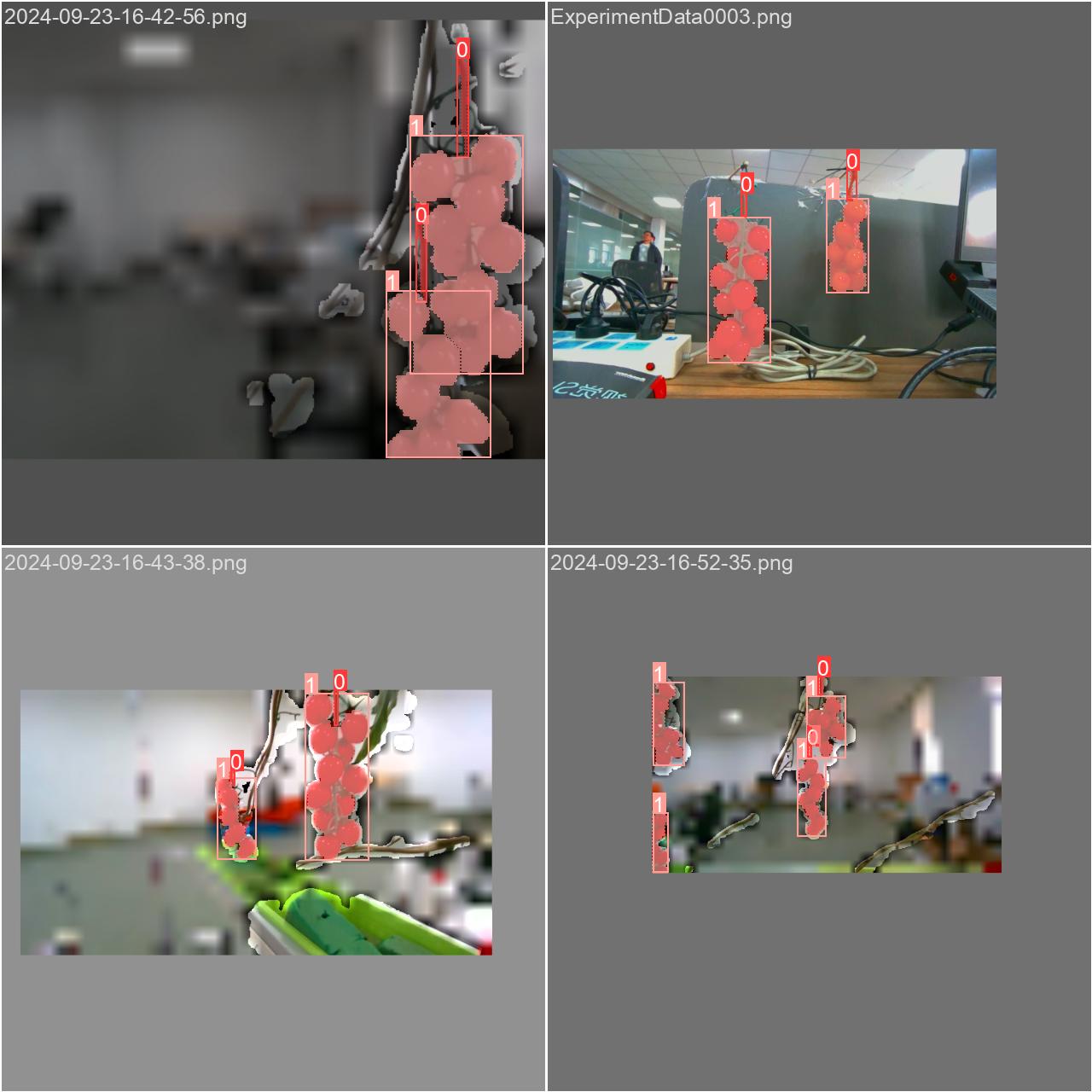

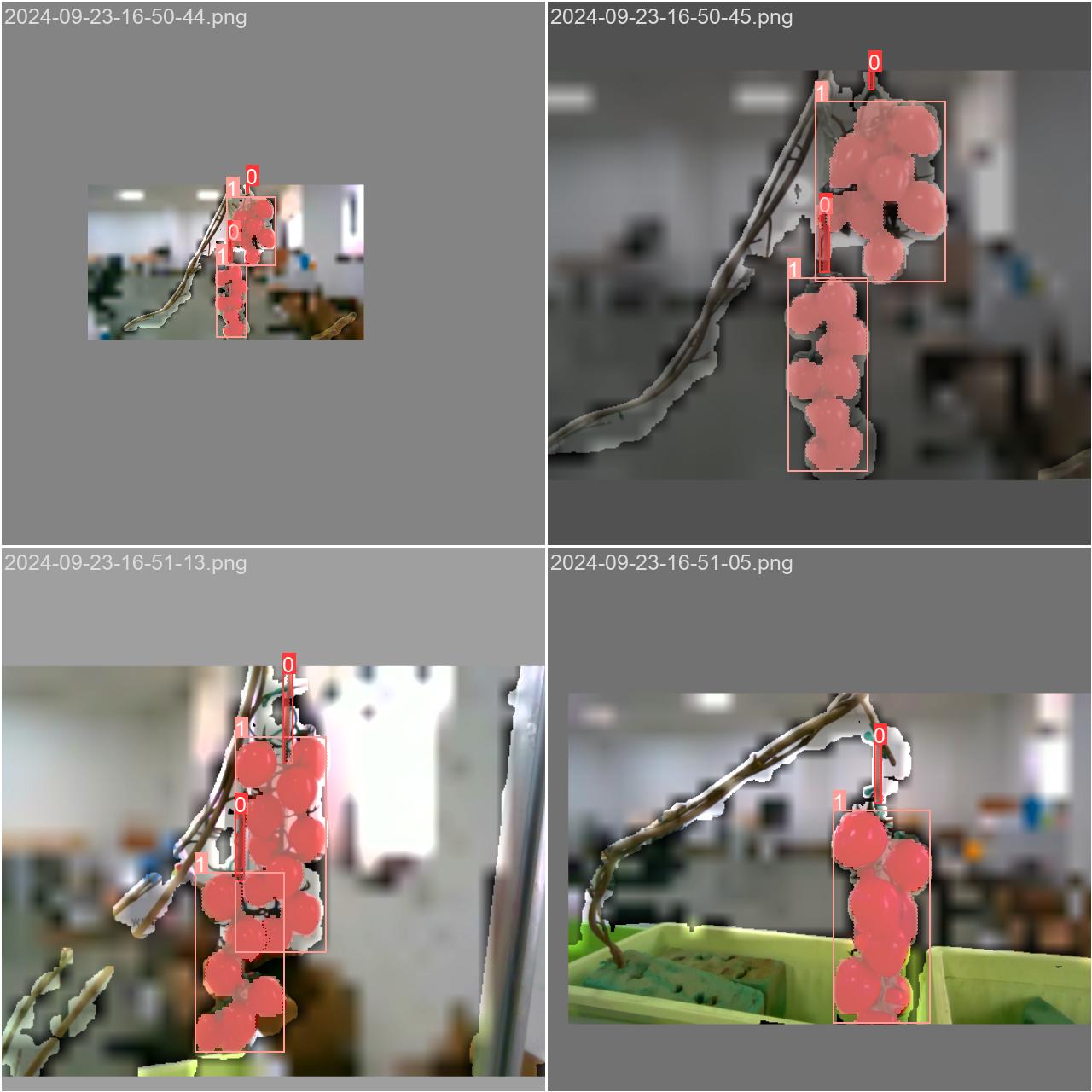

16.train_batch(N).jpg

对应batch_size这个超参,这里设置为4所以一次读取4张图片:

-

train_batch0.jpg

-

train_batch1.jpg

-

train_batch2.jpg

-

train_batch4480.jpg

-

train_batch4481.jpg

-

train_batch4482.jpg

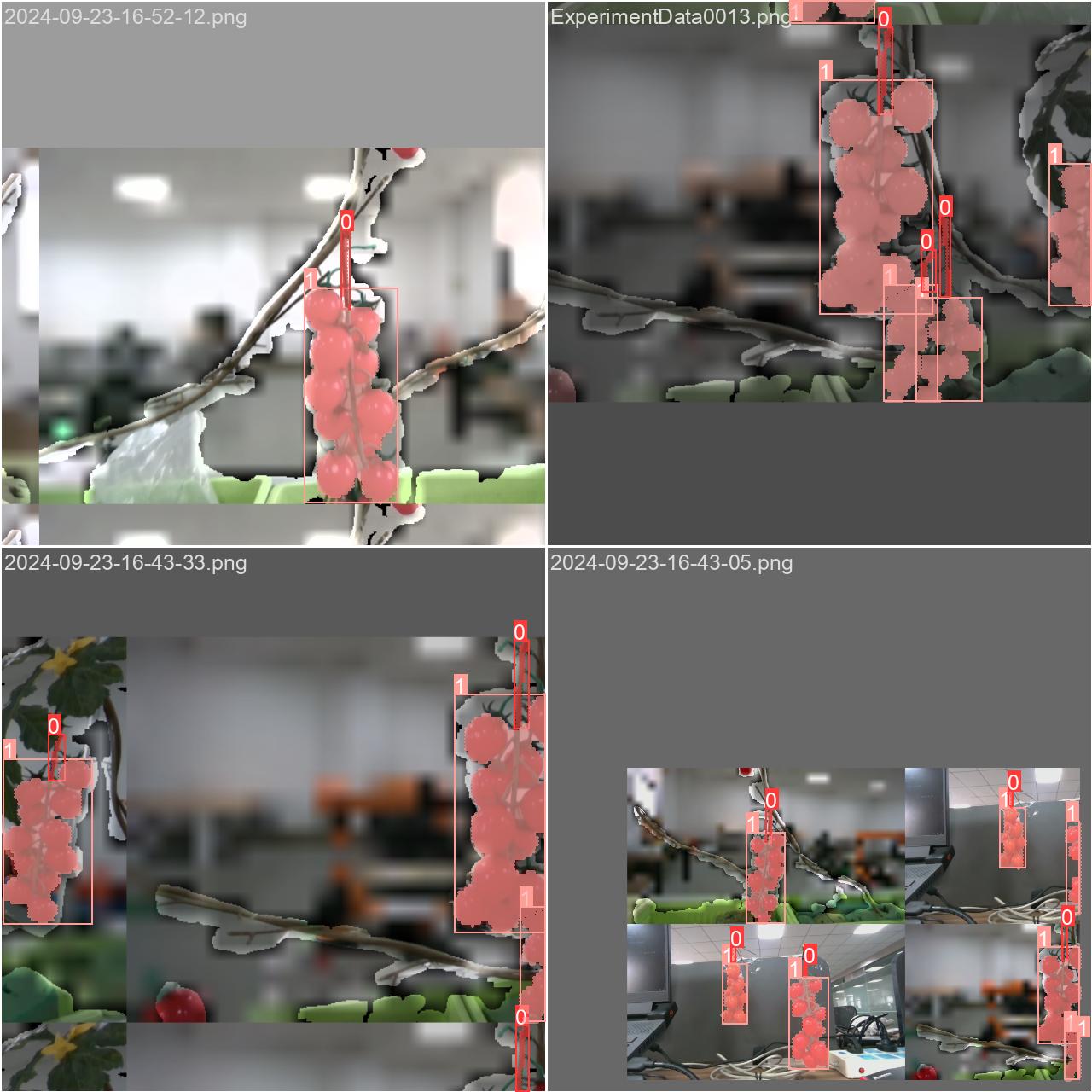

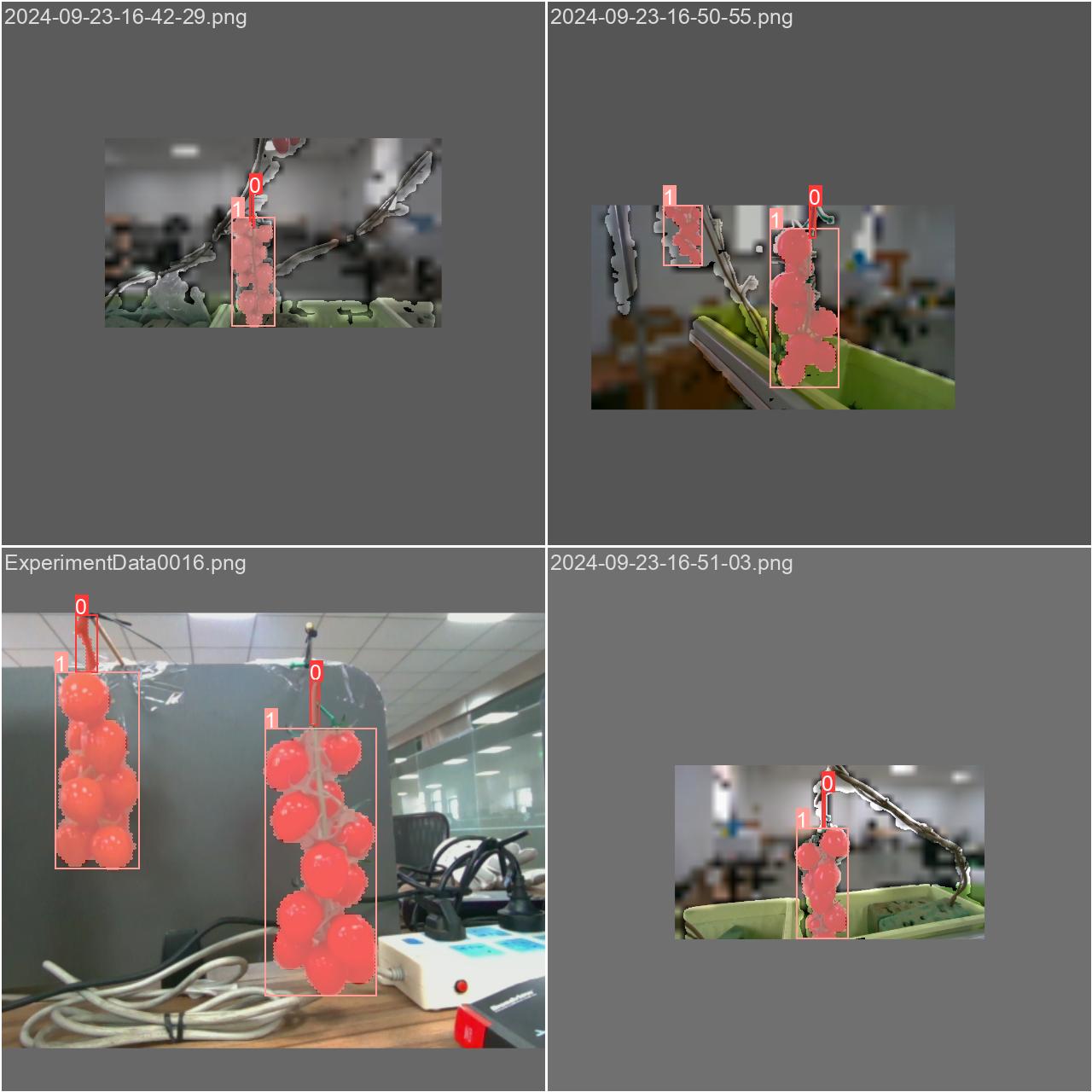

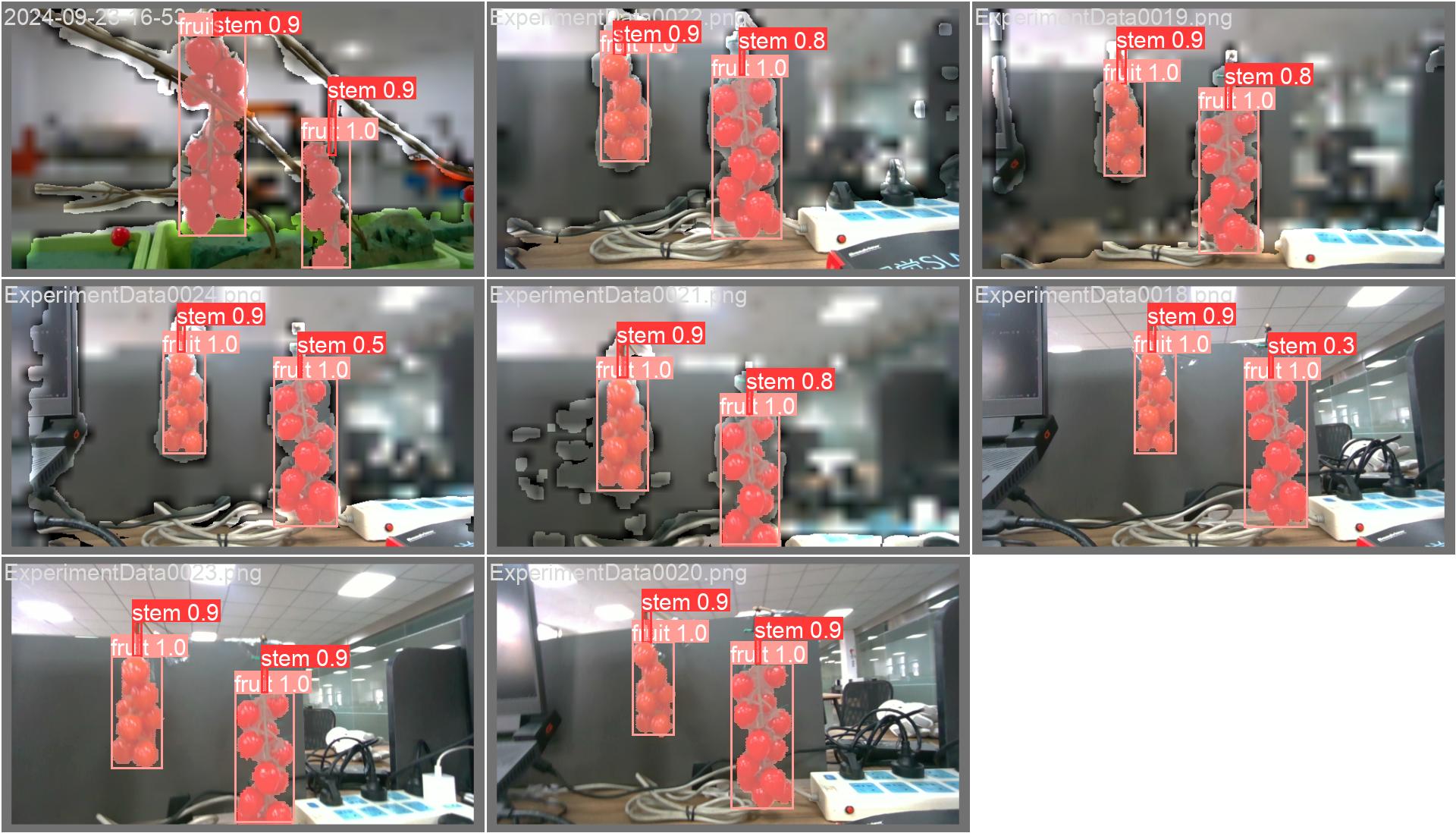

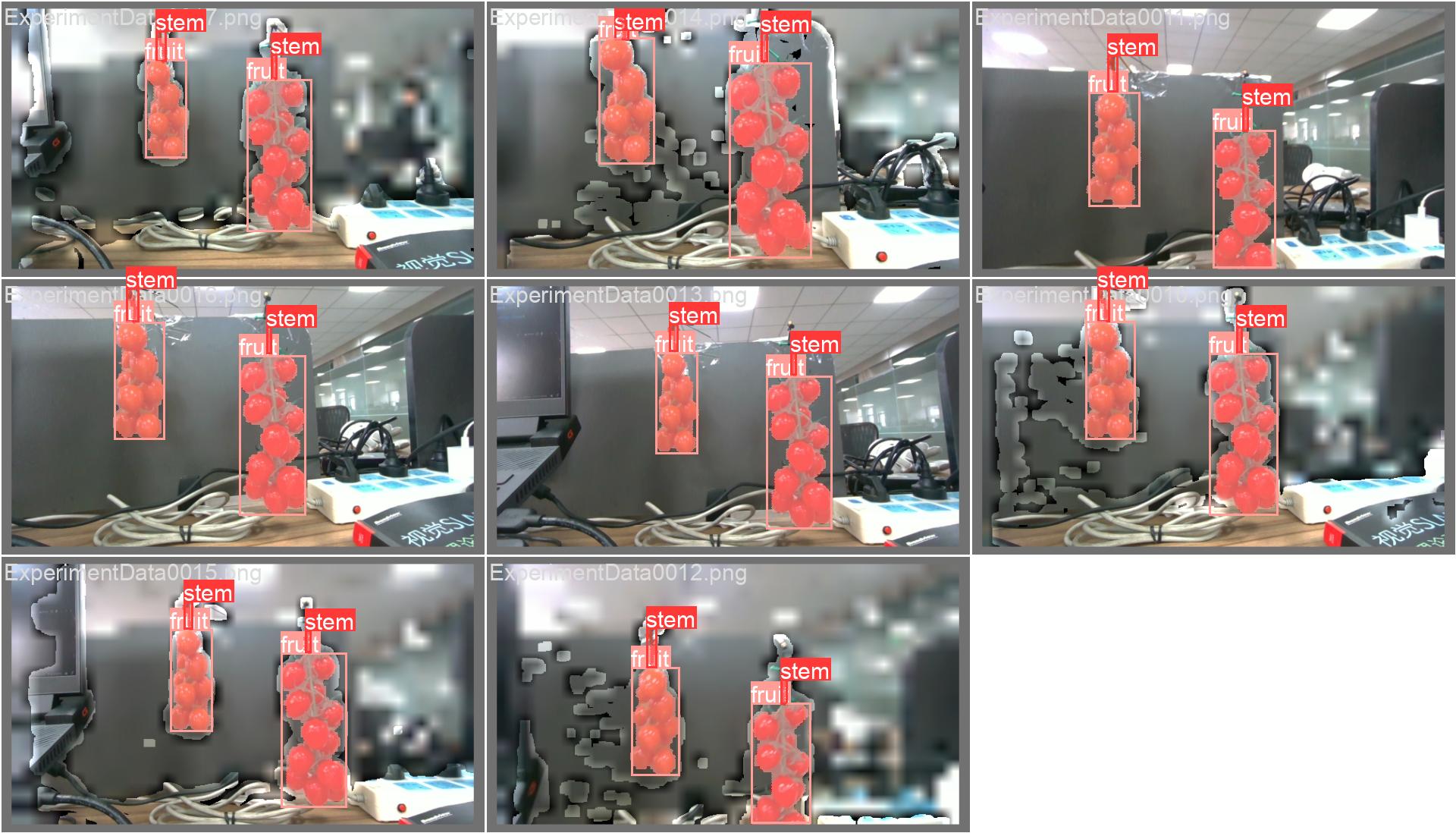

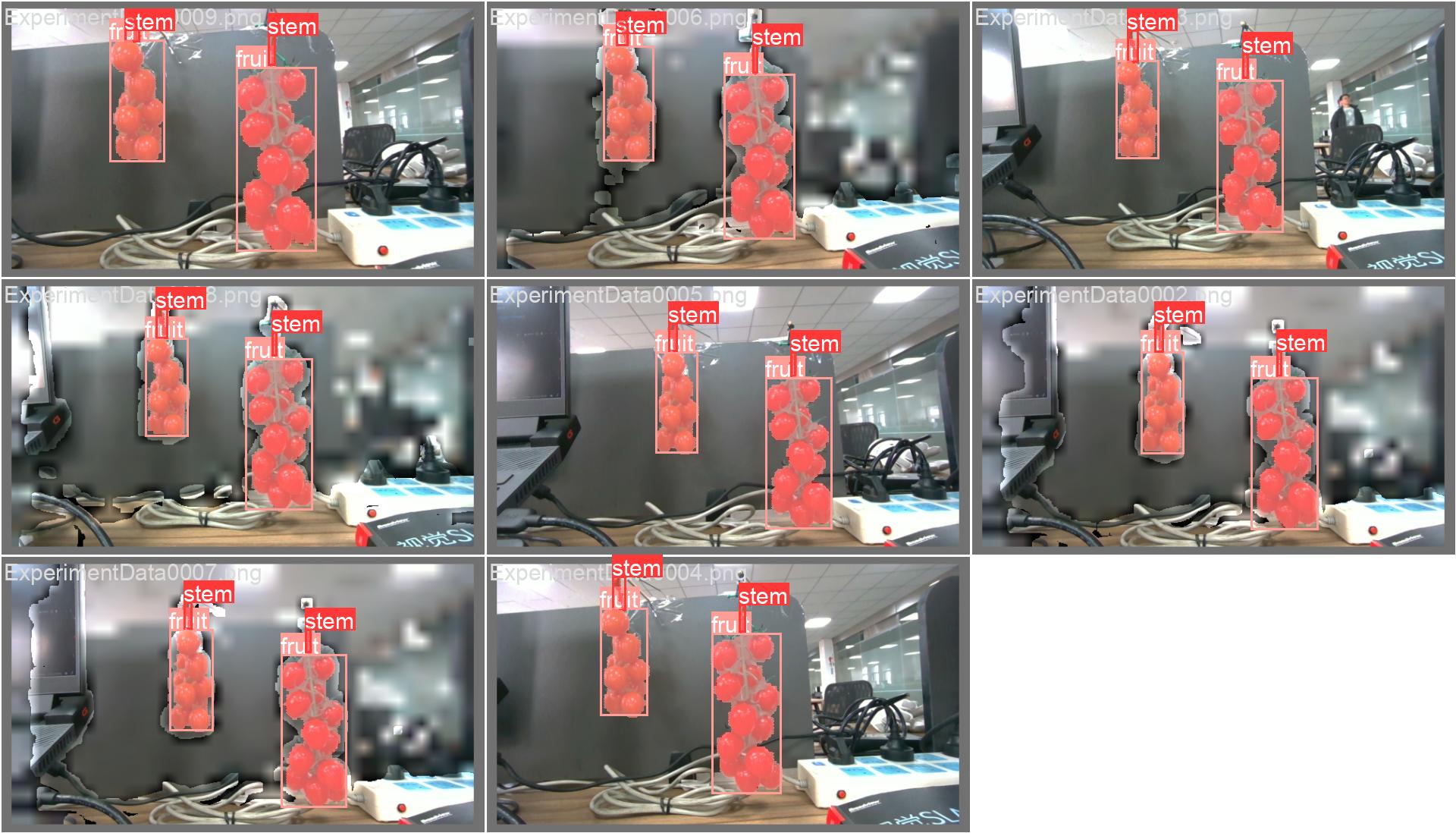

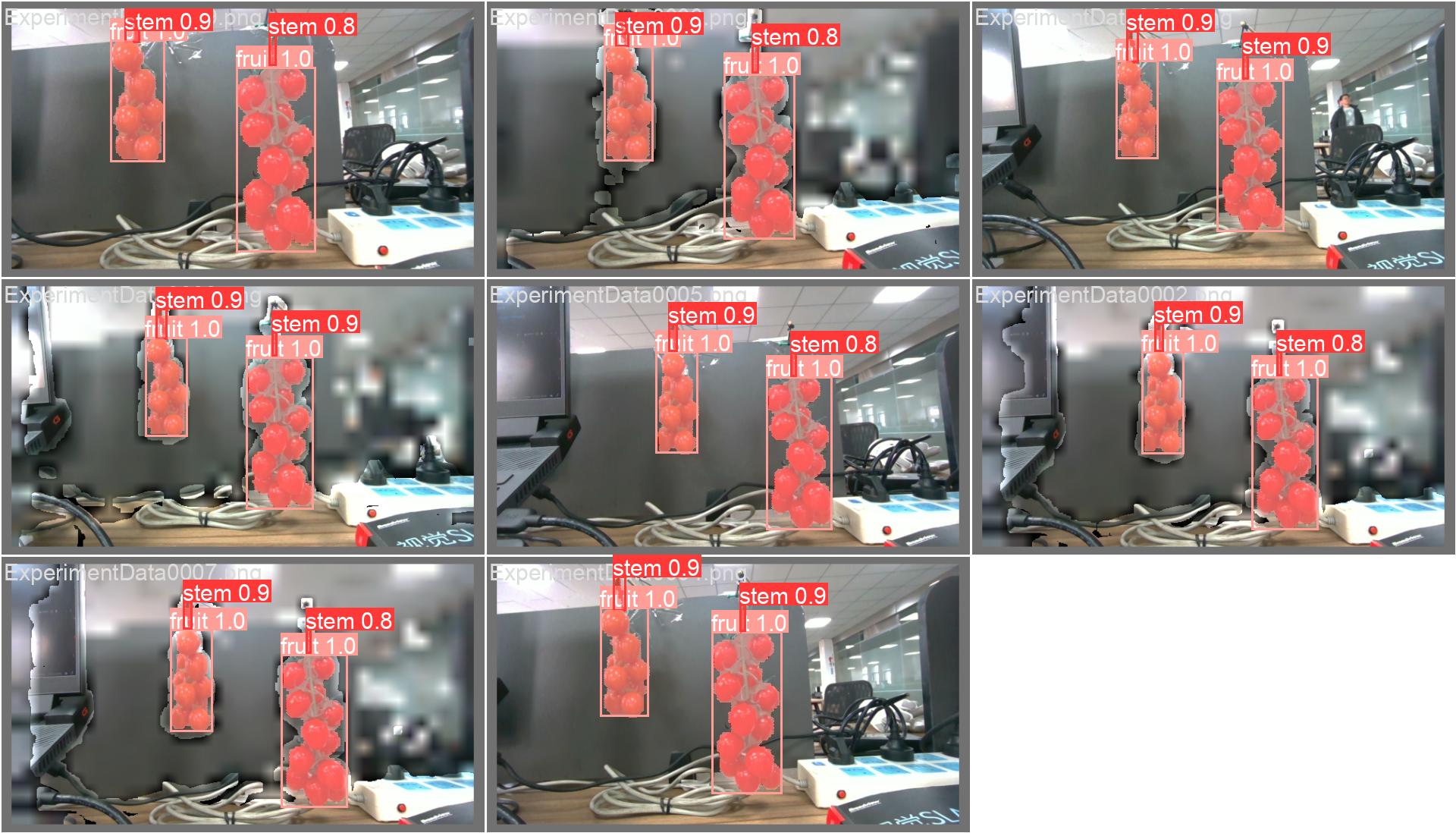

17.val_batch(N)_labels.jpg 和 val_batch(N)_pred.jpg

val_batchx_labels:验证集第N轮的实际标签

val_batchx_pred:验证集第N轮的预测标签

-

val_batch0_labels.jpg

-

val_batch0_pred.jpg

-

val_batch1_labels.jpg

-

val_batch1_pred.jpg

-

val_batch2_labels.jpg

-

val_batch2_pred.jpg

1097

1097

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?