文章目录

Unit 8: Training a Fetch Robot. Part 2

| SUMMARY |

|---|

Estimated time to completion: 2 hours

In this unit, you are going to have a step-by-step look at how to build the Task Environment for training a Fetch robot.

| END OF SUMMARY |

|---|

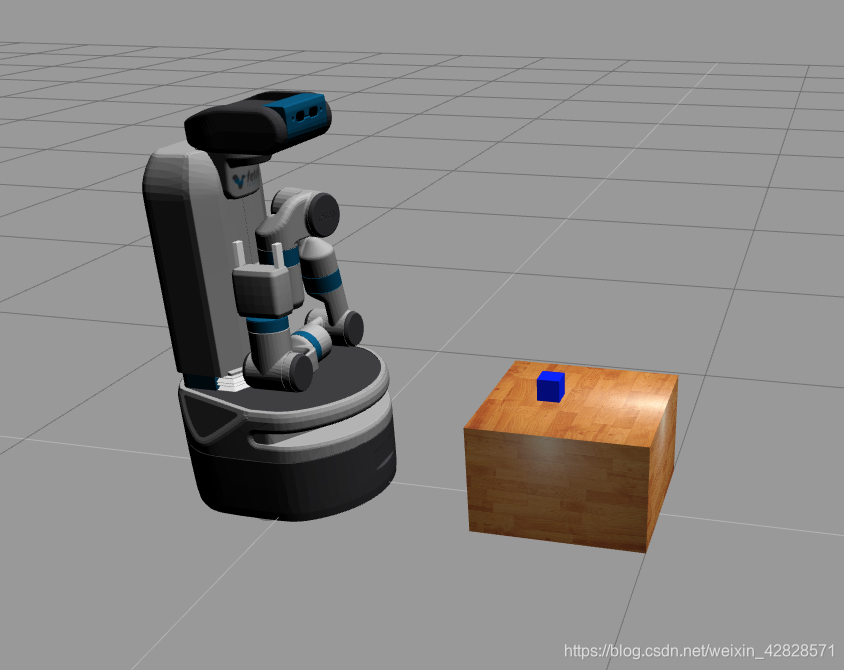

With the Robot Environment covered, let’s now have a look at how to build a Task Environment for training our Fetch robot. In this case, we are going to train the robot to reach a cube, which will be on top of a flat surface. For that, let’s first spawn our whole scene.

| Exercise 7.3 |

|---|

a) Create a new file named cube.urdf in your catkin_ws/src folder.

Execute in WebShell #1

roscd; cd ../src

touch cube.urdf

Paste the following code into that file.

<robot name="simple_box">

<link name="my_box">

<inertial>

<origin xyz="0 0 0.0145"/>

<mass value="0.1" />

<inertia ixx="0.0001" ixy="0.0" ixz="0.0" iyy="0.0001" iyz="0.0" izz="0.0001" />

</inertial>

<visual>

<origin xyz="-0.23 0 0.215"/>

<geometry>

<box size="0.47 0.46 0.3"/>

</geometry>

</visual>

<collision>

<origin xyz="-0.23 0 0.215"/>

<geometry>

<box size="0.47 0.46 0.3"/>

</geometry>

</collision>

</link>

<gazebo reference="my_box">

<material>Gazebo/Wood</material>

</gazebo>

<gazebo>

<static>true</static>

</gazebo>

</robot>

b) Execute the following command in order to spawn the cube right in front of the Fetch robot.

rosrun gazebo_ros spawn_model -file /home/user/catkin_ws/src/cube.urdf -urdf -x 1 -model my_object

c) Now, execute the next command in order to spawn the grasping block.

rosrun gazebo_ros spawn_model -database demo_cube -gazebo -model grasp_cube -x 0.70 -y 0 -z 0.35

At the end, you should have something like this:

| End of Exercise 7.3 |

|---|

Great! Now we are done with the scene, let’s keep working on our environments.

Task Environment

We will start by creating our Task Environment. This Task Environment, as you may already know, will be in charge of providing all the necessary functions and methods related to this specific training. This means that it will contain all the basic functions to be able to learn how to reach the cube, in this case.

We will start, as we did in the previous chapter, with the basic task environment template.

| template_my_task_env.py |

|---|

from gym import spaces

import my_robot_env

from gym.envs.registration import register

import rospy

# The path is __init__.py of openai_ros, where we import the MovingCubeOneDiskWalkEnv directly

timestep_limit_per_episode = 1000 # Can be any Value

register(

id='MyTrainingEnv-v0',

entry_point='template_my_training_env:MovingCubeOneDiskWalkEnv',

timestep_limit=timestep_limit_per_episode,

)

class MyTrainingEnv(cube_single_disk_env.MyRobotEnv):

def __init__(self):

# Only variable needed to be set here

number_actions = rospy.get_param('/my_robot_namespace/n_actions')

self.action_space = spaces.Discrete(number_actions)

# This is the most common case of Box observation type

high = numpy.array([

obs1_max_value,

obs12_max_value,

...

obsN_max_value

])

self.observation_space = spaces.Box(-high, high)

# Variables that we retrieve through the param server, loaded when launch training launch.

# Here we will add any init functions prior to starting the MyRobotEnv

super(MyTrainingEnv, self).__init__()

def _set_init_pose(self):

"""Sets the Robot in its init pose

"""

# TODO

def _init_env_variables(self):

"""

Inits variables needed to be initialised each time we reset at the start

of an episode.

:return:

"""

# TODO

def _set_action(self, action):

"""

Move the robot based on the action variable given

"""

# TODO: Move robot

def _get_obs(self):

"""

Here we define what sensor data of our robots observations

To know which Variables we have access to, we need to read the

MyRobotEnv API DOCS

:return: observations

"""

# TODO

return observations

def _is_done(self, observations):

"""

Decide if episode is done based on the observations

"""

# TODO

return done

def _compute_reward(self, observations, done):

"""

Return the reward based on the observations given

"""

# TODO

return reward

# Internal TaskEnv Methods

| template_my_task_env.py |

|---|

| Exercise 7.3 |

|---|

b) Inside the scripts folder of the package you created in the previous unit, create a new file named fetch_reach.py, and paste the above template inside. You can also rename the environment to FetchReachEnv. You will then make all the necessary changes to adapt it to the specific task to be trained.

| End of Exercise 7.3 |

|---|

Initialization of the class

Let’s go by parts. First of all, we’ll need to fill the initialization of the class. Here you can see an example of this:

def __init__(self):

self.get_params()

fetch_env.FetchEnv.__init__(self)

utils.EzPickle.__init__(self)

self._env_setup(initial_qpos=self.init_pos)

obs = self._get_obs()

self.action_space = spaces.Box(-1., 1., shape=(self.n_actions,), dtype='float32')

self.observation_space = spaces.Dict(dict(

desired_goal=spaces.Box(-np.inf, np.inf, shape=obs['achieved_goal'].shape, dtype='float32'),

achieved_goal=spaces.Box(-np.inf, np.inf, shape=obs['achieved_goal'].shape, dtype='float32'),

observation=spaces.Box(-np.inf, np.inf, shape=obs['observation'].shape, dtype='float32'),

))

The first thing we are doing, as you can see, is calling the get_params() function, which will get all the required parameters that the Fetch robot needs in order to perform the training.

self.get_params()

Next, we are calling the envsetup() function, which basically will set up everything required to start the training, and will send the arm of the Fetch robot to its initial position:

self._env_setup(initial_qpos=self.init_pos)

Also, we get a first observation, since we will need it to generate the observation_space.

obs = self._get_obs()

Finally, we define both the action_space and the observation_space.

self.action_space = spaces.Box(-1., 1., shape=(self.n_actions,), dtype='float32')

self.observation_space = spaces.Dict(dict(

desired_goal=spaces.Box(-np.inf, np.inf, shape=obs['achieved_goal'].shape, dtype='float32'),

achieved_goal=spaces.Box(-np.inf, np.inf, shape=obs['achieved_goal'].shape, dtype='float32'),

observation=spaces.Box(-np.inf, np.inf, shape=obs['observation'].shape, dtype='float32'),

))

As you can see, the observation space is a dict, which is specific to the Goal-based Environment, as we explained in the previous unit.

Methods needed by the Task Environment

So, let’s continue with the creation of our script by creating the methods needed by the Task Environment. These are all the methods that will be particular to this training. Let’s have a look at all the functions, and then we’ll comment on them, one by one.

def get_params(self):

#get configuration parameters

"""

self.n_actions = rospy.get_param('/fetch/n_actions')

self.has_object = rospy.get_param('/fetch/has_object')

self.block_gripper = rospy.get_param('/fetch/block_gripper')

self.n_substeps = rospy.get_param('/fetch/n_substeps')

self.gripper_extra_height = rospy.get_param('/fetch/gripper_extra_height')

self.target_in_the_air = rospy.get_param('/fetch/target_in_the_air')

self.target_offset = rospy.get_param('/fetch/target_offset')

self.obj_range = rospy.get_param('/fetch/obj_range')

self.target_range = rospy.get_param('/fetch/target_range')

self.distance_threshold = rospy.get_param('/fetch/distance_threshold')

self.init_pos = rospy.get_param('/fetch/init_pos')

self.reward_type = rospy.get_param('/fetch/reward_type')

"""

self.n_actions = 4

self.has_object = False

self.block_gripper = True

self.n_substeps = 20

self.gripper_extra_height = 0.2

self.target_in_the_air = True

self.target_offset = 0.0

self.obj_range = 0.15

self.target_range = 0.15

self.distance_threshold = 0.05

self.reward_type = "sparse"

self.init_pos = {

'joint0': 0.0,

'joint1': 0.0,

'joint2': 0.0,

'joint3': -1.5,

'joint4': 0.0,

'joint5': 1.5,

'joint6': 0.0,

}

def _set_action(self, action):

# Take action

assert action.shape == (4,)

action = action.copy() # ensure that we don't change the action outside of this scope

pos_ctrl, gripper_ctrl = action[:3], action[3]

#pos_ctrl *= 0.05 # limit maximum change in position

rot_ctrl = [1., 0., 1., 0.] # fixed rotation of the end effector, expressed as a quaternion

gripper_ctrl = np.array([gripper_ctrl, gripper_ctrl])

assert gripper_ctrl.shape == (2,)

if self.block_gripper:

gripper_ctrl = np.zeros_like(gripper_ctrl)

action = np.concatenate([pos_ctrl, rot_ctrl, gripper_ctrl])

# Apply action to simulation.

self.set_trajectory_ee(action)

def _get_obs(self):

grip_pos = self.get_ee_pose()

grip_pos_array = np.array([grip_pos.pose.position.x, grip_pos.pose.position.y, grip_pos.pose.position.z])

#dt = self.sim.nsubsteps * self.sim.model.opt.timestep #What is this??

#grip_velp = self.sim.data.get_site_xvelp('robot0:grip') * dt

grip_rpy = self.get_ee_rpy()

#print grip_rpy

grip_velp = np.array([grip_rpy.y, grip_rpy.y])

robot_qpos, robot_qvel = fetch_utils.robot_get_obs(self.joints)

if self.has_object:

object_pos = self.sim.data.get_site_xpos('object0')

# rotations

object_rot = rotations.mat2euler(self.sim.data.get_site_xmat('object0'))

# velocities

object_velp = self.sim.data.get_site_xvelp('object0') * dt

object_velr = self.sim.data.get_site_xvelr('object0') * dt

# gripper state

object_rel_pos = object_pos - grip_pos

object_velp -= grip_velp

else:

object_pos = object_rot = object_velp = object_velr = object_rel_pos = np.zeros(0)

gripper_state = robot_qpos[-2:]

gripper_vel = robot_qvel[-2:] #* dt # change to a scalar if the gripper is made symmetric

"""

if not self.has_object:

achieved_goal = grip_pos_array.copy()

else:

achieved_goal = np.squeeze(object_pos.copy())

"""

achieved_goal = self._sample_achieved_goal(grip_pos_array, object_pos)

obs = np.concatenate([

grip_pos_array, object_pos.ravel(), object_rel_pos.ravel(), gripper_state, object_rot.ravel(),

object_velp.ravel(), object_velr.ravel(), gripper_vel,

])

return {

'observation': obs.copy(),

'achieved_goal': achieved_goal.copy(),

'desired_goal': self.goal.copy(),

}

def _is_done(self, observations):

d = self.goal_distance(observations['achieved_goal'], self.goal)

return (d < self.distance_threshold).astype(np.float32)

def _compute_reward(self, observations, done):

d = self.goal_distance(observations['achieved_goal'], self.goal)

if self.reward_type == 'sparse':

return -(d > self.distance_threshold).astype(np.float32)

else:

return -d

def _set_init_pose(self):

"""Sets the Robot in its init pose

"""

self.gazebo.unpauseSim()

self.set_trajectory_joints(self.init_pos)

return True

def goal_distance(self, goal_a, goal_b):

assert goal_a.shape == goal_b.shape

return np.linalg.norm(goal_a - goal_b, axis=-1)

def _sample_goal(self):

if self.has_object:

goal = self.initial_gripper_xpos[:3] + self.np_random.uniform(-self.target_range, self.target_range, size=3)

goal += self.target_offset

goal[2] = self.height_offset

if self.target_in_the_air and self.np_random.uniform() < 0.5:

goal[2] += self.np_random.uniform(0, 0.45)

else:

goal = self.initial_gripper_xpos[:3] + self.np_random.uniform(-0.15, 0.15, size=3)

#return goal.copy()

return goal

def _sample_achieved_goal(self, grip_pos_array, object_pos):

if not self.has_object:

achieved_goal = grip_pos_array.copy()

else:

achieved_goal = np.squeeze(object_pos.copy())

#return achieved_goal.copy()

return achieved_goal

def _env_setup(self, initial_qpos):

print ("Init Pos:")

print (initial_qpos)

#for name, value in initial_qpos.items():

self.gazebo.unpauseSim()

self.set_trajectory_joints(initial_qpos)

#self.execute_trajectory()

#utils.reset_mocap_welds(self.sim)

#self.sim.forward()

# Move end effector into position.

gripper_target = np.array([0.498, 0.005, 0.431 + self.gripper_extra_height])# + self.sim.data.get_site_xpos('robot0:grip')

gripper_rotation = np.array([1., 0., 1., 0.])

#self.sim.data.set_mocap_pos('robot0:mocap', gripper_target)

#self.sim.data.set_mocap_quat('robot0:mocap', gripper_rotation)

action = np.concatenate([gripper_target, gripper_rotation])

self.set_trajectory_ee(action)

#self.execute_trajectory()

#for _ in range(10):

#self.sim.step()

#self.step()

# Extract information for sampling goals.

#self.initial_gripper_xpos = self.sim.data.get_site_xpos('robot0:grip').copy()

gripper_pos = self.get_ee_pose()

gripper_pose_array = np.array([gripper_pos.pose.position.x, gripper_pos.pose.position.y, gripper_pos.pose.position.z])

self.initial_gripper_xpos = gripper_pose_array.copy()

if self.has_object:

self.height_offset = self.sim.data.get_site_xpos('object0')[2]

self.goal = self._sample_goal()

self._get_obs()

You already know the get_params() function from the previous chapters, so I will skip it. It’s exactly the same, just using different parameters (in this case, the ones needed for the Fetch robot).

So, first of all, we have the setaction(self, action) function.

def _set_action(self, action):

# Take action

assert action.shape == (4,)

action = action.copy() # ensure that we don't change the action outside of this scope

pos_ctrl, gripper_ctrl = action[:3], action[3]

#pos_ctrl *= 0.05 # limit maximum change in position

rot_ctrl = [1., 0., 1., 0.] # fixed rotation of the end effector, expressed as a quaternion

gripper_ctrl = np.array([gripper_ctrl, gripper_ctrl])

assert gripper_ctrl.shape == (2,)

if self.block_gripper:

gripper_ctrl = np.zeros_like(gripper_ctrl)

action = np.concatenate([pos_ctrl, rot_ctrl, gripper_ctrl])

# Apply action to simulation.

self.set_trajectory_ee(action)

This function will receive an action variable as input. This action variable will contain a desired goal position for the end effector of the manipulator. Then, we basically reformat this action variable and send it through the set_trajectory_ee(action) function, which we explained in the previous chapter. This function will call the required Service of the support script (also from the previous Chapter) in order to execute the motion.

Next, we have the getobs(self) function.

def _get_obs(self):

grip_pos = self.get_ee_pose()

grip_pos_array = np.array([grip_pos.pose.position.x, grip_pos.pose.position.y, grip_pos.pose.position.z])

#dt = self.sim.nsubsteps * self.sim.model.opt.timestep #What is this??

#grip_velp = self.sim.data.get_site_xvelp('robot0:grip') * dt

grip_rpy = self.get_ee_rpy()

#print grip_rpy

grip_velp = np.array([grip_rpy.y, grip_rpy.y])

robot_qpos, robot_qvel = self.robot_get_obs(self.joints)

if self.has_object:

object_pos = self.sim.data.get_site_xpos('object0')

# rotations

object_rot = rotations.mat2euler(self.sim.data.get_site_xmat('object0'))

# velocities

object_velp = self.sim.data.get_site_xvelp('object0') * dt

object_velr = self.sim.data.get_site_xvelr('object0') * dt

# gripper state

object_rel_pos = object_pos - grip_pos

object_velp -= grip_velp

else:

object_pos = object_rot = object_velp = object_velr = object_rel_pos = np.zeros(0)

gripper_state = robot_qpos[-2:]

gripper_vel = robot_qvel[-2:] #* dt # change to a scalar if the gripper is made symmetric

"""

if not self.has_object:

achieved_goal = grip_pos_array.copy()

else:

achieved_goal = np.squeeze(object_pos.copy())

"""

achieved_goal = self._sample_achieved_goal(grip_pos_array, object_pos)

obs = np.concatenate([

grip_pos_array, object_pos.ravel(), object_rel_pos.ravel(), gripper_state, object_rot.ravel(),

object_velp.ravel(), object_velr.ravel(), gripper_vel,

])

return {

'observation': obs.copy(),

'achieved_goal': achieved_goal.copy(),

'desired_goal': self.goal.copy(),

}

The joints_callback() function is the callback for the Subscriber that we declared in the initialization of the class (self.joint_states_sub). It just stores the data from the /joint_states topic in the variable of the class, self.joints.

The next one is the is_done (self, observations) function.

def _is_done(self, observations):

d = self.goal_distance(observations['achieved_goal'], self.goal)

return (d < self.distance_threshold).astype(np.float32)

This function basically checks whether the goal has been achieved or not. In this case, the goal would be to reach the cube with the end effector of the Fetch robot.

Next, we have the computereward (self, observations, done) function.

def _compute_reward(self, observations, done):

d = self.goal_distance(observations['achieved_goal'], self.goal)

if self.reward_type == 'sparse':

return -(d > self.distance_threshold).astype(np.float32)

else:

return -d

This function, as the name itself implies, will calculate the reward for each action taken, depending on the distance between the end effector and the cube.

We also have the setinit_pose (self) function, which is in charge of sending the arm of the Fetch robot to an initial position after each step of the training has finished.

def _set_init_pose(self):

"""Sets the Robot in its init pose

"""

self.gazebo.unpauseSim()

self.set_trajectory_joints(self.init_pos)

return True

We can also see the samplegoal (self) and the sampleachieved_goal (self, grip_pos_array, object_pos) functions.

def _sample_goal(self):

if self.has_object:

goal = self.initial_gripper_xpos[:3] + self.np_random.uniform(-self.target_range, self.target_range, size=3)

goal += self.target_offset

goal[2] = self.height_offset

if self.target_in_the_air and self.np_random.uniform() < 0.5:

goal[2] += self.np_random.uniform(0, 0.45)

else:

goal = self.initial_gripper_xpos[:3] + self.np_random.uniform(-0.15, 0.15, size=3)

#return goal.copy()

return goal

def _sample_achieved_goal(self, grip_pos_array, object_pos):

if not self.has_object:

achieved_goal = grip_pos_array.copy()

else:

achieved_goal = np.squeeze(object_pos.copy())

#return achieved_goal.copy()

return achieved_goal

This function formats and returns both the current goal to be reached and the actual goal that has been reached, respectively.

Finally, we have the envsetup (self, initial_qpos) function, which we mentioned before.

def _env_setup(self, initial_qpos):

print ("Init Pos:")

print (initial_qpos)

#for name, value in initial_qpos.items():

self.gazebo.unpauseSim()

self.set_trajectory_joints(initial_qpos)

#self.execute_trajectory()

#utils.reset_mocap_welds(self.sim)

#self.sim.forward()

# Move end effector into position.

gripper_target = np.array([0.498, 0.005, 0.431 + self.gripper_extra_height])# + self.sim.data.get_site_xpos('robot0:grip')

gripper_rotation = np.array([1., 0., 1., 0.])

#self.sim.data.set_mocap_pos('robot0:mocap', gripper_target)

#self.sim.data.set_mocap_quat('robot0:mocap', gripper_rotation)

action = np.concatenate([gripper_target, gripper_rotation])

self.set_trajectory_ee(action)

#self.execute_trajectory()

#for _ in range(10):

#self.sim.step()

#self.step()

# Extract information for sampling goals.

#self.initial_gripper_xpos = self.sim.data.get_site_xpos('robot0:grip').copy()

gripper_pos = self.get_ee_pose()

gripper_pose_array = np.array([gripper_pos.pose.position.x, gripper_pos.pose.position.y, gripper_pos.pose.position.z])

self.initial_gripper_xpos = gripper_pose_array.copy()

if self.has_object:

self.height_offset = self.sim.data.get_site_xpos('object0')[2]

self.goal = self._sample_goal()

self._get_obs()

This function sets up the environment so that everything is ready for the training to start.

Finally, we have the robot_get_obs(data) function.

def robot_get_obs(self, data):

"""

Returns all joint positions and velocities associated with a robot.

"""

if data.position is not None and data.name:

#names = [n for n in data.name if n.startswith('robot')]

names = [n for n in data.name]

i = 0

r = 0

for name in names:

r += 1

return (

np.array([data.position[i] for i in range(r)]),

np.array([data.velocity[i] for i in range(r)]),

)

return np.zeros(0), np.zeros(0)

This function gets a data variable as input, which contains data about all the joints, and returns all joint positions and velocities associated with the Fetch robot.

And that’s it! With the above methods, we will be able to train our Fetch robot in the task of reaching a cube. At the end, you should have a file like this one:

| fetch_reach.py |

|---|

from gym import utils

import rospy

from gym import spaces

from openai_ros.robot_envs import fetch_env_v2

from gym.envs.registration import register

import numpy as np

from sensor_msgs.msg import JointState

from fetch_train.srv import EePose, EePoseRequest, EeRpy, EeRpyRequest, EeTraj, EeTrajRequest, JointTraj, JointTrajRequest

register(

id='FetchReach-v0',

entry_point='openai_ros:task_envs.fetch_reach.fetch_reach.FetchReachEnv',

timestep_limit=1000,

)

class FetchReachEnv(fetch_env_v2.FetchEnv, utils.EzPickle):

def __init__(self):

print ("Entered Reach Env")

self.get_params()

fetch_env_v2.FetchEnv.__init__(self)

utils.EzPickle.__init__(self)

print ("Call env setup")

self._env_setup(initial_qpos=self.init_pos)

print ("Call get_obs")

obs = self._get_obs()

self.action_space = spaces.Box(-1., 1., shape=(self.n_actions,), dtype='float32')

self.observation_space = spaces.Dict(dict(

desired_goal=spaces.Box(-np.inf, np.inf, shape=obs['achieved_goal'].shape, dtype='float32'),

achieved_goal=spaces.Box(-np.inf, np.inf, shape=obs['achieved_goal'].shape, dtype='float32'),

observation=spaces.Box(-np.inf, np.inf, shape=obs['observation'].shape, dtype='float32'),

))

def get_params(self):

#get configuration parameters

"""

self.n_actions = rospy.get_param('/fetch/n_actions')

self.has_object = rospy.get_param('/fetch/has_object')

self.block_gripper = rospy.get_param('/fetch/block_gripper')

self.n_substeps = rospy.get_param('/fetch/n_substeps')

self.gripper_extra_height = rospy.get_param('/fetch/gripper_extra_height')

self.target_in_the_air = rospy.get_param('/fetch/target_in_the_air')

self.target_offset = rospy.get_param('/fetch/target_offset')

self.obj_range = rospy.get_param('/fetch/obj_range')

self.target_range = rospy.get_param('/fetch/target_range')

self.distance_threshold = rospy.get_param('/fetch/distance_threshold')

self.init_pos = rospy.get_param('/fetch/init_pos')

self.reward_type = rospy.get_param('/fetch/reward_type')

"""

self.n_actions = 4

self.has_object = False

self.block_gripper = True

self.n_substeps = 20

self.gripper_extra_height = 0.2

self.target_in_the_air = True

self.target_offset = 0.0

self.obj_range = 0.15

self.target_range = 0.15

self.distance_threshold = 0.05

self.reward_type = "sparse"

self.init_pos = {

'joint0': 0.0,

'joint1': 0.0,

'joint2': 0.0,

'joint3': -1.5,

'joint4': 0.0,

'joint5': 1.5,

'joint6': 0.0,

}

def _set_action(self, action):

# Take action

assert action.shape == (4,)

action = action.copy() # ensure that we don't change the action outside of this scope

pos_ctrl, gripper_ctrl = action[:3], action[3]

#pos_ctrl *= 0.05 # limit maximum change in position

rot_ctrl = [1., 0., 1., 0.] # fixed rotation of the end effector, expressed as a quaternion

gripper_ctrl = np.array([gripper_ctrl, gripper_ctrl])

assert gripper_ctrl.shape == (2,)

if self.block_gripper:

gripper_ctrl = np.zeros_like(gripper_ctrl)

action = np.concatenate([pos_ctrl, rot_ctrl, gripper_ctrl])

# Apply action to simulation.

self.set_trajectory_ee(action)

def _get_obs(self):

grip_pos = self.get_ee_pose()

grip_pos_array = np.array([grip_pos.pose.position.x, grip_pos.pose.position.y, grip_pos.pose.position.z])

#dt = self.sim.nsubsteps * self.sim.model.opt.timestep #What is this??

#grip_velp = self.sim.data.get_site_xvelp('robot0:grip') * dt

grip_rpy = self.get_ee_rpy()

#print grip_rpy

grip_velp = np.array([grip_rpy.y, grip_rpy.y])

robot_qpos, robot_qvel = self.robot_get_obs(self.joints)

if self.has_object:

object_pos = self.sim.data.get_site_xpos('object0')

# rotations

object_rot = rotations.mat2euler(self.sim.data.get_site_xmat('object0'))

# velocities

object_velp = self.sim.data.get_site_xvelp('object0') * dt

object_velr = self.sim.data.get_site_xvelr('object0') * dt

# gripper state

object_rel_pos = object_pos - grip_pos

object_velp -= grip_velp

else:

object_pos = object_rot = object_velp = object_velr = object_rel_pos = np.zeros(0)

gripper_state = robot_qpos[-2:]

gripper_vel = robot_qvel[-2:] #* dt # change to a scalar if the gripper is made symmetric

"""

if not self.has_object:

achieved_goal = grip_pos_array.copy()

else:

achieved_goal = np.squeeze(object_pos.copy())

"""

achieved_goal = self._sample_achieved_goal(grip_pos_array, object_pos)

obs = np.concatenate([

grip_pos_array, object_pos.ravel(), object_rel_pos.ravel(), gripper_state, object_rot.ravel(),

object_velp.ravel(), object_velr.ravel(), gripper_vel,

])

return {

'observation': obs.copy(),

'achieved_goal': achieved_goal.copy(),

'desired_goal': self.goal.copy(),

}

def _is_done(self, observations):

d = self.goal_distance(observations['achieved_goal'], self.goal)

return (d < self.distance_threshold).astype(np.float32)

def _compute_reward(self, observations, done):

d = self.goal_distance(observations['achieved_goal'], self.goal)

if self.reward_type == 'sparse':

return -(d > self.distance_threshold).astype(np.float32)

else:

return -d

def _init_env_variables(self):

"""

Inits variables needed to be initialized each time we reset at the start

of an episode.

:return:

"""

pass

def _set_init_pose(self):

"""Sets the Robot in its init pose

"""

self.gazebo.unpauseSim()

self.set_trajectory_joints(self.init_pos)

return True

def goal_distance(self, goal_a, goal_b):

assert goal_a.shape == goal_b.shape

return np.linalg.norm(goal_a - goal_b, axis=-1)

def _sample_goal(self):

if self.has_object:

goal = self.initial_gripper_xpos[:3] + self.np_random.uniform(-self.target_range, self.target_range, size=3)

goal += self.target_offset

goal[2] = self.height_offset

if self.target_in_the_air and self.np_random.uniform() < 0.5:

goal[2] += self.np_random.uniform(0, 0.45)

else:

goal = self.initial_gripper_xpos[:3] + self.np_random.uniform(-0.15, 0.15, size=3)

#return goal.copy()

return goal

def _sample_achieved_goal(self, grip_pos_array, object_pos):

if not self.has_object:

achieved_goal = grip_pos_array.copy()

else:

achieved_goal = np.squeeze(object_pos.copy())

#return achieved_goal.copy()

return achieved_goal

def _env_setup(self, initial_qpos):

print ("Init Pos:")

print (initial_qpos)

#for name, value in initial_qpos.items():

self.gazebo.unpauseSim()

self.set_trajectory_joints(initial_qpos)

#self.execute_trajectory()

#utils.reset_mocap_welds(self.sim)

#self.sim.forward()

# Move end effector into position.

gripper_target = np.array([0.498, 0.005, 0.431 + self.gripper_extra_height])# + self.sim.data.get_site_xpos('robot0:grip')

gripper_rotation = np.array([1., 0., 1., 0.])

#self.sim.data.set_mocap_pos('robot0:mocap', gripper_target)

#self.sim.data.set_mocap_quat('robot0:mocap', gripper_rotation)

action = np.concatenate([gripper_target, gripper_rotation])

self.set_trajectory_ee(action)

#self.execute_trajectory()

#for _ in range(10):

#self.sim.step()

#self.step()

# Extract information for sampling goals.

#self.initial_gripper_xpos = self.sim.data.get_site_xpos('robot0:grip').copy()

gripper_pos = self.get_ee_pose()

gripper_pose_array = np.array([gripper_pos.pose.position.x, gripper_pos.pose.position.y, gripper_pos.pose.position.z])

self.initial_gripper_xpos = gripper_pose_array.copy()

if self.has_object:

self.height_offset = self.sim.data.get_site_xpos('object0')[2]

self.goal = self._sample_goal()

self._get_obs()

def robot_get_obs(self, data):

"""

Returns all joint positions and velocities associated with a robot.

"""

if data.position is not None and data.name:

#names = [n for n in data.name if n.startswith('robot')]

names = [n for n in data.name]

i = 0

r = 0

for name in names:

r += 1

return (

np.array([data.position[i] for i in range(r)]),

np.array([data.velocity[i] for i in range(r)]),

)

return np.zeros(0), np.zeros(0)

| fetch_reach.py |

|---|

Training Script (HER)

Everything is ready to start with the training! We already have all our environment structures ready, so it’s time to execute the learning algorithm! For this case, we’ll be using HER, which stands for Hindsight Experience Replay.

To understand what HER does, let’s look at it in the context of our current task, where we need to learn to reach a cube on top of a table. Our first attempt will very likely not be a successful one. Unless we get very lucky, the next few attempts will likely also not succeed. Typical reinforcement learning algorithms would not learn anything from this experience since they just obtain a constant reward (in this case: -1) that does not contain any learning signal.

The key insight that HER formalizes is what humans do intuitively: Even though we have not succeeded at a specific goal, we have at least achieved a different one. So, why not just pretend that we wanted to achieve this goal to begin with, instead of the one that we set out to achieve originally? By doing this substitution, the reinforcement learning algorithm can obtain a learning signal since it has achieved a goal, even if it wasn’t the one that we meant to achieve originally. If we repeat this process, we will eventually learn how to achieve arbitrary goals, including the goals that we really want to achieve.

This approach let’s us learn how to reach a cube on top of the table, even though our reward is sparse, and even though we may have never actually hit the desired goal early on. This technique is called Hindsight Experience Replay because it replays experiences (a technique often used in off-policy RL algorithms, like DQN and DDPG) with goals that are chosen in hindsight, after the episode has finished.

Everything that you saw in the previous unit about Goal-based environments probably makes more sense to you now, right?

Great! So with the HER algorithm properly introduced, let’s try it! But, as we had to do with the deepq algorithm, we’ll need to modify some things in the scripts in order to make it work properly.

Also, keep in mind that we are not going to explain the contents of the HER algorithm scripts because it is quite a complex algorithm and it would take a complete course to do so. So, for now, we are just going to focus on having it running, in order to be able to use it to train our robot. Let’s go then!

| Exercise 7.4 |

|---|

a) Modify the necessary scripts to have the HER algorithm working. You will find the HER algorithm scripts in baselines/baselines/her.

STEP 1: The script that will launch the training session will be called train.py. Here, you will need to import your Task Environment, and also initialize it. Below you can see an example of this file already modified. Place the below script in baselines/baselines/her/.

| train.py |

|---|

import os

import sys

import click

import numpy as np

import json

from mpi4py import MPI

from baselines import logger

from baselines.common import set_global_seeds

from baselines.common.mpi_moments import mpi_moments

import baselines.her.experiment.config as config

from baselines.her.rollout import RolloutWorker

from baselines.her.util import mpi_fork

from subprocess import CalledProcessError

#from gym.envs.robotics.fetch import reach

from openai_ros.task_envs.fetch_reach import fetch_reach

import rospy

def mpi_average(value):

if value == []:

value = [0.]

if not isinstance(value, list):

value = [value]

return mpi_moments(np.array(value))[0]

def train(policy, rollout_worker, evaluator,

n_epochs, n_test_rollouts, n_cycles, n_batches, policy_save_interval,

save_policies, **kwargs):

rank = MPI.COMM_WORLD.Get_rank()

latest_policy_path = os.path.join(logger.get_dir(), 'policy_latest.pkl')

best_policy_path = os.path.join(logger.get_dir(), 'policy_best.pkl')

periodic_policy_path = os.path.join(logger.get_dir(), 'policy_{}.pkl')

logger.info("Training...")

best_success_rate = -1

for epoch in range(n_epochs):

# train

rollout_worker.clear_history()

for _ in range(n_cycles):

episode = rollout_worker.generate_rollouts()

policy.store_episode(episode)

for _ in range(n_batches):

policy.train()

policy.update_target_net()

# test

evaluator.clear_history()

for _ in range(n_test_rollouts):

evaluator.generate_rollouts()

# record logs

logger.record_tabular('epoch', epoch)

for key, val in evaluator.logs('test'):

logger.record_tabular(key, mpi_average(val))

for key, val in rollout_worker.logs('train'):

logger.record_tabular(key, mpi_average(val))

for key, val in policy.logs():

logger.record_tabular(key, mpi_average(val))

if rank == 0:

logger.dump_tabular()

# save the policy if it's better than the previous ones

success_rate = mpi_average(evaluator.current_success_rate())

if rank == 0 and success_rate >= best_success_rate and save_policies:

best_success_rate = success_rate

logger.info('New best success rate: {}. Saving policy to {} ...'.format(best_success_rate, best_policy_path))

evaluator.save_policy(best_policy_path)

evaluator.save_policy(latest_policy_path)

if rank == 0 and policy_save_interval > 0 and epoch % policy_save_interval == 0 and save_policies:

policy_path = periodic_policy_path.format(epoch)

logger.info('Saving periodic policy to {} ...'.format(policy_path))

evaluator.save_policy(policy_path)

# make sure that different threads have different seeds

local_uniform = np.random.uniform(size=(1,))

root_uniform = local_uniform.copy()

MPI.COMM_WORLD.Bcast(root_uniform, root=0)

if rank != 0:

assert local_uniform[0] != root_uniform[0]

def launch(

env, logdir, n_epochs, num_cpu, seed, replay_strategy, policy_save_interval, clip_return,

override_params={}, save_policies=True

):

# Fork for multi-CPU MPI implementation.

if num_cpu > 1:

try:

whoami = mpi_fork(num_cpu, ['--bind-to', 'core'])

except CalledProcessError:

# fancy version of mpi call failed, try simple version

whoami = mpi_fork(num_cpu)

if whoami == 'parent':

sys.exit(0)

import baselines.common.tf_util as U

U.single_threaded_session().__enter__()

rank = MPI.COMM_WORLD.Get_rank()

# Configure logging

if rank == 0:

if logdir or logger.get_dir() is None:

logger.configure(dir=logdir)

else:

logger.configure()

logdir = logger.get_dir()

assert logdir is not None

os.makedirs(logdir, exist_ok=True)

# Seed everything.

rank_seed = seed + 1000000 * rank

set_global_seeds(rank_seed)

# Prepare params.

params = config.DEFAULT_PARAMS

params['env_name'] = env

params['replay_strategy'] = replay_strategy

if env in config.DEFAULT_ENV_PARAMS:

params.update(config.DEFAULT_ENV_PARAMS[env]) # merge env-specific parameters in

params.update(**override_params) # makes it possible to override any parameter

with open(os.path.join(logger.get_dir(), 'params.json'), 'w') as f:

json.dump(params, f)

params = config.prepare_params(params)

config.log_params(params, logger=logger)

if num_cpu == 1:

logger.warn()

logger.warn('*** Warning ***')

logger.warn(

'You are running HER with just a single MPI worker. This will work, but the ' +

'experiments that we report in Plappert et al. (2018, https://arxiv.org/abs/1802.09464) ' +

'were obtained with --num_cpu 19. This makes a significant difference and if you ' +

'are looking to reproduce those results, be aware of this. Please also refer to ' +

'https://github.com/openai/baselines/issues/314 for further details.')

logger.warn('****************')

logger.warn()

dims = config.configure_dims(params)

policy = config.configure_ddpg(dims=dims, params=params, clip_return=clip_return)

rollout_params = {

'exploit': False,

'use_target_net': False,

'use_demo_states': True,

'compute_Q': False,

'T': params['T'],

}

eval_params = {

'exploit': True,

'use_target_net': params['test_with_polyak'],

'use_demo_states': False,

'compute_Q': True,

'T': params['T'],

}

for name in ['T', 'rollout_batch_size', 'gamma', 'noise_eps', 'random_eps']:

rollout_params[name] = params[name]

eval_params[name] = params[name]

rollout_worker = RolloutWorker(params['make_env'], policy, dims, logger, **rollout_params)

rollout_worker.seed(rank_seed)

evaluator = RolloutWorker(params['make_env'], policy, dims, logger, **eval_params)

evaluator.seed(rank_seed)

train(

logdir=logdir, policy=policy, rollout_worker=rollout_worker,

evaluator=evaluator, n_epochs=n_epochs, n_test_rollouts=params['n_test_rollouts'],

n_cycles=params['n_cycles'], n_batches=params['n_batches'],

policy_save_interval=policy_save_interval, save_policies=save_policies)

@click.command()

@click.option('--env', type=str, default='FetchReach-v0', help='the name of the OpenAI Gym environment that you want to train on')

@click.option('--logdir', type=str, default=None, help='the path to where logs and policy pickles should go. If not specified, creates a folder in /tmp/')

@click.option('--n_epochs', type=int, default=50, help='the number of training epochs to run')

@click.option('--num_cpu', type=int, default=1, help='the number of CPU cores to use (using MPI)')

@click.option('--seed', type=int, default=0, help='the random seed used to seed both the environment and the training code')

@click.option('--policy_save_interval', type=int, default=5, help='the interval with which policy pickles are saved. If set to 0, only the best and latest policy will be pickled.')

@click.option('--replay_strategy', type=click.Choice(['future', 'none']), default='future', help='the HER replay strategy to be used. "future" uses HER, "none" disables HER.')

@click.option('--clip_return', type=int, default=1, help='whether or not returns should be clipped')

def main(**kwargs):

rospy.init_node("train_fetch_her")

launch(**kwargs)

if __name__ == '__main__':

main()

| train.py |

|---|

Execute the train.py script and see how Fetch starts training.

IMPORTANT: Remember that, in order to execute the HER algorithm, you will need to do it from a Python 3 virtual env, just as you did with the deepq algorithm.

IMPORTANT: The complete sequence in order to correctly launch the training would be like this:

- First, you start the fetch robot MoveIt package that you should have created in the previous Unit from a WebShell. For instance, assuming that you named your package fetch_moveit_config, it would be something like this:

roslaunch fetch_moveit_config fetch_planning_execution.launch

- Next, you start the support script, also created in the previous Unit, from another WebShell. If you followed all the steps correctly, it will be something like this:

rosrun fetch_train execute_trajectories.py

- Finally, from the Python 3 venv, you will run the training script. But first, you will need to execute the following command, to avoid possible errors.

export LC_ALL=C.UTF-8

export LANG=C.UTF-8

- And finally, execute the training script

python train.py

| End of Exercise 7.4 |

|---|

As you may have noticed, the Fetch arm stays long periods of time without performing any motion. This is because the space where the arm can perform motions is reduced. There are many values that will cause the motion plan to fail, due to different reasons.

If you have a look at the Task Environment we have created, the action_space takes values between -1 and 1.

self.action_space = spaces.Box(-1., 1., shape=(self.n_actions,), dtype='float32')

So, for instance, a possible goal position for the end effector could be [0.5, 0, -1] in [x,y,z].

| Exercise 7.5 |

|---|

Try to improve the ratio in which motion plans are successfully calculated. For that, you will need to do some modifications in the Task Environment. For instance, you could try to process the values received from the action_space, so that we remove some values that we know will cause the motion plan to fail.

| End of Exercise 7.5 |

|---|

1106

1106

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?