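👨🎓 Personal homepage: 研学社's blog

💥💥💞💞 Welcome to this blog ❤️❤️💥💥

🏆 About this blog: 🌞🌞🌞 The posts aim to be rigorous and logically clear, for the reader's convenience.

⛳️ Motto: On a journey of a hundred li, ninety li is only the halfway point.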


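💥1 Overview

This post trains a feedforward neural network (FNN) with PSOGSA, a hybrid of particle swarm optimization (PSO) and the gravitational search algorithm (GSA) [1]. Each particle encodes every weight and bias of the network as one flat vector, and the optimizer searches for the vector that minimizes the mean squared error on the training samples. The method is applied to the well-known Iris dataset (150 samples, 4 features, 3 classes). For comparison, the same network is also trained with PSO alone (FNNPSO) and GSA alone (FNNGSA).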
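To make the hybrid concrete, here is a minimal Python sketch of one PSOGSA update. It follows the PSOGSA velocity rule v ← w·v + c1·rand·a + c2·rand·(gbest − x), where a is the GSA acceleration computed from fitness-based masses, and then moves each agent with x ← x + v. It is not the author's MATLAB code. Several choices here are assumptions made for illustration: the function names, the simplified GSA in which every agent attracts every other (no Kbest shrinking), and the default values of `w`, `c1`, `c2`, `G0` and `alpha`. A toy sphere objective stands in for the network error.

```python
import math
import random


def gsa_accelerations(positions, fitness, G, rng, eps=1e-12):
    """GSA accelerations for minimization (simplified: every agent attracts every other)."""
    n, dim = len(positions), len(positions[0])
    best, worst = min(fitness), max(fitness)
    if best == worst:
        masses = [1.0 / n] * n  # all agents equally fit -> equal masses
    else:
        raw = [(f - worst) / (best - worst) for f in fitness]  # best -> 1, worst -> 0
        total = sum(raw)
        masses = [m / total for m in raw]
    acc = [[0.0] * dim for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            r = math.dist(positions[i], positions[j])  # Euclidean distance R_ij
            # Inertial mass of agent i cancels, so a_i = sum_j rand_j * G * M_j * (x_j - x_i) / (R_ij + eps)
            scale = rng.random() * G * masses[j] / (r + eps)
            for d in range(dim):
                acc[i][d] += scale * (positions[j][d] - positions[i][d])
    return acc


def psogsa_step(positions, velocities, fitness, gbest, t, max_iter, rng,
                w=0.5, c1=0.5, c2=1.5, G0=1.0, alpha=20.0):
    """One in-place PSOGSA update: v = w*v + c1*rand*a + c2*rand*(gbest - x); x = x + v."""
    G = G0 * math.exp(-alpha * t / max_iter)  # gravitational "constant" decays over time
    acc = gsa_accelerations(positions, fitness, G, rng)
    for i, (x, v) in enumerate(zip(positions, velocities)):
        r1, r2 = rng.random(), rng.random()
        for d in range(len(x)):
            v[d] = w * v[d] + c1 * r1 * acc[i][d] + c2 * r2 * (gbest[d] - x[d])
            x[d] += v[d]


def sphere(x):
    """Toy objective for the demo: sum of squares, minimum 0 at the origin."""
    return sum(xi * xi for xi in x)


rng = random.Random(0)
pos = [[rng.uniform(-5.0, 5.0) for _ in range(3)] for _ in range(20)]
vel = [[0.0] * 3 for _ in range(20)]
gbest, gbest_score, history = None, math.inf, []
for t in range(100):
    fit = [sphere(x) for x in pos]
    for x, f in zip(pos, fit):
        if f < gbest_score:  # keep a copy: psogsa_step mutates x in place
            gbest, gbest_score = list(x), f
    history.append(gbest_score)
    psogsa_step(pos, vel, fit, gbest, t, 100, rng)
print(f"best sphere value after 100 iterations: {gbest_score:.3g}")
```

The GSA term matters mostly in early iterations, because G shrinks exponentially. Later, the social term pulling agents toward gbest dominates. This reflects the exploration-then-exploitation intent behind the hybrid.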

📚2 Results

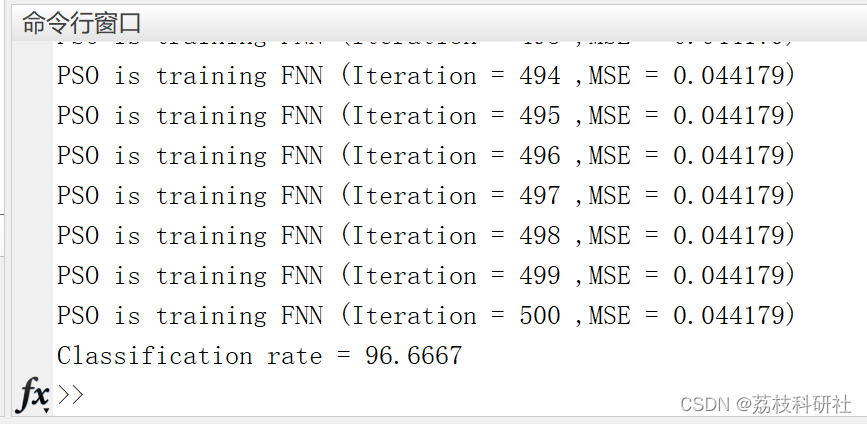

2.1 FNNPSO

2.2 FNNPSOGSA


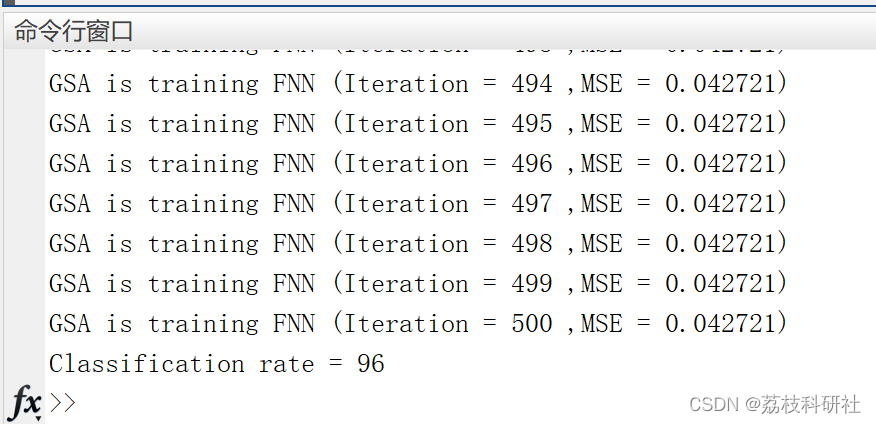

2.3 FNNGSA


Part of the code (the PSO-based trainer, FNNPSO):

```matlab
HiddenNodes=15;                 % Number of hidden nodes
Dim=8*HiddenNodes+3;            % Particle dimension for a 4-H-3 network: 7*H weights + (H+3) biases
TrainingNO=150;                 % Number of training samples

%% PSO
% Initial parameters for PSO
noP=30;                         % Number of particles
Max_iteration=500;              % Maximum number of iterations
w=2;                            % Inertia weight
wMax=0.9;                       % Max inertia weight
wMin=0.5;                       % Min inertia weight
c1=2;                           % Cognitive acceleration coefficient
c2=2;                           % Social acceleration coefficient
dt=0.8;

% Initialization: random positions in (0,1) and small random velocities
pos=rand(noP,Dim);              % Position vectors, one row per particle
vel=0.3*rand(noP,Dim);          % Velocity vectors

% Cognitive component
pBestScore=inf(noP,1);          % Best fitness of each particle (inf, so the first evaluation always sets pBest)
pBest=zeros(noP,Dim);           % Best position of each particle

% Social component
gBestScore=inf;                 % Best fitness of the swarm (minimization)
gBest=zeros(1,Dim);             % Best position of the swarm

ConvergenceCurve=zeros(1,Max_iteration); % Convergence vector

for Iteration=1:Max_iteration
    % Calculate the MSE of each particle
    for i=1:noP
        % Decode the particle: the first 7*H entries are weights, the remaining H+3 are biases
        Weights=pos(i,1:7*HiddenNodes);
        Biases=pos(i,7*HiddenNodes+1:Dim);

        fitness=0;
        for pp=1:TrainingNO
            % I2 (inputs), T (class labels -1/0/1) and My_FNN are defined elsewhere in the original project
            actualvalue=My_FNN(4,HiddenNodes,3,Weights,Biases,I2(pp,1),I2(pp,2),I2(pp,3),I2(pp,4));
            % One-hot target: T=-1 -> [1 0 0], T=0 -> [0 1 0], T=1 -> [0 0 1]
            target=zeros(3,1);
            target(T(pp)+2)=1;
            out=actualvalue(:);
            fitness=fitness+sum((target-out(1:3)).^2);
        end
        fitness=fitness/TrainingNO;

        % Update the personal best of particle i
        if(pBestScore(i)>fitness)
            pBestScore(i)=fitness;
            pBest(i,:)=pos(i,:);
        end
        % ... (the rest of the loop is omitted in this excerpt)
```
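The fitness evaluation in this loop does three things for each particle. It decodes the particle into network parameters, runs a forward pass for every sample, and accumulates the squared error against a one-hot target. `My_FNN` is not shown, so here is a hedged Python sketch of the same computation. Two details are assumptions: the ordering of parameters inside the weight block and the sigmoid activation in both layers. Three details mirror the MATLAB excerpt: the overall layout (7·H weights followed by H+3 biases for a 4-H-3 network), the mapping of labels -1/0/1 to one-hot targets, and the division by the number of samples only.

```python
import math


def sigmoid(z):
    """Numerically stable logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def decode(particle, n_in=4, n_hidden=15, n_out=3):
    """Split a flat particle into (w_ih, w_ho, b_h, b_o); this ordering is an assumption."""
    expected = (n_in + n_out) * n_hidden + n_hidden + n_out  # 8*H + 3 for a 4-H-3 net
    if len(particle) != expected:
        raise ValueError(f"expected {expected} values, got {len(particle)}")
    it = iter(particle)
    w_ih = [[next(it) for _ in range(n_in)] for _ in range(n_hidden)]   # input -> hidden
    w_ho = [[next(it) for _ in range(n_hidden)] for _ in range(n_out)]  # hidden -> output
    b_h = [next(it) for _ in range(n_hidden)]                           # hidden biases
    b_o = [next(it) for _ in range(n_out)]                              # output biases
    return w_ih, w_ho, b_h, b_o


def forward(params, x):
    """Forward pass of the single-hidden-layer network (sigmoid in both layers, assumed)."""
    w_ih, w_ho, b_h, b_o = params
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w_ih, b_h)]
    return [sigmoid(sum(w * h for w, h in zip(row, hidden)) + b) for row, b in zip(w_ho, b_o)]


def mse_fitness(particle, inputs, labels, n_hidden=15):
    """Squared error summed over the 3 outputs, averaged over samples (as in the MATLAB loop)."""
    params = decode(particle, n_hidden=n_hidden)
    total = 0.0
    for x, label in zip(inputs, labels):
        target = [0.0, 0.0, 0.0]
        target[label + 1] = 1.0  # label -1/0/1 -> one-hot position 0/1/2
        out = forward(params, x)
        total += sum((t - o) ** 2 for t, o in zip(target, out))
    return total / len(inputs)


# With all 123 parameters at zero every output is sigmoid(0) = 0.5,
# so each sample contributes 3 * 0.25 = 0.75 regardless of the inputs.
print(mse_fitness([0.0] * 123, [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]], [-1, 1]))  # 0.75
```

Any optimizer (PSO, GSA or PSOGSA) only needs `mse_fitness` as a black-box objective over vectors of length 8·H+3. This is why the three trainers compared in the results can share one network definition.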

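🎉3 References

Some of the theory is drawn from online sources; if anything infringes your rights, please contact us and it will be removed.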

[1] S. Mirjalili, S. Z. Mohd Hashim, and H. Moradian Sardroudi, "Training feedforward neural networks using hybrid particle swarm optimization and gravitational search algorithm," Applied Mathematics and Computation, vol. 218, pp. 11125-11137, 2012.

[2] N. Qiao (乔楠), "Research on ultra-short-term photovoltaic power prediction based on SSA-FNN," 光源与照明 (Light Sources and Illumination), no. 10, pp. 98-100, 2022.
