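1. Overview

A brief walkthrough of calibrating a pinhole-model camera with the Kalibr toolbox, written for my own future reference and hopefully useful to others.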

2. 准备

- 提前准备好

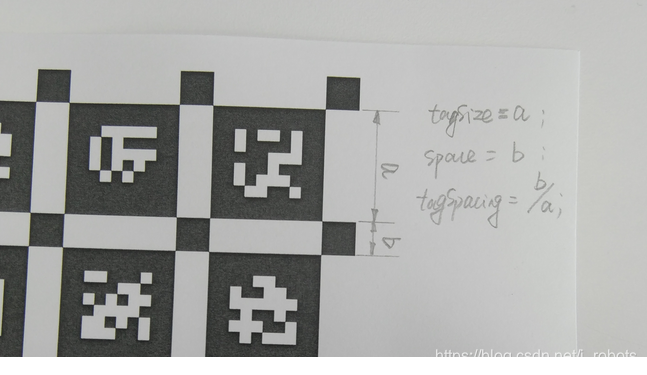

标签并打印出来,可以使用A4纸打印,然后粘贴在纸板上,注意粘贴时贴紧纸板,确保位于纸张上的点位于同一平面。官方提供了Aprilgrid、棋盘格、圆形板三种格式。本次标定使用April tag,它是一种AR tag,属于编码信息的棋盘格,详细的介绍见文末参考链接。

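- Make sure the topic carrying the camera images is publishing properly, and record a bag in advance with the rosbag command. In actual use you need to set the path to the bag. Make sure every image contains the target, and keep rotating and moving the target so that it is captured in many different poses.

- Make sure the Kalibr toolbox is already set up. If it reports an error, the following fix may help.

In the file src/kalibr/Schweizer-Messer/sm_python/python/sm/PlotCollection.py, line 8 is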

from matplotlib.backends.backend_wxagg import NavigationToolbar2Wx as Toolbar

Change it to

from matplotlib.backends.backend_wx import NavigationToolbar2Wx as Toolbar

After this change, igraph also needs to be installed:

sudo add-apt-repository ppa:igraph/ppa

sudo apt-get update

sudo apt-get install python-igraph

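- Run the Kalibr calibration tool. It provides multi-IMU calibration, camera-IMU calibration, and multi-camera calibration. Here the multi-camera calibration command is used to calibrate a single camera. The command format is: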

kalibr_calibrate_cameras --bag [filename.bag] --topics [TOPIC_0 ... TOPIC_N] --models [MODEL_0 ... MODEL_N] --target [target.yaml]

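3. Steps

- Record a bag containing images of the calibration target, and keep moving the target while recording.

- Run the multi-camera calibration node from the Kalibr toolbox, following the command format given earlier: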

kalibr_calibrate_cameras --bag [filename.bag] --topics [TOPIC_0 ... TOPIC_N] --models [MODEL_0 ... MODEL_N] --target [target.yaml]

我们使用的最终命令

- 使用的bag文件

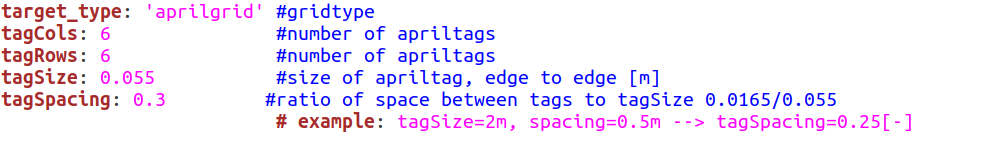

mdvncalib.bag,里面存储了含有标定板的图像 - 使用标定板规格

apriltag_6_6.yaml,可多种选择 - 相机的畸变模型

pinhole-radtan,其他模型还有omni-radtan - bag文件中相机发布图像的topic话题

/mindvision_image/image_raw

kalibr_calibrate_cameras --target apriltag_6_6.yaml --bag mdvncalib.bag --models pinhole-radtan --topics /mindvision_image/image_raw
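The same command can also be assembled programmatically, which is handy when several bags have to be calibrated in a row. The following is only a minimal sketch and not part of Kalibr: `build_kalibr_command` is a hypothetical helper name, and it uses nothing beyond the four flags from the command format above. It only builds the argument list. Actually running it (for example with `subprocess.run`) assumes a sourced Kalibr workspace.

```python
import shlex


def build_kalibr_command(bag, topics, models, target):
    """Build the argument list for kalibr_calibrate_cameras.

    Hypothetical helper for illustration. It only uses the flags
    --bag, --topics, --models and --target from the command format.
    """
    if len(topics) != len(models):
        # The command format pairs MODEL_i with TOPIC_i.
        raise ValueError("need exactly one model per topic")
    cmd = ["kalibr_calibrate_cameras", "--target", target, "--bag", bag]
    cmd += ["--models"] + list(models)
    cmd += ["--topics"] + list(topics)
    return cmd


cmd = build_kalibr_command(
    bag="mdvncalib.bag",
    topics=["/mindvision_image/image_raw"],
    models=["pinhole-radtan"],
    target="apriltag_6_6.yaml",
)
# Print the command as a single shell-safe line.
print(" ".join(shlex.quote(part) for part in cmd))
```

Keeping the command as a list rather than one string avoids quoting problems if a bag path ever contains spaces.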

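- The target specification yaml file has its own set of parameters, which depend on the target type.

- For the camera model, the following options are available: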

Camera models

Kalibr supports the following projection models:

pinhole camera model (pinhole)

(intrinsics vector: [fu fv pu pv])

omnidirectional camera model (omni)

(intrinsics vector: [xi fu fv pu pv])

double sphere camera model (ds)

(intrinsics vector: [xi alpha fu fv pu pv])

extended unified camera model (eucm)

(intrinsics vector: [alpha beta fu fv pu pv])

The intrinsics vector contains all parameters for the model:

fu, fv: focal-length

pu, pv: principal point

xi: mirror parameter (only omni)

xi, alpha: double sphere model parameters (only ds)

alpha, beta: extended unified model parameters (only eucm)

Distortion models

Kalibr supports the following distortion models:

radial-tangential (radtan)

(distortion_coeffs: [k1 k2 r1 r2])

equidistant (equi)

(distortion_coeffs: [k1 k2 k3 k4])

fov (fov)

(distortion_coeffs: [w])

none (none)

(distortion_coeffs: [])
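To make the roles of the two parameter vectors concrete, here is a small sketch of how a pinhole-radtan camera maps a 3D point to a pixel. It is my own illustration rather than Kalibr code: the function name `project_pinhole_radtan` is hypothetical, and it assumes that r1 and r2 play the role of the tangential coefficients (often written p1, p2) in the usual radial-tangential formulation. The numbers in the example are made up.

```python
def project_pinhole_radtan(point, intrinsics, distortion_coeffs):
    """Project a 3D point in the camera frame to pixel coordinates.

    intrinsics:        [fu, fv, pu, pv]
    distortion_coeffs: [k1, k2, r1, r2]
    Assumption: r1 and r2 act as the tangential coefficients of the
    usual radial-tangential (Brown-Conrady style) model.
    """
    fu, fv, pu, pv = intrinsics
    k1, k2, r1, r2 = distortion_coeffs
    X, Y, Z = point
    if Z <= 0:
        raise ValueError("point must be in front of the camera")

    # Normalized image coordinates.
    x, y = X / Z, Y / Z
    rho2 = x * x + y * y

    # Radial and tangential distortion on the normalized plane.
    radial = 1.0 + k1 * rho2 + k2 * rho2 * rho2
    xd = x * radial + 2.0 * r1 * x * y + r2 * (rho2 + 2.0 * x * x)
    yd = y * radial + r1 * (rho2 + 2.0 * y * y) + 2.0 * r2 * x * y

    # Focal lengths and principal point map to pixels.
    return fu * xd + pu, fv * yd + pv


# Made-up example values, not the calibration result from this post.
example_intrinsics = [400.0, 400.0, 320.0, 240.0]
no_distortion = [0.0, 0.0, 0.0, 0.0]
print(project_pinhole_radtan((0.0, 0.0, 1.0), example_intrinsics, no_distortion))
print(project_pinhole_radtan((0.5, 0.0, 1.0), example_intrinsics, no_distortion))
```

A point on the optical axis always lands on the principal point (pu, pv), whatever the distortion coefficients are, which is a quick sanity check for any implementation.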

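- Pay special attention to the tagSpacing parameter. Its value is the ratio spacing/tagSize, that is, the gap between adjacent tags divided by the tag edge length. If it is set incorrectly, the optimization fails to converge and produces output like this: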

------------------------------------------------------------------

Progress 99 / 281 Time remaining: 8m 26s [ERROR] [1615619159.104217]: Did not converge in maxIterations... restarting...

[ WARN] [1615619159.108985]: Optimization diverged possibly due to a bad initialization. (Do the models fit the lenses well?)

[ERROR] [1615619159.113029]: Max. attemps reached... Giving up...
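Because tagSpacing is a ratio and not a length, it is easy to enter the measured gap by mistake. The sketch below computes the ratio from two ruler measurements and prints a target file. It is an illustration under assumptions: `aprilgrid_yaml` is a hypothetical helper, the field names (target_type, tagCols, tagRows, tagSize, tagSpacing) are the aprilgrid fields as I understand them from the Kalibr wiki, and the measurements are made up. Check the result against your own apriltag_6_6.yaml.

```python
def aprilgrid_yaml(tag_cols, tag_rows, tag_size_m, gap_m):
    """Return the text of an aprilgrid target file.

    tag_size_m: edge length of one tag, in meters
    gap_m:      gap between two neighboring tags, in meters
    Field names are assumed from the Kalibr aprilgrid format.
    """
    if tag_size_m <= 0 or gap_m <= 0:
        raise ValueError("tag size and gap must be positive")
    # tagSpacing is a unitless ratio, not a length in meters.
    tag_spacing = gap_m / tag_size_m
    lines = [
        "target_type: 'aprilgrid'",
        "tagCols: %d" % tag_cols,
        "tagRows: %d" % tag_rows,
        "tagSize: %.4f" % tag_size_m,
        "tagSpacing: %.4f" % tag_spacing,
    ]
    return "\n".join(lines) + "\n"


# Hypothetical measurements: 30 mm tags with a 9 mm gap on a 6x6 grid.
yaml_text = aprilgrid_yaml(tag_cols=6, tag_rows=6, tag_size_m=0.03, gap_m=0.009)
print(yaml_text)
```

Measuring the printed sheet rather than trusting the nominal size matters, since printers often rescale the page.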

- Make sure the images in the bag file show the complete calibration target.

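- After a successful solve the optimization converges and prints output like the following. Note that this log reports the camera model as pinhole-equi, so it comes from a run with the equidistant distortion model rather than pinhole-radtan.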

importing libraries

Initializing cam0:

Camera model: pinhole-equi

Dataset: mdvncalib.bag

Topic: /mindvision_image/image_raw

Number of images: 345

Extracting calibration target corners

Extracted corners for 281 images (of 345 images)

Projection initialized to: [438.6721643 438.66383647 372.48037062 236.70589066]

Distortion initialized to: [ 0.32703815 0.14118879 -0.00890147 0.01792949]

initializing initial guesses

initialized cam0 to:

projection cam0: [438.6721643 438.66383647 372.48037062 236.70589066]

distortion cam0: [ 0.32703815 0.14118879 -0.00890147 0.01792949]

initializing calibrator

starting calibration...

Progress 24 / 281 Time remaining: 1m 34s

Filtering outliers in all batches...

Progress 25 / 281 Time remaining: 3m 10s

------------------------------------------------------------------

Processed 26 of 281 views with 22 views used

Camera-system parameters:

cam0 (/mindvision_image/image_raw):

type: <class 'aslam_cv.libaslam_cv_python.EquidistantDistortedPinholeCameraGeometry'>

distortion: [ 0.32710466 0.14202389 -0.02049318 0.04095833] +- [0.02432163 0.2025968 0.65607631 0.7078789 ]

projection: [439.20665943 439.10791457 372.88902916 236.72670244] +- [13.76841787 14.13206358 4.94726545 1.02564782]

reprojection error: [0.000000, 0.000000] +- [0.086313, 0.078595]

------------------------------------------------------------------

Progress 50 / 281 Time remaining: 3m 37s

------------------------------------------------------------------

Processed 51 of 281 views with 32 views used

Camera-system parameters:

cam0 (/mindvision_image/image_raw):

type: <class 'aslam_cv.libaslam_cv_python.EquidistantDistortedPinholeCameraGeometry'>

distortion: [ 0.32728665 0.13403398 0.01639567 -0.00727319] +- [0.01774083 0.13251309 0.38793167 0.37707258]

projection: [438.26508681 438.20911525 372.70193784 236.68664074] +- [3.27726915 3.2902056 1.35965128 0.66717352]

reprojection error: [0.000000, 0.000001] +- [0.089265, 0.079946]

------------------------------------------------------------------

Progress 75 / 281 Time remaining: 3m 37s

------------------------------------------------------------------

Processed 76 of 281 views with 34 views used

Camera-system parameters:

cam0 (/mindvision_image/image_raw):

type: <class 'aslam_cv.libaslam_cv_python.EquidistantDistortedPinholeCameraGeometry'>

distortion: [ 0.32775491 0.13017415 0.02602528 -0.01492172] +- [0.01720159 0.12850844 0.37604398 0.36604881]

projection: [438.37321649 438.33436377 372.56378249 236.69749113] +- [2.81898454 2.76730741 0.96622194 0.63440076]

reprojection error: [-0.000000, -0.000001] +- [0.089264, 0.080443]

------------------------------------------------------------------

Progress 100 / 281 Time remaining: 3m 16s

------------------------------------------------------------------

Processed 101 of 281 views with 34 views used

Camera-system parameters:

cam0 (/mindvision_image/image_raw):

type: <class 'aslam_cv.libaslam_cv_python.EquidistantDistortedPinholeCameraGeometry'>

distortion: [ 0.32775491 0.13017415 0.02602528 -0.01492172] +- [0.01720159 0.12850844 0.37604398 0.36604881]

projection: [438.37321649 438.33436377 372.56378249 236.69749113] +- [2.81898454 2.76730741 0.96622194 0.63440076]

reprojection error: [-0.000000, -0.000001] +- [0.089264, 0.080443]

------------------------------------------------------------------

Progress 125 / 281 Time remaining: 2m 55s

------------------------------------------------------------------

Processed 126 of 281 views with 38 views used

Camera-system parameters:

cam0 (/mindvision_image/image_raw):

type: <class 'aslam_cv.libaslam_cv_python.EquidistantDistortedPinholeCameraGeometry'>

distortion: [ 0.32829332 0.12388074 0.05002678 -0.0433495 ] +- [0.01620897 0.12001424 0.34887475 0.33895895]

projection: [438.74401121 438.70356246 372.50240468 236.72751328] +- [2.22118088 2.153459 0.84273684 0.60170743]

reprojection error: [0.000000, 0.000001] +- [0.090030, 0.080678]

------------------------------------------------------------------

Progress 150 / 281 Time remaining: 2m 35s

------------------------------------------------------------------

Processed 151 of 281 views with 40 views used

Camera-system parameters:

cam0 (/mindvision_image/image_raw):

type: <class 'aslam_cv.libaslam_cv_python.EquidistantDistortedPinholeCameraGeometry'>

distortion: [ 0.32832952 0.12354684 0.05046028 -0.04345913] +- [0.01582768 0.11683892 0.3390672 0.32933824]

projection: [438.78012952 438.74173992 372.47651789 236.7721033 ] +- [2.17263531 2.09377601 0.76247689 0.58058732]

reprojection error: [-0.000000, 0.000000] +- [0.090100, 0.080282]

------------------------------------------------------------------

Progress 175 / 281 Time remaining: 2m 9s

------------------------------------------------------------------

Processed 176 of 281 views with 41 views used

Camera-system parameters:

cam0 (/mindvision_image/image_raw):

type: <class 'aslam_cv.libaslam_cv_python.EquidistantDistortedPinholeCameraGeometry'>

distortion: [ 0.32776265 0.12851606 0.03357976 -0.0240838 ] +- [0.01549595 0.11299861 0.32434821 0.31169184]

projection: [438.60870212 438.57624432 372.48892675 236.74587901] +- [1.95983746 1.88260041 0.75585735 0.56960847]

reprojection error: [-0.000000, 0.000000] +- [0.090819, 0.080529]

------------------------------------------------------------------

Progress 200 / 281 Time remaining: 1m 39s

------------------------------------------------------------------

Processed 201 of 281 views with 42 views used

Camera-system parameters:

cam0 (/mindvision_image/image_raw):

type: <class 'aslam_cv.libaslam_cv_python.EquidistantDistortedPinholeCameraGeometry'>

distortion: [ 0.32750805 0.13048132 0.02782932 -0.01846147] +- [0.01518672 0.10944173 0.31093888 0.29595248]

projection: [438.60719688 438.57616461 372.48782507 236.74246186] +- [1.954112 1.87760844 0.7550914 0.56851862]

reprojection error: [0.000000, 0.000000] +- [0.091623, 0.080121]

------------------------------------------------------------------

Progress 225 / 281 Time remaining: 1m 10s

------------------------------------------------------------------

Processed 226 of 281 views with 45 views used

Camera-system parameters:

cam0 (/mindvision_image/image_raw):

type: <class 'aslam_cv.libaslam_cv_python.EquidistantDistortedPinholeCameraGeometry'>

distortion: [ 0.32709825 0.13357998 0.0188562 -0.01022901] +- [0.01442735 0.10229035 0.28661738 0.26949111]

projection: [438.71025296 438.67587013 372.47015576 236.78964676] +- [1.85865775 1.77255795 0.68877392 0.53897615]

reprojection error: [-0.000000, -0.000000] +- [0.092180, 0.080401]

------------------------------------------------------------------

Progress 246 / 281 Time remaining: 45s

Progress 250 / 281 Time remaining: 40s

------------------------------------------------------------------

Processed 251 of 281 views with 45 views used

Camera-system parameters:

cam0 (/mindvision_image/image_raw):

type: <class 'aslam_cv.libaslam_cv_python.EquidistantDistortedPinholeCameraGeometry'>

distortion: [ 0.32709825 0.13357998 0.0188562 -0.01022901] +- [0.01442735 0.10229035 0.28661738 0.26949111]

projection: [438.71025296 438.67587013 372.47015576 236.78964676] +- [1.85865775 1.77255795 0.68877392 0.53897615]

reprojection error: [-0.000000, -0.000000] +- [0.092180, 0.080401]

------------------------------------------------------------------

Progress 275 / 281 Time remaining: 7s

------------------------------------------------------------------

Processed 276 of 281 views with 46 views used

Camera-system parameters:

cam0 (/mindvision_image/image_raw):

type: <class 'aslam_cv.libaslam_cv_python.EquidistantDistortedPinholeCameraGeometry'>

distortion: [ 0.32686643 0.13551974 0.01176498 -0.00221591] +- [0.01410261 0.09889869 0.27452127 0.2560078 ]

projection: [438.70302025 438.66842238 372.47288635 236.78685704] +- [1.85219048 1.76679621 0.68652563 0.53835071]

reprojection error: [0.000000, 0.000000] +- [0.092647, 0.080445]

------------------------------------------------------------------

Progress 281 / 281 Time remaining:

All views have been processed.

Starting final outlier filtering...

Progress 46 / 46 Time remaining:

..................................................................

Calibration complete.

[ WARN] [1615621286.631595]: Removed 29 outlier corners.

Processed 281 images with 46 images used

Camera-system parameters:

cam0 (/mindvision_image/image_raw):

type: <class 'aslam_cv.libaslam_cv_python.EquidistantDistortedPinholeCameraGeometry'>

distortion: [ 0.32686643 0.13551974 0.01176498 -0.00221591] +- [0.01410261 0.09889869 0.27452127 0.2560078 ]

projection: [438.70302025 438.66842238 372.47288635 236.78685704] +- [1.85219048 1.76679621 0.68652563 0.53835071]

reprojection error: [0.000000, 0.000000] +- [0.092647, 0.080445]

Results written to file: camchain-mdvncalib.yaml

Detailed results written to file: results-cam-mdvncalib.txt

/home/gy/.local/lib/python2.7/site-packages/matplotlib/cbook/deprecation.py:107: MatplotlibDeprecationWarning: Passing one of 'on', 'true', 'off', 'false' as a boolean is deprecated; use an actual boolean (True/False) instead.

warnings.warn(message, mplDeprecation, stacklevel=1)

Report written to: report-cam-mdvncalib.pdf

/home/gy/catkin_slam/src/kalibr/Schweizer-Messer/sm_python/python/sm/PlotCollection.py:58: wxPyDeprecationWarning: Using deprecated class PySimpleApp.

app = wx.PySimpleApp()
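When comparing several runs, it can be convenient to pull the reprojection error out of the console output instead of reading it by eye. The following is a minimal sketch that parses one "reprojection error" line in the format shown in the log above. The helper name `parse_reprojection_error` is hypothetical, and the regular expression is written only against the lines in this log, so other Kalibr versions may print something different.

```python
import re

# Matches lines such as:
# reprojection error: [0.000000, 0.000000] +- [0.092647, 0.080445]
NUMBER = r"(-?\d+\.\d+)"
REPROJ_RE = re.compile(
    r"reprojection error: \[%s, %s\] \+- \[%s, %s\]"
    % (NUMBER, NUMBER, NUMBER, NUMBER)
)


def parse_reprojection_error(line):
    """Return ((mean_u, mean_v), (std_u, std_v)) or None if no match."""
    match = REPROJ_RE.search(line)
    if match is None:
        return None
    mean_u, mean_v, std_u, std_v = (float(g) for g in match.groups())
    return (mean_u, mean_v), (std_u, std_v)


# Final line from the log above.
final_line = "reprojection error: [0.000000, 0.000000] +- [0.092647, 0.080445]"
mean, std = parse_reprojection_error(final_line)
print("mean:", mean, "std:", std)
```

The mean is essentially zero in every block of the log, so the standard deviation is the more informative number to track between runs.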

The calibration results are stored in a yaml file, with a more detailed text file alongside it:

Results written to file: camchain-mdvncalib.yaml

Detailed results written to file: results-cam-mdvncalib.txt

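Kalibr also displays a detailed error report, which the log above shows being written to report-cam-mdvncalib.pdf.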
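Many downstream tools expect the intrinsics as a 3x3 camera matrix rather than as a vector. As a small sketch, the final projection vector from the log above can be rearranged like this. The layout follows the common pinhole convention with fu, fv on the diagonal and pu, pv in the last column; `camera_matrix` is a hypothetical helper name, not a Kalibr function.

```python
def camera_matrix(intrinsics):
    """Arrange a pinhole intrinsics vector [fu, fv, pu, pv] as a 3x3 matrix."""
    fu, fv, pu, pv = intrinsics
    return [
        [fu, 0.0, pu],
        [0.0, fv, pv],
        [0.0, 0.0, 1.0],
    ]


# Final "projection" values printed in the calibration log above.
projection = [438.70302025, 438.66842238, 372.47288635, 236.78685704]
K = camera_matrix(projection)
for row in K:
    print(row)
```

The distortion coefficients are kept separately and have to be passed along with the distortion model name, since the same four numbers mean different things under radtan and equi.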

4. Reference links

Official Kalibr toolbox GitHub repository

Fix for the igraph problem

April tag

Learning the Kalibr tools: the camera calibration process
