★★★ This article comes from a featured project in the AI Studio community.

CondLaneNet: Predicting Each Lane Line with Dynamic Convolution Kernels

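This project reproduces CondLaneNet with PPLanedet. PPLanedet currently provides reproductions of several high-performance lane detection algorithms, including SCNN, RESA, RTFormer, UFLD, and CondLaneNet. You are welcome to try it.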

1 Introduction

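In recent years, deep-learning-based lane detection has made great progress, but challenges remain. One of them is detecting lanes as separate instances. A common approach treats different lanes as different classes, but it requires predefining the maximum number of lanes that can be detected. Another approach clusters the output of a semantic segmentation model into individual lanes, but it cannot handle scenes where lanes cross or are densely packed, as shown in the figure below.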

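The authors borrow the conditional instance segmentation strategy from the segmentation field, drawing on CondInst and SOLOv2, adapt it to lane detection, and propose a new lane detection algorithm, CondLaneNet, to solve the lane instance detection problem.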
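To make "conditional" concrete: in CondInst-style heads, each instance gets its own small convolution whose weights are predicted by the network instead of being fixed learned parameters, and that kernel is run over a feature map shared by all instances. The NumPy sketch below shows the simplest case, one dynamic 1x1 convolution over a 64-channel feature map with 2 coordinate channels appended, which gives 66 weights plus 1 bias per instance (the same count noted for the head's default configuration in section 4). The helper names and the use of absolute normalized coordinates are assumptions for illustration; this is not PPLanedet's DynamicMaskHead.

```python
import numpy as np


def coord_channels(h, w):
    """Two extra channels holding normalized x and y coordinates in [-1, 1]."""
    ys = np.linspace(-1.0, 1.0, h)
    xs = np.linspace(-1.0, 1.0, w)
    yy, xx = np.meshgrid(ys, xs, indexing="ij")
    return np.stack([xx, yy])  # shape (2, h, w)


def dynamic_conv1x1(features, params):
    """Apply one instance-specific 1x1 convolution to a shared feature map.

    features: (C, H, W) shared features from the mask branch
    params:   flat vector of length C + 2 + 1: weights for the C feature
              channels and the 2 coordinate channels, followed by one bias
    returns:  (H, W) mask logits for this instance
    """
    c, h, w = features.shape
    x = np.concatenate([features, coord_channels(h, w)], axis=0)  # (C + 2, H, W)
    weight, bias = params[:c + 2], params[c + 2]
    return np.tensordot(weight, x, axes=1) + bias


rng = np.random.default_rng(0)
feats = rng.standard_normal((64, 40, 100))          # one shared feature map
inst_params = rng.standard_normal((3, 64 + 2 + 1))  # 3 lane instances -> 3 kernels
masks = np.stack([dynamic_conv1x1(feats, p) for p in inst_params])
print(masks.shape)  # (3, 40, 100)
```

Because the kernel is just data, another lane only needs another parameter vector, while the shared feature map is computed once for the whole image.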

CondLaneNet can handle complex scenes such as crossing lanes, and it is end-to-end and fast at inference.

2 Overall Model Architecture

Since I am not comfortable typing formulas in markdown, they are shown as images.

The second part is the Proposal Head, whose structure is described as follows.
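At inference time, the proposal step boils down to finding peaks in a predicted heatmap. The head code in section 4 does this in ctdet_decode with a 3x3 max-pooling NMS followed by a score threshold; the NumPy sketch below reproduces that idea for a single-class heatmap. The function names are hypothetical, and the real code also loops over classes and returns coordinates as [x, y].

```python
import numpy as np


def max_pool3x3(heat):
    """3x3 max pooling with stride 1; cells outside the map count as -inf."""
    h, w = heat.shape
    padded = np.full((h + 2, w + 2), -np.inf)
    padded[1:-1, 1:-1] = heat
    windows = [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    return np.max(windows, axis=0)


def decode_proposals(heat, thr=0.5):
    """Return (row, col, score) for every local maximum of `heat` above `thr`."""
    keep = max_pool3x3(heat) == heat  # same test as `hmax == heat` in ctdet_decode
    rows, cols = np.where(keep & (heat > thr))
    return [(int(r), int(c), float(heat[r, c])) for r, c in zip(rows, cols)]


heat = np.zeros((5, 6))
heat[1, 1] = 0.9  # a peak
heat[1, 2] = 0.7  # its neighbour, suppressed by the NMS
heat[3, 4] = 0.6  # a second, separate peak
print(decode_proposals(heat))  # [(1, 1, 0.9), (3, 4, 0.6)]
```

Each surviving peak is a proposal point, and the parameter map is read at that location to obtain the instance's dynamic kernel.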

A single proposal point can correspond to two lane instances, for example where lanes cross. The authors propose the RIM (Recurrent Instance Module) to handle this case.
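One way to picture the recurrent instance module is as a loop that keeps emitting kernel parameters from the same proposal point until it decides that no further lane starts there. The sketch below is a toy, untrained version under loose assumptions: a plain tanh recurrent cell stands in for the recurrent unit used in the paper, the first instance is always emitted, and continue_bias is a hypothetical knob added only so the example's behaviour is predictable.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class ToyRecurrentInstanceModule:
    """Hypothetical, untrained stand-in for RIM: one proposal point -> several kernels."""

    def __init__(self, feat_dim, hidden_dim, num_params, seed=0):
        rng = np.random.default_rng(seed)
        self.w_in = 0.1 * rng.standard_normal((hidden_dim, feat_dim))
        self.w_h = 0.1 * rng.standard_normal((hidden_dim, hidden_dim))
        self.w_state = 0.1 * rng.standard_normal(hidden_dim)                # "continue?" logit
        self.w_param = 0.1 * rng.standard_normal((num_params, hidden_dim))  # kernel params

    def __call__(self, proposal_feat, max_steps=4, continue_bias=0.0):
        """Emit kernel-parameter vectors until the continue probability drops below 0.5."""
        h = np.zeros(self.w_h.shape[0])
        kernels = []
        for _ in range(max_steps):
            h = np.tanh(self.w_in @ proposal_feat + self.w_h @ h)
            kernels.append(self.w_param @ h)  # parameters for one lane instance
            if sigmoid(self.w_state @ h + continue_bias) < 0.5:
                break  # no further lane starts at this proposal point
        return kernels


rim = ToyRecurrentInstanceModule(feat_dim=64, hidden_dim=32, num_params=67)
feat = np.random.default_rng(1).standard_normal(64)  # feature vector at the proposal point
print(len(rim(feat, continue_bias=10.0)))   # 4: keeps going until max_steps
print(len(rim(feat, continue_bias=-10.0)))  # 1: stops after the first lane
```

In a trained model the continue decision is learned, so a crossing point would yield two kernels and an ordinary lane start would yield one.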

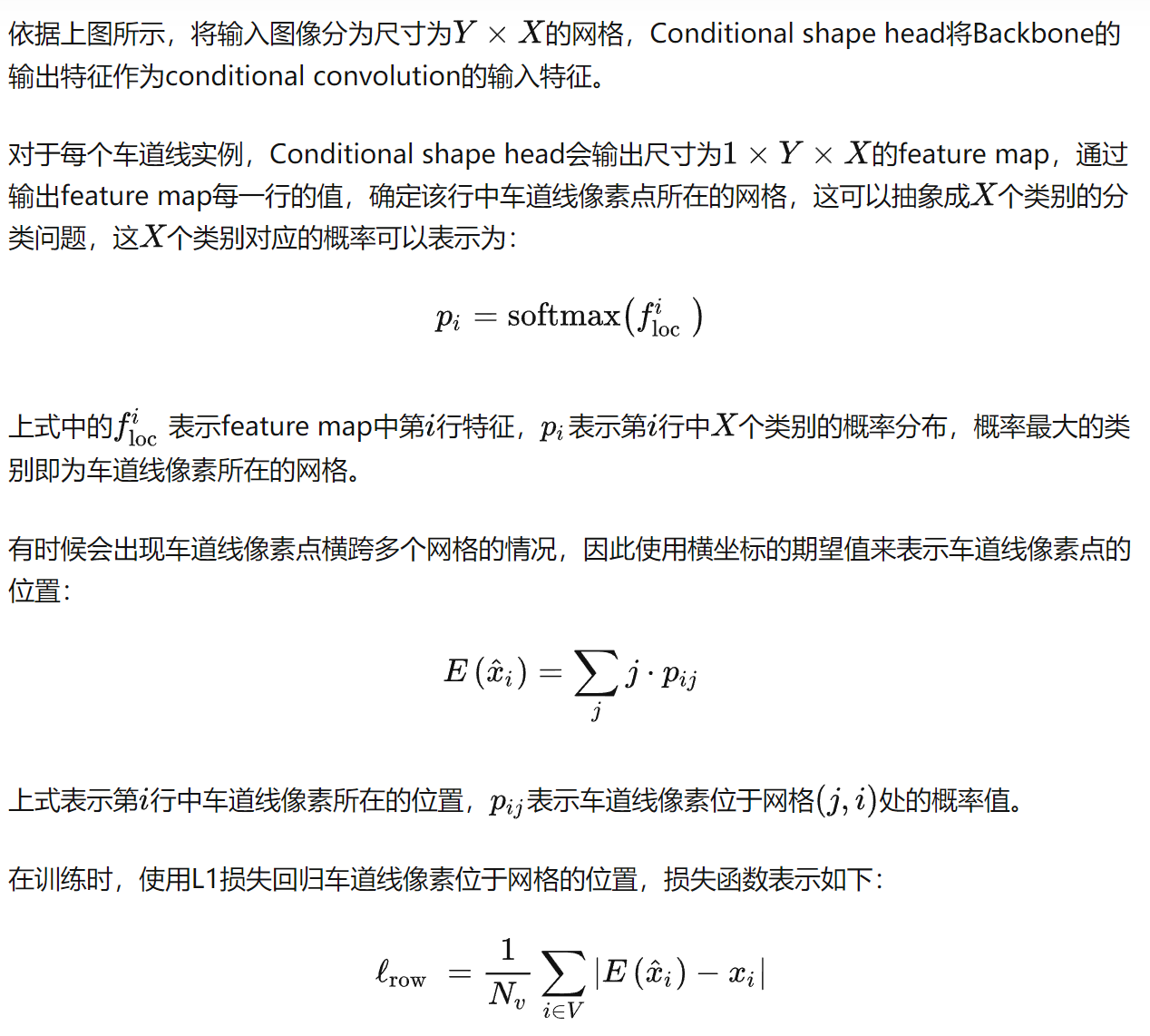

The third part is the Conditional Shape Head, described as follows.

In the Conditional Shape Head, the position of the lane point on each row is determined as follows.
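A common way to read off a lane's position on each row, and the one assumed here, is a row-wise softmax over the columns followed by the expected column index (a soft-argmax). The sketch assumes an (H, W) map of location logits for a single lane instance; the function name is illustrative.

```python
import numpy as np


def row_wise_location(logits):
    """Expected column index per row from an (H, W) map of location logits.

    Each row is normalised with a softmax over its W columns, and the lane's
    horizontal position on that row is the expectation sum_j j * p_ij.
    """
    z = logits - logits.max(axis=1, keepdims=True)  # numerically stable softmax
    p = np.exp(z)
    p /= p.sum(axis=1, keepdims=True)
    cols = np.arange(logits.shape[1])
    return p @ cols  # shape (H,)


logits = np.full((2, 5), -1e9)
logits[0, 1] = 0.0                  # row 0: all mass on column 1
logits[1, 2] = logits[1, 3] = 0.0   # row 1: split evenly between columns 2 and 3
print(row_wise_location(logits))    # [1.  2.5]
```

Unlike a hard argmax, the expectation is differentiable and can land between columns, which gives sub-cell precision.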

Determining the vertical range
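In the head code (section 4), each instance's predicted mask is reshaped so that every row becomes a vector of W values, and self.mlp = MLP(self.feat_width, 64, 2, 2) turns each row into 2 logits. The sketch below mimics that with one shared two-layer MLP applied to every row, then takes the rows classified as "lane present" as the vertical range. The ReLU activation, treating class 1 as "present", the weight shapes, and using the first and last positive rows as the range are all assumptions.

```python
import numpy as np


def range_logits(mask, w1, b1, w2, b2):
    """Per-row 2-class logits for one (H, W) instance mask.

    The same two-layer MLP is applied to every row, which is equivalent to a
    kernel-size-1 Conv1d with W input channels run along the H axis.
    """
    hidden = np.maximum(mask @ w1 + b1, 0.0)  # (H, hidden), ReLU (assumed)
    return hidden @ w2 + b2                   # (H, 2): [absent, present]


def vertical_range(logits):
    """First and last row where "present" wins, or None if no row does."""
    rows = np.flatnonzero(logits.argmax(axis=1) == 1)
    if rows.size == 0:
        return None
    return int(rows.min()), int(rows.max())


rng = np.random.default_rng(0)
H, W, HID = 40, 100, 64
mask = rng.standard_normal((H, W))
w1, b1 = rng.standard_normal((W, HID)), np.zeros(HID)
w2, b2 = rng.standard_normal((HID, 2)), np.zeros(2)
print(range_logits(mask, w1, b1, w2, b2).shape)  # (40, 2)

toy = np.zeros((6, 2))
toy[2:5, 1] = 1.0           # rows 2..4 say "lane present"
print(vertical_range(toy))  # (2, 4)
```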

Finally, the lane representation is described as follows.
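Putting the pieces together, a lane can be represented as one (x, y) point per row inside its vertical range. The sketch below assumes the horizontal position is the row-wise expectation refined by an offset map read at the rounded column, then scaled back to input pixels by the feature map's stride; it ignores the crop correction that get_lanes applies through adjust_result. All names and the down_scale value in the example are hypothetical.

```python
import numpy as np


def lane_points(locations, offsets, v_range, down_scale):
    """Turn row-wise predictions for one lane into (x, y) points in input pixels.

    locations:  (H,) expected column per row, in feature-map units
    offsets:    (H, W) horizontal offset map, in feature-map units (assumed)
    v_range:    (start, end) inclusive rows where the lane exists
    down_scale: stride between the feature map and the input image
    """
    start, end = v_range
    width = offsets.shape[1]
    points = []
    for r in range(start, end + 1):
        c = min(max(int(round(locations[r])), 0), width - 1)  # nearest valid column
        x = locations[r] + offsets[r, c]                      # refine with the offset
        points.append((float(x * down_scale), float(r * down_scale)))
    return points


locations = np.array([0.0, 0.0, 2.0, 3.0])
offsets = np.zeros((4, 5))
offsets[2, 2] = 0.25
print(lane_points(locations, offsets, (2, 3), down_scale=8))
# [(18.0, 16.0), (24.0, 24.0)]
```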

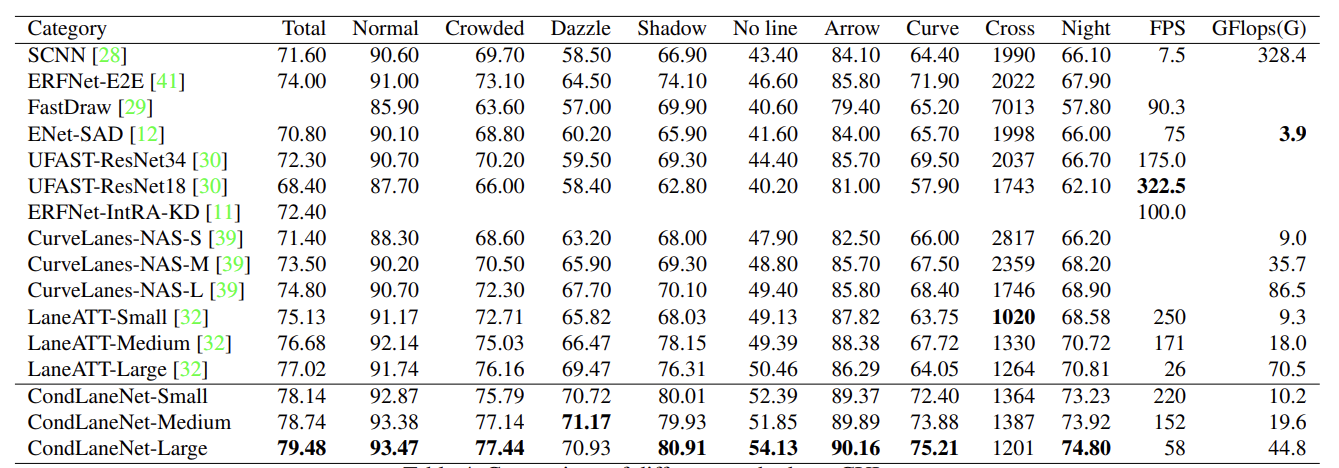

3 Experimental Results

The experimental results on CULane are shown in the figure below.

4 Key Part: the CondLaneNet Head Code

The full code is in pplanedet/model/heads/condlane.py.

```python
import random

import numpy as np
import paddle
import paddle.nn as nn
import paddle.nn.functional as F

# ConvModule, DynamicMaskHead, CtnetHead, MLP, CondLanePostProcessor,
# CondLaneLoss, adjust_result and Lane are defined elsewhere in PPLanedet;
# import them from the repository (their module paths are not shown here).


class CondLaneHead(nn.Layer):

    def __init__(self,
                 heads,
                 in_channels,
                 num_classes,
                 head_channels=64,
                 head_layers=1,
                 reg_branch_channels=None,
                 disable_coords=False,
                 branch_in_channels=288,
                 branch_channels=64,
                 branch_out_channels=64,
                 branch_num_conv=1,
                 norm=True,
                 hm_idx=-1,
                 mask_idx=0,
                 compute_locations_pre=True,
                 location_configs=None,
                 mask_norm_act=True,
                 regression=True,
                 crit_loss=None,
                 crit_kp_loss=None,
                 crit_ce_loss=None,
                 cfg=None):
        super(CondLaneHead, self).__init__()
        self.cfg = cfg
        self.num_classes = num_classes
        self.hm_idx = hm_idx
        self.mask_idx = mask_idx
        self.regression = regression
        if mask_norm_act:
            final_norm_cfg = True
            final_act_cfg = 'relu'
        else:
            final_norm_cfg = None
            final_act_cfg = None

        # Mask branch: the shared feature map the dynamic kernels are applied to
        mask_branch = []
        mask_branch.append(
            ConvModule(sum(in_channels), branch_channels, kernel_size=3, norm=norm))
        for i in range(branch_num_conv):
            mask_branch.append(
                ConvModule(branch_channels, branch_channels, kernel_size=3, norm=norm))
        mask_branch.append(
            ConvModule(branch_channels, branch_out_channels, kernel_size=3,
                       norm=final_norm_cfg, act=final_act_cfg))
        self.add_sublayer('mask_branch', nn.Sequential(*mask_branch))

        # Dynamic parameters per instance (66 weights + 1 bias with the defaults)
        self.mask_weight_nums, self.mask_bias_nums = self.cal_num_params(
            head_layers, disable_coords, head_channels, out_channels=1)
        self.num_mask_params = sum(self.mask_weight_nums) + sum(self.mask_bias_nums)
        self.reg_weight_nums, self.reg_bias_nums = self.cal_num_params(
            head_layers, disable_coords, head_channels, out_channels=1)
        self.num_reg_params = sum(self.reg_weight_nums) + sum(self.reg_bias_nums)
        if self.regression:
            self.num_gen_params = self.num_mask_params + self.num_reg_params
        else:
            self.num_gen_params = self.num_mask_params
            self.num_reg_params = 0

        self.mask_head = DynamicMaskHead(
            head_layers, branch_out_channels, branch_out_channels, 1,
            self.mask_weight_nums, self.mask_bias_nums,
            disable_coords=False,
            compute_locations_pre=compute_locations_pre,
            location_configs=location_configs)
        if self.regression:
            self.reg_head = DynamicMaskHead(
                head_layers, branch_out_channels, branch_out_channels, 1,
                self.reg_weight_nums, self.reg_bias_nums,
                disable_coords=False,
                out_channels=1,
                compute_locations_pre=compute_locations_pre,
                location_configs=location_configs)
        if 'params' not in heads:
            heads['params'] = num_classes * (self.num_mask_params + self.num_reg_params)
        # Proposal head: predicts the heatmap and the per-location kernel parameters
        self.ctnet_head = CtnetHead(
            heads,
            channels_in=branch_in_channels,
            final_kernel=1,
            head_conv=branch_in_channels)

        # MLP over each row of an instance mask, used for the vertical range
        self.feat_width = location_configs['size'][-1]
        self.mlp = MLP(self.feat_width, 64, 2, 2)
        self.post_process = CondLanePostProcessor(
            mask_size=self.cfg.mask_size, hm_thr=0.5, use_offset=True, nms_thr=4)
        self.loss_impl = CondLaneLoss(cfg.loss_weights, 1,
                                      crit_loss=crit_loss,
                                      crit_ce_loss=crit_ce_loss,
                                      crit_kp_loss=crit_kp_loss,
                                      cfg=cfg)

    def loss(self, output, batch):
        img_metas = batch.pop('img_metas')
        return self.loss_impl(output, img_metas, **batch)

    def cal_num_params(self, num_layers, disable_coords, channels, out_channels=1):
        weight_nums, bias_nums = [], []
        for l in range(num_layers):
            if l == num_layers - 1:
                if num_layers == 1:
                    # Single layer: input is the features plus 2 coordinate channels
                    weight_nums.append((channels + 2) * out_channels)
                else:
                    weight_nums.append(channels * out_channels)
                bias_nums.append(out_channels)
            elif l == 0:
                if not disable_coords:
                    weight_nums.append((channels + 2) * channels)
                else:
                    weight_nums.append(channels * channels)
                bias_nums.append(channels)
            else:
                weight_nums.append(channels * channels)
                bias_nums.append(channels)
        return weight_nums, bias_nums

    def parse_gt(self, gts, device=None):
        reg = paddle.to_tensor(gts['reg']).unsqueeze(0)
        reg_mask = paddle.to_tensor(gts['reg_mask']).unsqueeze(0)
        row = paddle.to_tensor(gts['row']).unsqueeze(0).unsqueeze(0)
        row_mask = paddle.to_tensor(gts['row_mask']).unsqueeze(0).unsqueeze(0)
        if 'range' in gts:
            lane_range = paddle.to_tensor(gts['range'])
        else:
            # The original read mask.shape[-2] with mask = None (AttributeError);
            # assume the mask height equals the height of the regression target.
            lane_range = paddle.zeros((1, reg.shape[-2]), dtype='int64')
        return reg, reg_mask, row, row_mask, lane_range

    def parse_pos(self, gt_masks, hm_shape, device, mask_shape=None):
        b = len(gt_masks)
        n = self.num_classes
        hm_h, hm_w = hm_shape[:2]
        if mask_shape is None:
            mask_h, mask_w = hm_shape[:2]
        else:
            mask_h, mask_w = mask_shape[:2]
        poses = []
        regs = []
        reg_masks = []
        rows = []
        row_masks = []
        lane_ranges = []
        labels = []
        num_ins = []
        for idx, m_img in enumerate(gt_masks):
            num = 0
            for m in m_img:
                gts = self.parse_gt(m, device=device)
                reg, reg_mask, row, row_mask, lane_range = gts
                label = m['label']
                num += len(m['points'])
                for p in m['points']:
                    # Flat index into params laid out as (batch, class, h, w)
                    pos = idx * n * hm_h * hm_w + label * hm_h * hm_w + p[1] * hm_w + p[0]
                    poses.append(pos)
                for i in range(len(m['points'])):
                    labels.append(label)
                    regs.append(reg)
                    reg_masks.append(reg_mask)
                    rows.append(row)
                    row_masks.append(row_mask)
                    lane_ranges.append(lane_range)
            if num == 0:
                # No lane in this image: add one dummy instance at a random location
                reg = paddle.zeros((1, 1, mask_h, mask_w))
                reg_mask = paddle.zeros((1, 1, mask_h, mask_w))
                row = paddle.zeros((1, 1, mask_h))
                row_mask = paddle.zeros((1, 1, mask_h))
                lane_range = paddle.zeros((1, mask_h), dtype='int64')
                label = 0
                pos = idx * n * hm_h * hm_w + random.randint(0, n * hm_h * hm_w - 1)
                num = 1
                labels.append(label)
                poses.append(pos)
                regs.append(reg)
                reg_masks.append(reg_mask)
                rows.append(row)
                row_masks.append(row_mask)
                lane_ranges.append(lane_range)
            num_ins.append(num)
        if len(regs) > 0:
            regs = paddle.concat(regs, 1)
            reg_masks = paddle.concat(reg_masks, 1)
            rows = paddle.concat(rows, 1)
            row_masks = paddle.concat(row_masks, 1)
            lane_ranges = paddle.concat(lane_ranges, 0)
        gts = dict(
            gt_reg=regs,
            gt_reg_mask=reg_masks,
            gt_rows=rows,
            gt_row_masks=row_masks,
            gt_ranges=lane_ranges)
        return poses, labels, num_ins, gts

    def ctdet_decode(self, heat, thr=0.1):
        heat = heat.unsqueeze(0)

        def _nms(heat, kernel=3):
            # Keep only the local maxima inside each kernel x kernel window
            pad = (kernel - 1) // 2
            hmax = F.max_pool2d(heat, (kernel, kernel), stride=1, padding=pad)
            keep = (hmax == heat).astype(heat.dtype)
            return heat * keep

        def _format(heat, inds):
            ret = []
            for y, x, c in zip(inds[0], inds[1], inds[2]):
                id_class = c + 1
                coord = [x, y]
                score = heat[y, x, c]
                ret.append({'coord': coord, 'id_class': id_class, 'score': score})
            return ret

        heat_nms = _nms(heat)
        heat_nms = heat_nms.squeeze(0)
        heat_nms = heat_nms.transpose((1, 2, 0)).detach().cpu().numpy()
        inds = np.where(heat_nms > thr)
        seeds = _format(heat_nms, inds)
        return seeds

    def forward_train(self, output, batch):
        img_metas = batch['img_metas']
        img_metas = img_metas._data[0]
        gt_batch_masks = [m['gt_masks'] for m in img_metas]
        hm_shape = img_metas[0]['hm_shape']
        mask_shape = img_metas[0]['mask_shape']
        inputs = output
        pos, labels, num_ins, gts = self.parse_pos(
            gt_batch_masks, hm_shape, inputs[0], mask_shape=mask_shape)
        batch.update(gts)

        x_list = list(inputs)
        f_hm = x_list[self.hm_idx]
        f_mask = x_list[self.mask_idx]
        m_batchsize = f_hm.shape[0]

        z = self.ctnet_head(f_hm)
        hm, params = z['hm'], z['params']
        h_hm, w_hm = hm.shape[2:]
        h_mask, w_mask = f_mask.shape[2:]
        params = params.reshape((m_batchsize, self.num_classes, -1, h_hm, w_hm))
        mask_branch = self.mask_branch(f_mask)
        reg_branch = mask_branch  # the regression head reuses the mask-branch features
        # (batch, class, P, h, w) -> one row of P parameters per heatmap location
        params = params.transpose((0, 1, 3, 4, 2)).reshape((-1, self.num_gen_params))

        pos_tensor = paddle.to_tensor(np.array(pos, dtype=np.int64)).unsqueeze(1)
        pos_tensor = pos_tensor.expand((-1, self.num_gen_params))
        mask_pos_tensor = pos_tensor[:, :self.num_mask_params]
        reg_pos_tensor = pos_tensor[:, self.num_mask_params:]
        if pos_tensor.shape[0] == 0:
            masks = None
            regs = None
            feat_range = None
        else:
            # Gather the dynamic kernel parameters at each ground-truth proposal point
            mask_params = params[:, :self.num_mask_params].take_along_axis(mask_pos_tensor, 0)
            masks = self.mask_head(mask_branch, mask_params, num_ins)
            if self.regression:
                reg_params = params[:, self.num_mask_params:].take_along_axis(reg_pos_tensor, 0)
                regs = self.reg_head(reg_branch, reg_params, num_ins)
            else:
                regs = masks
            feat_range = masks.transpose((0, 1, 3, 2)).reshape((sum(num_ins), w_mask, h_mask))
            feat_range = self.mlp(feat_range)
        batch.update(dict(mask_branch=mask_branch, reg_branch=reg_branch))
        return hm, regs, masks, feat_range, [mask_branch, reg_branch]

    def forward_test(self, inputs, hack_seeds=None, hm_thr=0.5):

        def parse_pos(seeds, batchsize, num_classes, h, w):
            # Flat index into one image's params laid out as (class, h, w)
            pos_list = [[p['coord'], p['id_class'] - 1] for p in seeds]
            poses = []
            for p in pos_list:
                [c, r], label = p
                pos = label * h * w + r * w + c
                poses.append(pos)
            # np.long is missing in some NumPy versions (removed in 1.24); np.int64 is portable
            poses = paddle.to_tensor(np.array(poses, np.int64)).unsqueeze(1)
            return poses

        x_list = list(inputs)
        f_hm = x_list[self.hm_idx]
        f_mask = x_list[self.mask_idx]
        m_batchsize = f_hm.shape[0]

        z = self.ctnet_head(f_hm)
        h_hm, w_hm = f_hm.shape[2:]
        h_mask, w_mask = f_mask.shape[2:]
        hms, params = z['hm'], z['params']
        hms = paddle.clip(F.sigmoid(hms), min=1e-4, max=1 - 1e-4)
        params = params.reshape((m_batchsize, self.num_classes, -1, h_hm, w_hm))

        mask_branchs = self.mask_branch(f_mask)
        reg_branchs = mask_branchs
        params = params.transpose((0, 1, 3, 4, 2)).reshape((m_batchsize, -1, self.num_gen_params))
        batch_size, num_classes, h, w = hms.shape

        out_seeds, out_hm = [], []
        idx = 0
        for hm, param, mask_branch, reg_branch in zip(hms, params, mask_branchs, reg_branchs):
            mask_branch = mask_branch.unsqueeze(0)
            reg_branch = reg_branch.unsqueeze(0)
            seeds = self.ctdet_decode(hm, thr=hm_thr)
            if hack_seeds is not None:
                seeds = hack_seeds
            pos_tensor = parse_pos(seeds, batch_size, num_classes, h, w)
            pos_tensor = pos_tensor.expand((-1, self.num_gen_params))
            num_ins = [pos_tensor.shape[0]]
            mask_pos_tensor = pos_tensor[:, :self.num_mask_params]
            if self.regression:
                reg_pos_tensor = pos_tensor[:, self.num_mask_params:]
            if pos_tensor.shape[0] == 0:
                seeds = []
            else:
                mask_params = param[:, :self.num_mask_params].take_along_axis(mask_pos_tensor, 0)
                masks = self.mask_head(mask_branch, mask_params, num_ins, idx)
                if self.regression:
                    reg_params = param[:, self.num_mask_params:].take_along_axis(reg_pos_tensor, 0)
                    regs = self.reg_head(reg_branch, reg_params, num_ins, idx)
                else:
                    regs = masks
                feat_range = masks.transpose((0, 1, 3, 2)).reshape((sum(num_ins), w_mask, h_mask))
                feat_range = self.mlp(feat_range)
                # Attach the offset map, mask and range prediction to each seed
                for i in range(len(seeds)):
                    seeds[i]['reg'] = regs[0, i:i + 1, :, :]
                    seeds[i]['mask'] = masks[0, i:i + 1, :, :]
                    seeds[i]['range'] = feat_range[i:i + 1]
            out_seeds.append(seeds)
            out_hm.append(hm)
            idx += 1
        output = {'seeds': out_seeds, 'hm': out_hm}
        return output

    def forward(self, x_list, **kwargs):
        if self.training:
            return self.forward_train(x_list, kwargs['batch'])
        return self.forward_test(x_list)

    def get_lanes(self, output):
        out_seeds, out_hm = output['seeds'], output['hm']
        ret = []
        for seeds, hm in zip(out_seeds, out_hm):
            lanes, seed = self.post_process(seeds, self.cfg.mask_down_scale)
            result = adjust_result(
                lanes=lanes,
                crop_bbox=self.cfg.crop_bbox,
                img_shape=(self.cfg.img_height, self.cfg.img_width),
                tgt_shape=(self.cfg.ori_img_h, self.cfg.ori_img_w),
            )
            lanes = []
            for lane in result:
                coord = [[x, y] for x, y in lane]
                # Float dtype so the in-place division works even for integer coordinates
                coord = np.array(coord, dtype=np.float64)
                # Normalise by the original image size
                coord[:, 0] /= self.cfg.ori_img_w
                coord[:, 1] /= self.cfg.ori_img_h
                lanes.append(Lane(coord))
            ret.append(lanes)
        return ret

    def init_weights(self):
        # ctnet_head initializes its weights when it is built
        pass
```
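Two bookkeeping details in this head are easy to misread: how many dynamic parameters each instance needs (cal_num_params), and how parse_pos turns a (batch, class, y, x) proposal into a single row index of the flattened params tensor. The standalone NumPy check below reimplements both outside the class; it is a verification sketch, not part of PPLanedet, and the tensor sizes in it are arbitrary.

```python
import numpy as np


def cal_num_params(num_layers, disable_coords, channels, out_channels=1):
    """Standalone reimplementation of CondLaneHead.cal_num_params."""
    weight_nums, bias_nums = [], []
    for l in range(num_layers):
        if l == num_layers - 1:
            in_ch = channels + 2 if num_layers == 1 else channels
            weight_nums.append(in_ch * out_channels)
            bias_nums.append(out_channels)
        elif l == 0:
            in_ch = channels if disable_coords else channels + 2
            weight_nums.append(in_ch * channels)
            bias_nums.append(channels)
        else:
            weight_nums.append(channels * channels)
            bias_nums.append(channels)
    return weight_nums, bias_nums


def flat_position(batch_idx, label, x, y, num_classes, hm_h, hm_w):
    """Row index computed in parse_pos: idx*n*h*w + label*h*w + y*w + x."""
    return batch_idx * num_classes * hm_h * hm_w + label * hm_h * hm_w + y * hm_w + x


print(cal_num_params(1, False, 64))  # ([66], [1]) -> 67 parameters per instance
print(cal_num_params(2, False, 64))  # ([4224, 64], [64, 1])

# params as produced by the proposal head: (batch, class, P, h, w)
B, n, P, H, W = 2, 1, 67, 4, 5
params = np.random.default_rng(0).standard_normal((B, n, P, H, W))
rows = params.transpose(0, 1, 3, 4, 2).reshape(-1, P)  # same layout as forward_train
pos = flat_position(1, 0, x=3, y=2, num_classes=n, hm_h=H, hm_w=W)
print(np.array_equal(rows[pos], params[1, 0, :, 2, 3]))  # True
```

The index formula is just a row-major ravel over (batch, class, h, w), which is why take_along_axis on the flattened params picks exactly the parameter vector predicted at each proposal point.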

5 Reproduction Steps

```
# Clone PPLanedet
!git clone https://github.com/zkyseu/PPlanedet

# Extract the dataset
!tar -zxvf /home/aistudio/data/data88752/list.tar.gz -C /home/aistudio/data
!rm -rf /home/aistudio/data/data88752/list.tar.gz
!tar -zxvf /home/aistudio/data/data88752/driver_23_30frame_part2.tar.gz -C /home/aistudio/data
!rm -rf /home/aistudio/data/data88752/driver_23_30frame_part2.tar.gz
!tar -zxvf /home/aistudio/data/data88752/driver_100_30frame.tar.gz -C /home/aistudio/data
!rm -rf /home/aistudio/data/data88752/driver_100_30frame.tar.gz
!tar -zxvf /home/aistudio/data/data88752/driver_193_90frame.tar.gz -C /home/aistudio/data
!rm -rf /home/aistudio/data/data88752/driver_193_90frame.tar.gz
!tar -zxvf /home/aistudio/data/data88752/driver_23_30frame_part1.tar.gz -C /home/aistudio/data
!rm -rf /home/aistudio/data/data88752/driver_23_30frame_part1.tar.gz
!tar -zxvf /home/aistudio/data/data88752/laneseg_label_w16.tar.gz -C /home/aistudio/data
!rm -rf /home/aistudio/data/data88752/laneseg_label_w16.tar.gz
!tar -zxvf /home/aistudio/data/data88752/driver_161_90frame.tar.gz -C /home/aistudio/data
!rm -rf /home/aistudio/data/data88752/driver_161_90frame.tar.gz
!tar -zxvf /home/aistudio/data/data88752/driver_37_30frame.tar.gz -C /home/aistudio/data
!rm -rf /home/aistudio/data/data88752/driver_37_30frame.tar.gz
!tar -zxvf /home/aistudio/data/data88752/driver_182_30frame.tar.gz -C /home/aistudio/data
!rm -rf /home/aistudio/data/data88752/driver_182_30frame.tar.gz

%cd PPlanedet

# Install the required libraries
!pip install -r requirements.txt --user

# Start training
!python tools/train.py -c configs/condlane/resnet50_culane.py
```

6 Reproduced Accuracy

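Reproduced on CULane, CondLaneNet reaches an accuracy of 79.69%, exceeding the result reported in the paper. You can run the code below to try it yourself.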

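```
# Download the pretrained weights
!wget https://github.com/zkyseu/PPlanedet/releases/download/CondLaneNewt/model.pd
```

Evaluate the trained weights:

```
# If this fails, check that the path to the weights is correct
!python tools/train.py -c configs/condlane/resnet50_culane.py --load model.pd --evaluate-only
```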

7 Summary

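The authors borrow the conditional instance segmentation strategy from the segmentation field and propose CondLaneNet for lane detection, addressing the challenges of lane instance detection and crossing lanes.

CondLaneNet consists of a Proposal Head and a Conditional Shape Head. The Proposal Head predicts lane instances together with the instance-level dynamic convolution kernel parameters, and the Conditional Shape Head predicts the shape of each lane instance. CondLaneNet handles lane instance segmentation and crossing-lane detection well and runs in real time.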


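CondLaneNet is a deep-learning algorithm for lane detection that tackles instance detection and crossing lanes. The model consists of a Proposal Head and a Conditional Shape Head, which dynamically predict lane instances and their shapes. On the CULane dataset, the reproduced model reaches an accuracy of 79.69%, better than the result in the original paper, and runs in real time.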
