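When a Caffe model finishes training, it produces a *.caffemodel file. At run time Caffe reads the trained weight parameters directly from this file. If you open the file in a third-party program, however, you only see unreadable binary data, because it is a serialized protobuf `NetParameter` message.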

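What do we gain by exporting the model's parameters into an editable form?

(1) It makes porting the network to an FPGA platform easier.

(2) You can start from a model that someone else has already trained and train your own model with no training data at all, by making your model fit the weight distribution of the other model. In other words, you use one model to train another.

(3) You can prune the network, speed it up, and apply similar optimizations.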
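Point (3) becomes concrete once the weights are available as plain floats. The sketch below shows magnitude pruning, one simple pruning scheme chosen here as an assumption (the article does not say which method it has in mind): every weight whose absolute value falls below a threshold is set to zero. The function names `prune_by_magnitude` and `sparsity` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Magnitude pruning (hypothetical helper): zero every non-zero weight whose
// absolute value is below `threshold`. Returns how many weights were zeroed.
std::size_t prune_by_magnitude(std::vector<float>& weights, float threshold)
{
    assert(threshold >= 0.0f);
    std::size_t pruned = 0;
    for (float& w : weights) {
        if (w != 0.0f && std::fabs(w) < threshold) {
            w = 0.0f;
            ++pruned;
        }
    }
    return pruned;
}

// Fraction of weights that are exactly zero, i.e. the resulting sparsity.
double sparsity(const std::vector<float>& weights)
{
    if (weights.empty()) return 0.0;
    std::size_t zeros = 0;
    for (float w : weights)
        if (w == 0.0f) ++zeros;
    return static_cast<double>(zeros) / static_cast<double>(weights.size());
}
```

In practice the threshold would be tuned per layer and the pruned network fine-tuned afterwards; the sketch only shows the core operation on one exported weight array.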

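What do we gain by visualizing the model's feature maps and weights?

(1) It helps you understand the features a convolutional network learns. A well-trained network produces feature maps with clear, regular structure, so looking at them is an indirect way to check how training is going.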

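The analysis below uses the LeNet-5 network structure, but the same approach applies to other networks as well.

Core code:

(1) Export only the weights, without displaying them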

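```cpp
#include <cstdio>
#include <string>
#include "caffe/proto/caffe.pb.h"

using namespace caffe;
using std::string;

// Helpers defined in the full program (not shown in this article);
// the signatures are inferred from how they are called below.
bool loadCaffemodel(const char* filename, NetParameter* net);
void writeData(FILE* f, const float* data, int n);

void parse_caffemodel(string caffemodel, string outtxt)
{
    printf("%s\n", caffemodel.c_str());
    NetParameter net;
    bool success = loadCaffemodel(caffemodel.c_str(), &net);
    if (!success){
        printf("Failed to read: %s\n", caffemodel.c_str());
        return;
    }
    FILE* fmodel = fopen(outtxt.c_str(), "wb");
    if (!fmodel){
        printf("Cannot open output file: %s\n", outtxt.c_str());
        return;
    }
    // Models saved in the old V1 format keep their layers in the deprecated
    // `layers` field instead, so this loop would find nothing for them.
    for (int i = 0; i < net.layer_size(); ++i){
        const LayerParameter& param = net.layer(i);
        int n = param.blobs_size();          // 0 for layers without parameters
        if (n){
            const BlobProto& blob = param.blobs(0);   // blobs(0): weights
            printf("layer: %s weight(%d)", param.name().c_str(), blob.data_size());
            fprintf(fmodel, "\nlayer: %s weight(%d)\n", param.name().c_str(), blob.data_size());
            writeData(fmodel, blob.data().data(), blob.data_size());
            if (n > 1){
                const BlobProto& bias = param.blobs(1);   // blobs(1): biases
                printf(" bias(%d)", bias.data_size());
                fprintf(fmodel, "\nlayer: %s bias(%d)\n", param.name().c_str(), bias.data_size());
                writeData(fmodel, bias.data().data(), bias.data_size());
            }
            printf("\n");
        }
    }
    fclose(fmodel);
}
```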
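The `loadCaffemodel` and `writeData` helpers called in `parse_caffemodel` are not shown in the article (they are in the full download). As an assumption, `writeData` could simply print the floats as text; the version below is a hypothetical sketch, paired with an equally hypothetical `readData` that parses the values back, so an edited file can be reloaded. Printing with `%.9g` keeps 9 significant digits, which is enough for any `float` to round-trip exactly. With Caffe available, `loadCaffemodel` could plausibly wrap `caffe::ReadProtoFromBinaryFile` from `caffe/util/io.hpp`, but that is likewise an assumption.

```cpp
#include <cassert>
#include <cstdio>
#include <vector>

// Hypothetical writeData: n floats as text, 8 per line, 9 significant digits
// (enough for an exact float round-trip).
void writeData(FILE* f, const float* data, int n)
{
    for (int i = 0; i < n; ++i)
        std::fprintf(f, "%.9g%c", data[i], (i % 8 == 7 || i == n - 1) ? '\n' : ' ');
}

// Hypothetical inverse: read up to n whitespace-separated floats from f.
std::vector<float> readData(FILE* f, int n)
{
    assert(n >= 0);
    std::vector<float> out;
    out.reserve(static_cast<std::size_t>(n));
    float v;
    while (static_cast<int>(out.size()) < n && std::fscanf(f, "%f", &v) == 1)
        out.push_back(v);
    return out;
}
```

Reading the whole exported file would additionally require skipping the `layer: ...` header lines that `parse_caffemodel` writes before each blob.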

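(2) Weight visualization

```cpp
#include <algorithm>
#include <cmath>
#include <iostream>
#include <string>
#include <vector>
#include <caffe/caffe.hpp>
#include <opencv2/opencv.hpp>

using namespace caffe;
using namespace cv;
using std::string;
using std::vector;

// weights_layer_num indexes net.params(), which lists each layer's weight blob
// followed by its bias blob (for LeNet: 0 = conv1 weights, 2 = conv2 weights).
cv::Mat visualize_weights(string prototxt, string caffemodel, int weights_layer_num)
{
    // glog may be initialized only once per process; drop this if main() already does it.
    ::google::InitGoogleLogging("0");
#ifdef CPU_ONLY
    Caffe::set_mode(Caffe::CPU);
#else
    Caffe::set_mode(Caffe::GPU);
#endif
    Net<float> net(prototxt, TEST);
    net.CopyTrainedLayersFrom(caffemodel);
    const vector<shared_ptr<Blob<float> > >& params = net.params();
    std::cout << "Shapes of the parameter blobs:\n";
    for (size_t i = 0; i < params.size(); ++i)
        std::cout << params[i]->shape_string() << std::endl;

    CHECK_LT(weights_layer_num, (int)params.size());
    const shared_ptr<Blob<float> >& weights = params[weights_layer_num];
    CHECK_EQ(weights->num_axes(), 4) << "not a convolution weight blob (a bias?)";
    int width = weights->shape(3);     // kernel width
    int height = weights->shape(2);    // kernel height
    int channel = weights->shape(1);   // input channels
    int num = weights->shape(0);       // number of kernels
    // Grid of (1 + sqrt(num)) tiles per side, large enough for num kernels
    int imgHeight = (int)(1 + std::sqrt((double)num)) * height;
    int imgWidth = (int)(1 + std::sqrt((double)num)) * width;
    Mat img(imgHeight, imgWidth, CV_8UC3, Scalar(0, 0, 0));

    // Global min/max over the whole blob, used to map values to [0, 255]
    const float* tmpValue = weights->cpu_data();
    float maxValue = tmpValue[0], minValue = tmpValue[0];
    for (int i = 0; i < weights->count(); i++){
        maxValue = std::max(maxValue, tmpValue[i]);
        minValue = std::min(minValue, tmpValue[i]);
    }
    float range = (maxValue > minValue) ? (maxValue - minValue) : 1.0f;

    int kk = 0;   // index of the kernel being drawn
    for (int y = 0; y < imgHeight; y += height){
        for (int x = 0; x < imgWidth; x += width){
            if (kk >= num)
                continue;
            Mat roi = img(Rect(x, y, width, height));
            for (int i = 0; i < height; i++){
                for (int j = 0; j < width; j++){
                    // Vec3b has 3 slots: show at most the first 3 input channels
                    for (int k = 0; k < std::min(channel, 3); k++){
                        float value = weights->data_at(kk, k, i, j);
                        roi.at<Vec3b>(i, j)[k] = (uchar)((value - minValue) / range * 255);
                    }
                }
            }
            ++kk;
        }
    }
    return img;
}
```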
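The layout logic in `visualize_weights` (tiles placed row by row in a grid, one global min/max range mapped to 0 to 255) does not depend on Caffe or OpenCV. The standard-library sketch below isolates it; `tile_maps` and `GridImage` are hypothetical names, and the input is assumed to be `count` maps of `h x w` floats stored one after another. It uses `ceil(sqrt(count))` tiles per side, the smallest square grid that fits, which is slightly tighter than the `(int)(1 + sqrt(num))` used above, and it rounds to the nearest gray level instead of truncating.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct GridImage {
    int rows = 0, cols = 0;             // image size in pixels
    std::vector<std::uint8_t> pixels;   // row-major, rows * cols
};

// Tile `count` maps of size h x w into a square grid, normalizing all values
// with one global min/max range to [0, 255]. Unused tiles stay 0 (black).
GridImage tile_maps(const std::vector<float>& maps, int count, int h, int w)
{
    assert(count > 0 && h > 0 && w > 0);
    assert(maps.size() == static_cast<std::size_t>(count) * h * w);
    int side = static_cast<int>(std::ceil(std::sqrt(static_cast<double>(count))));
    GridImage img;
    img.rows = side * h;
    img.cols = side * w;
    img.pixels.assign(static_cast<std::size_t>(img.rows) * img.cols, 0);

    auto mm = std::minmax_element(maps.begin(), maps.end());
    float lo = *mm.first;
    float range = *mm.second - lo;

    for (int k = 0; k < count; ++k) {
        int ty = k / side, tx = k % side;   // tile row and column
        for (int i = 0; i < h; ++i)
            for (int j = 0; j < w; ++j) {
                float v = maps[(static_cast<std::size_t>(k) * h + i) * w + j];
                float t = range > 0.0f ? (v - lo) / range : 0.0f;  // constant input -> 0
                img.pixels[static_cast<std::size_t>(ty * h + i) * img.cols + tx * w + j] =
                    static_cast<std::uint8_t>(t * 255.0f + 0.5f);
            }
    }
    return img;
}
```

For LeNet's conv1 (20 kernels of 5 x 5), this gives a 5 x 5 grid, i.e. a 25 x 25 pixel image.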

(3) Feature map visualization

```cpp
// Member added to a Classifier class that appears to be adapted from Caffe's
// examples/cpp_classification/classification.cpp (net_, num_channels_,
// input_geometry_, WrapInputLayer and Preprocess come from there).
cv::Mat Classifier::visualize_featuremap(const cv::Mat& img, string layer_name)
{
    // Run one image through the network
    Blob<float>* input_layer = net_->input_blobs()[0];
    input_layer->Reshape(1, num_channels_, input_geometry_.height, input_geometry_.width);
    net_->Reshape();
    std::vector<cv::Mat> input_channels;
    WrapInputLayer(&input_channels);
    Preprocess(img, &input_channels);
    net_->Forward();

    std::cout << "Blobs in the network:\n";
    const std::vector<shared_ptr<Blob<float> > >& blobs = net_->blobs();
    const std::vector<string>& blob_names = net_->blob_names();
    std::cout << blobs.size() << " " << blob_names.size() << std::endl;
    for (size_t i = 0; i < blobs.size(); i++)
        std::cout << blob_names[i] << " " << blobs[i]->shape_string() << std::endl;
    std::cout << std::endl;

    // CHECK (glog) stays active in release builds, unlike assert
    CHECK(net_->has_blob(layer_name)) << "Unknown blob: " << layer_name;
    shared_ptr<Blob<float> > featureBlob = net_->blob_by_name(layer_name);
    std::cout << "Shape of the feature maps for the test image: "
              << featureBlob->shape_string() << std::endl;

    // Global min/max over all channels, used to map values to [0, 255]
    const float* tmpValue = featureBlob->cpu_data();
    float maxValue = tmpValue[0], minValue = tmpValue[0];
    for (int i = 0; i < featureBlob->count(); i++){
        maxValue = std::max(maxValue, tmpValue[i]);
        minValue = std::min(minValue, tmpValue[i]);
    }
    float range = (maxValue > minValue) ? (maxValue - minValue) : 1.0f;

    int width = featureBlob->shape(3);     // feature map width
    int height = featureBlob->shape(2);    // feature map height
    int channel = featureBlob->shape(1);   // number of feature maps
    // shape(0) is the batch size, 1 here

    int imgHeight = (int)(1 + std::sqrt((double)channel)) * height;
    int imgWidth = (int)(1 + std::sqrt((double)channel)) * width;
    // Named `out` because `img` is already the input parameter
    cv::Mat out(imgHeight, imgWidth, CV_8UC1, cv::Scalar(0));

    int kk = 0;   // index of the channel being drawn
    for (int y = 0; y < imgHeight; y += height){
        for (int x = 0; x < imgWidth; x += width){
            if (kk >= channel)
                continue;
            cv::Mat roi = out(cv::Rect(x, y, width, height));
            for (int i = 0; i < height; i++){
                for (int j = 0; j < width; j++){
                    float value = featureBlob->data_at(0, kk, i, j);
                    roi.at<uchar>(i, j) = (uchar)((value - minValue) / range * 255);
                }
            }
            kk++;
        }
    }
    return out;
}
```
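Both visualization functions read values through `Blob::data_at(n, c, h, w)`. Caffe stores a blob as one contiguous array in N x C x H x W order, and `data_at` indexes `cpu_data()` at `((n*C + c)*H + h)*W + w`. The sketch below reproduces that indexing on a plain `std::vector` so the layout is easy to check; `nchw_offset` and `extract_channel` are hypothetical helpers, not Caffe functions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Flat index of element (n, c, h, w) in a contiguous NCHW buffer with
// C channels of size H x W (the same formula Caffe's Blob::offset uses).
inline int nchw_offset(int n, int c, int h, int w, int C, int H, int W)
{
    return ((n * C + c) * H + h) * W + w;
}

// Copy channel c of image n out of an NCHW buffer as one H x W map.
std::vector<float> extract_channel(const std::vector<float>& blob,
                                   int n, int c, int C, int H, int W)
{
    std::vector<float> map(static_cast<std::size_t>(H) * W);
    for (int h = 0; h < H; ++h)
        for (int w = 0; w < W; ++w)
            map[static_cast<std::size_t>(h) * W + w] =
                blob[static_cast<std::size_t>(nchw_offset(n, c, h, w, C, H, W))];
    return map;
}
```

For example, a conv2 output of shape 1 x 50 x 8 x 8 (what the stock Caffe MNIST LeNet produces for a 28 x 28 input) is 3200 floats, and `extract_channel(blob, 0, k, 50, 8, 8)` returns the k-th 8 x 8 feature map, the same values the loop above reads with `data_at(0, kk, i, j)`.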

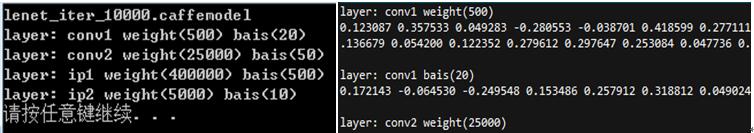

Results:

(1)

```cpp
string caffemodel = "lenet_iter_10000.caffemodel";
string outtxt = "lenet.txt";
parse_caffemodel(caffemodel, outtxt);
```

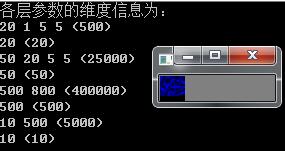

(2)

```cpp
string prototxt = "lenet.prototxt";
string caffemodel = "lenet_iter_10000.caffemodel";
int weights_layer_num = 0;   // index into net.params(): 0 = conv1 weights
Mat image = visualize_weights(prototxt, caffemodel, weights_layer_num);
imshow("weights", image);
waitKey(0);
```

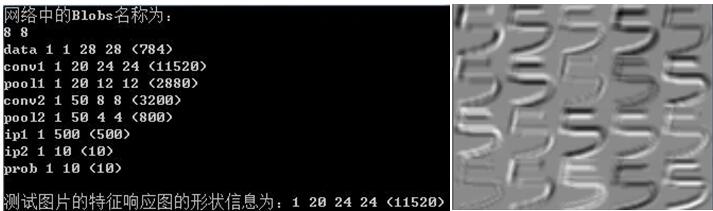

(3)

```cpp
::google::InitGoogleLogging(argv[0]);
string model_file = "lenet.prototxt";
string trained_file = "lenet_iter_10000.caffemodel";
Classifier classifier(model_file, trained_file);
string file = "5.jpg";
cv::Mat img = cv::imread(file, -1);
CHECK(!img.empty()) << "Unable to decode image " << file;
cv::Mat feature_map = classifier.visualize_featuremap(img, "conv2");
imshow("feature_map", feature_map);
cv::waitKey(0);
```

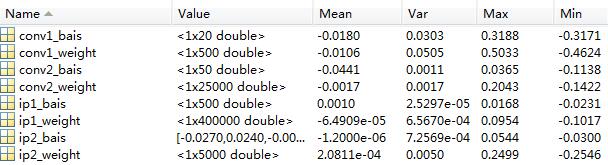

Importing the weights into MATLAB shows that they roughly follow a distribution with zero mean and a very small variance.
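The same check can be done in C++ without MATLAB once a layer's weights are in a flat array (for example the values `parse_caffemodel` reads from `blob.data()`). The sketch below uses Welford's one-pass algorithm for the mean and population variance and also records the value range; `weight_stats` and `WeightStats` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct WeightStats {
    double mean = 0.0;
    double variance = 0.0;   // population variance
    float min = 0.0f, max = 0.0f;
};

// Summarize a flat weight array with Welford's one-pass mean/variance update.
WeightStats weight_stats(const std::vector<float>& w)
{
    WeightStats s;
    if (w.empty()) return s;
    s.min = s.max = w[0];
    double m2 = 0.0;   // running sum of squared deviations
    for (std::size_t i = 0; i < w.size(); ++i) {
        double x = w[i];
        double delta = x - s.mean;
        s.mean += delta / static_cast<double>(i + 1);
        m2 += delta * (x - s.mean);
        s.min = std::min(s.min, w[i]);
        s.max = std::max(s.max, w[i]);
    }
    s.variance = m2 / static_cast<double>(w.size());
    return s;
}
```

The one-pass form avoids storing a second copy of the data and is numerically more stable than computing the sum of squares and the squared sum separately.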

Full program download: http://download.csdn.net/detail/qq_14845119/9895412
