课程Github地址:https://github.com/wrk666/VSLAM-Course/tree/master

1. 内容

作业已完成,这里分享一下部分代码。

2. T2

1. ORB提取

void computeAngle(const cv::Mat &image, vector<cv::KeyPoint> &keypoints) {

int half_patch_size = 8;

// int half_boundry = 16;

int bad_points =0; //角点中不能计算角度的点

for (auto &kp : keypoints) {

// START YOUR CODE HERE (~7 lines)

int u=kp.pt.x, v = kp.pt.y;

if(u>=half_patch_size && v>=half_patch_size && u+half_patch_size<=image.cols && v+half_patch_size<=image.rows)

{

float m01=0, m10=0;

for(int i=u-half_patch_size; i < u + half_patch_size; ++i) //x方向遍历16个点(右)

for(int j=v-half_patch_size; j < v + half_patch_size; ++j) //y方向遍历16个点(下)

{

m10 +=i * image.at<uchar>(j, i);

m01 +=j * image.at<uchar>(j, i);

}

//计算角度(弧度制)并转换为角度

kp.angle = (float)std::atan(m01/m10) * 180/pi ; //或者std::atan2(m01, m10)*180/pi;

}

// END YOUR CODE HERE

}

return;

}

2. ORB描述

void computeORBDesc(const cv::Mat &image, vector<cv::KeyPoint> &keypoints, vector<DescType> &desc)

{

int half_patch_size = 8, bad_points = 0;

for (auto &kp : keypoints)

{

int u = kp.pt.x, v = kp.pt.y;

DescType d(256, false); //256位描述子

//STEP1:检查是否越界

if(u>=half_patch_size && v>=half_patch_size && u+half_patch_size<=image.cols && v+half_patch_size<=image.rows)

{

//STEP2:若不越界,则根据pattern来采集点,结合特征点的方向角theta来旋转点p,q->p',q',计算p',q'的坐标,并比较大小,结果作为该位描述子的结果(0 or 1)

for (int i = 0; i < 256; i++)

{

// START YOUR CODE HERE (~7 lines)

//寻找取点pattern的下标

cv::Point2f p(ORB_pattern[i * 4], ORB_pattern[i * 4 + 1]);

cv::Point2f q(ORB_pattern[i * 4 + 2], ORB_pattern[i * 4 + 3]);

//使用sin,cos, 角度转换为弧度 *pi/180

double theta = kp.angle * pi / 180;

double cos_theta = cos(theta) , sin_theta = sin(theta);

int u_p_ = (int)(cos_theta * p.x - sin_theta * p.y) + u;

int v_p_ = (int)(sin_theta * p.x + cos_theta * p.y) + v;

int u_q_ = (int)(cos_theta * q.x - sin_theta * q.y) + u;

int v_q_ = (int)(sin_theta * q.x + cos_theta * q.y) + v;

//判断根据关键点得到的经过旋转的p、q是否出界,若出界,则该描述子清空作废

if(u_p_<0 || v_p_<0 || u_p_ >image.cols || v_p_ > image.rows || u_q_<0 || v_q_<0 || u_q_ >image.cols || v_q_ > image.rows)

{

d = {};

break; //跳出描述子循环

}

d[i] = image.at<uchar>(v_p_, u_p_) > image.at<uchar>(v_q_, u_q_) ? false : true; //前者大取false,后者大取true,vector随机访问器,不够快,但是掌握算法是关键

}

}

//越界则不使用

else

{

++bad_points;

d.clear();

};

desc.push_back(d);

// END YOUR CODE HERE

}

cout << "bad/total: " << bad_points << "/" << desc.size() << endl;

return;

}

3. 暴力匹配

// brute-force matching

void bfMatch(const vector<DescType> &desc1, const vector<DescType> &desc2,

vector<cv::DMatch> &matches) {

int d_max = 50;

// START YOUR CODE HERE (~12 lines)

// find matches between desc1 and desc2. 核心方法是按位异或^,但是这里是按照vector<bool>的每一位来判断

for(size_t i1=0; i1<desc1.size(); ++i1)

{

if(desc1[i1].empty()) continue;

cv::DMatch m{i1, 0, 256}; //DMatch(int _queryIdx, int _trainIdx, float _distance):_queryIdx:为第i1个点进行匹配; _trainIdx:目标点匹配点为第0个;_distance:最小距离初始化为256

for(size_t i2=0;i2<desc2.size();++i2)

{

if(desc2[i2].empty()) continue;

int hanming_distance = 0;

for(unsigned int j=0; j!=256; ++j) //暴力匹配desc1和desc2中所有的关键点

{

hanming_distance += desc1[i1][j] ^ desc2[i2][j]; //不相等时距离增加

}

if(hanming_distance<d_max && hanming_distance<m.distance) //如果distance符合阈值且小于现在的distance,更新最小distance和最佳匹配点

{

m.queryIdx = i1; //被匹配点

m.trainIdx = i2; //更新最佳匹配点

m.distance = hanming_distance; //更新最小distance

}

}

//匹配成功的

if(m.distance<d_max)

matches.push_back(m);

}

// END YOUR CODE HERE

// for (auto &m : matches) {

// cout << m.queryIdx << ", " << m.trainIdx << ", " << m.distance << endl;

// }

return;

}

4. 多线程ORB

结果如图

这部分主要按照群里助教给的文档安装了gcc,g++和tbb库(如果有需要的话,可以评论区跟我说),之前没安装过gcc,所以直接装上9.4版本,无需版本管理。tbb库安装时主要碰到软链接的问题,SLAM的ORB程序中需要libtbb.so.2的软链接,但是安装时只创建了libtbb.so的软链接,所以多创建一个即可。

重点是多线程程序的编写,在遍历所有特征点时,使用多线程来加速。补充一些关于多线程的知识

for_each是按照迭代器来遍历元素,从C++17开始添加了执行策略ExecutionPolicy,有3种策略:

- std::execution::seq 使算法在单个线程中以确定性顺序执行,即不并行且不并发;

- std::execution::par 使算法在多个线程中执行,并且线程各自具有自己的顺序任务,即并行但不并发;

- std::execution::par_unseq 使算法在多个线程中执行,并且线程可以具有并发的多个任务,即并行和并发。

first和last分别是起始和终止的迭代器,最后一个是函数f(程序里使用了lambda表达式),接受[first,last)内的迭代器。函数执行策略从C++17开始可以选择,在这里我们使用par_unseq(并行和并发),

需要#include <execution>

下面的加锁部分很关键,当时卡了有一会儿:

计算Angle时不用关心数据冲突问题,因为都是使用指针进行访问,顺序无所谓,但是在计算描述子时,我们需要把计算出来的描述子push_back到vector中,而vector是顺序容器,并行且并发不能保证push_back的顺序,在多线程环境下,对共享的资源的插入会出现不可预知的错误。所以需要加锁保护,在C++11中新增了<mutex>,我们#include <mutex>并加锁(参考这里),这点至关重要,否则计算描述子只能在单线程seq中计算,最终各部分核心代码如Listing4所示,运行结果如图2.3所示。(不明白的是为什么开始计算多线程会比较慢,紧接着再调用就较快了,是否是因为有缓存?)

void computeAngleMT(const cv::Mat &image, vector<cv::KeyPoint> &keypoints)

{

int half_patch_size = 8;

std::mutex m;

//or each设计的初衷就是要遍历each one,所以只能遍历完,

std::for_each(std::execution::par_unseq, keypoints.begin(), keypoints.end(),

[&half_patch_size, &image, &m](auto &kp)

{

// START YOUR CODE HERE

int u=kp.pt.x, v = kp.pt.y;

if(u>=half_patch_size && v>=half_patch_size && u+half_patch_size<=image.cols && v+half_patch_size<=image.rows)

{

float m01=0, m10=0;

for(int i=u-half_patch_size; i < u + half_patch_size; ++i) //x方向遍历16个点(右)

for(int j=v-half_patch_size; j < v + half_patch_size; ++j) //y方向遍历16个点(下)

{

m10 +=i * image.at<uchar>(j, i);

m01 +=j * image.at<uchar>(j, i);

}

std::lock_guard<std::mutex> guard(m);//代替m.lock; m.unlock();

//计算角度(弧度制)并转换为角度

kp.angle = (float)std::atan(m01/m10) * 180/pi ; //或者std::atan2(m01, m10)*180/pi;

}

// END YOUR CODE HERE

});

return;

}

void computeORBDescMT(const cv::Mat &image, vector<cv::KeyPoint> &keypoints, vector<DescType> &desc)

{

// START YOUR CODE HERE (~20 lines)

std::mutex m;

std::for_each(std::execution::par_unseq, keypoints.begin(), keypoints.end(),

[&image, &desc, &m] (auto& kp) //lambda表达式,这个function函数接受迭代器

{

int u = kp.pt.x, v = kp.pt.y; //迭代器要使用->来访问成员(将解引用和成员访问.结合在一起)

DescType d(256, false); //256位描述子

//STEP1:检查是否越界

if(u>=8 && v>=8 && u+8<=image.cols && v+8<=image.rows)

{

//STEP2:若不越界,则根据pattern来采集点,结合特征点的方向角theta来旋转点p,q->p',q',计算p',q'的坐标,并比较大小,结果作为该位描述子的结果(0 or 1)

for (int i = 0; i < 256; i++)

{

// START YOUR CODE HERE (~7 lines)

//寻找取点pattern的下标

cv::Point2f p(ORB_pattern[i * 4], ORB_pattern[i * 4 + 1]);

cv::Point2f q(ORB_pattern[i * 4 + 2], ORB_pattern[i * 4 + 3]);

//使用sin,cos, 角度转换为弧度 *pi/180

double theta = kp.angle * pi / 180;

double cos_theta = cos(theta) , sin_theta = sin(theta);

int u_p_ = (int)(cos_theta * p.x - sin_theta * p.y) + u;

int v_p_ = (int)(sin_theta * p.x + cos_theta * p.y) + v;

int u_q_ = (int)(cos_theta * q.x - sin_theta * q.y) + u;

int v_q_ = (int)(sin_theta * q.x + cos_theta * q.y) + v;

//判断根据关键点得到的经过旋转的p、q是否出界,若出界,则该描述子清空作废

if(u_p_<0 || v_p_<0 || u_p_ >image.cols || v_p_ > image.rows || u_q_<0 || v_q_<0 || u_q_ >image.cols || v_q_ > image.rows)

{

d.clear();

// d = {};

break; //跳出描述子循环

}

d[i] = image.at<uchar>(v_p_, u_p_) > image.at<uchar>(v_q_, u_q_) ? false : true; //前者大取false,后者大取true,vector随机访问器,不够快,但是掌握算法是关键

}

}

//越界则不使用

else

{

d.clear();

// d = {};

}

std::lock_guard<std::mutex> guard(m);//代替m.lock; m.unlock();

desc.push_back(d);

});

int bad_points = 0;

for(auto d:desc)

{

if(d.empty())

++bad_points;

}

cout << "Desc bad/total: " << bad_points << "/" << desc.size() << endl;

return;

// END YOUR CODE HERE

}

CMakeLists.txt(别忘了link tbb库)

cmake_minimum_required(VERSION 3.21)

project(ORB_Extract)

set(CMAKE_CXX_STANDARD 17)

set(CMAKE_BUILD_TYPE "Release")

#set(CMAKE_BUILD_TYPE "Debug")

MESSAGE(STATUS "CMAKE_BUILD_TYPE IS ${CMAKE_BUILD_TYPE}")

find_package(OpenCV 3 REQUIRED)

#添加头文件

include_directories(

${OpenCV_INCLUDE_DIRS}

${G2O_INCLUDE_DIRS}

${Sophus_INCLUDE_DIRS}

"/usr/local/include/eigen3/"

)

# 手写ORB特征

add_executable(computeORB computeORB.cpp)

#链接OpenCV库和tbb库

target_link_libraries(computeORB ${OpenCV_LIBS} tbb)

3. 从E中恢复R,t

没什么好说的,不过手写一遍印象更深刻。

int main(int argc, char **argv) {

// 给定Essential矩阵

Matrix3d E;

E << -0.0203618550523477, -0.4007110038118445, -0.03324074249824097,

0.3939270778216369, -0.03506401846698079, 0.5857110303721015,

-0.006788487241438284, -0.5815434272915686, -0.01438258684486258;

// 待计算的R,t

Matrix3d R;

Vector3d t;

// SVD and fix sigular values

// START YOUR CODE HERE

// SVD on Sigma

Eigen::JacobiSVD<Eigen::Matrix3d> svd(E, Eigen::ComputeFullU | Eigen::ComputeFullV); //Eigen的svd函数,计算满秩的U和V

Eigen::Matrix3d U = svd.matrixU();

Eigen::Matrix3d V = svd.matrixV();

Eigen::Vector3d sv = svd.singularValues();

cout << "U=" << U << endl;

cout << "V=" << V << endl;

cout << "sv=" << sv << endl;

Eigen::Matrix3d Sigma = Eigen::Matrix3d::Zero();

Sigma(0,0) = sv(0);

Sigma(1,1) = sv(1);

cout << "Sigma:\n" << Sigma << endl;

// END YOUR CODE HERE

// set t1, t2, R1, R2

// START YOUR CODE HERE

//use AngleAxis

Eigen::AngleAxisd rotation_vector_neg ( -M_PI/2, Eigen::Vector3d ( 0,0,1 ) ); //沿 Z 轴旋转 -90 度

Eigen::AngleAxisd rotation_vector_pos ( M_PI/2, Eigen::Vector3d ( 0,0,1 ) ); //沿 Z 轴旋转 90 度

Eigen::Matrix3d RzNegHalfPi = rotation_vector_neg.toRotationMatrix();

Eigen::Matrix3d RzPosHalfPi = rotation_vector_pos.toRotationMatrix();

Matrix3d t_wedge1 = U * RzPosHalfPi * Sigma * U.transpose();

Matrix3d t_wedge2 = U * RzNegHalfPi * Sigma * U.transpose();

Matrix3d R1 = U * RzPosHalfPi.transpose() * V.transpose();

Matrix3d R2 = U * RzNegHalfPi.transpose() * V.transpose();

// END YOUR CODE HERE

cout << "R1 = \n" << R1 << endl;

cout << "R2 = \n" << R2 << endl;

cout << "t1 = \n" << Sophus::SO3d::vee(t_wedge1) << endl; //求李代数??

cout << "t2 = \n" << Sophus::SO3d::vee(t_wedge2) << endl;

// check t^R=E up to scale

Matrix3d tR = t_wedge1 * R1;

cout << "t^R = " << tR << endl;

return 0;

}

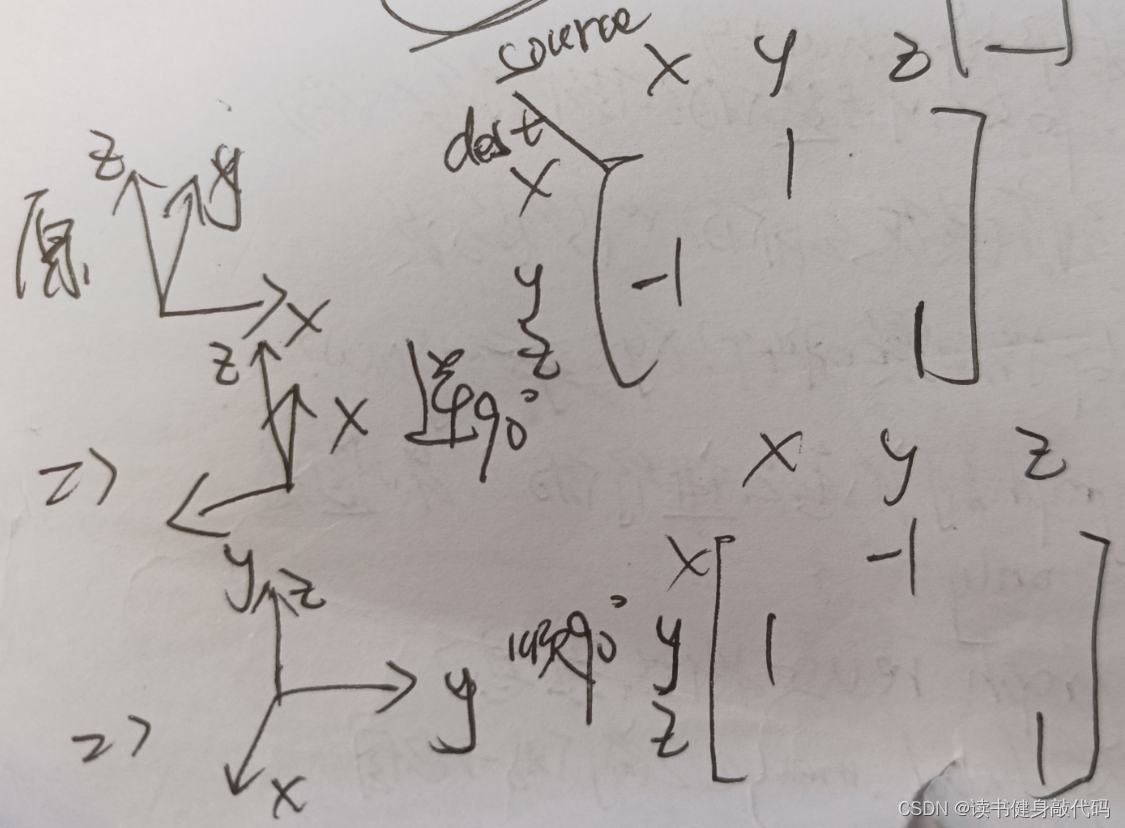

上面只是为了理解原理,其实可以更简洁,由于绕Z轴顺逆时针旋转的旋转矩阵是固定的,所以可以直接定义出来,

定义的方法见下图:

授人以渔:

顺便再授人以鱼:

以下是VINS-MONO中对应的代码,加了些注释:

void InitialEXRotation::decomposeE(cv::Mat E,

cv::Mat_<double> &R1, cv::Mat_<double> &R2,

cv::Mat_<double> &t1, cv::Mat_<double> &t2)

{

cv::SVD svd(E, cv::SVD::MODIFY_A);

//绕z顺时针90°

cv::Matx33d W(0, -1, 0,

1, 0, 0,

0, 0, 1);

//绕z逆时针90°

cv::Matx33d Wt(0, 1, 0,

-1, 0, 0,

0, 0, 1);

R1 = svd.u * cv::Mat(W) * svd.vt;

R2 = svd.u * cv::Mat(Wt) * svd.vt;

t1 = svd.u.col(2);//3*3取最后一个是待求量(为什么t2可以直接取负号?)

t2 = -svd.u.col(2);

}

4. 用G-N实现Bundle Adjustment中的位姿估计

int main(int argc, char **argv)

{

VecVector2d p2d;

VecVector3d p3d;

Matrix3d K;

double fx = 520.9, fy = 521.0, cx = 325.1, cy = 249.7;

K << fx, 0, cx, 0, fy, cy, 0, 0, 1;

// load points in to p3d and p2d

// START YOUR CODE HERE

double data2d[2] = {0}, data3d[3] = {0};

ifstream fin2d(p2d_file), fin3d(p3d_file);

for(int i=0;i<76;++i)

{

fin2d>>data2d[0];

fin2d>>data2d[1];

p2d.push_back(Eigen::Vector2d(data2d[0], data2d[1]));

fin3d>>data3d[0];

fin3d>>data3d[1];

fin3d>>data3d[2];

p3d.push_back(Eigen::Vector3d(data3d[0], data3d[1], data3d[2]));

}

// END YOUR CODE HERE

assert(p3d.size() == p2d.size());

int iterations = 100;

double cost = 0, lastCost = 0;

int nPoints = p3d.size();

cout << "points: " << nPoints << endl;

Sophus::SE3d T_esti; // estimated pose,李群,不是李代数,李代数是se3,是Vector3d

for (int iter = 0; iter < iterations; iter++) {

Matrix<double, 6, 6> H = Matrix<double, 6, 6>::Zero();

Vector6d b = Vector6d::Zero();

cost = 0;

// compute cost 计算误差,是 观测-预测

for (int i = 0; i < nPoints; i++)

{

// compute cost for p3d[I] and p2d[I]

// START YOUR CODE HERE

Eigen::Vector3d pc = T_esti * p3d[i]; //3D点转换到相机坐标系下(取了前3维)

double inv_z = 1.0 / pc[2];

double inv_z2 = inv_z * inv_z;

Eigen::Vector2d proj(fx * pc[0] / pc[2] + cx, fy * pc[1] / pc[2] + cy); //重投影,预测

Eigen::Vector2d e = p2d[i] - proj;

cost += e.transpose() * e;

// END YOUR CODE HERE

// compute jacobian

Matrix<double, 2, 6> J;

// START YOUR CODE HERE

J<<fx * inv_z,

0,

-fx * pc[0] * inv_z2,

-fx * pc[0] * pc[1] * inv_z2,

fx + fx * pc[0] * pc[0] * inv_z2,

-fx * pc[1] * inv_z,

0,

fy * inv_z,

-fy * pc[1] * inv_z2,

-fy - fy * pc[1] * pc[1] * inv_z2,

fy * pc[0] * pc[1] * inv_z2,

fy * pc[0] * inv_z;

J = -J;

// END YOUR CODE HERE

//高斯牛顿的系数矩阵和非齐次项

H += J.transpose() * J;

b += -J.transpose() * e;

}

// solve dx

Vector6d dx; //解出来的△x是李代数

// START YOUR CODE HERE

dx = H.ldlt().solve(b); //解方程

// END YOUR CODE HERE

if (isnan(dx[0]))

{

cout << "result is nan!" << endl;

break;

}

if (iter > 0 && cost >= lastCost) {

// cost increase, update is not good

cout << "cost: " << cost << ", last cost: " << lastCost << endl;

break;

}

// update your estimation

// START YOUR CODE HERE

T_esti = Sophus::SE3d::exp(dx) * T_esti;

// END YOUR CODE HERE

lastCost = cost;

cout << "iteration " << iter << " cost=" << cout.precision(12) << cost << endl;

}

cout << "estimated pose: \n" << T_esti.matrix() << endl;

return 0;

}

5. 使用ICP实现轨迹对齐

使用第三章的轨迹绘制和读取代码进行修改,添加了手写的ICP部分的程序,最终轨迹输出和 T g e T_{ge} Tge的估计结果如图所示,核心部分代码如下所示。

思考:本身想计算出两条轨迹的RMSE,但是思考之后发现此处将平移部分看做位置P,那么两个轨迹也就相当于两帧图像,之间只有一次位姿变换T,而计算RMSE是计算整条轨迹上所有的T的误差,那么求出 T g e T_{ge} Tge后对 T e T_e Te进行变换,就能计算RMSE了。

#include <iostream>

#include <fstream>

#include <unistd.h>

#include <pangolin/pangolin.h>

#include <sophus/se3.hpp>

#include <opencv2/core/core.hpp>

using namespace Sophus;

using namespace std;

using namespace cv;

string compare_file = "./compare.txt";

typedef vector<Sophus::SE3d, Eigen::aligned_allocator<Sophus::SE3d>> TrajectoryType;

typedef vector<TrajectoryType> LongTrajectoryType;

typedef Eigen::Matrix<double,6,1> Vector6d;

void DrawTrajectory(const vector<Point3d> >, const vector<Point3d> &esti, const string& title);

vector<TrajectoryType> ReadTrajectory(const string &path);

vector<Point3d> GetPoint(TrajectoryType TT);

void pose_estimation_3d3d(const vector<Point3d> &pts1, const vector<Point3d> &pts2, Mat &R, Mat &t);

vector<Point3d> TrajectoryTransform(Mat T, Mat t, vector<Point3d> esti );

int main(int argc, char **argv) {

LongTrajectoryType CompareData = ReadTrajectory(compare_file);

assert(!CompareData.empty());

cout<<"size: "<<CompareData.size()<<endl;

vector<Point3d> EstiPt = GetPoint(CompareData[0]);

vector<Point3d> GtPt = GetPoint(CompareData[1]);

Mat R, t; //待求位姿

pose_estimation_3d3d( GtPt, EstiPt, R, t);

cout << "ICP via SVD results: \n" << endl;

cout << "R = \n" << R << endl;

cout << "t = \n" << t << endl;

cout << "R_inv = \n" << R.t() << endl;

cout << "t_inv = \n" << -R.t() * t << endl;

DrawTrajectory(GtPt, EstiPt, "Before Calibrate");

vector<Point3d> EstiCali = TrajectoryTransform(R, t, EstiPt);

DrawTrajectory(GtPt, EstiCali, "Atfer Calibrate");

return 0;

}

LongTrajectoryType ReadTrajectory(const string &path)

{

ifstream fin(path);

TrajectoryType trajectory1, trajectory2;

if (!fin) {

cerr << "trajectory " << path << " not found." << endl;

return {};

}

while (!fin.eof()) {

double time1, tx1, ty1, tz1, qx1, qy1, qz1, qw1;

fin >> time1 >> tx1 >> ty1 >> tz1 >> qx1 >> qy1 >> qz1 >> qw1;

double time2, tx2, ty2, tz2, qx2, qy2, qz2, qw2;

fin >> time2 >> tx2 >> ty2 >> tz2 >> qx2 >> qy2 >> qz2 >> qw2;

Sophus::SE3d p1(Eigen::Quaterniond(qw1, qx1, qy1, qz1), Eigen::Vector3d(tx1, ty1, tz1));

trajectory1.push_back(p1);

Sophus::SE3d p2(Eigen::Quaterniond(qw2, qx2, qy2, qz2), Eigen::Vector3d(tx2, ty2, tz2));

trajectory2.push_back(p2);

}

LongTrajectoryType ret{trajectory1, trajectory2};

return ret;

}

void DrawTrajectory(const vector<Point3d> >, const vector<Point3d> &esti, const string& title)

{

// create pangolin window and plot the trajectory

pangolin::CreateWindowAndBind(title, 1024, 768);

glEnable(GL_DEPTH_TEST);

glEnable(GL_BLEND);

glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);

pangolin::OpenGlRenderState s_cam(

pangolin::ProjectionMatrix(1024, 768, 500, 500, 512, 389, 0.1, 1000),

pangolin::ModelViewLookAt(0, -0.1, -1.8, 0, 0, 0, 0.0, -1.0, 0.0)

);

pangolin::View &d_cam = pangolin::CreateDisplay()

.SetBounds(0.0, 1.0, pangolin::Attach::Pix(175), 1.0, -1024.0f / 768.0f)

.SetHandler(new pangolin::Handler3D(s_cam));

while (pangolin::ShouldQuit() == false) {

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

d_cam.Activate(s_cam);

glClearColor(1.0f, 1.0f, 1.0f, 1.0f);

glLineWidth(2);

for (size_t i = 0; i < gt.size() - 1; i++) {

glColor3f(0.0f, 0.0f, 1.0f); // blue for ground truth

glBegin(GL_LINES);

auto p1 = gt[i], p2 = gt[i + 1];

glVertex3d(p1.x, p1.y, p1.z);

glVertex3d(p2.x, p2.y, p2.z);

glEnd();

}

for (size_t i = 0; i < esti.size() - 1; i++) {

glColor3f(1.0f, 0.0f, 0.0f); // red for estimated

glBegin(GL_LINES);

auto p1 = esti[i], p2 = esti[i + 1];

glVertex3d(p1.x, p1.y, p1.z);

glVertex3d(p2.x, p2.y, p2.z);

glEnd();

}

pangolin::FinishFrame();

usleep(5000); // sleep 5 ms

}

}

void pose_estimation_3d3d(const vector<Point3d> &pts1,

const vector<Point3d> &pts2,

Mat &R, Mat &t) {

Point3d p1, p2; // center of mass 质心,这里p1表示第1幅图,p2表示第2幅图,和书上的R是反着的,所以要计算R21=这里的R12^(-1)=R12^(T),最后也输出了

int N = pts1.size();

for (int i = 0; i < N; i++) {

p1 += pts1[i];

p2 += pts2[i];

}

p1 = Point3d(Vec3d(p1) / N);

p2 = Point3d(Vec3d(p2) / N);

vector<Point3d> q1(N), q2(N); // remove the center 去质心

for (int i = 0; i < N; i++) {

q1[i] = pts1[i] - p1;

q2[i] = pts2[i] - p2;

}

// compute q1*q2^T

Eigen::Matrix3d W = Eigen::Matrix3d::Zero();

for (int i = 0; i < N; i++)

{

W += Eigen::Vector3d(q1[i].x, q1[i].y, q1[i].z) * Eigen::Vector3d(q2[i].x, q2[i].y, q2[i].z).transpose(); //这里是2->1 R12,求R21要转置

}

cout << "W= \n" << W << endl;

// SVD on W

Eigen::JacobiSVD<Eigen::Matrix3d> svd(W, Eigen::ComputeFullU | Eigen::ComputeFullV); //Eigen的svd函数,计算满秩的U和V

Eigen::Matrix3d U = svd.matrixU();

Eigen::Matrix3d V = svd.matrixV();

cout << "U= \n" << U << endl;

cout << "V= \n" << V << endl;

Eigen::Matrix3d R_ = U * (V.transpose()); //这里能保证满足det(R)=1且正交吗?

cout<<"我的输出: det(R_): "<<R_.determinant()<<"\nR_: \n"<<R_<<endl; //Eigen的Mat

if (R_.determinant() < 0) //若行列式为负,取-R

{

R_ = -R_;

}

Eigen::Vector3d t_ = Eigen::Vector3d(p1.x, p1.y, p1.z) - R_ * Eigen::Vector3d(p2.x, p2.y, p2.z); //最优的t=p-Rp'

// convert to cv::Mat

R = (Mat_<double>(3, 3) <<

R_(0, 0), R_(0, 1), R_(0, 2),

R_(1, 0), R_(1, 1), R_(1, 2),

R_(2, 0), R_(2, 1), R_(2, 2)

);

t = (Mat_<double>(3, 1) << t_(0, 0), t_(1, 0), t_(2, 0));

}

vector<Point3d> GetPoint(TrajectoryType TT)

{

vector<Point3d> pts;

for(auto each:TT)

//不用做相机模型的处理,也不/5000

pts.push_back(Point3d(each.translation()[0], each.translation()[1], each.translation()[2]));

return pts;

}

//转换

vector<Point3d> TrajectoryTransform(Mat T, Mat t, vector<Point3d> esti )

{

vector<Point3d> calibrated={};

Mat Mat__31;

Sophus::SE3d SE3D;

for(auto each:esti)

{

Mat__31 = (Mat_<double>(3, 1)<<each.x, each.y, each.z);

Mat__31 = T * Mat__31 + t;

calibrated.push_back( Point3d(Mat__31));

}

return calibrated;

}

接着赶进度!

1931

1931

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?