- ORB特征点

实现代码:

#include <opencv2/opencv.hpp>

#include <execution> //并行处理for_each中的std::execution

#include <string>

#include <mutex>

#include <math.h>

#include <chrono>

using namespace std;

using namespace cv;

string first_file = "../1.png";

string second_file = "../2.png";

const double pi = 3.1415926;

//FAST

void computeAngle(const Mat &image, vector<KeyPoint> & keypoints);

void computeAngleMT(const const Mat &image, vector<KeyPoint> & keypoints); //使用for_each的并行化处理接口

//Brief

typedef vector<bool>DescType;

void computeORBDesc(const Mat &image, vector<KeyPoint> & KeyPoints, vector<DescType> &desc);

void computeORBDescMT(const Mat &image, vector<KeyPoint> & KeyPoints, vector<DescType> &desc);

void bfMatch(const vector<DescType> & desc1, const vector<DescType> &desc2, vector<DMatch> & matches);

//打印执行时间

template <typename FuncT>

void evaluate_and_call(FuncT func, const std::string &func_name = "", int times = 10)

{

double total_time = 0;

for(int i = 0; i < times; i++) {

auto t1 = std::chrono::steady_clock::now();

func();

auto t2 = std::chrono::steady_clock::now();

total_time += std::chrono::duration_cast<std::chrono::duration<double>>(t2 - t1).count() * 1000 ;

}

cout << "方法" << func_name << "平均调用时间/次数" << total_time/times << "/" << times << "毫秒" << endl;

}

int main(int argc, char **argv)

{

cv::Mat first_image = cv::imread(first_file, 0);

cv::Mat second_image = cv::imread(second_file, 0);

cv::imshow("first image", first_image);

cv::imshow("second image", second_image);

cv::waitKey(0);

vector<cv::KeyPoint> keypoints;

cv::FAST(first_image, keypoints, 40);

cout << "keypoints :" << keypoints.size() << endl;

evaluate_and_call([&]() {computeAngle(first_image, keypoints);}, "compute angle", 1);

evaluate_and_call([&]() {computeAngleMT(first_image, keypoints);}, "compute angel mt", 1);

vector<DescType> descriptors;

evaluate_and_call([&]() { computeORBDesc(first_image, keypoints, descriptors); },"compute orb descriptor", 1);

vector<DescType> descriptors_mt;

evaluate_and_call([&]() { computeORBDescMT(first_image, keypoints, descriptors_mt); },"compute orb descriptor mt", 1);

cv::Mat image_show;

cv::drawKeypoints(first_image, keypoints, image_show, cv::Scalar::all(-1), cv::DrawMatchesFlags::DRAW_RICH_KEYPOINTS);

cv::imshow("features", image_show);

cv::imwrite("feat1.png", image_show);

cv::waitKey(0);

vector<cv::KeyPoint> keypoints2;

cv::FAST(second_image, keypoints2, 40);

cout << "keypoints: " << keypoints2.size() << endl;

computeAngle(second_image, keypoints2);

computeAngleMT(second_image, keypoints2);

vector<DescType> descriptors2;

computeORBDesc(second_image, keypoints2, descriptors2);

computeORBDescMT(second_image, keypoints2, descriptors2);

vector<cv::DMatch> matches;

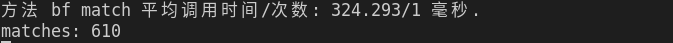

evaluate_and_call([&]() { bfMatch(descriptors, descriptors2, matches); },

"bf match", 1);

cout << "matches: " << matches.size() << endl;

cv::drawMatches(first_image, keypoints, second_image, keypoints2, matches,

image_show);

cv::imshow("matches", image_show);

cv::imwrite("matches.png", image_show);

// cv::waitKey(0);

while(char(cvWaitKey(1) != 'q')){}

cout << "done." << endl;

return 0;

}

void computeAngle(const cv::Mat &image, vector<cv::KeyPoint> &keypoints) {

int half_patch_size = 8;

int bad_points = 0;

for (auto &kp : keypoints) {

// START YOUR CODE HERE (~7 lines)

int u=kp.pt.x, v=kp.pt.y;

if(u > half_patch_size && v > half_patch_size && u + half_patch_size < image.cols && v + half_patch_size < image.rows)

{

float m10 = 0, m01 = 0;

for(int i = u - half_patch_size; i< u + half_patch_size; i++)

{

for(int j = v - half_patch_size; j<v+half_patch_size; j++)

{

m10 += j * image.at<uchar>(j,i);

m01 += i * image.at<uchar>(j,i);

}

}

kp.angle = (float)std::atan(m01/m10);

}

}

return;

}

void computeAngleMT(const cv::Mat &image, vector<cv::KeyPoint> &keypoints) {

int half_patch_size = 8;

std::mutex m;

std::for_each(std::execution::par_unseq, keypoints.begin(), keypoints.end(),

[&half_patch_size, &image, &m](auto &kp) {

int u=kp.pt.x, v=kp.pt.y;

if(u > half_patch_size && v > half_patch_size && u + half_patch_size < image.cols && v + half_patch_size < image.rows)

{

float m10 = 0, m01 = 0;

for(int i = u - half_patch_size; i< u + half_patch_size; i++)

{

for(int j = v - half_patch_size; j<v+half_patch_size; j++)

{

m10 += j * image.at<uchar>(j,i);

m01 += i * image.at<uchar>(j,i);

}

}

std::lock_guard<std::mutex> guard(m);

kp.angle = (float)std::atan(m01/m10);

}

});

return;

}

int ORB_pattern[256 * 4] ={......} ; //略

void computeORBDesc(const cv::Mat &image, vector<cv::KeyPoint> &keypoints,

vector<DescType> &desc) {

int half_patch_size = 8;

for (auto &kp : keypoints) {

DescType d(256, false);

int u = kp.pt.x, v = kp.pt.y;

if(u >= half_patch_size && v >= half_patch_size && u+half_patch_size <=image.cols && v+half_patch_size <=image.rows)

{

for (int i = 0; i < 256; i++) {

cv::Point2f p(ORB_pattern[i * 4] , ORB_pattern[i * 4 + 1]);

cv::Point2f q(ORB_pattern[i * 4 + 2], ORB_pattern[i * 4 + 3]);

double theta = kp.angle * pi / 180;

double cos_theta = cos(theta), sin_theta = sin(theta);

cv::Point2f pp = cv::Point2f(p.x * cos_theta - p.y * sin_theta, sin_theta * p.x + cos_theta * p.y) + kp.pt;

cv::Point2f qq = cv::Point2f(q.x * cos_theta - q.y * sin_theta, sin_theta * q.x + cos_theta * q.y) + kp.pt;

if(pp.x < 0 || pp.y < 0 || pp.x > image.cols || pp.y > image.rows || qq.x < 0 || qq.y < 0 || qq.x > image.cols || qq.y > image.rows)

{

d = {};

break;

}

d[i] = image.at<uchar>(pp.y, pp.x) > image.at<uchar>(qq.y, qq.x)? false:true;

}

} else {

d.clear();

}

desc.push_back(d);

}

cout << "bad/total: " << "/" << desc.size() << endl;

return;

}

void computeORBDescMT(const cv::Mat &image, vector<cv::KeyPoint> &keypoints,

vector<DescType> &desc) {

int half_patch_size = 8;

std::mutex m;

std::for_each(std::execution::par_unseq, keypoints.begin(), keypoints.end(),

[&image, &desc, &m](auto &kp) {

DescType d(256, false);

for (int i = 0; i < 256; i++) {

cv::Point2f p(ORB_pattern[i * 4] , ORB_pattern[i * 4 + 1]);

cv::Point2f q(ORB_pattern[i * 4 + 2], ORB_pattern[i * 4 + 3]);

double theta = kp.angle * pi / 180;

double cos_theta = cos(theta), sin_theta = sin(theta);

cv::Point2f pp = cv::Point2f(p.x * cos_theta - p.y * sin_theta, sin_theta * p.x + cos_theta * p.y) + kp.pt;

cv::Point2f qq = cv::Point2f(q.x * cos_theta - q.y * sin_theta, sin_theta * q.x + cos_theta * q.y) + kp.pt;

if(pp.x < 0 || pp.y < 0 || pp.x > image.cols || pp.y > image.rows || qq.x < 0 || qq.y < 0 || qq.x > image.cols || qq.y > image.rows)

{

d = {};

break;

}

d[i] = image.at<uchar>(pp.y, pp.x) > image.at<uchar>(qq.y, qq.x)? false:true;

}

std::lock_guard<std::mutex> guard(m);

desc.push_back(d);

});

}

void bfMatch(const vector<DescType> &desc1, const vector<DescType> &desc2,

vector<cv::DMatch> &matches) {

int d_max = 40;

for(int i1 = 0; i1 < desc1.size(); i1++) {

if(desc1[i1].empty())

continue;

cv::DMatch m{i1, 0, 256};

for(int i2 = 0; i2 < desc2.size(); i2++) {

if(desc2[i2].empty())

continue;

int distance = 0;

for(int i = 0; i < 256; i++) {

distance += desc1[i1][i] ^ desc2[i2][i];

}

if(distance < d_max && distance < m.distance)

{

m.distance = distance;

m.queryIdx = i1;

m.trainIdx = i2;

}

}

if(m.distance < d_max)

{

matches.push_back(m);

}

}

for (auto &m : matches) {

// cout << m.queryIdx << ", " << m.trainIdx << ", " << m.distance << endl;

}

return;

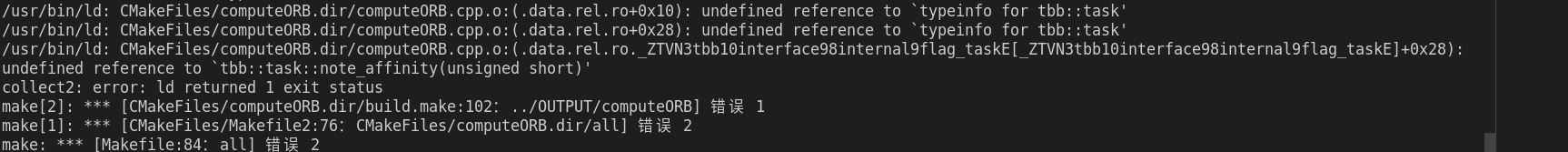

cmake_minimum_required(VERSION 3.16.3)

project(COMPUTEORB)

set(CMAEK_CXX_STANDARD 17)

#IF (NOT DEFINED ${CMAKE_BUILD_TYPE})

SET(CMAKE_BUILD_TYPE Release)

#ENDIF()

MESSAGE(STATUS "CMAKE_BUILD_TYPE IS ${CMAKE_BUILD_TYPE}")

SET(CMAKE_CXX_STANDARD 17)

find_package(OpenCV 3.4.15 REQUIRED)

include_directories(${OpenCV_INCLUDE_DIRS} "/usr/local/include/eigen3" "/usr/local/include/sophus")

SET(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/OUTPUT)

add_executable(computeORB computeORB.cpp)

target_link_libraries(computeORB ${OpenCV_LIBS} tbb)

实现效果:

注意,当使用题目中给定的应验值d_max = 50时,会存在误匹配,的不出筛选后的匹配图,所以本题中我将d_max改为40,匹配达到最好。

从第一次运行时间可以看出,单线程和多线程时间好像并没有对运行性能有所提升。

2.4

<1> 因为ORB的brief(描述子)是通过在特征点附近随即的选取若干个点对,将这些点对的灰度值的大小,组合成一个二进制串。

<2> 我的机器上匹配时,选阈值为40最合适,超过50,会出现误匹配的情况,低于40,导致匹配的点对太少,进行地图重建的时候,由于匹配的点对太少,出现地图稀疏的情况,不适合建图,更适合定位。

<3>暴力匹配

暴力匹配平均调用时间:0.3s

当特征点数量很大时,暴力匹配法的运算量将变得很大,特别的当想要匹配某个帧和一张地图的时候,这不符合在SLAM中的时实性需求,而FLANN(快速近似最近邻)算法更适合于匹配点数量极多的情况,从而实现快速高效匹配。

FLANN,参考:https://editor.csdn.net/md/?articleId=124145732

<4>性能方面,多进程比单进程快些,单具体性能提升多少随运行次数在改变。

CMakeLists.txt中需要链接tbb的库,否则会报上述错误;

2,从E恢复R,t

代码:

#include <Eigen/Core>

#include <Eigen/Dense>

#include <Eigen/Geometry>

#include <sophus/so3.hpp>

#include <iostream>

using namespace std;

using namespace Eigen;

int main(int argc, char **argv) {

// 给定Essential矩阵

Matrix3d E;

E << -0.0203618550523477, -0.4007110038118445, -0.03324074249824097,

0.3939270778216369, -0.03506401846698079, 0.5857110303721015,

-0.006788487241438284, -0.5815434272915686, -0.01438258684486258;

// 待计算的R,t

Matrix3d R;

Vector3d t;

JacobiSVD<Matrix3d> svd(E, ComputeFullU|ComputeFullV);

Matrix3d t_wedge1;

Matrix3d t_wedge2;

Matrix3d R1;

Matrix3d R2;

Matrix3d U=svd.matrixU();

Matrix3d V=svd.matrixV();

Matrix3d SIGMA=U.inverse()*E*V.transpose().inverse();

Vector3d sigma_value = svd.singularValues();

//处理后的sigma值

Vector3d sigma_value2={(sigma_value[0]+sigma_value[1])/2,(sigma_value[0]+sigma_value[1])/2,0};

Matrix3d SIGMA2=sigma_value2.asDiagonal();

Matrix3d R_z1=AngleAxisd(M_PI/2,Vector3d(0,0,1)).toRotationMatrix();

Matrix3d R_z2=AngleAxisd(-M_PI/2, Vector3d(0,0,1)).toRotationMatrix();

t_wedge1=U*R_z1*SIGMA*U.transpose();

t_wedge2=U*R_z2*SIGMA*U.transpose();

R1=U*R_z1.transpose()*V.transpose();

R2=U*R_z2.transpose()*V.transpose();

// END YOUR CODE HERE

cout << "R1 = " << R1 << endl;

cout << "R2 = " << R2 << endl;

cout << "t1 = " << Sophus::SO3d::vee(t_wedge1) << endl;

cout << "t2 = " << Sophus::SO3d::vee(t_wedge2) << endl;

// check t^R=E up to scale

Matrix3d tR = t_wedge1 * R1;

cout << "t^R = " << tR << endl;

return 0;

}

CMakeLists.txt

cmake_minimum_required(VERSION 3.16)

project(E2RT)

set(CMAKE_CXX_STANDARD 11)

set(CMAKE_BUILD_TYPE "Release")

#添加头文件

include_directories( "/usr/include/eigen3")

find_package(Sophus REQUIRED)

include_directories(${Sophus_INCLUDE_DIRS})

add_executable(E2Rt E2Rt.cpp)

#链接OpenCV库

target_link_libraries(E2Rt ${Sophus_LIBRARIES} fmt)

运行结果:

3,用G-N实现Bundle Adjustment中的位姿估计

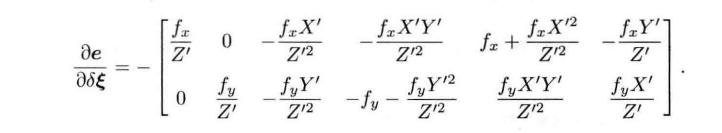

1.重投影误差:像素坐标(观测的到的投影位置)与3D点按照当前估计的相机位姿进行投影得到的位置作差.

2.

.

3. 通过李群到李代数的指数映射,将更新量dx的指数映射乘到估计位姿态上即可;

T_esti = Sophus::SE3d::exp(dx) * T_esti;

实现代码:

#include <Eigen/Core>

#include <Eigen/Dense>

using namespace Eigen;

#include <vector>

#include <fstream>

#include <iostream>

#include <iomanip>

#include "sophus/se3.hpp"

using namespace std;

typedef vector<Vector3d, Eigen::aligned_allocator<Vector3d>> VecVector3d;

typedef vector<Vector2d, Eigen::aligned_allocator<Vector2d>> VecVector2d;

typedef Matrix<double, 6, 1> Vector6d;

string p3d_file = "../p3d.txt";

string p2d_file = "../p2d.txt";

int main(int argc, char **argv) {

VecVector2d p2d;

VecVector3d p3d;

Matrix3d K;

double fx = 520.9, fy = 521.0, cx = 325.1, cy = 249.7;

K << fx, 0, cx, 0, fy, cy, 0, 0, 1;

// load points in to p3d and p2d

ifstream p3d_fin(p3d_file);

ifstream p2d_fin(p2d_file);

Vector3d p3d_input;

Vector2d p2d_input;

if (!p3d_fin) {

cerr << "p3d_fin " << p3d_file << " not found." << endl;

}

while (!p3d_fin.eof()) {

p3d_fin >> p3d_input(0) >> p3d_input(1) >> p3d_input(2);

p3d.push_back(p3d_input);

}

p3d_fin.close();

if (!p2d_fin) {

cerr << "p2d_fin " << p2d_file << " not found." << endl;

}

while (!p2d_fin.eof()) {

p2d_fin >> p2d_input(0) >> p2d_input(1);

p2d.push_back(p2d_input);

}

p2d_fin.close();

assert(p3d.size() == p2d.size());

int iterations = 100;

double cost = 0, lastCost = 0;

int nPoints = p3d.size();

cout << "points: " << nPoints << endl;

Sophus::SE3d T_esti; // estimated pose //直接定义一个T,它是单位矩阵

cout << "xxxx" << T_esti.matrix() << endl;

for (int iter = 0; iter < iterations; iter++) {

Matrix<double, 6, 6> H = Matrix<double, 6, 6>::Zero();

Vector6d b = Vector6d::Zero();

cost = 0;

// compute cost

for (int i = 0; i < nPoints; i++) {

// compute cost for p3d[I] and p2d[I]

Eigen::Vector3d pc = T_esti * p3d[i];

Eigen::Vector2d proj(fx * pc[0] / pc[2] + cx, fy * pc[1] / pc[2] + cy);

Eigen::Vector2d e = p2d[i] - proj;

cost += e.squaredNorm()/2; //平方范数

// compute jacobian

Matrix<double, 2, 6> J;

double inv_z = 1.0 / pc[2];

double inv_z2 = inv_z * inv_z;

J << -fx * inv_z,

0,

fx * pc[0] * inv_z2,

fx * pc[0] * pc[1] * inv_z2,

-fx - fx * pc[0] * pc[0] * inv_z2,

fx * pc[1] * inv_z,

0,

-fy * inv_z,

fy * pc[1] * inv_z2,

fy + fy * pc[1] * pc[1] * inv_z2,

-fy * pc[0] * pc[1] * inv_z2,

-fy * pc[0] * inv_z;

H += J.transpose() * J;

b += -J.transpose() * e;

}

Vector6d dx;

dx = H.ldlt().solve(b);

if (isnan(dx[0])) {

cout << "result is nan!" << endl;

break;

}

if (iter > 0 && cost >= lastCost) {

// cost increase, update is not good

cout << "cost: " << cost << ", last cost: " << lastCost << endl;

break;

}

T_esti = Sophus::SE3d::exp(dx) * T_esti;

lastCost = cost;

cout << "iteration " << iter << " cost=" << cout.precision(12) << cost << endl;

}

cout << "estimated pose: \n" << T_esti.matrix() << endl;

return 0;

}

CMakeLists.txt

project(GN)

cmake_minimum_required(VERSION 3.4)

find_package(OpenCV 3.4.15 REQUIRED)

set(CMAKE_CXX_STANDARD 11)

set(CMAKE_BUILD_TYPE "Release")

include_directories("/usr/include/eigen3")

find_package(Sophus REQUIRED)

set(Sophus_LIBRARIES libSophus.so)

include_directories(${Sophus_INCLUDE_DIRS})

add_executable(gn GN-BA.cpp)

target_link_libraries(gn ${Sophus_LIBRARIES})

4,用ICP实现轨迹的对齐

两条轨迹的观测坐标系不同,需要通过ICP的SVD方法算出R,t,然后将真实位姿乘以求出的T(R,t),将其变换到相机坐标下进行显示:

实现代码:

#include <sophus/se3.hpp>

#include <string>

#include <iostream>

#include <Eigen/Core>

#include <Eigen/Geometry>

#include <opencv2/core/core.hpp>

#include <pangolin/pangolin.h>

#include <unistd.h>

using namespace std;

using namespace Eigen;

using namespace cv;

string trajectory_file = "../compare.txt";

void pose_estimation_3d3d(const vector<Point3f> &pts1,const vector<Point3f> &pts2, Eigen::Matrix3d &R_, Eigen::Vector3d &t_);

void DrawTrajectory(vector<Sophus::SE3d, Eigen::aligned_allocator<Sophus::SE3d>> poses_e,

vector<Sophus::SE3d, Eigen::aligned_allocator<Sophus::SE3d>> poses_g,

const string& ID);

int main(int argc, char **argv) {

vector<Sophus::SE3d, Eigen::aligned_allocator<Sophus::SE3d>> poses_e;

vector<Sophus::SE3d, Eigen::aligned_allocator<Sophus::SE3d>> poses_g;

vector<Sophus::SE3d, Eigen::aligned_allocator<Sophus::SE3d>> poses_gt;

vector<Point3f> pts_e,pts_g;

ifstream fin(trajectory_file);

if(!fin){

cerr<<"can't find file at "<<trajectory_file<<endl;

return 1;

}

while(!fin.eof()){

double t1,tx1,ty1,tz1,qx1,qy1,qz1,qw1;

double t2,tx2,ty2,tz2,qx2,qy2,qz2,qw2;

fin>>t1>>tx1>>ty1>>tz1>>qx1>>qy1>>qz1>>qw1>>t2>>tx2>>ty2>>tz2>>qx2>>qy2>>qz2>>qw2;

pts_e.push_back(Point3f(tx1,ty1,tz1));

pts_g.push_back(Point3f(tx2,ty2,tz2));

poses_e.push_back(Sophus::SE3d(Quaterniond(qw1,qx1,qy1,qz1),Vector3d(tx1,ty1,tz1)));

poses_g.push_back(Sophus::SE3d(Quaterniond(qw2,qx2,qy2,qz2),Vector3d(tx2,ty2,tz2)));

}

Matrix3d R;

Vector3d t;

pose_estimation_3d3d(pts_e,pts_g,R,t);

Sophus::SE3d T_eg(R,t);

for(auto SE_g:poses_g) {

Sophus::SE3d T_e=T_eg*SE_g; //相机坐标系下的poses_g的真实位姿

poses_gt.push_back(T_e);

}

DrawTrajectory(poses_e,poses_g," Before Align");

DrawTrajectory(poses_e,poses_gt," After Align");

return 0;

}

void pose_estimation_3d3d(const vector<Point3f> &pts1,

const vector<Point3f> &pts2,

Eigen::Matrix3d &R_, Eigen::Vector3d &t_) {

Point3f p1, p2; // center of mass

int N = pts1.size();

for (int i = 0; i < N; i++) {

p1 += pts1[i];

p2 += pts2[i];

}

p1 = Point3f(Vec3f(p1) / N);

p2 = Point3f(Vec3f(p2) / N);

vector<Point3f> q1(N), q2(N); // remove the center

for (int i = 0; i < N; i++) {

q1[i] = pts1[i] - p1;

q2[i] = pts2[i] - p2;

}

// compute q1*q2^T

Eigen::Matrix3d W = Eigen::Matrix3d::Zero();

for (int i = 0; i < N; i++) {

W += Eigen::Vector3d(q1[i].x, q1[i].y, q1[i].z) * Eigen::Vector3d(q2[i].x, q2[i].y, q2[i].z).transpose();

}

cout << "W=" << W << endl;

// SVD on W

Eigen::JacobiSVD<Eigen::Matrix3d> svd(W, Eigen::ComputeFullU | Eigen::ComputeFullV);

Eigen::Matrix3d U = svd.matrixU();

Eigen::Matrix3d V = svd.matrixV();

cout << "U=" << U << endl;

cout << "V=" << V << endl;

R_ = U * (V.transpose());

if (R_.determinant() < 0) {

R_ = -R_;

}

t_ = Eigen::Vector3d(p1.x, p1.y, p1.z) - R_ * Eigen::Vector3d(p2.x, p2.y, p2.z);

}

void DrawTrajectory(vector<Sophus::SE3d, Eigen::aligned_allocator<Sophus::SE3d>> poses_e,

vector<Sophus::SE3d, Eigen::aligned_allocator<Sophus::SE3d>> poses_g,

const string& ID) {

if (poses_e.empty() || poses_g.empty()) {

cerr << "Trajectory is empty!" << endl;

return;

}

string windowtitle = "Trajectory Viewer" + ID;

// create pangolin window and plot the trajectory

pangolin::CreateWindowAndBind(windowtitle, 1024, 768);

glEnable(GL_DEPTH_TEST);

glEnable(GL_BLEND);

glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);

pangolin::OpenGlRenderState s_cam(

pangolin::ProjectionMatrix(1024, 768, 500, 500, 512, 389, 0.1, 1000),

pangolin::ModelViewLookAt(0, -0.1, -1.8, 0, 0, 0, 0.0, -1.0, 0.0)

);

pangolin::View &d_cam = pangolin::CreateDisplay()

.SetBounds(0.0, 1.0, pangolin::Attach::Pix(175), 1.0, -1024.0f / 768.0f)

.SetHandler(new pangolin::Handler3D(s_cam));

while (pangolin::ShouldQuit() == false) {

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

d_cam.Activate(s_cam);

glClearColor(1.0f, 1.0f, 1.0f, 1.0f);

glLineWidth(2);

for (size_t i = 0; i < poses_e.size() - 1; i++) {

glColor3f(1.0f, 0.0f, 0.0f);

glBegin(GL_LINES);

auto p1 = poses_e[i], p2 = poses_e[i + 1];

glVertex3d(p1.translation()[0], p1.translation()[1], p1.translation()[2]);

glVertex3d(p2.translation()[0], p2.translation()[1], p2.translation()[2]);

glEnd();

}

for (size_t i = 0; i < poses_g.size() - 1; i++) {

glColor3f(0.0f, 0.0f, 1.0f);

glBegin(GL_LINES);

auto p1 = poses_g[i], p2 = poses_g[i + 1];

glVertex3d(p1.translation()[0], p1.translation()[1], p1.translation()[2]);

glVertex3d(p2.translation()[0], p2.translation()[1], p2.translation()[2]);

glEnd();

}

pangolin::FinishFrame();

usleep(5000); // sleep 5 ms

}

}

CMakeLists.txt

project(ICP)

cmake_minimum_required(VERSION 3.14)

set(CMAKE_CXX_STANDARD 11)

set(CMAKE_BUILD_TYPE "Release")

include_directories("/usr/include/eigen3")

find_package(Pangolin REQUIRED)

find_package(OpenCV 3.4.15 REQUIRED)

find_package(Sophus REQUIRED)

include_directories( ${OpenCV_INCLUDE_DIRS})

include_directories( ${Pangolin_INCLUDE_DIRS})

include_directories( ${Sophus_INCLUDE_DIRS})

add_executable(draw_trajectory draw_trajectory.cpp)

target_link_libraries(draw_trajectory ${Sophus_LIBRARIES} ${OpenCV_LIBS} ${Pangolin_LIBRARIES})

3367

3367

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?