rospy tf api

ML Tips & Tricks / TF2 OD API

Tensorflow Object Detection API (TF OD API) just got even better. Recently, Google released the new version of TF OD API which now supports Tensorflow 2.x. This is a huge improvement that we’ve all been waiting for!

Intro

Recent improvements in object detection (OD) are driven by the widespread adoption of the technology by industry. Car manufacturers use object detection to help vehicles navigate the roads autonomously, doctors use it to improve their diagnosis process, farmers use it to detect various crop diseases… and there are many other use-cases (yet to be discovered) where OD could provide enormous value.

Tensorflow is a deep learning framework that powers many of the state-of-the-art (SOTA) models in natural language processing (NLP), speech synthesis, semantic segmentation, and object detection. TF OD API is an open-sourced collection of object detection models which is used by both the deep learning enthusiasts, and by different experts in the field.

Now that we’ve covered the basic terminology, let’s see what the new TF OD API offers!

New TF OD API

The new TF2 OD API introduces eager execution, which makes debugging object detection models much easier; it also includes new SOTA models that are supported in the TF2 Model Zoo. The good news for Tensorflow 1.x users is that the new OD API is backward compatible, so you can still use TF1 if you like, although switching to TF2 is highly recommended!

In addition to the SSD (MobileNet/ResNet), Faster R-CNN (ResNet/Inception ResNet), and Mask R-CNN models that were previously available in TF1 Model Zoo, TF2 Model Zoo introduces new SOTA models such as CenterNet, ExtremeNet, and EfficientDet.

Models in the TF2 OD API Model Zoo are pre-trained on the COCO 2017 dataset. The pre-trained models can be useful for out-of-the-box inference if you are interested in categories already included in this dataset, or for initializing your models when training on novel datasets. Using TF OD API models instead of implementing the SOTA models on your own gives you more time to focus on the data, which is another crucial factor in achieving high performance of OD models. However, even if you decide to build the models yourself, TF OD API models present a good performance benchmark!

You can choose from a long list of different models depending on your requirements (speed vs. accuracy):

The Model Zoo table lists only the mean COCO mAP metric for each model. Although that is a fairly good indication of a model’s performance, additional statistics are useful if you’re interested in how the model performs on objects of different sizes or of different types. For instance, if you’re developing an advanced driver-assistance system (ADAS), you don’t really care whether the detector is bad at detecting bananas!

In this blog, we’ll focus on explaining how to perform a detailed evaluation of different pre-trained EfficientDet checkpoints that are readily available in the TF2 Model Zoo.

EfficientDets: SOTA OD models

EfficientDet is a single-shot detector fairly similar to the RetinaNet model with several improvements: EfficientNet backbone, weighted bi-directional feature pyramid network (BiFPN), and compound scaling method.

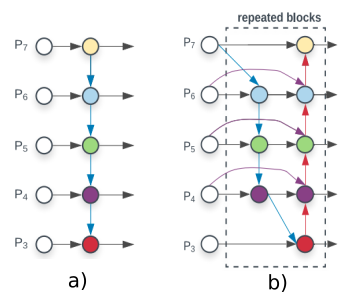

BiFPN is an improved version of the very popular FPN. It learns the weights that represent the importance of different input features, while repeatedly applying top-down and bottom-up multi-scale feature fusion.

The usual approach for improving the accuracy of the object detection models is to either increase the input image size or to use a bigger backbone network. Instead of operating on a single dimension or limited scaling dimensions, compound scaling jointly scales up the resolution/depth/width for backbone, feature network, and box/class prediction network.
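To make compound scaling concrete, here is a minimal sketch of the nominal scaling rules as we understand them from the EfficientDet paper: a single coefficient (called `phi` here) drives the BiFPN width and depth, the box/class head depth, and the input resolution. The function name and dictionary keys are our own, and the released checkpoints round the channel widths and deviate from these formulas for some of the larger models, so treat the output as approximate.

```python
def compound_scaling(phi: int) -> dict:
    """Nominal EfficientDet scaling rules for a compound coefficient phi.

    Assumption: formulas follow the EfficientDet paper; released configs
    round the widths and adjust some of the larger models by hand.
    """
    return {
        "bifpn_width": 64 * (1.35 ** phi),    # BiFPN channels grow exponentially
        "bifpn_depth": 3 + phi,               # BiFPN layers grow linearly
        "head_depth": 3 + phi // 3,           # box/class prediction net layers
        "input_resolution": 512 + 128 * phi,  # input image side, in pixels
    }


for phi in range(3):
    print(phi, compound_scaling(phi))
```

Note how one knob changes every part of the network at once, instead of only the image size or only the backbone.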

EfficientDet models with different scaling factors are included in the TF2 OD API Model Zoo. Model names follow the pattern EfficientDet D{X} {RES}x{RES}, where {X} denotes the scaling factor and {RES}x{RES} denotes the input image resolution.
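As a small illustration of that naming scheme, the sketch below pulls the scaling factor and the resolution out of a model name. The helper `parse_model_name` and the regular expression are our own and not part of the TF OD API.

```python
import re
from typing import Tuple

# Matches names such as "EfficientDet D0 512x512"; the backreference
# requires both resolution numbers to be identical (square input).
NAME_PATTERN = re.compile(r"^EfficientDet D(?P<x>\d+) (?P<res>\d+)x(?P=res)$")


def parse_model_name(name: str) -> Tuple[int, int]:
    """Return (scaling_factor, resolution) for a Model Zoo style name."""
    match = NAME_PATTERN.match(name)
    if match is None:
        raise ValueError(f"unexpected model name: {name!r}")
    return int(match.group("x")), int(match.group("res"))


print(parse_model_name("EfficientDet D0 512x512"))
```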

Evaluation of pre-trained EfficientDet models

We want to perform detailed accuracy comparisons to study the influence of the compound scaling configs on the performance of the network itself. For that reason, we’ve created a Google Colab notebook in which we explain how to evaluate the models and how to efficiently compare the evaluation results. We’re interested in detailed evaluation statistics, including per-class and per-object-size stats.

Unfortunately, TF OD API doesn’t support such stats out of the box. That’s why we created a fork of the TF OD repo and updated the relevant scripts to introduce this functionality following the instructions given in this issue.

In the notebook, we provide instructions on how to set up Tensorflow 2 and the TF2 OD API. We also include scripts that make it easy to download the EfficientDet checkpoints, as well as additional scripts that help you get the COCO 2017 Val dataset and create the tfrecord files that the TF OD API consumes in the evaluation phase.

Finally, we modify the pipeline.config files of the EfficientDet checkpoints to prepare everything for sequential evaluation of the 8 EfficientDet checkpoints. TF OD API uses these files to configure the training and evaluation process. The schema for the training pipeline can be found in object_detection/protos/pipeline.proto. At a high level, the config file is split into 5 parts:

- The model configuration. This defines what type of model will be trained (e.g., meta-architecture, feature extractor…).

- The train_config, which decides what parameters should be used to train model parameters (e.g., SGD parameters, input preprocessing, and feature extractor initialization values…).

- The eval_config, which determines what set of metrics will be reported for evaluation.

- The train_input_config, which defines what dataset the model should be trained on.

- The eval_input_config, which defines what dataset the model will be evaluated on. Typically this should be different from the training input dataset.

    model {
      (... Add model config here...)
    }

    train_config : {
      (... Add train_config here...)
    }

    train_input_reader: {
      (... Add train_input configuration here...)
    }

    eval_config: {
    }

    eval_input_reader: {
      (... Add eval_input configuration here...)
    }

We’re only interested in the eval_config and eval_input_config parts of the config file. Take a closer look at this cell in the Google Colab for more details on how we set up the eval parameters. Two additional flags that are not enabled out of the box in the TF OD API are include_metrics_per_category and all_metrics_per_category. After applying the patch given in the Colab notebook, setting these two flags to true enables the detailed statistics (per category and per size) that we’re interested in!
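To show where the two flags end up, here is a minimal sketch that renders an eval_config block as text. It is not the code from the Colab notebook: the helper `render_eval_config` is hypothetical, and the choice of `coco_detection_metrics` as the metrics set is our assumption about a typical COCO evaluation setup.

```python
def render_eval_config(per_category: bool = True) -> str:
    """Render an eval_config block with the per-category flags set.

    Assumption: the per-category flags only take effect once the patch
    described in this post has been applied.
    """
    flag = "true" if per_category else "false"
    lines = [
        "eval_config: {",
        '  metrics_set: "coco_detection_metrics"',
        f"  include_metrics_per_category: {flag}",
        f"  all_metrics_per_category: {flag}",
        "}",
    ]
    return "\n".join(lines)


print(render_eval_config())
```

The resulting text would replace the eval_config section of a checkpoint's pipeline.config before the evaluation run.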

Allegro Trains: efficient experiment management

To be able to efficiently compare the model evaluations, we use an open-sourced experiment management tool called Allegro Trains. It’s very easy to integrate it into your code and it enables a load of different functionality out of the box. It can be used as an alternative to Tensorboard for visualizing experiment results.

The main script in the OD API is object_detection/model_main_tf2.py. It handles both the training and the eval stage. We created a small script that calls model_main_tf2.py in a loop to evaluate all EfficientDet checkpoints.
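The loop itself can be sketched roughly as follows. This is not our actual script: the directory layout under `root` and the helper `build_eval_command` are hypothetical, and the sketch only builds the command lines rather than running them. It relies on model_main_tf2.py switching to eval-only mode when `--checkpoint_dir` is provided.

```python
from pathlib import Path
from typing import List


def build_eval_command(x: int, root: Path) -> List[str]:
    """Build the eval command for EfficientDet D{x} (hypothetical layout)."""
    name = f"efficientdet_d{x}_coco17_tpu-32"
    model_root = root / name
    return [
        "python",
        "object_detection/model_main_tf2.py",
        f"--pipeline_config_path={(model_root / 'pipeline.config').as_posix()}",
        f"--model_dir={(model_root / 'eval').as_posix()}",
        # Passing checkpoint_dir puts the script into eval-only mode.
        f"--checkpoint_dir={(model_root / 'checkpoint').as_posix()}",
    ]


commands = [build_eval_command(x, Path("models")) for x in range(8)]
for command in commands:
    print(" ".join(command))  # pass `command` to subprocess.run to execute it
```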

To integrate Allegro Trains experiment management into the evaluation script, we had to add 2 (+1) lines of code. In the model_main_tf2.py script we've added these lines:

    from trains import Task

    task = Task.init(project_name="NAME_OF_THE_PROJECT", task_name="NAME_OF_THE_TASK")

    # OPTIONAL - logs the pipeline.config into the Trains dashboard
    task.connect_configuration(FLAGS.pipeline_config_path)

With that, Trains automatically starts to log numerous things for you. You can find a comprehensive list of features here.

Comparing different EfficientDet models

At this link, you can find the results of the evaluation of the 8 EfficientDet models included in the TF2 OD API. We’ve named the experiments efficientdet_d{X}_coco17_tpu-32, where {X} denotes the compound scaling factor of the EfficientDet model. You’ll get the same results if you run the sample Colab notebook, and your experiments will show up on the demo Trains server.

In this section, we’ll show you how to efficiently compare different models and verify their performance on the evaluation dataset. We’re using COCO 2017 Val dataset since it’s a standard dataset for the evaluation of object detection models in the TF OD API.

We’re interested in the COCO Object Detection model evaluation metrics. Press here to see experiments’ results. This page contains graphs with all the metrics that we’re interested in.

We can first take a look at the DetectionBoxes_Precision plot which contains the average precision metric for all the categories in the dataset. The value of the mAP metric corresponds to the mAP metric reported in the table in the TF2 Model Zoo.

Thanks to the patch we applied to the pycocotools, we can also get the per-category mAP metrics. Since the COCO 2017 detection task covers 80 object categories (with label IDs running up to 90), we want to know the contribution of each category to the mean average precision. This way we get more granular insight into the performance of the evaluated model. For example, you might be interested in how the model performs only for small objects in a certain category. The aggregated statistics cannot give you such insights, while the proposed patch makes them available!
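Once per-category values are available, relating them back to the aggregate is simple. The sketch below averages per-category AP values and lists the weakest categories. The helper `summarize` is our own, and the numbers in `toy_ap` are made-up placeholders for illustration, not evaluation results.

```python
from statistics import mean
from typing import Dict, List, Tuple


def summarize(per_category_ap: Dict[str, float],
              worst_k: int = 2) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the mean AP over categories and the worst_k weakest ones."""
    overall = mean(per_category_ap.values())
    weakest = sorted(per_category_ap.items(), key=lambda kv: kv[1])[:worst_k]
    return overall, weakest


# Placeholder values only; real numbers come from the evaluation run.
toy_ap = {"person": 0.50, "bus": 0.60, "banana": 0.20, "car": 0.40}
overall, weakest = summarize(toy_ap)
print(f"mean AP: {overall:.3f}")
print("weakest categories:", weakest)
```

A low aggregate score may be dominated by categories you do not care about, which is exactly what this kind of breakdown makes visible.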

We also use Allegro Trains’ ability to compare multiple experiments. The experiment comparison shows all the differences between the models. We can first get a detailed scalar and plot comparison of the relevant stats. In our example, we’ll compare the performance of the EfficientDet D0, D1, and D2 models. In these results, compound scaling clearly improves the performance of the models.

One of the additional benefits of having per category stats is that you can analyze the influence of compound scaling factors on the accuracy of a certain class of interest. For example, if you’re interested in detecting buses in a surveillance video, you can analyze the graph that shows mAP performance for the bus category vs. compound scaling factor of the EfficientDet model. This helps decide which model to use, and where the sweet spot is between performance and computational complexity!

One of the interesting things that you can also compare is the model configuration file pipeline.config. You can see that the basic difference between the EfficientDet models is in the dimensions of the input image and the number/depth of filters, as discussed earlier.

The next plot contains the mAP values for 3 EfficientDet models. There is an obvious benefit in increasing the input image resolution, as well as in increasing the number of filters in the model. While the D0 model achieves 33.55% mAP, the D2 model outperforms it with 41.79% mAP. You can also try per-class comparisons, comparisons of other EfficientDet models, or whatever you find interesting for your application.
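To put the two numbers in perspective, the short calculation below works out the absolute and relative improvement of D2 over D0, using only the mAP values quoted above.

```python
d0_map = 33.55  # EfficientDet D0 mAP in percent, as reported above
d2_map = 41.79  # EfficientDet D2 mAP in percent, as reported above

absolute_gain = d2_map - d0_map                # in mAP points
relative_gain = absolute_gain / d0_map * 100   # in percent of the D0 score

print(f"absolute gain: {absolute_gain:.2f} mAP points")
print(f"relative gain: {relative_gain:.1f}%")
```

That is roughly 8.24 mAP points, or about a 24.6% relative improvement, which you then have to weigh against the extra compute of the larger model.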

How is TF OD API used to improve construction site safety?

Forsight is an early-stage startup and our mission is to turn construction sites into safe environments for workers. Forsight uses computer vision and machine learning, processing real-time CCTV footage, to help safety engineers monitor the proper use of personal protection equipment (PPE) to keep sites safe and secure.

Our construction site monitoring pipeline is built on top of the TF OD API and features include PPE detection and monitoring, social distance tracking, virtual geofence monitoring, no-park zone monitoring, and fire detection. At Forsight, we also use Trains to keep track of our experiments, share them between team members, and log everything so we can reproduce it.

As the COVID-19 pandemic continues, construction projects around the world are actively looking for ways to restart or keep projects going while keeping workers safe. Computer vision and machine learning can help construction managers ensure that their construction sites are safe. We built a real-time monitoring pipeline that tracks social distancing adherence between workers.

In addition to the new, invisible threat of COVID, there are some age-old dangers that all construction workers face each day, notably the ‘Fatal Four’: falls, struck-by-object, caught-in or caught-between, and electrocution hazards. Ensuring that workers wear their PPE is crucial for the overall safety of a construction site. TF OD API is a great starting point for building an autonomous PPE monitoring pipeline. Our next blog will touch on how to train a basic helmet detector using the new TF OD API.

Some areas of a construction site are more dangerous than others. Creating virtual geofence areas and monitoring them using CCTV cameras adds huge value to the construction managers since they can focus on other tasks while being aware of any geofence breaches happening on their site. Moreover, geofencing can be easily extended to monitoring access to machines and heavy equipment.
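One simple way to think about a geofence check is shown below. This is a generic sketch, not Forsight's production code: it assumes detections arrive as (xmin, ymin, xmax, ymax) pixel boxes, uses the bottom-center of the box as the worker's position, and tests it against a fence polygon with the standard ray-casting algorithm. All names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def foot_point(box: Box) -> Point:
    """Approximate where a detected person stands: bottom-center of the box."""
    xmin, _ymin, xmax, ymax = box
    return ((xmin + xmax) / 2.0, ymax)


def inside_polygon(point: Point, polygon: List[Point]) -> bool:
    """Ray casting: count polygon edges crossed by a ray going right."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge spans the ray's y coordinate
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


fence = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
print(inside_polygon(foot_point((4.0, 2.0, 6.0, 8.0)), fence))
print(inside_polygon(foot_point((12.0, 2.0, 14.0, 8.0)), fence))
```

Using the foot point rather than the box center is a design choice: a person's feet are what actually touch the ground plane on which the fence is drawn.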

Conclusion

In this blog, we have discussed the benefits of using the new TF2 OD API. We have shown how to efficiently evaluate pre-trained OD models that are readily available in the TF2 OD API Model Zoo. We have also shown how to use Allegro Trains as an efficient experiment management solution that enables powerful insights and stats. Finally, we’ve shown some real-world applications of object detection in a construction environment.

This is the first post in a series that provides instructions and advice on using the TF2 OD API. In the next post, we’ll show how to train a custom object detector that enables you to detect workers wearing their PPE. Please follow us for more hands-on tutorials! Also, feel free to reach out to us if you have any questions or comments!

[1] “Speed/accuracy trade-offs for modern convolutional object detectors.” Huang J, Rathod V, Sun C, Zhu M, Korattikara A, Fathi A, Fischer I, Wojna Z, Song Y, Guadarrama S, Murphy K, CVPR 2017

[2] TensorFlow Object Detection API, https://github.com/tensorflow/models/tree/master/research/object_detection

[3] “EfficientDet: Scalable and Efficient Object Detection” Mingxing Tan, Ruoming Pang, Quoc V. Le, https://arxiv.org/abs/1911.09070

[4] “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks” Mingxing Tan and Quoc V. Le, 2019, https://arxiv.org/abs/1905.11946

Translated from: https://medium.com/@ralasic1/new-tf2-object-detection-api-5c6ea8362a8c
