To convert YOLOv3 weights into an `.h5` file that Keras can load, follow these steps:

1. Install the `keras` and `tensorflow` libraries:

```
pip install keras tensorflow
```

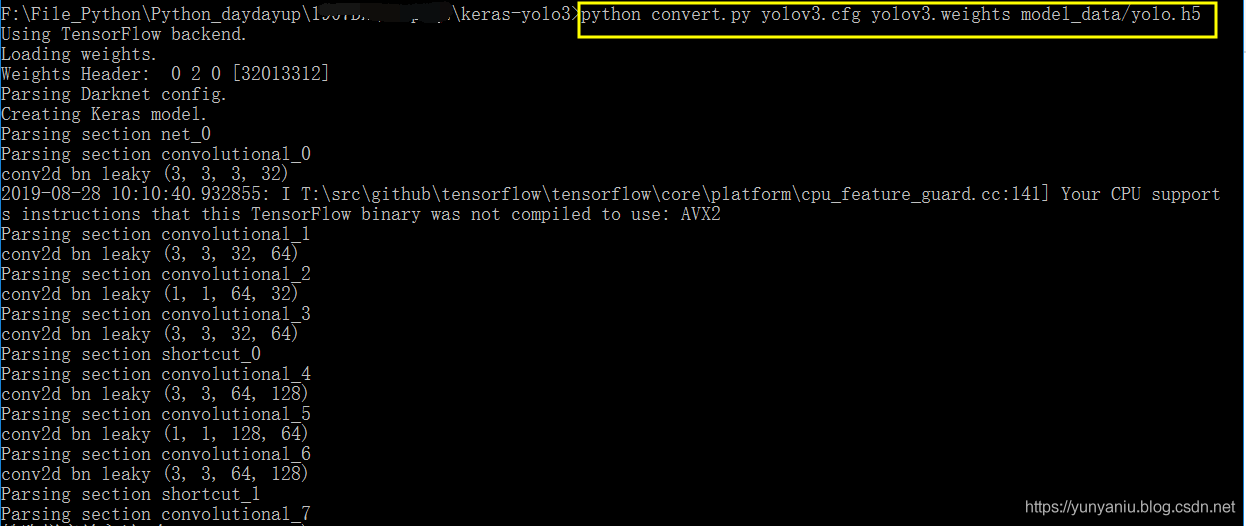

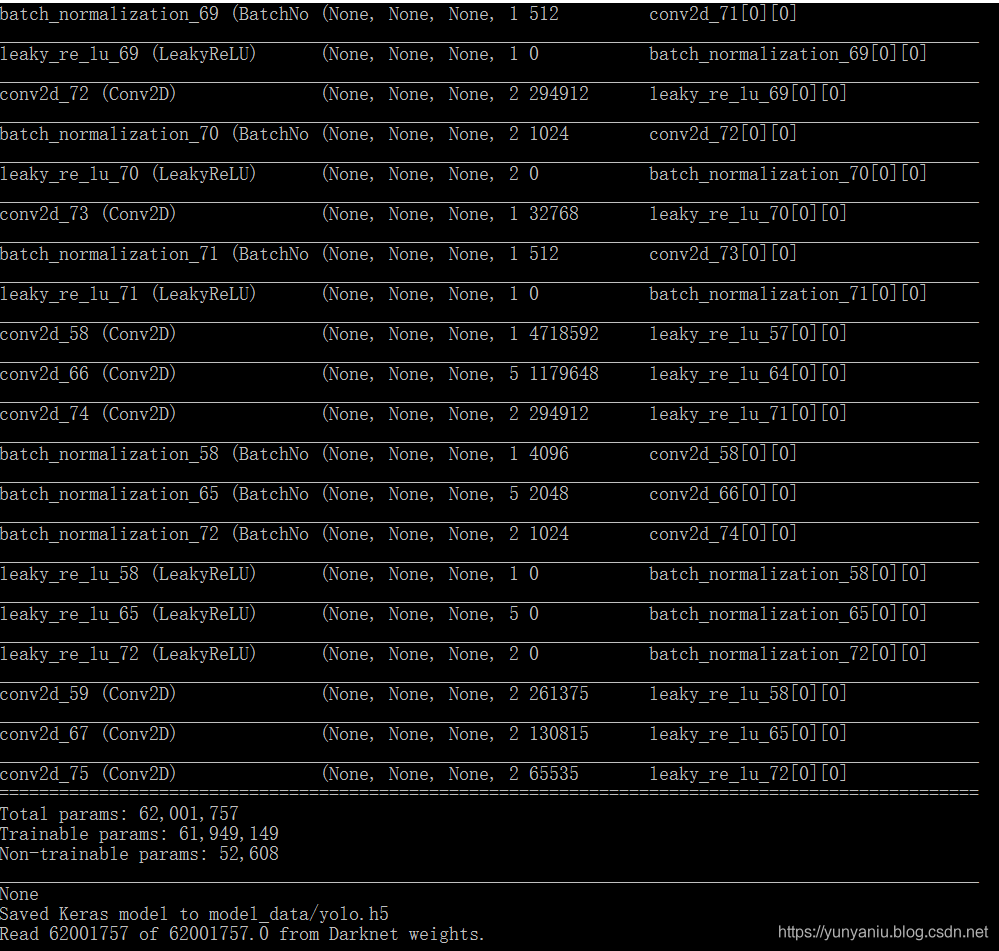

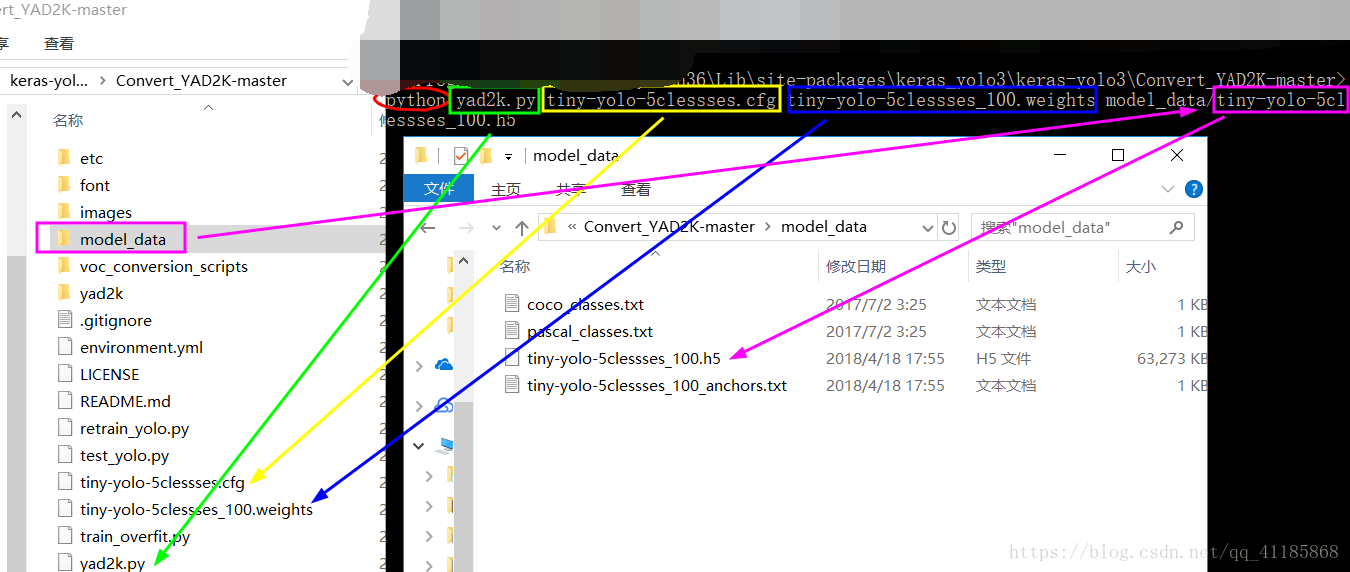

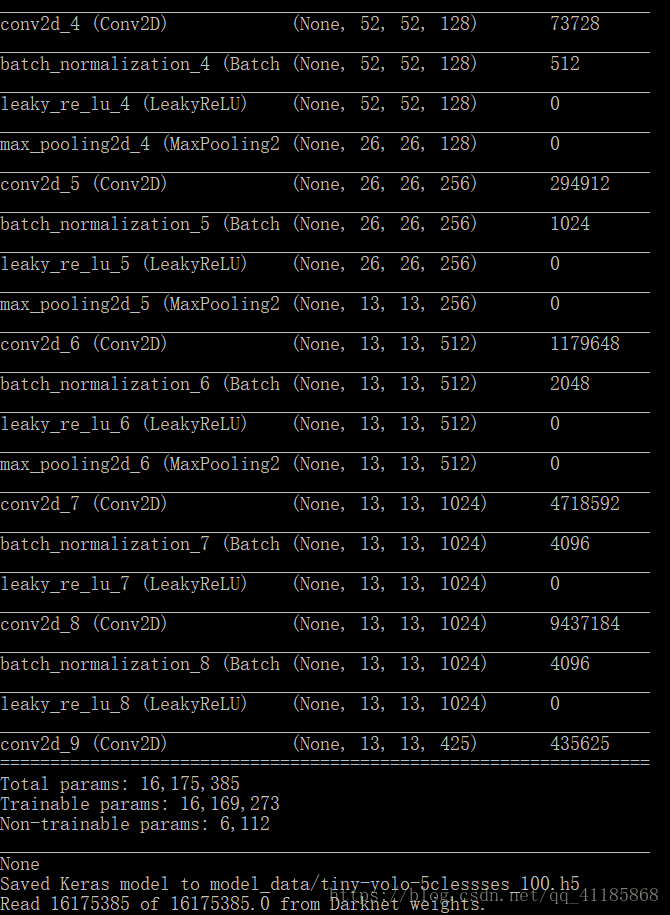

2. Download the YOLOv3 weights file `yolov3.weights` and the configuration file `yolov3.cfg`.

3. Use the `yolo_weights_convert.py` script to convert the weights file into a Keras model:

```
python yolo_weights_convert.py yolov3.cfg yolov3.weights model_data/yolo.h5
```

Here, `yolov3.cfg` is the path to the YOLOv3 configuration file, `yolov3.weights` is the path to the weights file, and `model_data/yolo.h5` is the path where the converted Keras model is saved.
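Before running the conversion, it can help to see what a `.weights` file contains. The following is a minimal sketch, not part of `yolo_weights_convert.py`. It assumes the commonly described Darknet layout: three little-endian `int32` version fields, a `seen` counter that is 8 bytes wide when `major * 10 + minor >= 2` and 4 bytes otherwise, then raw `float32` values. The function name `read_darknet_weights` and the demo file are hypothetical, and the demo writes a tiny synthetic file rather than reading the real `yolov3.weights`.

```python
import os
import struct
import tempfile


def read_darknet_weights(path):
    """Read a Darknet .weights file and return (header, weights).

    Assumed layout (little-endian): int32 major, minor, revision; then a
    'seen' counter stored as int64 when major * 10 + minor >= 2 and as
    int32 otherwise; then raw float32 values until the end of the file.
    """
    with open(path, "rb") as f:
        major, minor, revision = struct.unpack("<3i", f.read(12))
        if major * 10 + minor >= 2:
            (seen,) = struct.unpack("<q", f.read(8))
        else:
            (seen,) = struct.unpack("<i", f.read(4))
        payload = f.read()

    count = len(payload) // 4  # number of complete float32 values
    weights = struct.unpack("<%df" % count, payload[:count * 4])
    header = {"major": major, "minor": minor, "revision": revision, "seen": seen}
    return header, weights


# Demo on a tiny synthetic file, so nothing needs to be downloaded.
demo_path = os.path.join(tempfile.mkdtemp(), "demo.weights")
with open(demo_path, "wb") as f:
    f.write(struct.pack("<3i", 0, 2, 0))  # made-up version 0.2.0
    f.write(struct.pack("<q", 1000))      # made-up 'seen' counter
    f.write(struct.pack("<6f", 0.0, 1.0, 2.0, 3.0, 4.0, 5.0))

header, weights = read_darknet_weights(demo_path)
print(header, len(weights))
```

For the real file, which holds tens of millions of values, loading the payload with `np.frombuffer(payload, dtype="<f4")` would likely be more practical than unpacking into a tuple.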

The code for `yolo_weights_convert.py` is as follows:

```python

import argparse
import os
import struct

import numpy as np
from keras import backend as K
# `add`, `concatenate` and `Layer` are imported from `keras.layers`, which also
# works on Keras versions that no longer ship `keras.layers.merge` or
# `keras.engine.topology`.
from keras.layers import (BatchNormalization, Conv2D, Input, Layer, LeakyReLU,
                          UpSampling2D, ZeroPadding2D, add, concatenate)
from keras.models import Model

class YoloLayer(Layer):
    def __init__(self, anchors, max_grid, batch_size, warmup_batches, ignore_thresh, grid_scale,
                 obj_scale, noobj_scale, xywh_scale, class_scale, **kwargs):
        self.ignore_thresh = ignore_thresh
        self.warmup_batches = warmup_batches
        self.anchors = anchors
        self.grid_scale = grid_scale
        self.obj_scale = obj_scale
        self.noobj_scale = noobj_scale
        self.xywh_scale = xywh_scale
        self.class_scale = class_scale
        self.batch_size = batch_size
        self.true_boxes = K.placeholder(shape=(self.batch_size, 1, 1, 1, 50, 4))

        super(YoloLayer, self).__init__(**kwargs)

    def build(self, input_shape):
        super(YoloLayer, self).build(input_shape)

    def get_grid_size(self, net_h, net_w):
        return net_h // 32, net_w // 32

    def call(self, x):
        input_image, y_pred, y_true = x

        self.net_h, self.net_w = input_image.shape.as_list()[1:3]
        self.grid_h, self.grid_w = self.get_grid_size(self.net_h, self.net_w)

        # adjust the shape of the y_predict [batch, grid_h, grid_w, 3, 4+1+80]
        y_pred = K.reshape(y_pred, (self.batch_size, self.grid_h, self.grid_w, 3, 4 + 1 + 80))

        # convert the coordinates to absolute coordinates
        box_xy = K.sigmoid(y_pred[..., :2])
        box_wh = K.exp(y_pred[..., 2:4])
        box_confidence = K.sigmoid(y_pred[..., 4:5])
        box_class_probs = K.softmax(y_pred[..., 5:])

        # adjust the shape of the y_true [batch, 50, 4+1]
        object_mask = y_true[..., 4:5]
        true_class_probs = y_true[..., 5:]

        # true_boxes[..., 0:2] = center, true_boxes[..., 2:4] = wh
        true_boxes = self.true_boxes[..., 0:4]  # shape=[batch, 50, 4]
        true_xy = true_boxes[..., 0:2] * [self.grid_w, self.grid_h]  # shape=[batch, 50, 2]
        true_wh = true_boxes[..., 2:4] * [self.net_w, self.net_h]  # shape=[batch, 50, 2]
        true_wh_half = true_wh / 2.
        true_mins = true_xy - true_wh_half
        true_maxes = true_xy + true_wh_half

        # calculate the Intersection Over Union (IOU)
        pred_xy = K.expand_dims(box_xy, 4)
        pred_wh = K.expand_dims(box_wh, 4)
        pred_wh_half = pred_wh / 2.
        pred_mins = pred_xy - pred_wh_half
        pred_maxes = pred_xy + pred_wh_half

        intersect_mins = K.maximum(pred_mins, true_mins)
        intersect_maxes = K.minimum(pred_maxes, true_maxes)
        intersect_wh = K.maximum(intersect_maxes - intersect_mins, 0.)
        intersect_areas = intersect_wh[..., 0] * intersect_wh[..., 1]

        pred_areas = pred_wh[..., 0] * pred_wh[..., 1]
        true_areas = true_wh[..., 0] * true_wh[..., 1]
        union_areas = pred_areas + true_areas - intersect_areas
        iou_scores = intersect_areas / union_areas

        # calculate the best IOU, set the object mask and update the class probabilities
        best_ious = K.max(iou_scores, axis=4)
        object_mask_bool = K.cast(best_ious >= self.ignore_thresh, K.dtype(best_ious))
        no_object_mask_bool = 1 - object_mask_bool

        no_object_loss = no_object_mask_bool * box_confidence
        no_object_loss = self.noobj_scale * K.mean(no_object_loss)

        true_box_class = true_class_probs * object_mask
        true_box_confidence = object_mask

        true_box_xy = true_boxes[..., 0:2] * [self.grid_w, self.grid_h] - pred_mins
        true_box_wh = K.log(true_boxes[..., 2:4] * [self.net_w, self.net_h] / pred_wh)
        true_box_wh = K.switch(object_mask, true_box_wh, K.zeros_like(true_box_wh))  # avoid log(0)=-inf
        true_box_xy = K.switch(object_mask, true_box_xy, K.zeros_like(true_box_xy))  # avoid log(0)=-inf

        box_loss_scale = 2 - true_boxes[..., 2:3] * true_boxes[..., 3:4]

        xy_loss = object_mask * box_loss_scale * K.binary_crossentropy(true_box_xy, box_xy)
        wh_loss = object_mask * box_loss_scale * 0.5 * K.square(true_box_wh - box_wh)
        confidence_loss = true_box_confidence * K.binary_crossentropy(box_confidence, true_box_confidence) \
            + (1 - true_box_confidence) * K.binary_crossentropy(box_confidence, true_box_confidence) \
            * no_object_mask_bool
        class_loss = object_mask * K.binary_crossentropy(true_box_class, box_class_probs)

        xy_loss = K.mean(K.sum(xy_loss, axis=[1, 2, 3, 4]))
        wh_loss = K.mean(K.sum(wh_loss, axis=[1, 2, 3, 4]))
        confidence_loss = K.mean(K.sum(confidence_loss, axis=[1, 2, 3, 4]))
        class_loss = K.mean(K.sum(class_loss, axis=[1, 2, 3, 4]))

        loss = self.grid_scale * (xy_loss + wh_loss) + confidence_loss * self.obj_scale + no_object_loss \
            + class_loss * self.class_scale

        # warm up training
        batch_no = K.cast(self.batch_size / 2, dtype=K.dtype(object_mask))
        warmup_steps = self.warmup_batches
        warmup_lr = batch_no / warmup_steps
        batch_no = K.cast(K.minimum(warmup_steps, batch_no), dtype=K.dtype(object_mask))
        lr = self.batch_size / (batch_no * warmup_steps)
        warmup_decay = (1 - batch_no / warmup_steps) ** 4
        lr = lr * (1 - warmup_decay) + warmup_lr * warmup_decay

        self.add_loss(loss)
        self.add_metric(loss, name='loss', aggregation='mean')
        self.add_metric(xy_loss, name='xy_loss', aggregation='mean')
        self.add_metric(wh_loss, name='wh_loss', aggregation='mean')
        self.add_metric(confidence_loss, name='confidence_loss', aggregation='mean')
        self.add_metric(class_loss, name='class_loss', aggregation='mean')
        self.add_metric(lr, name='lr', aggregation='mean')

        return y_pred

    def compute_output_shape(self, input_shape):
        return input_shape[1]

    def get_config(self):
        config = {
            'ignore_thresh': self.ignore_thresh,
            'warmup_batches': self.warmup_batches,
            'anchors': self.anchors,
            'grid_scale': self.grid_scale,
            'obj_scale': self.obj_scale,
            'noobj_scale': self.noobj_scale,
            'xywh_scale': self.xywh_scale,
            'class_scale': self.class_scale
        }
        base_config = super(YoloLayer, self).get_config()
        return dict(list(base_config.items()) + list(config.items()))

def _conv_block(inp, convs, skip=True):
    x = inp
    count = 0

    for conv in convs:
        # remember the input of the last two convolutions for the residual connection
        if count == (len(convs) - 2) and skip:
            skip_connection = x
        count += 1

        # The layer dicts in this listing carry no 'layer_idx' key, so fall back
        # to Keras' automatic layer names instead of raising a KeyError.
        idx = conv.get('layer_idx')
        suffix = None if idx is None else str(idx)

        if conv['stride'] > 1:
            x = ZeroPadding2D(((1, 0), (1, 0)))(x)  # unlike TensorFlow, Darknet pads on the left and top
        x = Conv2D(conv['filter'],
                   conv['kernel'],
                   strides=conv['stride'],
                   padding='valid' if conv['stride'] > 1 else 'same',  # 'valid' because the padding was added above
                   name=None if suffix is None else 'conv_' + suffix,
                   use_bias=False if conv['bnorm'] else True)(x)
        if conv['bnorm']:
            x = BatchNormalization(epsilon=0.001, name=None if suffix is None else 'bnorm_' + suffix)(x)
        if conv['leaky']:
            x = LeakyReLU(alpha=0.1, name=None if suffix is None else 'leaky_' + suffix)(x)

    return add([skip_connection, x]) if skip else x

def make_yolov3_model():
    input_image = Input(shape=(None, None, 3))
    true_boxes = Input(shape=(1, 1, 1, 50, 4))

    # Layer 0 => 4
    x = _conv_block(input_image, [{'filter': 32, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True},
                                  {'filter': 64, 'kernel': 3, 'stride': 2, 'bnorm': True, 'leaky': True},
                                  {'filter': 32, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True},
                                  {'filter': 64, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True}])

    # Layer 5 => 8
    x = _conv_block(x, [{'filter': 128, 'kernel': 3, 'stride': 2, 'bnorm': True, 'leaky': True},
                        {'filter': 64, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True},
                        {'filter': 128, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True}])

    # Layer 9 => 11
    x = _conv_block(x, [{'filter': 64, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True},
                        {'filter': 128, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True}])

    # Layer 12 => 15
    x = _conv_block(x, [{'filter': 256, 'kernel': 3, 'stride': 2, 'bnorm': True, 'leaky': True},
                        {'filter': 128, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True},
                        {'filter': 256, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True}])

    # Layer 16 => 36
    for i in range(7):
        x = _conv_block(x, [{'filter': 128, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True},
                            {'filter': 256, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True}])

    skip_36 = x

    # Layer 37 => 40
    x = _conv_block(x, [{'filter': 512, 'kernel': 3, 'stride': 2, 'bnorm': True, 'leaky': True},
                        {'filter': 256, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True},
                        {'filter': 512, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True}])

    # Layer 41 => 61
    for i in range(7):
        x = _conv_block(x, [{'filter': 256, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True},
                            {'filter': 512, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True}])

    skip_61 = x

    # Layer 62 => 65
    x = _conv_block(x, [{'filter': 1024, 'kernel': 3, 'stride': 2, 'bnorm': True, 'leaky': True},
                        {'filter': 512, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True},
                        {'filter': 1024, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True},
                        {'filter': 512, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True},
                        {'filter': 1024, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True}])

    # Layer 66 => 74
    for i in range(3):
        x = _conv_block(x, [{'filter': 512, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True},
                            {'filter': 1024, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True}])

    # Layer 75 => 79
    x = _conv_block(x, [{'filter': 512, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True},
                        {'filter': 1024, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True},
                        {'filter': 512, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True},
                        {'filter': 1024, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True},
                        {'filter': 512, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True}])

    # Layer 80 => 82
    yolo_82 = _conv_block(x, [{'filter': 1024, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True},
                              {'filter': 255, 'kernel': 1, 'stride': 1, 'bnorm': False, 'leaky': False}], skip=False)

    # Layer 83 => 86
    x = _conv_block(x, [{'filter': 256, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True}],
                    skip=False)
    x = UpSampling2D(2)(x)
    x = concatenate([x, skip_61])

    # Layer 87 => 91
    x = _conv_block(x, [{'filter': 256, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True},
                        {'filter': 512, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True},
                        {'filter': 256, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True},
                        {'filter': 512, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True},
                        {'filter': 256, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True}], skip=False)

    # Layer 92 => 94
    yolo_94 = _conv_block(x, [{'filter': 512, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True},
                              {'filter': 255, 'kernel': 1, 'stride': 1, 'bnorm': False, 'leaky': False}],
                          skip=False)

    # Layer 95 => 98
    x = _conv_block(x, [{'filter': 128, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True}],
                    skip=False)
    x = UpSampling2D(2)(x)
    x = concatenate([x, skip_36])

    # Layer 99 => 106
    yolo_106 = _conv_block(x, [{'filter': 128, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True},
                               {'filter': 256, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True},
                               {'filter
```
