在nx结合zed相机,使用yolo进行物体识别

1.venv虚拟环境安装

由于设备自带的python为python2.7环境,所以先创建虚拟环境并激活

sudo apt-get install software-properties-common

sudo apt install python3.6(可用存储库管理)

sudo aptitude install python3.6-venv

python3 -m venv torch2

source torch2/bin/activate

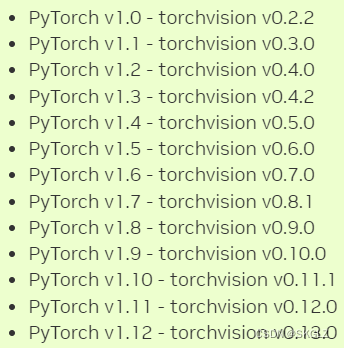

2.安装torch和torchvision

2.1安装torch及其依赖

NVIDIA论坛网址:https://forums.developer.nvidia.com/t/pytorch-for-jetson/72048,whl文件可从网站下载,torchvison从github下载对应版本即可

sudo apt-get install python3-pip libopenblas-base libopenmpi-dev libomp-dev

pip3 install Cython

pip3 install torch-1.8.0-cp36-cp36m-linux_aarch64.whl

2.2安装对应版本torchvision

由于我安装的torch1.7,git clone对应的0.8.1torchvision,下载之后执行以下操作:

cd torchvision

export BUILD_VERSION=0.8.1

python3 setup.py install(venv虚拟环境不能添加–user)

若过程中出现 invalid command ‘bdist_wheel’,如下:

解决方法:pip3 install wheel

安装完成后可能仍然无法使用torchvision,提示future feature annotations is not defined,可能是由于pillow版本不符合(过高),我使用的版本下对应解决方法为:pip install pillow==8.1.0

安装完成:

3.安装ZED的python api

cd /usr/local/zed

python3 get_python_api.py

其他问题:报错illegal instruction(core dumped)

4.安装yolo

为了方便最开始的测试,此处安装yolov5进行简单试验

直接git要使用的yolo版本,有些yolo版本无法正常安装使用,先换其他版本,不要换环境,若要使用ZED相机官方给的示例,2022年开始的版本更改个别函数名称或参数即可

安装yolo直接pip install -r requirements.txt即可

速度下载很慢或卡在building时,解决方法:

pip install --upgrade pip

安装opengl相关

pip install PyOpenGL PyOpenGL_accelerate

5.安装完成后,用自己修改和训练的模型正常测试即可,可用torch线程运行yolo检测,ros线程控制设备和相机进行交互

小实验的代码:

#!/usr/bin/env python3

import sys

import numpy as np

import argparse

import torch

import cv2

import pyzed.sl as sl

import torch.backends.cudnn as cudnn

sys.path.insert(0, './yolov5')

###problem

from models.experimental import attempt_load#######################################################

from utils.general import check_img_size, non_max_suppression, scale_coords, xyxy2xywh

from utils.torch_utils import select_device

from utils.augmentations import letterbox

###

from threading import Lock, Thread

from time import sleep

import ogl_viewer.viewer as gl

import cv_viewer.tracking_viewer as cv_viewer

###ros

from sys import maxunicode

from turtle import position

import rospy

from std_msgs.msg import String

from std_msgs.msg import Float64

from zed_interfaces.msg import Object

from zed_interfaces.msg import ObjectsStamped

from geometry_msgs.msg import Twist

import math

###

lock = Lock()

run_signal = False

run_signal2 = False

exit_signal = False

def img_preprocess(img, device, half, net_size):

net_image, ratio, pad = letterbox(img[:, :, :3], net_size, auto=False)

net_image = net_image.transpose((2, 0, 1))[::-1] # HWC to CHW, BGR to RGB

net_image = np.ascontiguousarray(net_image)

img = torch.from_numpy(net_image).to(device)

img = img.half() if half else img.float() # uint8 to fp16/32

img /= 255.0 # 0 - 255 to 0.0 - 1.0

if img.ndimension() == 3:

img = img.unsqueeze(0)

return img, ratio, pad

def xywh2abcd(xywh, im_shape):

output = np.zeros((4, 2))

# Center / Width / Height -> BBox corners coordinates

x_min = (xywh[0] - 0.5*xywh[2]) * im_shape[1]

x_max = (xywh[0] + 0.5*xywh[2]) * im_shape[1]

y_min = (xywh[1] - 0.5*xywh[3]) * im_shape[0]

y_max = (xywh[1] + 0.5*xywh[3]) * im_shape[0]

# A ------ B

# | Object |

# D ------ C

output[0][0] = x_min

output[0][1] = y_min

output[1][0] = x_max

output[1][1] = y_min

output[2][0] = x_min

output[2][1] = y_max

output[3][0] = x_max

output[3][1] = y_max

return output

def detections_to_custom_box(detections, im, im0):

output = []

for i, det in enumerate(detections):

if len(det):

det[:, :4] = scale_coords(im.shape[2:], det[:, :4], im0.shape).round()

gn = torch.tensor(im0.shape)[[1, 0, 1, 0]] # normalization gain whwh

for *xyxy, conf, cls in reversed(det):

xywh = (xyxy2xywh(torch.tensor(xyxy).view(1, 4)) / gn).view(-1).tolist() # normalized xywh

# Creating ingestable objects for the ZED SDK

obj = sl.CustomBoxObjectData()

obj.bounding_box_2d = xywh2abcd(xywh, im0.shape)

obj.label = cls

obj.probability = conf

obj.is_grounded = False

output.append(obj)

return output

def torch_thread(weights, img_size, conf_thres=0.2, iou_thres=0.45):

global image_net, exit_signal, run_signal, detections

print("Intializing Network...")

device = select_device()

half = device.type != 'cpu' # half precision only supported on CUDA

imgsz = img_size

# Load model

model = attempt_load(weights, map_location=device) # load FP32

stride = int(model.stride.max()) # model stride

imgsz = check_img_size(imgsz, s=stride) # check img_size

if half:

model.half() # to FP16

cudnn.benchmark = True

# Run inference

if device.type != 'cpu':

model(torch.zeros(1, 3, imgsz, imgsz).to(device).type_as(next(model.parameters()))) # run once

while not exit_signal:

if run_signal:

lock.acquire()

img, ratio, pad = img_preprocess(image_net, device, half, imgsz)

pred = model(img)[0]

det = non_max_suppression(pred, conf_thres, iou_thres)

# ZED CustomBox format (with inverse letterboxing tf applied)

detections = detections_to_custom_box(det, img, image_net)

lock.release()

run_signal = False

sleep(0.01)

def distance(position):

x=-position[2]

y=-position[0]+0.06

z=position[1]

dis=math.sqrt(x*x+y*y+z*z)

return dis,x,y,z

#if people_exist==TRUE

def movecar1(dis,x,y,z):

#linear_x angular_z

if dis>=1.5:

linear_x=0.3

elif dis<=0.7:

linear_x=-0.3

else:

linear_x=0

if abs(y)<0.35:

angular_z = -0.2

linear_y=-0.1

elif abs(y)>1:

angular_z = 0.3

linear_y = 0.1

else:

angular_z=0

linear_y=0

return linear_x,linear_y,angular_z

#if people_exist==FALSE

def movecar2():

angular_z=0.5

return angular_z

def find_object(object_list):

object_tot=object_list

max_con=object_tot[0]

for obj_tmp in object_tot:

if obj_tmp.confidence>max_con.confidence:

max_con=obj_tmp

return max_con

def ros_thread():

#global image_net, exit_signal, run_signal, detections

global objects_total, run_signal2###

print("rostopic publish!!!!!!!!!!!!!!!!!!!!!!")

try:

#rospy.init_node('pylistener',anonymous=True)

while (not rospy.is_shutdown()) and (not exit_signal):

if run_signal2:

lock.acquire()

obj=objects_total

rospy.loginfo("Listener:")

rospy.loginfo("people num: %d",len(obj.object_list))

pub_vel_cmd = rospy.Publisher('/tianbot_01/cmd_vel', Twist, queue_size=10)

pub_info = rospy.Publisher('detect_position_info', Float64, queue_size=10)###trans info

if len(obj.object_list)>0:

object_max=find_object(obj.object_list)

if object_max.confidence>90:

#the colsest people position:x,y,z

###num=closest_people(obj)

#position=obj.object_list[0].position###先考虑0

position=object_max.position

rospy.loginfo("the closest car position(x,y,z): %f %f %f",-position[2],-position[0]+0.06,position[1])

dis,x,y,z=distance(position)

linear_x,linear_y,angular_z=movecar1(dis,x,y,z)

#move

vel_cmd = Twist()

vel_cmd.linear.x = linear_x

vel_cmd.linear.y = linear_y

vel_cmd.angular.z = angular_z

pub_vel_cmd.publish(vel_cmd)

pub_info.publish(dis)###trans info

else:

angular_z=movecar2()

#move

vel_cmd = Twist()

vel_cmd.linear.x = 0.2

vel_cmd.angular.z = 0.5

pub_vel_cmd.publish(vel_cmd)

lock.release()

run_signal2 = False

sleep(0.01)

except rospy.ROSInterruptException:

pass

def main():

global image_net, exit_signal, run_signal, detections, objects_total, run_signal2

capture_thread = Thread(target=torch_thread,

kwargs={'weights': opt.weights, 'img_size': opt.img_size, "conf_thres": opt.conf_thres})

capture_thread.start()

capture_thread2 = Thread(target=ros_thread)

capture_thread2.start()

print("Initializing Camera...")

zed = sl.Camera()

input_type = sl.InputType()

if opt.svo is not None:

input_type.set_from_svo_file(opt.svo)

# Create a InitParameters object and set configuration parameters

init_params = sl.InitParameters(input_t=input_type, svo_real_time_mode=True)

init_params.camera_resolution = sl.RESOLUTION.HD720

init_params.coordinate_units = sl.UNIT.METER

init_params.depth_mode = sl.DEPTH_MODE.ULTRA # QUALITY

init_params.coordinate_system = sl.COORDINATE_SYSTEM.RIGHT_HANDED_Y_UP

init_params.depth_maximum_distance = 50

runtime_params = sl.RuntimeParameters()

status = zed.open(init_params)

if status != sl.ERROR_CODE.SUCCESS:

print(repr(status))

exit()

image_left_tmp = sl.Mat() ###self

print("Initialized Camera")

positional_tracking_parameters = sl.PositionalTrackingParameters()

# If the camera is static, uncomment the following line to have better performances and boxes sticked to the ground.

# positional_tracking_parameters.set_as_static = True

zed.enable_positional_tracking(positional_tracking_parameters)

obj_param = sl.ObjectDetectionParameters()

obj_param.detection_model = sl.DETECTION_MODEL.CUSTOM_BOX_OBJECTS

obj_param.enable_tracking = True

zed.enable_object_detection(obj_param)

objects = sl.Objects()

obj_runtime_param = sl.ObjectDetectionRuntimeParameters()

# Display

camera_infos = zed.get_camera_information()

# Create OpenGL viewer

viewer = gl.GLViewer()

point_cloud_res = sl.Resolution(min(camera_infos.camera_resolution.width, 720),

min(camera_infos.camera_resolution.height, 404))

point_cloud_render = sl.Mat()

viewer.init(camera_infos.camera_model, point_cloud_res, obj_param.enable_tracking)

point_cloud = sl.Mat(point_cloud_res.width, point_cloud_res.height, sl.MAT_TYPE.F32_C4, sl.MEM.CPU)

image_left = sl.Mat()

# Utilities for 2D display

display_resolution = sl.Resolution(min(camera_infos.camera_resolution.width, 1280),

min(camera_infos.camera_resolution.height, 720))

image_scale = [display_resolution.width / camera_infos.camera_resolution.width, display_resolution.height / camera_infos.camera_resolution.height]

image_left_ocv = np.full((display_resolution.height, display_resolution.width, 4), [245, 239, 239, 255], np.uint8)

# Utilities for tracks view

camera_config = zed.get_camera_information().camera_configuration

tracks_resolution = sl.Resolution(400, display_resolution.height)

track_view_generator = cv_viewer.TrackingViewer(tracks_resolution, camera_config.camera_fps,

init_params.depth_maximum_distance)

track_view_generator.set_camera_calibration(camera_config.calibration_parameters)

image_track_ocv = np.zeros((tracks_resolution.height, tracks_resolution.width, 4), np.uint8)

# Camera pose

cam_w_pose = sl.Pose()

while viewer.is_available() and not exit_signal:

if zed.grab(runtime_params) == sl.ERROR_CODE.SUCCESS:

# -- Get the image

lock.acquire()

zed.retrieve_image(image_left_tmp, sl.VIEW.LEFT)

image_net = image_left_tmp.get_data()

lock.release()

run_signal = True

# -- Detection running on the other thread

while run_signal:

sleep(0.001)

# Wait for detections

lock.acquire()

# -- Ingest detections

zed.ingest_custom_box_objects(detections)

lock.release()

lock.acquire()

zed.retrieve_objects(objects, obj_runtime_param)

###print(len(objects.object_list))

objects_total=objects###

lock.release()

###move car

run_signal2=True

while run_signal2:

sleep(0.001)

# -- Display

# Retrieve display data

zed.retrieve_measure(point_cloud, sl.MEASURE.XYZRGBA, sl.MEM.CPU, point_cloud_res)

point_cloud.copy_to(point_cloud_render)

zed.retrieve_image(image_left, sl.VIEW.LEFT, sl.MEM.CPU, display_resolution)

zed.get_position(cam_w_pose, sl.REFERENCE_FRAME.WORLD)

# 3D rendering

viewer.updateData(point_cloud_render, objects)

# 2D rendering

np.copyto(image_left_ocv, image_left.get_data())

cv_viewer.render_2D(image_left_ocv, image_scale, objects, obj_param.enable_tracking)

global_image = cv2.hconcat([image_left_ocv, image_track_ocv])

# Tracking view

track_view_generator.generate_view(objects, cam_w_pose, image_track_ocv, objects.is_tracked)

cv2.imshow("ZED | 2D View and Birds View", global_image)

key = cv2.waitKey(10)

if key == 27:

exit_signal = True

else:

exit_signal = True

viewer.exit()

exit_signal = True

zed.close()

if __name__ == '__main__':

parser = argparse.ArgumentParser()

parser.add_argument('--weights', nargs='+', type=str, default='./test4.pt', help='model.pt path(s)')

parser.add_argument('--svo', type=str, default=None, help='optional svo file')

parser.add_argument('--img_size', type=int, default=416, help='inference size (pixels)')

parser.add_argument('--conf_thres', type=float, default=0.4, help='object confidence threshold')

opt = parser.parse_args()

rospy.init_node('pylistener',anonymous=True)

with torch.no_grad():

main()

414

414

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?