一、前言

最近想实现AVM拼接,看了不少博客和论文,不过比较愚钝,一直没能很好理解原理,尤其是怎么在实现时把下文式1与式2中Z1和Z2消除的,所以严谨的推导了一下对应的公式,如有不对,水平有限,烦请指出~

IPM变换(逆透视变换),顾名思义,即将正常透视效应消除的变换,变换结果为鸟瞰图BEV(俯视图);AVM环视拼接一般是将车身前后左右的四个鱼眼相机拼接成环视图(也是俯视),其使用的单应矩阵将四个相机转到同一个俯视坐标系。

投影变换的通俗理解就是:假设同一个相机分别在A、B两个不同位置,以不同的位姿拍摄同一个平面(重点是拍摄平面,例如桌面、墙面、地平面),生成了两张图象,这两张图象之间的关系就叫做投影变换。

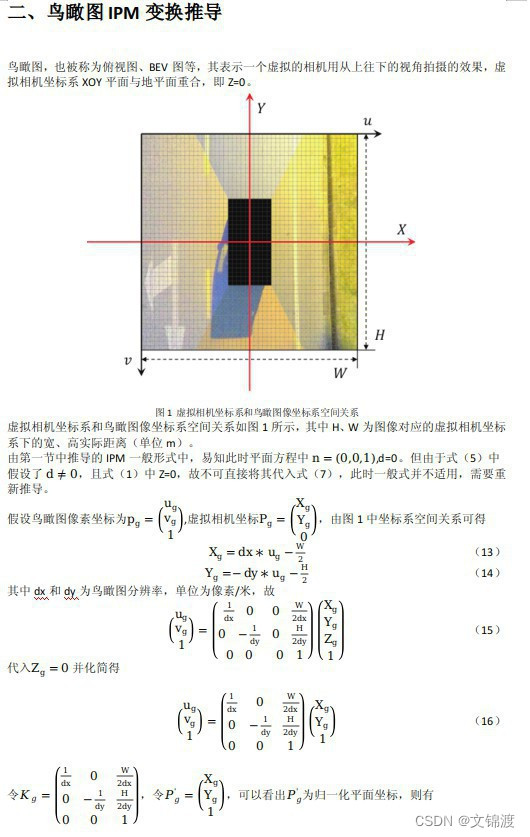

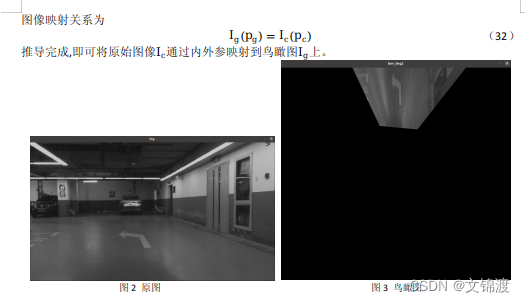

二、公式推导

公式太难打了,全用word存了,在这直接截图

这也就解释了:为什么有的AVM算法是需要标定相机的内外参,而有的只提供单应矩阵H。

三、示例代码

#include <Eigen/Core>

#include <Eigen/Dense>

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/core/eigen.hpp>

#include <opencv2/opencv.hpp>

#include <vector>

void DistortEigenPoints(const Eigen::Vector3d &undis_pt, Eigen::Vector3d &dis_pt, double k1, double k2, double p1,

double p2) {

double x2 = undis_pt[0] * undis_pt[0];

double y2 = undis_pt[1] * undis_pt[1];

double r2 = x2 + y2;

if (r2 == 0) return;

double r4 = r2 * r2;

double r6 = r2 * r4;

double xy = undis_pt[0] * undis_pt[1];

dis_pt[0] = undis_pt[0] * (1 + k1 * r2 + k2 * r4) + 2 * p1 * xy + p2 * (r2 + 2 * x2);

dis_pt[1] = undis_pt[1] * (1 + k1 * r2 + k2 * r4) + p1 * (r2 + 2 * y2) + 2 * p2 * xy;

dis_pt[2] = 1;

return;

}

float interp(const cv::Mat &img, float x, float y) {

int ix = x;

int iy = y;

float dx = x - ix;

float dy = y - iy;

float ddx = 1.0f - dx;

float ddy = 1.0f - dy;

return ddx * ddy * img.data[iy * img.cols + ix] + ddx * dy * img.data[(iy + 1) * img.cols + ix] +

dx * ddy * img.data[iy * img.cols + ix + 1] + dx * dy * img.data[(iy + 1) * img.cols + ix + 1];

}

int main() {

std::string img_path = "Mynt/00001772.jpg";

Eigen::Matrix3d K_c;

K_c << 1037.536376953125, 0, 600.182861328125, 0, 1038.532958984375, 358.40493774414062500, 0, 0, 1;

double k1 = 0.03784750;

double k2 = -0.051872;

double p1 = -0.000938;

double p2 = 0.000157;

Eigen::Matrix3d R_gc;

R_gc << 0.9962626012012678, -0.08634899803974432, -0.00216332734845117, -0.005878375212093168, -0.04279258802138065,

-0.9990666840182884, 0.08617583276389137, 0.9953454902434454, -0.0431402468639808;

Eigen::Vector3d t_gc;

t_gc << 0.283289952815021, 1.136073800639606, -2.129837994618606;

// 鸟瞰图设置为长宽20m,分辨率0.2m

double W_m = 20;

double H_m = 20;

double dx = 0.02;

double dy = 0.02;

Eigen::Matrix3d K_g;

K_g << 1.0 / dx, 0, W_m / (2 * dx), 0, -1.0 / dy, H_m / (2 * dy), 0, 0, 1;

Eigen::Matrix3d J_gc;

J_gc.block<3, 2>(0, 0) = R_gc.block<3, 2>(0, 0);

J_gc.block<3, 1>(0, 2) = t_gc;

//一定要带上图像类型,比如IMREAD_GRAYSCALE,默认的是IMREAD_COLOR

cv::Mat img = cv::imread(img_path, cv::IMREAD_GRAYSCALE);

//一、未去畸变鸟瞰图

Eigen::Matrix3d H = K_c * J_gc * K_g.inverse();

cv::Mat trans_mat;

cv::eigen2cv(H, trans_mat);

cv::Mat bev_img(H_m / dy, W_m / dx, CV_8UC1);

// warpPerspective是透视变换,所有trans_mat需要取逆

cv::warpPerspective(img, bev_img, trans_mat.inv(), bev_img.size());

//二、去畸变鸟瞰图

Eigen::Matrix3d H_gc = J_gc * K_g.inverse();

cv::Mat bev_img2(H_m / dy, W_m / dx, CV_8UC1);

for (int row = 0; row < bev_img2.rows; row++) {

for (int col = 0; col < bev_img2.cols; col++) {

Eigen::Vector3d p_g(col, row, 1);

Eigen::Vector3d P_c = H_gc * p_g;

// 去掉在相机后面的点

if (P_c[2] < 0) continue;

P_c /= P_c[2];

Eigen::Vector3d P_c_dis;

DistortEigenPoints(P_c, P_c_dis, k1, k2, p1, p2);

Eigen::Vector3d p_c = K_c * P_c_dis;

// 在原图范围内的才能映射

if (p_c[0] >= 0 && p_c[1] >= 0 && p_c[0] < img.cols - 1 && p_c[1] < img.rows - 1) {

bev_img2.at<uchar>(row, col) = interp(img, p_c[0], p_c[1]);

}

}

}

cv::imshow("img", img);

cv::imshow("bev_img", bev_img);

cv::imshow("bev_img2", bev_img2);

cv::waitKey(0);

return 0;

}效果展示

四、工程化

从二、三部分可以看出,如果在大量处理图片的时候可以先将图像映射关系求解,再对每一次输入的图片进行remap即可,在工程上能节约大量不必要的计算(思路来源于相机畸变矫正时的UndistortImage()由initUndistortRectifyMap()和remap()的简单组合)

#include <Eigen/Core>

#include <Eigen/Dense>

#include <fstream>

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/core/eigen.hpp>

#include <opencv2/opencv.hpp>

#include <vector>

void DistortInNormalizedPlane(const Eigen::MatrixXd& P_c_eigen, Eigen::MatrixXd& P_c_dis_eigen,

const Eigen::Matrix<double, 6, 1> distort_par, const double focal) {

for (int i = 0; i < P_c_eigen.cols(); ++i) {

double r = sqrt(P_c_eigen(0, i) * P_c_eigen(0, i) + P_c_eigen(1, i) * P_c_eigen(1, i));

if (r == 0.0) {

P_c_dis_eigen(0, i) = P_c_dis_eigen(1, i) = P_c_dis_eigen(2, i) = 0;

continue;

}

double theta = atanf(r);

theta *= 180 / 3.141592653;

double theta2 = theta * theta;

double theta3 = theta2 * theta;

double theta4 = theta3 * theta;

double theta5 = theta4 * theta;

double rd = distort_par[5] * theta5 + distort_par[4] * theta4 + distort_par[3] * theta3 +

distort_par[2] * theta2 + distort_par[1] * theta + distort_par[0];

double tmp = rd / (r * focal);

P_c_dis_eigen(0, i) = P_c_eigen(0, i) * tmp;

P_c_dis_eigen(1, i) = P_c_eigen(1, i) * tmp;

P_c_dis_eigen(2, i) = 1;

}

return;

}

int main() {

double alpha = 1.270;

double beta = -0.019;

double sigma = 0.005;

double install_x = 0.0;

double install_y = 2220;

double install_z = 846;

int plr_image_height = 830;

int plr_image_width = 600;

int plr_image_size = plr_image_height * plr_image_width;

double focal = 0.7;

Eigen::Matrix<double, 6, 1> distort_par;

distort_par << 2.602669463289515e-4, 0.016492905052440, 8.654874453589382e-6, 3.522621922928950e-7,

2.190540817132008e-9, -3.361245302349930e-11;

float fSinAlpha = sinf(alpha);

float fCosAlpha = cosf(alpha);

float fSinBeta = sinf(-beta);

float fCosBeta = cosf(-beta);

float fSinSigma = sinf(sigma);

float fCosSigma = cosf(sigma);

Eigen::Matrix3d R_car_mid_camera;

R_car_mid_camera << fCosBeta * fCosSigma, fSinAlpha * fSinBeta * fCosSigma - fCosAlpha * fSinSigma,

fCosAlpha * fSinBeta * fCosSigma + fSinAlpha * fSinSigma, fCosBeta * fSinSigma,

fSinAlpha * fSinBeta * fSinSigma + fCosAlpha * fCosSigma,

fCosAlpha * fSinBeta * fSinSigma - fSinAlpha * fCosSigma, -fSinBeta, fSinAlpha * fCosBeta, fCosAlpha * fCosBeta;

Eigen::Matrix3d R_camera_car_mid = R_car_mid_camera.transpose();

Eigen::Vector3d t_car_mid_camera = Eigen::Vector3d(install_x / 1000., -install_y / 1000., -install_z / 1000.);

Eigen::Vector3d t_camera_car_mid = -R_car_mid_camera.transpose() * t_car_mid_camera;

std::cout << "R_camera_car_mid " << R_camera_car_mid << std::endl;

std::cout << "t_camera_car_mid " << t_camera_car_mid << std::endl;

Eigen::Matrix3d K_c, J_gc, K_g;

K_c << 120.69, 0, 320, 0, 120.69, 240, 0, 0, 1;

K_g << 50, 0, plr_image_width / 2, 0, 50, plr_image_height / 2, 0, 0, 1;

J_gc.block<3, 2>(0, 0) = R_camera_car_mid.block<3, 2>(0, 0);

J_gc.block<3, 1>(0, 2) = t_camera_car_mid;

Eigen::Matrix3d H = J_gc * K_g.inverse();

// //******************eigen remap ******************************

Eigen::MatrixXd p_g_eigen(3, plr_image_size);

for (int i = 0; i < plr_image_height; i++) {

for (int j = 0; j < plr_image_width; j++) {

p_g_eigen(0, plr_image_width * i + j) = j;

p_g_eigen(1, plr_image_width * i + j) = i;

p_g_eigen(2, plr_image_width * i + j) = 1;

}

}

Eigen::MatrixXd P_c_eigen(3, plr_image_size);

P_c_eigen = J_gc * K_g.inverse() * p_g_eigen;

for (int i = 0; i < plr_image_size; i++) {

P_c_eigen(0, i) = P_c_eigen(0, i) / P_c_eigen(2, i);

P_c_eigen(1, i) = P_c_eigen(1, i) / P_c_eigen(2, i);

P_c_eigen(2, i) = 1;

}

Eigen::MatrixXd P_c_dis_eigen(3, plr_image_size);

DistortInNormalizedPlane(P_c_eigen, P_c_dis_eigen, distort_par, focal);

Eigen::MatrixXd p_c_eigen(3, plr_image_size);

p_c_eigen = K_c * P_c_dis_eigen;

cv::Mat p_C1 = cv::Mat::zeros(1, plr_image_size, CV_64FC2);

std::vector<cv::Mat> channels(2);

cv::split(p_C1, channels);

Eigen::MatrixXd p_c_eigen1 = p_c_eigen.row(0);

Eigen::MatrixXd p_c_eigen2 = p_c_eigen.row(1);

cv::eigen2cv(p_c_eigen1, channels[0]);

cv::eigen2cv(p_c_eigen2, channels[1]);

cv::merge(channels, p_C1);

p_C1.reshape(plr_image_height, plr_image_width);

cv::Mat p_GC_table = p_C1.reshape(0, plr_image_height);

std::vector<cv::Mat> p_GC_table_channels(2);

cv::split(p_GC_table, p_GC_table_channels);

// 求得图像映射关系(无法剔除z<0部分 但可以按照固定行列取值)

cv::Mat p_GC_table_32F;

p_GC_table.convertTo(p_GC_table_32F, CV_32FC2);

for (int img_num = 0; img_num < 2000; img_num += 5) {

std::string img_path =

"/home/vedio_img/" + std::to_string(img_num) + ".png";

cv::Mat image = cv::imread(img_path, 0);

cv::resize(image, image, cv::Size(image.cols / 2, image.rows / 2));

// 计算反投影矩阵投影

cv::Mat bev_img;

cv::remap(image, bev_img, p_GC_table_32F, cv::Mat(), cv::INTER_LINEAR);

cv::imshow("img", image);

cv::imshow("img_GC", bev_img);

cv::waitKey(0);

}

return 0;

}ps.

// 1. 读取图像

cv::Mat img = cv::imread(img_path, cv::IMREAD_GRAYSCALE);使用时一定要记得加对应图像类型 ,比如灰度的为cv::IMREAD_GRAYSCALE,否则读取的会为三通道的IMREAD_COLOR,导致整个转换出问题,查了个半死。。。

参考文献:

自动驾驶AVM环视算法--3D碗型投影模式的算法原理和代码实现_3d avm算法原理-CSDN博客

Fisheye Calibration Basics- MATLAB & Simulink- MathWorks 中国

https://blog.51cto.com/u_16099267/10196291

逆透视变换详解 及 代码实现(二)_uvlimitsp-CSDN博客

Online Camera Pose Optimization for the Surround-view System

https://zhuanlan.zhihu.com/p/636990989

https://www.cnblogs.com/riddick/p/6711263.html

1464

1464

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?