Q:

“Batch, Batch, Batch:” What Does It Really Mean?

A:

Reference material follows.

For this question, look at the batch-related parts of the code.

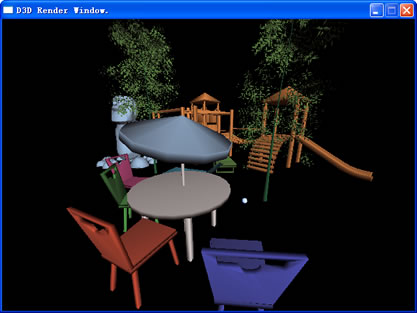

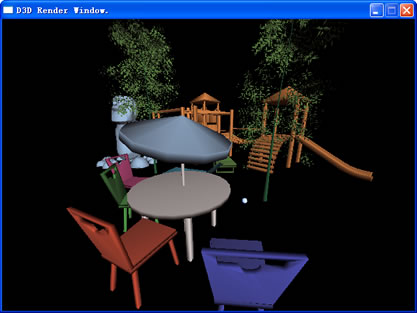

[Direct3D] Implementing a renderer with batch rendering and hardware T&L, and D3DPipeline

D3DPipeline:

• Every DrawIndexedPrimitive() is a batch

– Submits n number of triangles to GPU

– Same render state applies to all tris in batch

– SetState calls prior to Draw are part of batch

You get X batches per frame; X mainly depends on CPU spec.

How Many Triangles Per Batch?

• Up to you!

– Anything between 1 to 10,000+ tris possible

• If small number, either

– Triangles are large or extremely expensive

– Only GPU vertex engines are idle

• Or

– Game is CPU bound, but don’t care because you budgeted your CPU ahead of time, right?

– GPU idle (available for upping visual quality)
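
To make the "every draw call is a batch" idea from the bullets above concrete, here is a minimal sketch of a per-frame counter. `FrameStats` and its members are hypothetical names for illustration, not part of Direct3D or of the code quoted in this post.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical per-frame counter: each recorded draw call counts as one batch.
struct FrameStats {
    std::size_t batches = 0;
    std::size_t triangles = 0;

    // Record one draw call that submits `triCount` triangles.
    void RecordDraw(std::size_t triCount) {
        assert(triCount > 0 && "a batch submits at least one triangle");
        ++batches;
        triangles += triCount;
    }

    // Average triangles per batch; 0 when nothing was drawn this frame.
    double TrianglesPerBatch() const {
        return batches == 0
            ? 0.0
            : static_cast<double>(triangles) / static_cast<double>(batches);
    }
};
```

Calling `RecordDraw` next to every draw call gives a rough picture of whether a frame is made of many small batches or a few large ones.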

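I weighed whether D3DRender should expose vertex buffer operations to the pipeline, and decided for now to render with IDirect3DDevice9::DrawPrimitiveUP. It is easier to write, its cost is one vertex copy, and the pipeline does not have to manage buffers itself.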

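D3DPipeline is not complete. It still has to handle the list of static scene elements passed in by the scene manager. These elements must be sorted into sub-containers ahead of time so that render state changes and vertex buffer writes are kept to a minimum. The scene manager maintains the sub-containers and calls Render::DrawPrimitive on them at the appropriate time.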
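
The sub-container idea can be sketched as grouping elements by material before drawing. This is only an illustration under assumptions: `SceneElement`, `GroupByMaterial`, and `CountMaterialChanges` are hypothetical names, and the real scene manager described above may sort by other render state as well.

```cpp
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical static scene element: a material index and a triangle count.
struct SceneElement {
    int materialId;
    std::size_t triangleCount;
};

// Group elements by material so that each bucket needs one material setup.
std::map<int, std::vector<SceneElement>> GroupByMaterial(
    const std::vector<SceneElement>& elements)
{
    std::map<int, std::vector<SceneElement>> buckets;
    for (const SceneElement& e : elements)
        buckets[e.materialId].push_back(e);
    return buckets;
}

// Count how many material setups are needed when drawing in the given order:
// one for the first element, plus one each time the material differs from
// the previous element's.
std::size_t CountMaterialChanges(const std::vector<SceneElement>& elements)
{
    std::size_t changes = 0;
    for (std::size_t i = 0; i < elements.size(); ++i)
        if (i == 0 || elements[i].materialId != elements[i - 1].materialId)
            ++changes;
    return changes;
}
```

Drawing bucket by bucket needs one material setup per bucket, whereas drawing in an interleaved order can need one per element.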

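Most los-lib structures are memory-compatible with their D3DX counterparts, which keeps the interface independent without costing performance. For example, los::blaze::Material is compatible with D3DMATERIAL9. Light definitions differ, mainly because los-lib uses a separate type for each kind of light while D3DLIGHT9 packs them all into one structure. Light objects usually do not change between render states, so converting between the two representations does not hurt efficiency. The conversion is normally done only once per frame.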
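
A memory-compatibility claim like this can be guarded at compile time. The sketch below is not los-lib code: `demo::Material`, `MaterialLike9`, and `ColorValueLike` are hypothetical stand-ins. The stand-in mirrors the documented field order of D3DMATERIAL9 (Diffuse, Ambient, Specular, Emissive, Power) so the example compiles without the DirectX headers.

```cpp
#include <cstddef>
#include <cstring>
#include <type_traits>

// Stand-ins mirroring the field order of D3DCOLORVALUE and D3DMATERIAL9.
struct ColorValueLike { float r, g, b, a; };
struct MaterialLike9 {
    ColorValueLike Diffuse, Ambient, Specular, Emissive;
    float Power;
};

// Hypothetical engine-side material with the same layout.
namespace demo {
struct Color { float r, g, b, a; };
struct Material {
    Color diffuse, ambient, specular, emissive;
    float power;
};
}

// Compile-time checks: if either struct changes shape, the build fails
// instead of a pointer cast silently reading the wrong fields.
static_assert(std::is_standard_layout<demo::Material>::value,
              "Material must be standard layout");
static_assert(sizeof(demo::Material) == sizeof(MaterialLike9),
              "size mismatch");
static_assert(offsetof(demo::Material, specular) == offsetof(MaterialLike9, Specular),
              "specular offset mismatch");
static_assert(offsetof(demo::Material, power) == offsetof(MaterialLike9, Power),
              "power offset mismatch");

// Byte-wise copy into the API-side struct; relies on the checks above.
inline MaterialLike9 ToApiMaterial(const demo::Material& m)
{
    MaterialLike9 out;
    std::memcpy(&out, &m, sizeof(out));
    return out;
}
```

The post's code casts the pointer directly to avoid the copy. The `memcpy` variant shown here is an alternative assumption for cases where the extra copy is acceptable.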

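Another easy mistake concerns indexing into a geometry's normal list. A normal is stored for each entry of the index list, not looked up through the vertex list, so trying to find a normal by vertex index gives unexpected results.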
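
The indexing rule can be sketched as follows, assuming a simplified geometry type. `Vec3`, `VertexPN`, `IndexedGeometry`, and `Flatten` are hypothetical names, not the los-lib types.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };
struct VertexPN { Vec3 position; Vec3 normal; };

// Hypothetical geometry: positions are shared through the index list,
// while normals hold one entry per index-list slot.
struct IndexedGeometry {
    std::vector<Vec3> positions;
    std::vector<std::size_t> indices;
    std::vector<Vec3> normals; // normals.size() == indices.size()
};

// Expand the geometry into a flat vertex list suitable for a triangle list.
std::vector<VertexPN> Flatten(const IndexedGeometry& g)
{
    assert(g.normals.size() == g.indices.size());
    std::vector<VertexPN> out;
    out.reserve(g.indices.size());
    for (std::size_t i = 0; i < g.indices.size(); ++i) {
        // Position goes through the index list; the normal uses slot i directly.
        out.push_back({ g.positions[g.indices[i]], g.normals[i] });
    }
    return out;
}
```

Writing `g.normals[g.indices[i]]` instead would compile but pick the wrong normal whenever a shared vertex has different normals in different triangles.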

D3DRender:

```cpp
// Draws a non-indexed triangle list from system memory, split into chunks
// that respect the device's MaxPrimitiveCount limit.
virtual int DrawPrimitive(const std::vector<VertexXYZ_N>& listVertex
    , const Matrix& matWorld, const Matrix& matView, const Matrix& matProj
    , const Material& material)
{
    // Render state shared by every chunk drawn below.
    ptrDevice->SetTransform(D3DTS_WORLD, (CONST D3DMATRIX*)&matWorld);
    ptrDevice->SetTransform(D3DTS_VIEW, (CONST D3DMATRIX*)&matView);
    ptrDevice->SetTransform(D3DTS_PROJECTION, (CONST D3DMATRIX*)&matProj);
    ptrDevice->SetFVF(D3DFVF_XYZ | D3DFVF_NORMAL);
    ptrDevice->SetRenderState(D3DRS_FILLMODE, D3DFILL_SOLID);
    ptrDevice->SetMaterial((CONST D3DMATERIAL9*)&material);

    uint nPrim = (uint)listVertex.size() / 3;
    if (nPrim == 0)
        return 0; // nothing to draw; also avoids front() on an empty vector

    uint nBatch = nPrim / _D3DCaps.MaxPrimitiveCount;
    // Offsets are counted in vertices, not bytes: pVertex is a VertexXYZ_N*,
    // so pointer arithmetic already scales by sizeof(VertexXYZ_N).
    uint nVertexBatch = _D3DCaps.MaxPrimitiveCount * 3;
    const VertexXYZ_N* pVertex = &listVertex.front();

    // Full-size chunks.
    for (uint idx = 0; idx < nBatch; ++idx)
        ptrDevice->DrawPrimitiveUP(D3DPT_TRIANGLELIST
            , _D3DCaps.MaxPrimitiveCount
            , pVertex + idx * nVertexBatch
            , (uint)sizeof(VertexXYZ_N));

    // Remaining triangles; skip the call when nothing is left.
    uint nRemain = nPrim % _D3DCaps.MaxPrimitiveCount;
    if (nRemain > 0)
        ptrDevice->DrawPrimitiveUP(D3DPT_TRIANGLELIST, nRemain
            , pVertex + nBatch * nVertexBatch
            , (uint)sizeof(VertexXYZ_N));

    return 0;
}
```
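
The splitting arithmetic in DrawPrimitive can be checked without a device. This is a minimal sketch assuming offsets are expressed in vertices (the unit pointer arithmetic on a vertex pointer uses). `DrawChunk` and `SplitTriangleList` are hypothetical helpers, not part of D3DRender.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One draw call's worth of work: where it starts and how many triangles it has.
struct DrawChunk {
    std::size_t firstVertex;    // offset into the vertex array, in vertices
    std::size_t primitiveCount; // triangles in this chunk
};

// Split a triangle list of `vertexCount` vertices into chunks of at most
// `maxPrimitiveCount` triangles each. Leftover vertices that do not form a
// full triangle are ignored, matching the integer division in DrawPrimitive.
std::vector<DrawChunk> SplitTriangleList(std::size_t vertexCount,
                                         std::size_t maxPrimitiveCount)
{
    assert(maxPrimitiveCount > 0);
    std::vector<DrawChunk> chunks;
    std::size_t remaining = vertexCount / 3;
    std::size_t firstVertex = 0;
    while (remaining > 0) {
        const std::size_t count =
            remaining < maxPrimitiveCount ? remaining : maxPrimitiveCount;
        chunks.push_back({ firstVertex, count });
        firstVertex += count * 3; // three vertices per triangle
        remaining -= count;
    }
    return chunks;
}
```

Each returned chunk corresponds to one DrawPrimitiveUP call. An empty input yields no chunks, so no zero-primitive draw is issued.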

```cpp
// Converts the separate los-lib light types into D3DLIGHT9 records and
// enables them in consecutive light slots.
virtual int SetLights(const Lights& lights)
{
    ptrDevice->SetRenderState(D3DRS_AMBIENT
        , (lights.globalLight.GetColor()
            * lights.globalLight.GetIntensity()).ToColor());

    uint idxLight = 0; // next free light slot, shared by all three loops

    // Point lights
    for (size_t idx = 0; idx < lights.listPointLight.size(); ++idx)
    {
        const PointLight& refLight = lights.listPointLight[idx];
        D3DLIGHT9 lght;
        ::memset(&lght, 0, sizeof(D3DLIGHT9));
        lght.Type = D3DLIGHT_POINT;
        lght.Range = refLight.GetDistance();
        lght.Attenuation1 = 1.0f;
        Vector3 vPos = refLight.GetPosition();
        lght.Position.x = vPos.x;
        lght.Position.y = vPos.y;
        lght.Position.z = vPos.z;
        // Note: taking the address of a temporary is a compiler extension
        // (MSVC accepts it with warning C4238).
        lght.Diffuse = lght.Specular
            = *(D3DCOLORVALUE*)&(refLight.GetColor() * refLight.GetIntensity());
        ptrDevice->SetLight(idxLight, &lght);
        ptrDevice->LightEnable(idxLight++, true);
    }

    // Parallel (directional) lights
    for (size_t idx = 0; idx < lights.listParallelLight.size(); ++idx)
    {
        const ParallelLight& refLight = lights.listParallelLight[idx];
        D3DLIGHT9 lght;
        ::memset(&lght, 0, sizeof(D3DLIGHT9));
        lght.Type = D3DLIGHT_DIRECTIONAL;
        Vector3 vDir = refLight.GetDirection();
        lght.Direction.x = vDir.x;
        lght.Direction.y = vDir.y;
        lght.Direction.z = vDir.z;
        lght.Diffuse = lght.Specular
            = *(D3DCOLORVALUE*)&(refLight.GetColor() * refLight.GetIntensity());
        ptrDevice->SetLight(idxLight, &lght);
        ptrDevice->LightEnable(idxLight++, true);
    }

    // Spot lights: Theta is the inner cone angle, Phi the outer cone angle
    for (size_t idx = 0; idx < lights.listSpotLight.size(); ++idx)
    {
        const SpotLight& refLight = lights.listSpotLight[idx];
        D3DLIGHT9 lght;
        ::memset(&lght, 0, sizeof(D3DLIGHT9));
        lght.Type = D3DLIGHT_SPOT;
        lght.Range = refLight.GetDistance();
        lght.Attenuation1 = 1.0f;
        lght.Falloff = 1.0f;
        lght.Theta = refLight.GetHotspot().ToRadian();
        lght.Phi = refLight.GetFalloff().ToRadian();
        Vector3 vDir = refLight.GetDirection();
        lght.Direction.x = vDir.x;
        lght.Direction.y = vDir.y;
        lght.Direction.z = vDir.z;
        Vector3 vPos = refLight.GetPosition();
        lght.Position.x = vPos.x;
        lght.Position.y = vPos.y;
        lght.Position.z = vPos.z;
        lght.Diffuse = lght.Specular
            = *(D3DCOLORVALUE*)&(refLight.GetColor() * refLight.GetIntensity());
        ptrDevice->SetLight(idxLight, &lght);
        ptrDevice->LightEnable(idxLight++, true);
    }

    return 0;
}
```
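
The conversion pattern in SetLights, several typed light lists flattened into one sequence of unified records with consecutive slots, can be shown without Direct3D. Everything in this sketch is a hypothetical stand-in: `UnifiedLight` only plays the role that D3DLIGHT9 plays above, and the `...Demo` types are not the los-lib classes.

```cpp
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };
enum class LightKind { Point, Directional, Spot };

// Stand-in for a unified API-side light record.
struct UnifiedLight {
    LightKind kind;
    Vec3 position;
    Vec3 direction;
    float range;
};

// Hypothetical engine-side light types, one struct per kind.
struct PointLightDemo { Vec3 position; float distance; };
struct ParallelLightDemo { Vec3 direction; };

struct LightsDemo {
    std::vector<PointLightDemo> points;
    std::vector<ParallelLightDemo> parallels;
};

// Flatten the typed lists; an element's index in the result is its light slot.
std::vector<UnifiedLight> ConvertLights(const LightsDemo& lights)
{
    std::vector<UnifiedLight> out;
    out.reserve(lights.points.size() + lights.parallels.size());
    for (const PointLightDemo& p : lights.points)
        out.push_back({ LightKind::Point, p.position,
                        Vec3{0.0f, 0.0f, 0.0f}, p.distance });
    for (const ParallelLightDemo& d : lights.parallels)
        out.push_back({ LightKind::Directional, Vec3{0.0f, 0.0f, 0.0f},
                        d.direction, 0.0f });
    return out;
}
```

Since the result depends only on the light lists, it can be computed once per frame and reused for every draw call in that frame.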

D3DPipeline:

```cpp
virtual int ProcessingObject(const Object3D& object)
{
    ++_DebugInfo.dynamic_object_counter;

    const Model& refModel = object.GetModel();
    const Vector3& pos = object.GetPosition();

    // Compose the object's world matrix.
    Matrix mat = object.GetTransform()
        * object.GetOrientation().ObjectToInertial() * object.GetAxis()
        * Matrix().BuildTranslation(pos.x, pos.y, pos.z);

    for (size_t gidx = 0; gidx < refModel.listGeometry.size(); ++gidx)
    {
        const Geometry& refGeom = refModel.listGeometry[gidx];
        const Material& refMat = refModel.listMaterial[refGeom.indexMaterial];

        // Triangle triangle;
        // triangle.bitmap = (DeviceBitmap*)&refModel.listDeviceBitmap[refGeom.indexDeviceBitmap];

        // Expand the indexed geometry into a flat triangle list.
        std::vector<VertexXYZ_N> listVertex;
        listVertex.reserve(refGeom.listIndex.size());

        for (size_t iidx = 0; iidx < refGeom.listIndex.size(); iidx += 3)
        {
            // Positions are looked up through the index list...
            const Vector3& vertex0 = refGeom.listVertex[refGeom.listIndex[iidx]];
            const Vector3& vertex1 = refGeom.listVertex[refGeom.listIndex[iidx + 1]];
            const Vector3& vertex2 = refGeom.listVertex[refGeom.listIndex[iidx + 2]];

            // ...while normals are stored per index entry and read directly.
            Vector3 normal0 = refGeom.listNormal[iidx];
            Vector3 normal1 = refGeom.listNormal[iidx + 1];
            Vector3 normal2 = refGeom.listNormal[iidx + 2];

            listVertex.push_back(VertexXYZ_N());
            VertexXYZ_N& refV0 = listVertex.back();
            refV0.x = vertex0.x;
            refV0.y = vertex0.y;
            refV0.z = vertex0.z;
            refV0.normal_x = normal0.x;
            refV0.normal_y = normal0.y;
            refV0.normal_z = normal0.z;

            listVertex.push_back(VertexXYZ_N());
            VertexXYZ_N& refV1 = listVertex.back();
            refV1.x = vertex1.x;
            refV1.y = vertex1.y;
            refV1.z = vertex1.z;
            refV1.normal_x = normal1.x;
            refV1.normal_y = normal1.y;
            refV1.normal_z = normal1.z;

            listVertex.push_back(VertexXYZ_N());
            VertexXYZ_N& refV2 = listVertex.back();
            refV2.x = vertex2.x;
            refV2.y = vertex2.y;
            refV2.z = vertex2.z;
            refV2.normal_x = normal2.x;
            refV2.normal_y = normal2.y;
            refV2.normal_z = normal2.z;

            ++_DebugInfo.polygon_counter;
        }

        // One DrawPrimitive call per geometry, with that geometry's material.
        _PtrRender->DrawPrimitive(listVertex, mat, _ViewMatrix, _PerspectiveMatrix, refMat);
    }

    return 0;
}

};
```
