LLMs之Benchmark之TableBench:《TableBench: A Comprehensive and Complex Benchmark for Table Question Answering一个全面且复杂的表格问答基准测试》翻译与解读

导读:TableBench为评估和改进LLM在TableQA任务中的能力提供了宝贵的工具,为推动AI在现实世界表格数据分析中的发展做出了重大贡献。这个研究为表格问答提供了一个新的标准,并为未来的研究和应用指明了方向。

背景痛点

>> 现有大模型的局限性:虽然大语言模型(LLMs)在表格数据解释和处理方面取得了显著的进展,但在工业应用中仍面临重大挑战,特别是涉及复杂推理时。

>> 学术基准与实际应用的差距:现有的学术基准测试(如TableQA)未能充分反映真实世界的复杂性和需求。

具体的解决方案

>> TableBench:提出一个全面复杂的基准测试,涵盖18个子领域和四大类表格问答能力,总计886个样本,包括事实核查(基于匹配的事实核查和多跳事实核查)、数值推理(算术计算、比较、排序、计数、聚合、时间计算、多跳数值推理和领域特定计算)、数据分析(描述性分析、异常检测、统计分析、相关性分析、因果分析、趋势预测、影响分析)和可视化(图表生成)。

>> TableInstruct:一个庞大的TableQA指令语料库,包含约20,000个样本。涵盖三种不同的推理方法:文本思维链 (TCoT使用文本推理步骤)、符号思维链 (SCoT使用Python命令进行符号推理步骤)、程序思维链 (PoT生成可执行代码进行推理)。

>> TABLELLM:基于TableInstruct训练的一个强大的基准模型,展示了与GPT-3.5相当的性能。

核心思路步骤

>> 对现实世界挑战的系统分析:作者仔细分析了现实世界中的表格数据应用,根据解决问题所需的推理步骤数量来定义任务的复杂性。

>> 数据收集和任务定义:从现有数据集中收集表格,确保每个表至少有8行和5列,着重于包含大量数值信息的表格。结合人工和自动化方法构建基准测试集。

>> 问题和答案标注:通过自我启发机制生成问题,并采用三种不同的推理方法(文本推理、符号推理、代码推理)进行答案标注。利用GPT-4代理在不同类别中生成复杂问题。

>> 质量控制:采用双盲和一致性检查机制,确保数据的准确性和复杂性。

优势

>> 复杂性和全面性:TableBench的复杂性显著高于现有数据集,特别是在数据分析和可视化问题上。

>> 实际应用:通过系统评估30多个模型,强调了现有模型在应对真实世界需求时的不足,推动了模型在复杂推理场景下的能力提升。

目录

《TableBench: A Comprehensive and Complex Benchmark for Table Question Answering》翻译与解读

《TableBench: A Comprehensive and Complex Benchmark for Table Question Answering》翻译与解读

| 地址 | |

| 时间 | 2024年8月17日 |

| 作者 | Xianjie Wu, Jian Yang, Linzheng Chai, Ge Zhang, Jiaheng Liu, Xinrun Du, Di Liang, Daixin Shu, Xianfu Cheng, Tianzhen Sun, Guanglin Niu, Tongliang Li, Zhoujun Li 北京航空航天大学、滑铁卢大学、复旦大学等 |

Abstract

| Recent advancements in Large Language Models (LLMs) have markedly enhanced the interpretation and processing of tabular data, introducing previously unimaginable capabilities. Despite these achievements, LLMs still encounter significant challenges when applied in industrial scenarios, particularly due to the increased complexity of reasoning required with real-world tabular data, underscoring a notable disparity between academic benchmarks and practical applications. To address this discrepancy, we conduct a detailed investigation into the application of tabular data in industrial scenarios and propose a comprehensive and complex benchmark TableBench, including 18 fields within four major categories of table question answering (TableQA) capabilities. Furthermore, we introduce TableLLM, trained on our meticulously constructed training set TableInstruct, achieving comparable performance with GPT-3.5. Massive experiments conducted on TableBench indicate that both open-source and proprietary LLMs still have significant room for improvement to meet real-world demands, where the most advanced model, GPT-4, achieves only a modest score compared to humans. | 最近大型语言模型(LLM)的进步显著增强了对表格数据的解释和处理能力,引入了以前难以想象的功能。尽管取得了这些成就,当LLM应用于工业场景时仍面临重大挑战,特别是在处理现实世界中的表格数据时所需的推理复杂性增加,这突显了学术基准与实际应用之间的一个显著差异。为了弥补这一差距,我们对表格数据在工业场景中的应用进行了详细调查,并提出了一个全面且复杂的基准TableBench,包括四个主要类别下的18个字段的表格问答(TableQA)能力。此外,我们引入了TableLLM,该模型在我们精心构建的训练集TableInstruct上训练,其性能与GPT-3.5相当。在TableBench上进行的大规模实验表明,无论是开源还是专有的LLM,在满足实际需求方面仍有很大的改进空间,其中最先进的模型GPT-4仅取得了与人类相比较为中等的成绩。 |

1 Introduction

| Recent studies have shown the potential of large language models (LLMs) on tabular tasks such as table question answering (TableQA) (Zhu et al., 2021; Zhao et al., 2023; Hegselmann et al., 2023; Li et al., 2023b; Zhang et al., 2024b; Lu et al., 2024) by adopting in-context learning and structure-aware prompts (Singha et al., 2023), suggesting that a well-organized representation of tables im-proves the interpretation of tabular. Tai et al.(2023) notes that eliciting a step-by-step reason-ing process from LLMs enhances their ability to comprehend and respond to tabular data queries. Furthermore, Zha et al. (2023) investigates the use of external interfaces for improved understanding of tabular data. | 最近的研究显示了大型语言模型(LLM)在表格任务如表格问答(TableQA)方面的潜力(Zhu等人,2021;Zhao等人,2023;Hegselmann等人,2023;Li等人,2023b;Zhang等人,2024b;Lu等人,2024),通过采用上下文学习和结构感知提示(Singha等人,2023),表明良好的表格表示可以改善表格的理解。Tai等人(2023)指出,从LLM中引出逐步推理过程可以增强它们理解和回答表格数据查询的能力。此外,Zha等人(2023)研究了使用外部接口来提高对表格数据的理解。 |

| Traditionally, adapting language models for tab-ular data processing entailed modifying their archi-tectures with specialized features such as position embeddings and attention mechanisms to grasp ta-bles’ structural nuances. However, the introduction of LLMs like GPT-4, GPT-3.5 (Brown et al., 2020; OpenAI, 2023), and PaLM2 (Anil et al., 2023) has heralded a new approach focused on the art of craft-ing precise, information-rich prompts that seam-lessly integrate table data, coupled with leveraging external programming languages like SQL, Python, or other languages (Wang et al., 2023; Chai et al., 2024a), which facilitates more sophisticated chain-of-thought (Wei et al., 2022; Chai et al., 2024b)(CoT) reasoning processes across both proprietary and open-source LLM platforms, including Llama. Such advancements have propelled the fine-tuning of models for tabular data-specific tasks, show-cased by initiatives like StructLM (Zhuang et al., 2024), enhancing capabilities in table structure recognition, fact verification, column type annota-tion, and beyond. However, the existing benchmark might not entirely resonate with the practical chal-lenges, especially complex reasoning requirements encountered by professionals routinely navigating tabular data in real-world settings. Therefore, there is a huge need for creating a benchmark to bridge the gap between the industrial scenarios and the academic benchmark. | 传统上,适应表格数据处理的语言模型需要修改它们的体系结构,使用特定的功能,如位置嵌入和注意机制,以掌握表格的结构细微差别。然而,GPT-4、GPT-3.5(Brown等人,2020;OpenAI,2023)和PaLM2(Anil等人,2023)等LLM的引入预示了一种新的方法,专注于构建精确、信息丰富的提示,无缝集成表格数据,并利用外部编程语言如SQL、Python或其他语言(Wang等人,2023;Chai等人,2024a),这促进了在专有和开源LLM平台上更为复杂的思想链(CoT)推理过程,包括Llama。这些进步推动了针对表格数据特定任务的模型微调,如StructLM(Zhuang等人,2024)所展示的那样,提高了表格结构识别、事实验证、列类型注释等方面的能力。然而,现有的基准可能无法完全反映实践中遇到的实际挑战,尤其是在专业人士日常处理表格数据时遇到的复杂推理要求。因此,非常需要创建一个基准来弥合工业场景与学术基准之间的差距。 |

| To better evaluate the capability of LLMs in Ta-ble QA, we introduce TableBench, a comprehen-sive and complex benchmark covering 18 subcate-gories within four major categories of TableQA abilities. First, We systematically analyze real-world challenges related to table applications and define task complexity based on the required num-ber of reasoning steps. Based on the analysis, we introduce a rigorous annotation workflow, integrat-ing manual and automated methods, to construct TableBench. Subsequently, We create a massively TableQA instruction corpora TableInstruct, cover-ing three distinct reasoning methods. Textual chain-of-thought (TCoT) utilizes a textual reasoning ap-proach, employing a series of inferential steps to de-duce the final answer. Symbolic chain-of-thought (SCoT) adopts symbolic reasoning steps, leverag-ing programming commands to iteratively simulate and refine results through a Think then Code pro-cess. Conversely, program-of-thought (PoT) gen-erates executable code, using lines of code as rea-soning steps within a programming environment to derive the final result. Based on open-source mod-els and TableInstruct, we propose TABLELLM as a strong baseline to explore the reasoning abilities of LLMs among tabular data, yielding comparable performance with GPT-3.5. Furthermore, we evalu-ate the performance of over 30 LLMs across these reasoning methods on TableBench, highlighting that both open-source and proprietary LLMs re-quire substantial improvements to meet real-world demands. Notably, even the most advanced model, GPT-4, achieves only a modest score when compared to human performance. | 为了更好地评估LLM在表格问答上的能力,我们引入了TableBench,这是一个涵盖四个主要类别内18个子类别的全面且复杂的基准。首先,我们系统地分析了表格应用相关的实际挑战,并根据所需推理步骤的数量定义了任务复杂度。基于此分析,提出了一套严格的标注流程,将手工标注与自动化标注相结合,构建TableBench。随后,我们创建了一个大规模的表格问答TableQA指令语料库TableInstruct,覆盖三种不同的推理方法。文本思想链(TCoT)使用文本推理方法,通过一系列推断步骤来推导最终答案。符号思想链(SCoT)采用符号推理步骤,利用编程命令通过“先思考后编码”过程迭代地模拟和改进结果。相反,程序思想链(PoT)生成可执行代码,使用代码行作为编程环境中的推理步骤来派生最终结果。基于开源模型和TableInstruct,我们提出了TABLELLM作为探索LLM在表格数据中的推理能力的强大基线,其性能与GPT-3.5相当。此外,我们在TableBench上评估了30多个LLM在这些推理方法上的性能,强调了开源和专有LLM都需要大量改进才能满足现实世界的需求。值得注意的是,即使是最先进的模型GPT-4,与人类的表现相比,也只能得到一个中等的分数。 |

| The contributions are summarized as follows: >> We propose TableBench, a human-annotated comprehensive and complex TableQA bench-mark comprising 886 samples across 18 fields, designed to facilitate fact-checking, numeri-cal reasoning, data analysis, and visualization tasks. >> We introduce TableInstruct, a massive TableQA instruction corpus covering three dis-tinct reasoning methods. TABLELLM, trained on TableInstruct, serves as a robust baseline for TableBench. >> We systematically evaluate the interpretation and processing capabilities of 30+ models on our crafted TableBench and create a leader-board to evaluate them on four main tasks. Notably, extensive experiments suggest that comprehensive and complex TableQA evalua-tion can realistically measure the gap between leading language models and human capabili-ties in real-world scenarios. | 这些贡献总结如下: >>我们提出TableBench,一个人工注释的全面且复杂的TableQA表格问答基准,包含跨18个领域的886个样本,旨在促进事实核查、数值推理、数据分析和可视化任务。 >>我们引入了TableInstruct,一个大规模的表格问答指令语料库,涵盖了三种不同的推理方法。基于TableInstruct训练的TABLELLM为TableBench提供了强大的基线。 >>我们系统地评估了30+个模型在我们构建的TableBench上的解释和处理能力,并创建了一个排行榜来评估它们在四项主要任务上的表现。值得注意的是,广泛的实验表明,全面且复杂的表格问答TableQA评估可以实际地衡量现实场景中领先语言模型与人类能力之间的差距。 |

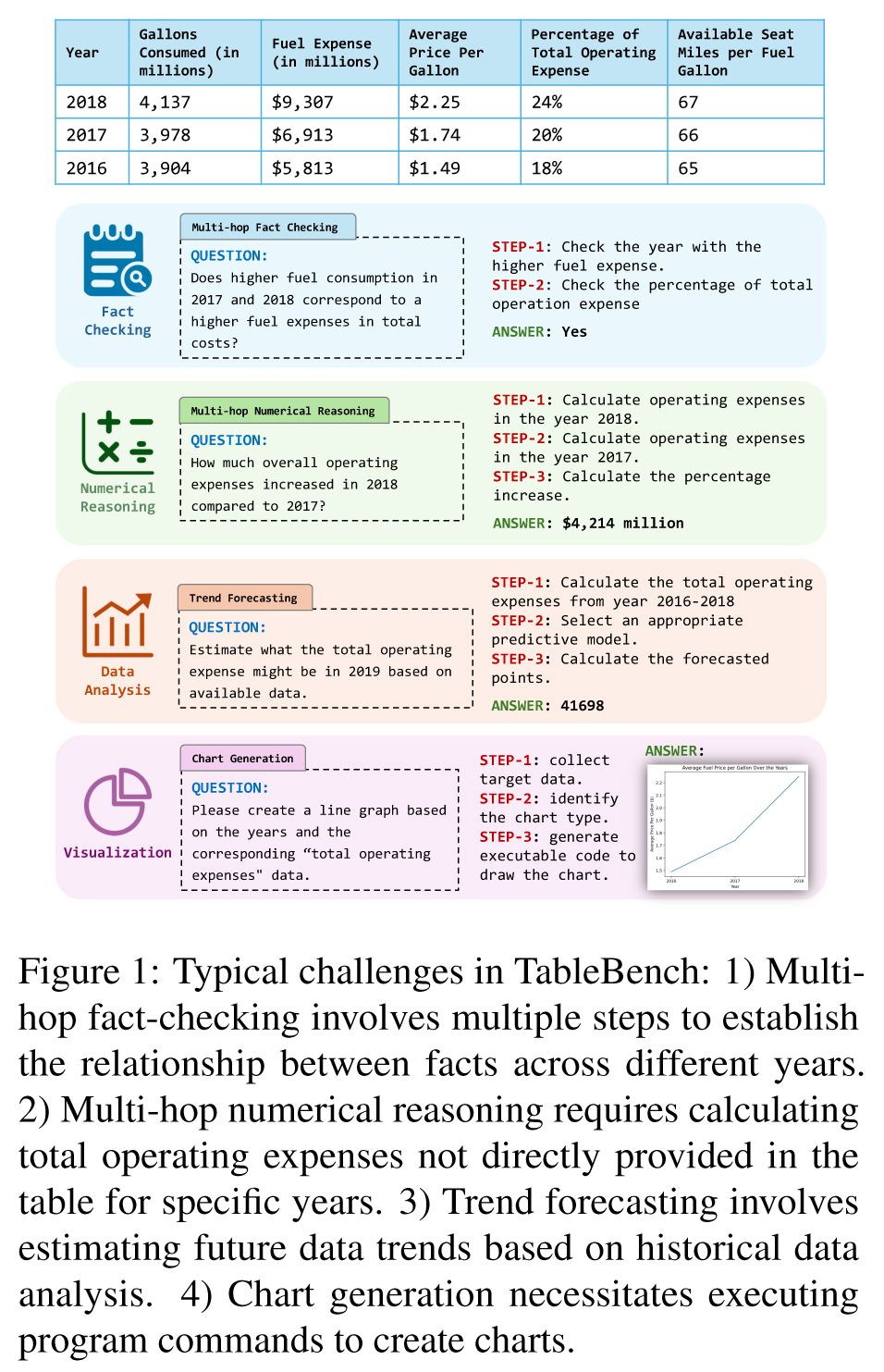

Figure 1: Typical challenges in TableBench: 1) Multi-hop fact-checking involves multiple steps to establish the relationship between facts across different years. 2)Multi-hop numerical reasoning requires calculating total operating expenses not directly provided in the table for specific years. 3) Trend forecasting involves estimating future data trends based on historical data analysis. 4) Chart generation necessitates executing program commands to create charts.图1:TableBench中的典型挑战:1)多跳事实核查涉及多个步骤以建立不同年份间事实的关系。2)多跳数值推理要求计算特定年份的总运营费用,而这些费用并未直接在表格中提供。3)趋势预测涉及基于历史数据分析来估计未来数据趋势。4)图表生成需要执行程序命令以创建图表。

7 Conclusion结论

| In this work, we introduce TableBench, a com-prehensive and complex benchmark designed to evaluate a broad spectrum of tabular skills. It en-compasses 886 question-answer pairs across 18 distinct capabilities, significantly contributing to bridging the gap between academic benchmarks and real-world applications. We evaluate 30+ mod-els with various reasoning methods on TableBench and provide a training set TableInstruct that enables TABLELLM to achieve performance comparable to ChatGPT. Despite these advancements, even the most advanced model, GPT-4, still lags signifi-cantly behind human performance on TableBench, underscoring the challenges of tabular tasks in real-world applications. | 在这项工作中,我们介绍了TableBench,一个全面且复杂的基准,设计用于评估广泛的表格技能。它包含了跨越18种不同能力的886个问答对,对缩小学术基准与实际应用之间的差距做出了重要贡献。我们在TableBench上评估了30多个模型的各种推理方法,并提供了一个使TABLELLM能够达到与ChatGPT相当性能的训练集TableInstruct。尽管有了这些进步,即使是最先进的模型GPT-4,在TableBench上的表现仍然明显落后于人类,这凸显了在实际应用中处理表格任务的挑战性。 |

988

988

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?