Mix-and-Match Tuning for Self-Supervised Semantic Segmentation

AAAI Conference on Artificial Intelligence (AAAI) 2018

http://mmlab.ie.cuhk.edu.hk/projects/M&M/

https://github.com/XiaohangZhan/mix-and-match/

This post briefly outlines the main idea of the paper and does not go into the details.

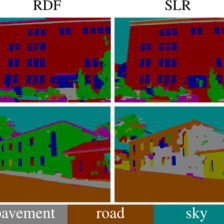

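Semantic segmentation with deep convolutional networks usually relies on large labeled datasets, such as ImageNet and MS COCO, to pre-train the network. The pre-trained model is then fine-tuned on a small amount of labeled target data (typically a few thousand images) to obtain the final segmentation network. To reduce the manual labeling effort, self-supervised semantic segmentation was recently proposed, which aims to pre-train a network without any human-provided labels. The key is to design a proxy task (such as image colorization) from which a discriminative loss can be defined on unlabeled data. Because the proxy task lacks critical supervision signals, it cannot produce a discriminative representation for the target segmentation task, so self-supervised methods still lag well behind supervised pre-training. To close this gap, the authors propose embedding a 'mix-and-match' (M&M) tuning stage in the self-supervision pipeline to improve network performance.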

Mix-and-Match Tuning

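1) First, pre-train the CNN on unlabeled data with a self-supervised proxy task to obtain the initial CNN parameters.

2) With this initial network, sample image patches from the target task data, discard heavily overlapped patches, extract each patch's unique class label from the ground-truth label map, and mix all the patches together.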

A large number of image patches with various spatial sizes are randomly sampled from a batch of images. Heavily overlapped patches are discarded. These patches are represented by using the features extracted from the CNN pre-trained in the stage of Fig. 2(a), and assigned with unique class labels based on the corresponding label map. The patches across all images are mixed to decouple any intra-image dependency so as to reflect the diverse and rich target distribution.
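The patch sampling in step 2 can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `box_iou` and `sample_patches`, the square patch sizes, the IoU threshold `max_iou`, and the `purity` rule (keep a patch only if a single class covers at least that fraction of its pixels) are all assumptions made for illustration.

```python
import numpy as np

def box_iou(a, b):
    """IoU of two boxes given as (y0, x0, y1, x1), end-exclusive."""
    ih = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    iw = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ih * iw
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)

def sample_patches(label_map, n_trials=200, sizes=(8, 16, 24),
                   max_iou=0.3, purity=1.0, rng=None):
    """Randomly sample square patches from one label map (hypothetical helper).

    Returns a list of (box, class_id). A candidate is dropped if no single
    class covers at least `purity` of its pixels, or if it overlaps an
    already kept patch with IoU above `max_iou`.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = label_map.shape
    kept = []
    for _ in range(n_trials):
        s = int(rng.choice(sizes))
        if s > h or s > w:
            continue
        y0 = int(rng.integers(0, h - s + 1))
        x0 = int(rng.integers(0, w - s + 1))
        box = (y0, x0, y0 + s, x0 + s)
        region = label_map[y0:y0 + s, x0:x0 + s]
        classes, counts = np.unique(region, return_counts=True)
        if counts.max() / region.size < purity:
            continue  # no single class label can be assigned
        if any(box_iou(box, other) > max_iou for other, _ in kept):
            continue  # heavily overlapped with a kept patch
        kept.append((box, int(classes[counts.argmax()])))
    return kept
```

To mix patches across images, one would call `sample_patches` on every label map in the batch and concatenate the resulting lists, so that patches are no longer grouped by their source image.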

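3) Use the initial network to analyze the similarity of these patches. A class-wise connected graph is used here: each patch is a node, edges between patches of the same class have large weights, and edges between patches of different classes have small weights. Because the class label of every patch is known, this step is supervised. Through it, the network learns the class information contained in the patches.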

Our next goal is to exploit the patches to generate stable gradients for tuning the network. This is possible since patches are of different classes, and such relation can be employed to form a massive number of triplets.
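How labeled patches give rise to triplets can be sketched as follows. This assumes a standard margin-based triplet loss on squared Euclidean distances, which may differ from the exact formulation in the paper. The function name `triplet_loss` and the `margin` value are hypothetical, and the plain NumPy arrays stand in for CNN features of the patches.

```python
import numpy as np

def triplet_loss(features, labels, margin=1.0):
    """Mean hinge loss over all (anchor, positive, negative) triplets.

    features: (N, D) array of patch features.
    labels:   (N,) array of patch class labels.
    A triplet is valid when anchor and positive share a class (and are
    different patches) while the negative has a different class.
    """
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    # Pairwise squared Euclidean distances, shape (N, N).
    diff = features[:, None, :] - features[None, :, :]
    dist = (diff ** 2).sum(axis=-1)
    same = labels[:, None] == labels[None, :]
    pos = same & ~np.eye(len(labels), dtype=bool)  # (anchor, positive)
    neg = ~same                                    # (anchor, negative)
    valid = pos[:, :, None] & neg[:, None, :]      # (anchor, positive, negative)
    if not valid.any():
        return 0.0
    # Hinge on d(a, p) - d(a, n) + margin for every triplet.
    violation = dist[:, :, None] - dist[:, None, :] + margin
    return float(np.maximum(violation, 0.0)[valid].mean())
```

With N patches, the number of valid triplets grows roughly cubically, which is consistent with the quoted remark that a massive number of triplets can be formed. In practice one would subsample triplets rather than enumerate them all as this sketch does.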

4) Fine-tune on the target data using the labeled segmentation data.

fine-tune the CNN to the semantic segmentation task
