Source code: https://github.com/tensorflow/playground/blob/master/src/nn.ts

Node (class Node in nn.ts)

A node in a neural network. Each node has a state (total input, output, and their respective derivatives) that changes after every forward and backpropagation run.

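| Property | Description | Note |
|---|---|---|
| id: string | Identifier of the node | |
| inputLinks: Link[] | List of input links | |
| bias = 0.1 | Bias term, added to the total input | Set to 0 when the constructor is called with initZero |
| outputs: Link[] | List of output links | |
| totalInput: number | Total input: the bias plus the weighted sum of the source nodes' outputs | Set by updateOutput() |
| output: number | Output: the activation function applied to totalInput | Set by updateOutput() |
| outputDer = 0 | Error derivative with respect to this node's output | |
| inputDer = 0 | Error derivative with respect to this node's total input | |
| accInputDer = 0 | Accumulated error derivative with respect to this node's total input since the last update | Equals dE/db, where b is the node's bias term |
| numAccumulatedDers = 0 | Number of accumulated error derivatives with respect to the total input since the last update | |
| activation: ActivationFunction | Activation function that takes the total input and returns the node's output | |
| constructor(id, activation, initZero?) | Creates a node with the given id and activation function | |
| updateOutput(): number | Recomputes the node's output and returns it | |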
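The derivative fields in the table are only declared in class Node; the code that fills them in is not shown in this post. The following is a minimal sketch, assuming the chain-rule relation between those fields and a plain gradient-descent step on the bias. The names `GradNode`, `backpropNode`, `updateBias` and the learning rate are hypothetical and are not the playground's API. It shows what one backward pass over a single node could look like:

```typescript
interface Activation {
  output: (input: number) => number;
  der: (input: number) => number;
}

// Hypothetical node shape holding only the fields this sketch needs.
interface GradNode {
  bias: number;
  totalInput: number;
  outputDer: number;          // dE/d(output), assumed already set by the layer above
  inputDer: number;           // dE/d(totalInput)
  accInputDer: number;        // sum of inputDer since the last update
  numAccumulatedDers: number; // how many inputDer values were summed
  activation: Activation;
}

// Chain rule: dE/d(totalInput) = dE/d(output) * f'(totalInput).
function backpropNode(node: GradNode): void {
  node.inputDer = node.outputDer * node.activation.der(node.totalInput);
  node.accInputDer += node.inputDer;
  node.numAccumulatedDers++;
}

// totalInput = bias + sum(w * x), so d(totalInput)/d(bias) = 1 and the
// averaged accInputDer is the gradient of E with respect to the bias.
function updateBias(node: GradNode, learningRate: number): void {
  if (node.numAccumulatedDers > 0) {
    node.bias -= learningRate * node.accInputDer / node.numAccumulatedDers;
    node.accInputDer = 0;
    node.numAccumulatedDers = 0;
  }
}

const linear: Activation = { output: x => x, der: () => 1 };
const n: GradNode = {
  bias: 0.5, totalInput: 2, outputDer: 0, inputDer: 0,
  accInputDer: 0, numAccumulatedDers: 0, activation: linear,
};

// Two examples in a mini-batch, each contributing an output derivative.
n.outputDer = 1;
backpropNode(n);
n.outputDer = 3;
backpropNode(n);
updateBias(n, 0.5);
console.log(n.bias); // -0.5, i.e. 0.5 - 0.5 * (1 + 3) / 2
```

Dividing `accInputDer` by `numAccumulatedDers` turns the accumulated sum into a mean gradient over the mini-batch. This is one natural reading of why the node keeps both values.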

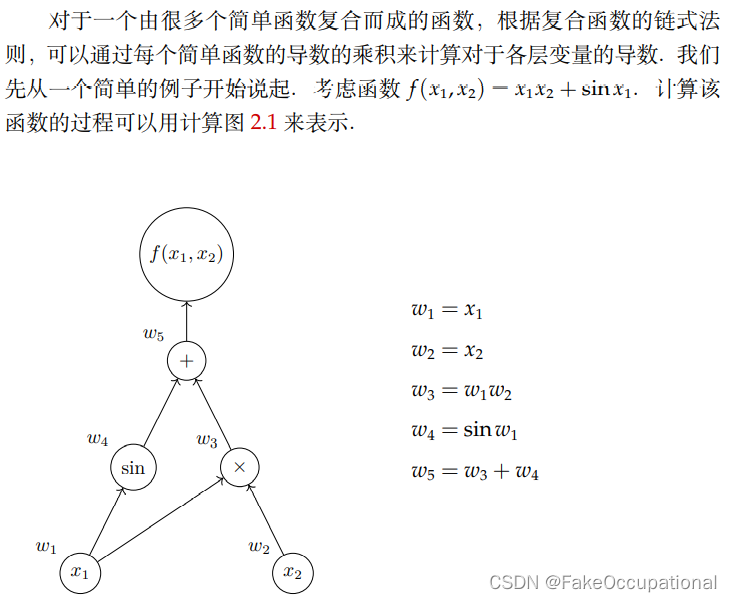

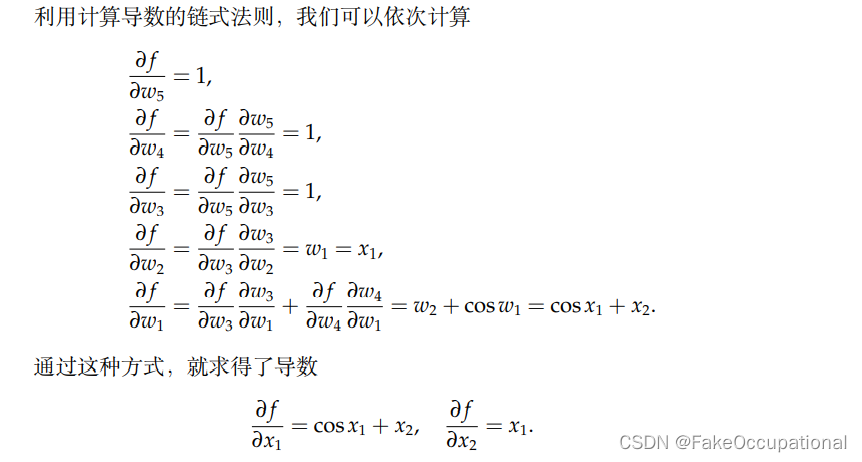

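The differentiation process is explained in 《最优化:建模、算法与理论》 (Optimization: Modeling, Algorithms and Theory) by Liu Haoyang, Hu Jiang, Li Yongfeng, and Wen Zaiwen.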
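In the notation of `updateOutput()`, let z be `totalInput`, b the `bias`, w_j and x_j the `weight` and `source.output` of the j-th input link, a = f(z) the `output`, and E the error. The chain rule then gives the following. This short derivation is added here for reference and is not reproduced from the book:

$$
\begin{aligned}
z &= b + \sum_j w_j x_j, \qquad a = f(z) \\
\frac{\partial E}{\partial z} &= \frac{\partial E}{\partial a}\, f'(z) \\
\frac{\partial E}{\partial b} &= \frac{\partial E}{\partial z}\,\frac{\partial z}{\partial b} = \frac{\partial E}{\partial z} \\
\frac{\partial E}{\partial w_j} &= \frac{\partial E}{\partial z}\,\frac{\partial z}{\partial w_j} = \frac{\partial E}{\partial z}\, x_j
\end{aligned}
$$

The second line shows how `inputDer` follows from `outputDer` and `activation.der`. The third line explains why the accumulated derivative with respect to the total input equals dE/db.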

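Main computation function

```typescript
updateOutput(): number {
  // Stores total input into the node.
  this.totalInput = this.bias;
  // Add the weighted output of every node feeding into this one.
  for (let j = 0; j < this.inputLinks.length; j++) {
    let link = this.inputLinks[j];
    this.totalInput += link.weight * link.source.output;
  }
  // Apply the activation function to get the node's output.
  this.output = this.activation.output(this.totalInput);
  return this.output;
}
```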
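To see `updateOutput()` in action outside the playground, here is a minimal, self-contained sketch. `ForwardNode` and `SketchLink` are hypothetical stand-ins that keep only the fields the method reads. The forward-pass logic is the same as in the method above:

```typescript
interface ActivationFunction {
  output: (input: number) => number;
  der: (input: number) => number;
}

// Hypothetical minimal link: only the fields updateOutput() reads.
interface SketchLink {
  source: { output: number };
  weight: number;
}

class ForwardNode {
  inputLinks: SketchLink[] = [];
  bias = 0.1;
  totalInput = 0;
  output = 0;
  constructor(public activation: ActivationFunction) {}

  // Same logic as updateOutput(): bias plus weighted inputs, then activation.
  updateOutput(): number {
    this.totalInput = this.bias;
    for (const link of this.inputLinks) {
      this.totalInput += link.weight * link.source.output;
    }
    this.output = this.activation.output(this.totalInput);
    return this.output;
  }
}

const relu: ActivationFunction = {
  output: x => Math.max(0, x),
  der: x => (x <= 0 ? 0 : 1),
};

const node = new ForwardNode(relu);
node.bias = 0.5;
node.inputLinks.push({ source: { output: 2 }, weight: 0.25 });
node.inputLinks.push({ source: { output: 1 }, weight: 0.5 });

// 0.5 + 0.25 * 2 + 0.5 * 1 = 1.5, which ReLU leaves unchanged.
console.log(node.updateOutput()); // 1.5
```

Changing `node.bias` to a large negative value makes `totalInput` negative, and ReLU then clamps the output to 0.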

Activation functions (interface ActivationFunction and class Activations in nn.ts)

```typescript
/** A node's activation function and its derivative. */
export interface ActivationFunction {
  output: (input: number) => number;
  der: (input: number) => number;
}
```
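Any object with an `output` function and a matching `der` function satisfies this interface. As an illustration only, here is a hypothetical softplus activation, which is not one of the playground's built-ins. Its derivative is the sigmoid function:

```typescript
// The interface from nn.ts, repeated so this sketch is self-contained.
interface ActivationFunction {
  output: (input: number) => number;
  der: (input: number) => number;
}

// Hypothetical extra activation (softplus): output = ln(1 + e^x),
// derivative = 1 / (1 + e^-x), i.e. the logistic sigmoid.
const SOFTPLUS: ActivationFunction = {
  output: x => Math.log(1 + Math.exp(x)),
  der: x => 1 / (1 + Math.exp(-x)),
};

console.log(SOFTPLUS.output(0)); // ≈ 0.6931 (ln 2)
console.log(SOFTPLUS.der(0));    // 0.5
```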

```typescript
/** Built-in activation functions */
export class Activations {
  public static TANH: ActivationFunction = {
    output: x => (Math as any).tanh(x),
    der: x => {
      let output = Activations.TANH.output(x);
      return 1 - output * output;
    }
  };
  public static RELU: ActivationFunction = {
    output: x => Math.max(0, x),
    der: x => x <= 0 ? 0 : 1
  };
  public static SIGMOID: ActivationFunction = {
    output: x => 1 / (1 + Math.exp(-x)),
    der: x => {
      let output = Activations.SIGMOID.output(x);
      return output * (1 - output);
    }
  };
  public static LINEAR: ActivationFunction = {
    output: x => x,
    der: x => 1
  };
}
```
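Each `der` must be the derivative of the corresponding `output`. One way to sanity-check the built-ins is to compare them against a central finite difference. The sketch below re-declares the four formulas as a hypothetical standalone copy rather than importing nn.ts. It skips x = 0, where ReLU has no derivative; `RELU.der` simply returns 0 there by convention.

```typescript
interface ActivationFunction {
  output: (input: number) => number;
  der: (input: number) => number;
}

// Copies of the built-in formulas above. tanh goes through `any` for the same
// reason as in nn.ts: older TypeScript lib targets lack typings for Math.tanh.
const tanh = (x: number): number => (Math as any).tanh(x);
const sigmoid = (x: number): number => 1 / (1 + Math.exp(-x));

const builtIns: Array<[string, ActivationFunction]> = [
  ["TANH", { output: tanh, der: x => 1 - tanh(x) * tanh(x) }],
  ["RELU", { output: x => Math.max(0, x), der: x => (x <= 0 ? 0 : 1) }],
  ["SIGMOID", { output: sigmoid, der: x => sigmoid(x) * (1 - sigmoid(x)) }],
  ["LINEAR", { output: x => x, der: () => 1 }],
];

// Central finite difference: (f(x + h) - f(x - h)) / (2h) approximates f'(x).
function numericDer(f: (x: number) => number, x: number, h = 1e-5): number {
  return (f(x + h) - f(x - h)) / (2 * h);
}

// Largest gap between der() and the numerical slope at a few non-zero points.
const points = [-2, -0.5, 0.3, 1.7];
const maxErrors: number[] = [];
for (const [name, fn] of builtIns) {
  let maxErr = 0;
  for (const x of points) {
    maxErr = Math.max(maxErr, Math.abs(fn.der(x) - numericDer(fn.output, x)));
  }
  maxErrors.push(maxErr);
  console.log(`${name}: max |der - numeric| = ${maxErr.toExponential(2)}`);
}
```

All four gaps should be tiny, far below 1e-6. A noticeably larger gap would point to a `der` that does not match its `output`.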

Full code

Node (class Node in nn.ts)

```typescript
export class Node {
  id: string;
  /** List of input links. */
  inputLinks: Link[] = [];
  bias = 0.1;
  /** List of output links. */
  outputs: Link[] = [];
  totalInput: number;
  output: number;
  /** Error derivative with respect to this node's output. */
  outputDer = 0;
  /** Error derivative with respect to this node's total input. */
  inputDer = 0;
  /**
   * Accumulated error derivative with respect to this node's total input since
   * the last update. This derivative equals dE/db where b is the node's
   * bias term.
   */
  accInputDer = 0;
  /**
   * Number of accumulated err. derivatives with respect to the total input
   * since the last update.
   */
  numAccumulatedDers = 0;
  /** Activation function that takes total input and returns node's output */
  activation: ActivationFunction;

  /**
   * Creates a new node with the provided id and activation function.
   */
  constructor(id: string, activation: ActivationFunction, initZero?: boolean) {
    this.id = id;
    this.activation = activation;
    if (initZero) {
      this.bias = 0;
    }
  }

  /** Recomputes the node's output and returns it. */
  updateOutput(): number {
    // Stores total input into the node.
    this.totalInput = this.bias;
    for (let j = 0; j < this.inputLinks.length; j++) {
      let link = this.inputLinks[j];
      this.totalInput += link.weight * link.source.output;
    }
    this.output = this.activation.output(this.totalInput);
    return this.output;
  }
}
```
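The constructor's `initZero` flag only changes the initial bias. The minimal sketch below shows the effect. The class name `PlaygroundNodeSketch` is hypothetical, and `totalInput` and `output` are initialized to 0 here so the code also compiles under `strict`. It includes a node with no input links, whose output is just f(bias):

```typescript
interface ActivationFunction {
  output: (input: number) => number;
  der: (input: number) => number;
}

interface SketchLink {
  source: { output: number };
  weight: number;
}

// Hypothetical reduced copy of class Node: constructor and updateOutput() only.
class PlaygroundNodeSketch {
  id: string;
  inputLinks: SketchLink[] = [];
  bias = 0.1;
  totalInput = 0;
  output = 0;
  activation: ActivationFunction;

  constructor(id: string, activation: ActivationFunction, initZero?: boolean) {
    this.id = id;
    this.activation = activation;
    if (initZero) {
      this.bias = 0; // initZero overrides the default bias of 0.1
    }
  }

  updateOutput(): number {
    this.totalInput = this.bias;
    for (const link of this.inputLinks) {
      this.totalInput += link.weight * link.source.output;
    }
    this.output = this.activation.output(this.totalInput);
    return this.output;
  }
}

const SIGMOID_SKETCH: ActivationFunction = {
  output: x => 1 / (1 + Math.exp(-x)),
  der: x => {
    const s = 1 / (1 + Math.exp(-x));
    return s * (1 - s);
  },
};

const a = new PlaygroundNodeSketch("a", SIGMOID_SKETCH);
const b = new PlaygroundNodeSketch("b", SIGMOID_SKETCH, true);
console.log(a.bias, b.bias);   // 0.1 0
// No input links, so totalInput = bias = 0 and sigmoid(0) = 0.5.
console.log(b.updateOutput()); // 0.5
```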

