As usual, the source first: https://github.com/stereolabs/zed-examples

For building the ZED2 SDK on Linux (CUDA 10.0 used), see the earlier article in this series: https://blog.csdn.net/hhaowang/article/details/115401380?spm=1001.2014.3001.5501

Official ZED2 documentation: https://www.stereolabs.com/docs/

1. Install the zed-ros-wrapper dependencies

Project repository: https://github.com/stereolabs/zed-ros-wrapper#build-the-program

Build the program

The zed_ros_wrapper is a catkin package. It depends on the following ROS packages:

- nav_msgs

- tf2_geometry_msgs

- message_runtime

- catkin

- roscpp

- stereo_msgs

- rosconsole

- robot_state_publisher

- urdf

- sensor_msgs

- image_transport

- roslint

- diagnostic_updater

- dynamic_reconfigure

- tf2_ros

- message_generation

- nodelet

Build the zed_ros_wrapper package

$ cd ~/catkin_ws/src

$ git clone https://github.com/stereolabs/zed-ros-wrapper.git

$ cd ../

$ rosdep install --from-paths src --ignore-src -r -y

$ catkin_make -DCMAKE_BUILD_TYPE=Release

$ source ./devel/setup.bash

Quick test:

ZED2 camera:

$ roslaunch zed_wrapper zed2.launch

2. ZED2 wrapper topic list

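Inspect the node information

Inspect the topic information

Topics published for the left camera

By default, the rgb_image topics are published from the left camera's frames.

Right camera topics

Stereo and depth topics

SLAM-related topics

(not covered for now)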

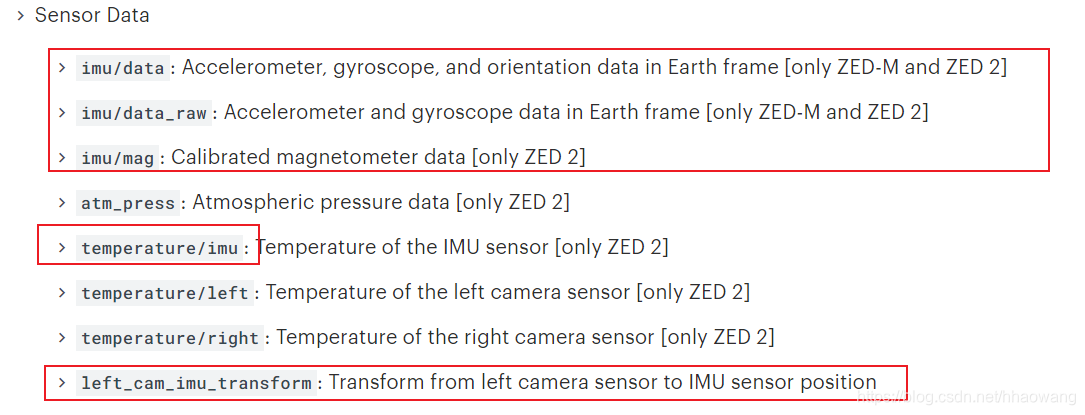

Sensor data topics

3. ZED parameter server

    # params/zed2.yaml
    # Parameters for Stereolabs ZED2 camera
    ---

    general:
        camera_model: 'zed2'

    depth:
        min_depth: 0.7  # Min: 0.2, Max: 3.0 - Default 0.7 - Note: reducing this value will require more computational power and GPU memory
        max_depth: 20.0  # Max: 40.0

    pos_tracking:
        imu_fusion: true  # enable/disable IMU fusion. When set to false, only the optical odometry will be used.

    sensors:
        sensors_timestamp_sync: false  # Synchronize Sensors messages timestamp with latest received frame
        publish_imu_tf: true  # publish `IMU -> <cam_name>_left_camera_frame` TF

    object_detection:
        od_enabled: false  # True to enable Object Detection [only ZED 2]
        model: 0  # '0': MULTI_CLASS_BOX - '1': MULTI_CLASS_BOX_ACCURATE - '2': HUMAN_BODY_FAST - '3': HUMAN_BODY_ACCURATE
        confidence_threshold: 50  # Minimum value of the detection confidence of an object [0,100]
        max_range: 15.  # Maximum detection range
        object_tracking_enabled: true  # Enable/disable the tracking of the detected objects
        body_fitting: false  # Enable/disable body fitting for 'HUMAN_BODY_FAST' and 'HUMAN_BODY_ACCURATE' models
        mc_people: true  # Enable/disable the detection of persons for 'MULTI_CLASS_BOX' and 'MULTI_CLASS_BOX_ACCURATE' models
        mc_vehicle: true  # Enable/disable the detection of vehicles for 'MULTI_CLASS_BOX' and 'MULTI_CLASS_BOX_ACCURATE' models
        mc_bag: true  # Enable/disable the detection of bags for 'MULTI_CLASS_BOX' and 'MULTI_CLASS_BOX_ACCURATE' models
        mc_animal: true  # Enable/disable the detection of animals for 'MULTI_CLASS_BOX' and 'MULTI_CLASS_BOX_ACCURATE' models
        mc_electronics: true  # Enable/disable the detection of electronic devices for 'MULTI_CLASS_BOX' and 'MULTI_CLASS_BOX_ACCURATE' models
        mc_fruit_vegetable: true  # Enable/disable the detection of fruits and vegetables for 'MULTI_CLASS_BOX' and 'MULTI_CLASS_BOX_ACCURATE' models

GENERAL PARAMETERS

Parameters with prefix general

| PARAMETER | DESCRIPTION | VALUE |
|---|---|---|
| camera_name | A custom name for the ZED camera. Used as namespace and prefix for camera TF frames | string, default=zed |
| camera_model | Type of Stereolabs camera | zed: ZED, zedm: ZED-M, zed2: ZED 2 |
| camera_flip | Flip the camera data if it is mounted upside down | true, false |
| zed_id | Select a ZED camera by its ID. Useful when multiple cameras are connected. ID is ignored if an SVO path is specified | int, default 0 |
| serial_number | Select a ZED camera by its serial number | int, default 0 |
| resolution | Set ZED camera resolution | 0: HD2K, 1: HD1080, 2: HD720, 3: VGA |
| grab_frame_rate | Set ZED camera grabbing framerate | int |
| gpu_id | Select a GPU device for depth computation | int, default -1 (best device found) |
| base_frame | Frame_id of the frame that indicates the reference base of the robot | string, default=base_link |
| verbose | Enable/disable the verbosity of the SDK | true, false |
| svo_compression | Set SVO compression mode | 0: LOSSLESS (PNG/ZSTD), 1: H264 (AVCHD), 2: H265 (HEVC) |
| self_calib | Enable/disable self calibration at startup | true, false |
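The "prefix" used in these tables corresponds to the nesting level in the YAML file: when a nested YAML file is loaded onto the ROS parameter server, each nested key becomes a slash-separated parameter name. The following is a minimal Python sketch of that mapping, using values from the zed2.yaml excerpt above. The helper `flatten_params` and the namespace `/zed2/zed_node` are assumptions made for illustration; the real namespace depends on `camera_name` and on the launch file.

```python
def flatten_params(tree, prefix=""):
    """Flatten a nested dict into slash-separated parameter names."""
    flat = {}
    for key, value in tree.items():
        name = f"{prefix}/{key}" if prefix else key
        if isinstance(value, dict):
            # Descend into the nested section, carrying the prefix along.
            flat.update(flatten_params(value, name))
        else:
            flat[name] = value
    return flat


# Values copied from the zed2.yaml excerpt in this article.
zed2_yaml = {
    "general": {"camera_model": "zed2"},
    "depth": {"min_depth": 0.7, "max_depth": 20.0},
    "pos_tracking": {"imu_fusion": True},
}

# Hypothetical node namespace, used only for illustration.
NODE_NS = "/zed2/zed_node"

for name, value in flatten_params(zed2_yaml, NODE_NS).items():
    print(name, "=", value)
```

Under that assumed namespace, `min_depth` from the `depth` section would be addressed as `/zed2/zed_node/depth/min_depth`, which matches the `depth/...` and `video/...` notation used for the dynamic parameters later in this article.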

VIDEO PARAMETERS

Parameters with prefix video

| PARAMETER | DESCRIPTION | VALUE |
|---|---|---|
| img_downsample_factor | Resample factor for images [0.01,1.0]. The SDK works with native image sizes, but publishes rescaled images. | double, default=1.0 |
| extrinsic_in_camera_frame | If false, the extrinsic parameters in camera_info use the ROS native frame (X FORWARD, Z UP) instead of the camera frame (Z FORWARD, Y DOWN) [true keeps the old behavior of versions < v3.1] | true, false |
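To get a feel for what `img_downsample_factor` does to the published images, the sketch below scales the native per-eye image size of each `resolution` value by the factor. The function name `published_size` is hypothetical, and rounding to the nearest pixel is an assumption of this sketch; the wrapper may round differently.

```python
# Native (width, height) per eye for each `resolution` value of the ZED cameras.
NATIVE_SIZES = {
    0: (2208, 1242),  # HD2K
    1: (1920, 1080),  # HD1080
    2: (1280, 720),   # HD720
    3: (672, 376),    # VGA
}


def published_size(resolution, img_downsample_factor=1.0):
    """Estimate the published image size for a given downsample factor."""
    if not 0.01 <= img_downsample_factor <= 1.0:
        raise ValueError("img_downsample_factor must be in [0.01, 1.0]")
    width, height = NATIVE_SIZES[resolution]
    # Assumption: sizes are rounded to the nearest pixel.
    return (round(width * img_downsample_factor),
            round(height * img_downsample_factor))


print(published_size(2, 0.5))
```

Halving an HD720 stream this way would publish roughly a quarter of the pixels, which is mostly useful for reducing bandwidth, since the SDK still processes the native size.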

DEPTH PARAMETERS

Parameters with prefix depth

| PARAMETER | DESCRIPTION | VALUE |
|---|---|---|
| quality | Select depth map quality | 0: NONE, 1: PERFORMANCE, 2: MEDIUM, 3: QUALITY, 4: ULTRA |
| sensing_mode | Select depth sensing mode (change only for VR/AR applications) | 0: STANDARD, 1: FILL |
| depth_stabilization | Enable depth stabilization. Stabilizing the depth adds computational load because it enables tracking | 0: disabled, 1: enabled |
| openni_depth_mode | Convert 32-bit depth in meters to 16-bit depth in millimeters | 0: 32-bit float, meters; 1: 16-bit unsigned integer, millimeters |
| depth_downsample_factor | Resample factor for depth data matrices [0.01,1.0]. The SDK works with native data sizes, but publishes rescaled matrices (depth map, point cloud, …) | double, default=1.0 |
| min_depth | Minimum value allowed for depth measures | Min: 0.3 (ZED) or 0.1 (ZED-M), Max: 3.0 - Note: reducing this value will require more computational power and GPU memory. In cases of limited compute power, increasing this value can provide better performance |
| max_depth | Maximum value allowed for depth measures | Min: 1.0, Max: 30.0 - Values beyond this limit will be reported as TOO_FAR |
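The `openni_depth_mode` conversion can be pictured as follows. This is only a sketch of the unit and type change (float meters to 16-bit unsigned millimeters), not the wrapper's actual code; the function name `to_openni_depth` is hypothetical, and mapping invalid measures (NaN, infinity, non-positive values) to 0 is an assumption based on the usual OpenNI "no data" convention.

```python
import math

UINT16_MAX = 65535


def to_openni_depth(depth_m):
    """Convert depth values in meters (float) to 16-bit unsigned millimeters."""
    out = []
    for d in depth_m:
        if not math.isfinite(d) or d <= 0.0:
            # Assumption: invalid measures become 0 ("no data").
            out.append(0)
        else:
            # Scale to millimeters and saturate at the uint16 limit.
            out.append(min(round(d * 1000.0), UINT16_MAX))
    return out


samples = [0.7, 1.234, float("nan"), float("inf"), 70.0]
print(to_openni_depth(samples))
```

A 16-bit millimeter value tops out at about 65.5 m, which comfortably covers the `max_depth` limits listed in the table.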

POSITION PARAMETERS

Parameters with prefix pos_tracking

| PARAMETER | DESCRIPTION | VALUE |
|---|---|---|
| publish_tf | Enable/disable publishing of TF frames | true, false |
| publish_map_tf | Enable/disable publishing of the map TF frame | true, false |
| map_frame | Frame_id of the pose message | string, default=map |
| odometry_frame | Frame_id of the odom message | string, default=odom |
| area_memory_db_path | Path of the database file for loop closure and relocalization that contains learnt visual information about the environment | string, default=`` |
| pose_smoothing | Enable smooth pose correction for small drift correction | 0: disabled, 1: enabled |
| area_memory | Enable loop closing | true, false |
| floor_alignment | Indicates if the floor must be used as origin for height measures | true, false |
| initial_base_pose | Initial reference pose | vector, default=[0.0,0.0,0.0, 0.0,0.0,0.0] -> [X, Y, Z, R, P, Y] |
| init_odom_with_first_valid_pose | Indicates if the odometry must be initialized with the first valid pose received by the tracking algorithm | true, false |
| path_pub_rate | Frequency (Hz) of publishing of the trajectory messages | float, default=2.0 |
| path_max_count | Maximum number of poses kept in the pose arrays (-1 for infinite) | int, default=-1 |
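`initial_base_pose` is given as [X, Y, Z, R, P, Y], while ROS pose messages store orientation as a quaternion. The sketch below shows the standard roll/pitch/yaw to quaternion conversion, which may help when comparing the configured pose with a published pose. It assumes angles in radians and the fixed-axis roll, pitch, yaw convention commonly used in ROS; neither is stated in the table above, and `rpy_to_quaternion` is a name chosen here for illustration.

```python
import math


def rpy_to_quaternion(roll, pitch, yaw):
    """Convert roll, pitch, yaw (radians) to a quaternion (x, y, z, w)."""
    cr, sr = math.cos(roll / 2.0), math.sin(roll / 2.0)
    cp, sp = math.cos(pitch / 2.0), math.sin(pitch / 2.0)
    cy, sy = math.cos(yaw / 2.0), math.sin(yaw / 2.0)
    x = sr * cp * cy - cr * sp * sy
    y = cr * sp * cy + sr * cp * sy
    z = cr * cp * sy - sr * sp * cy
    w = cr * cp * cy + sr * sp * sy
    return (x, y, z, w)


# Default initial_base_pose: no translation, no rotation.
initial_base_pose = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
position = initial_base_pose[:3]
orientation = rpy_to_quaternion(*initial_base_pose[3:])
print(position, orientation)
```

With the default pose the result is the identity quaternion, so the camera base starts aligned with the map frame.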

MAPPING PARAMETERS

Parameters with prefix mapping

Note: the mapping module requires SDK v2.8 or higher

| PARAMETER | DESCRIPTION | VALUE |
|---|---|---|
| mapping_enabled | Enable/disable the mapping module | true, false |
| resolution | Resolution of the fused point cloud [0.01, 0.2] | double, default=0.1 |
| max_mapping_range | Maximum depth range while mapping in meters (-1 for automatic calculation) [2.0, 20.0] | double, default=-1 |
| fused_pointcloud_freq | Publishing frequency (Hz) of the 3D map as fused point cloud | double, default=1.0 |

SENSORS PARAMETERS (ONLY ZED-M AND ZED 2)

Parameters with prefix sensors

| PARAMETER | DESCRIPTION | VALUE |
|---|---|---|
| sensors_timestamp_sync | Synchronize Sensors message timestamp with latest received frame | true, false |

OBJECT DETECTION PARAMETERS (ONLY ZED 2)

Parameters with prefix object_detection

| PARAMETER | DESCRIPTION | VALUE |
|---|---|---|
| od_enabled | Enable/disable the Object Detection module | true, false |
| confidence_threshold | Minimum value of the detection confidence of an object | int [0,100] |
| object_tracking_enabled | Enable/disable the tracking of the detected objects | true, false |
| people_detection | Enable/disable the detection of persons | true, false |
| vehicle_detection | Enable/disable the detection of vehicles | true, false |

Dynamic parameters

The ZED node lets you reconfigure these parameters dynamically:

| PARAMETER | DESCRIPTION | VALUE |
|---|---|---|
| general/pub_frame_rate | Publishing frequency of Video and Depth images (less than or equal to the grab_frame_rate value) | float [0.1,100.0] |
| depth/depth_confidence | Threshold to reject depth values based on their confidence. Each depth pixel has a corresponding confidence. A lower value means more confidence and precision (but less density). A higher value reduces filtering (more density, less certainty). A value of 100 allows values from 0 to 100 (no filtering). A value of 90 allows values from 10 to 100 (filtering the lowest confidence values). A value of 30 allows values from 70 to 100 (keeping the highest confidence values and lowering the density of the depth map). The value should be in [1,100]. By default, the confidence threshold is set at 100, meaning that no depth pixel will be rejected. | int [0,100] |
| depth/depth_texture_conf | Threshold to reject depth values based on their textureness confidence. A lower value means more confidence and precision (but less density). A higher value reduces filtering (more density, less certainty). The value should be in [1,100]. By default, the confidence threshold is set at 100, meaning that no depth pixel will be rejected. | int [0,100] |
| depth/point_cloud_freq | Publishing frequency of the point cloud (less than or equal to the frame_rate value) | float [0.1,100.0] |
| video/brightness | Defines the brightness control | int [0,8] |
| video/contrast | Defines the contrast control | int [0,8] |
| video/hue | Defines the hue control | int [0,11] |
| video/saturation | Defines the saturation control | int [0,8] |
| video/sharpness | Defines the sharpness control | int [0,8] |
| video/gamma | Defines the gamma control | int [1,9] |
| video/auto_exposure_gain | Defines if the Gain and Exposure are in automatic mode or not | true, false |
| video/gain | Defines the gain control [only if auto_exposure_gain is false] | int [0,100] |
| video/exposure | Defines the exposure control [only if auto_exposure_gain is false] | int [0,100] |
| video/auto_whitebalance | Defines if the White balance is in automatic mode or not | true, false |
| video/whitebalance_temperature | Defines the color temperature value (x100) | int [42,65] |
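Two of these entries are easy to misread: `depth/depth_confidence` works "backwards" (a lower threshold filters more), and `video/whitebalance_temperature` is stored divided by 100. The sketch below restates the rules from the table as small Python helpers. Both function names are hypothetical, and reading the color temperature in kelvin is an assumption, since the table only says "x100".

```python
def accepted_confidence_range(depth_confidence):
    """Return the (low, high) range of values kept for a given threshold.

    Follows the examples in the table: 100 -> 0..100, 90 -> 10..100,
    30 -> 70..100.
    """
    if not 1 <= depth_confidence <= 100:
        raise ValueError("depth_confidence should be in [1, 100]")
    return (100 - depth_confidence, 100)


def whitebalance_kelvin(whitebalance_temperature):
    """Convert the stored parameter value to a color temperature.

    Assumption: the unit is kelvin; the table only states "(x100)".
    """
    if not 42 <= whitebalance_temperature <= 65:
        raise ValueError("whitebalance_temperature should be in [42, 65]")
    return whitebalance_temperature * 100


for threshold in (100, 90, 30):
    print(threshold, "->", accepted_confidence_range(threshold))
print(whitebalance_kelvin(42), whitebalance_kelvin(65))
```

In practice this means lowering `depth_confidence` from 100 toward 30 trades depth map density for reliability.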

To modify a dynamic parameter, you can use the GUI provided by the rqt stack:

$ rosrun rqt_reconfigure rqt_reconfigure

4. ZED TF

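This article describes how to use the ZED2 camera in a ROS environment and explains the configuration parameters of the ZED2 ROS wrapper and what they do, including the video, depth, and position tracking parameters.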
