@article{yue2023dif,
  title={Dif-fusion: Towards high color fidelity in infrared and visible image fusion with diffusion models},
  author={Yue, Jun and Fang, Leyuan and Xia, Shaobo and Deng, Yue and Ma, Jiayi},
  journal={arXiv preprint arXiv:2301.08072},
  year={2023}
}

Paper tier: -

Impact factor: -


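📖Paper walkthrough

Earlier visible and infrared image fusion (VIF) networks convert multi-channel images into single-channel images and so ignore color fidelity. To address this, the authors propose Dif-Fusion, an image fusion network based on diffusion models. In a latent space with forward and reverse diffusion, a denoising network models the multi-channel data distribution. The same denoising network then extracts multi-channel diffusion features that carry both visible and infrared information. Finally, the diffusion features are fed into a multi-channel fusion module to generate a three-channel fused image.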

🔑Keywords

Image fusion, color fidelity, multimodal information, diffusion models, latent representation, deep generative model.

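💭Core idea

Concatenate the source images along the channel dimension and feed them into the diffusion model, then extract diffusion features from the model. These multi-channel diffusion features are fed into a multi-channel fusion network, which recovers the multi-channel fused image.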
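As a rough illustration of the first step only, the sketch below stacks a 3-channel visible image and a 1-channel infrared image into a 4-channel input and applies one closed-form forward diffusion step in the standard DDPM form, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε. The helper names (`concat_channels`, `linear_alpha_bar`, `forward_diffuse`), the linear beta schedule and its endpoints, the number of steps, and the toy 2×2 inputs are all assumptions made for illustration; none of them come from the paper or its code. It uses plain Python lists to stay dependency-free and does not cover the denoising network, the diffusion feature extraction, or the fusion module.

```python
import math
import random


def concat_channels(visible_rgb, infrared):
    """Stack a 3-channel visible image and a 1-channel infrared image into 4 channels.

    Both inputs are nested lists indexed [row][col]. Visible pixels are (r, g, b)
    tuples and infrared pixels are single floats (value range is an assumption).
    """
    return [
        [list(vis_px) + [ir_px] for vis_px, ir_px in zip(vis_row, ir_row)]
        for vis_row, ir_row in zip(visible_rgb, infrared)
    ]


def linear_alpha_bar(num_steps, beta_start=1e-4, beta_end=2e-2):
    """Cumulative products of (1 - beta_t) for a linear beta schedule (assumed)."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def forward_diffuse(x0, alpha_bar_t, rng):
    """Sample x_t = sqrt(a_bar) * x0 + sqrt(1 - a_bar) * eps, with eps ~ N(0, I)."""
    signal = math.sqrt(alpha_bar_t)
    noise = math.sqrt(1.0 - alpha_bar_t)
    return [
        [[signal * c + noise * rng.gauss(0.0, 1.0) for c in px] for px in row]
        for row in x0
    ]


# Toy 2x2 inputs (hypothetical values, not real data).
rng = random.Random(0)
visible = [[(0.2, 0.4, 0.6), (0.1, 0.1, 0.1)], [(0.9, 0.8, 0.7), (0.0, 0.5, 1.0)]]
infrared = [[0.3, 0.7], [0.5, 0.2]]

x0 = concat_channels(visible, infrared)          # each pixel now has 4 channels
alpha_bars = linear_alpha_bar(num_steps=1000)    # decreasing from ~1 toward 0
xt = forward_diffuse(x0, alpha_bars[499], rng)   # noisy 4-channel sample at a mid step
```

Because the noise is added to all four channels jointly, a denoising network trained on `xt` sees visible and infrared information together, which is the property the core idea relies on.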

🪢Network architecture

The network architecture proposed by the authors is shown below.

📉Loss function

🔢Datasets

Links to image fusion datasets

[Roundup of common image fusion datasets]

🎢Training setup

🔬Experiments

📏Evaluation metrics

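- MI (mutual information)

- VIF (visual information fidelity)

- SF (spatial frequency)

- Qabf (edge-based fusion quality)

- SD (standard deviation)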
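Two of these metrics, SD and SF, are simple enough to sketch directly. The code below is a minimal sketch, assuming a single-channel image stored as a nested list and the common definitions SD = sqrt(mean((F − μ)²)) and SF = sqrt(RF² + CF²), where RF and CF are the root-mean-square horizontal and vertical first differences normalized by the pixel count. The function names are hypothetical, normalization conventions vary between toolboxes, and this is not necessarily the exact evaluation code used in the paper.

```python
import math


def standard_deviation(img):
    """SD: population standard deviation of all pixel intensities."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return math.sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))


def spatial_frequency(img):
    """SF: sqrt(RF^2 + CF^2), with both terms normalized by rows * cols."""
    rows, cols = len(img), len(img[0])
    # Squared differences between horizontally adjacent pixels (row frequency).
    row_sq = sum(
        (img[i][j] - img[i][j - 1]) ** 2 for i in range(rows) for j in range(1, cols)
    )
    # Squared differences between vertically adjacent pixels (column frequency).
    col_sq = sum(
        (img[i][j] - img[i - 1][j]) ** 2 for i in range(1, rows) for j in range(cols)
    )
    return math.sqrt((row_sq + col_sq) / (rows * cols))


# Toy example: a 2x2 image with a vertical edge (hypothetical values).
edge = [[0.0, 2.0], [0.0, 2.0]]
sd_value = standard_deviation(edge)
sf_value = spatial_frequency(edge)
```

Higher values of both metrics indicate more contrast and more gradient detail in the fused image; MI, VIF, and Qabf additionally compare the fused image against the source images and are not covered here.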

References

[Analysis of quantitative metrics for image fusion]

🥅Baseline

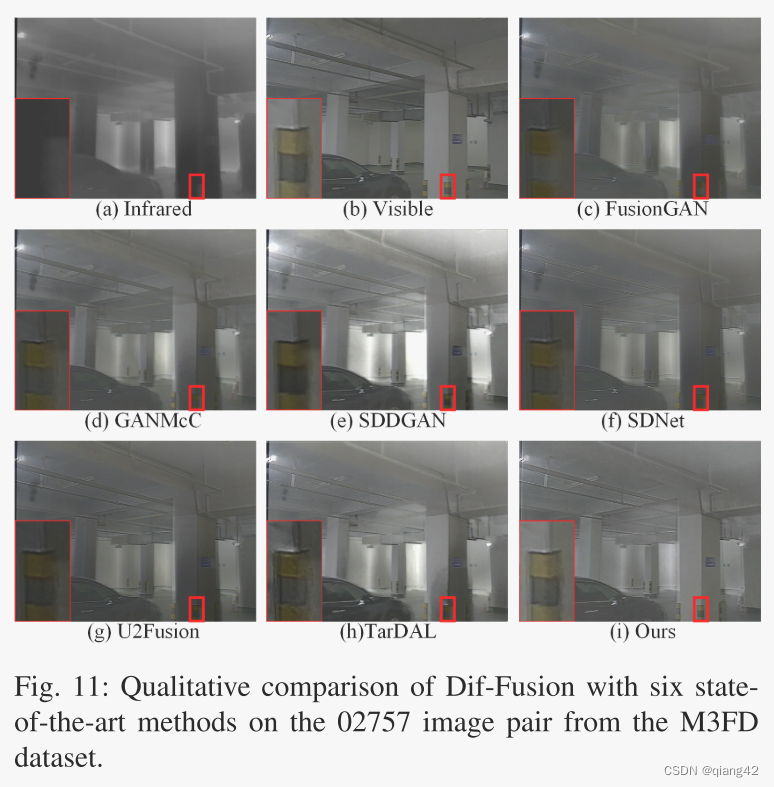

- FusionGAN, SDDGAN, GANMcC, SDNet, U2Fusion, TarDAL

✨✨✨References

✨✨✨Highly recommended blog post: [Image fusion paper baselines and their network models]✨✨✨

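🔬Experimental results

More experimental results and analysis can be found in the original paper:

📖[Paper download link]

💽[Code download link]

🚀Quick links

📑Reading notes on image fusion papers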

📑[LRRNet: A Novel Representation Learning Guided Fusion Network for Infrared and Visible Images]

📑[(DeFusion)Fusion from decomposition: A self-supervised decomposition approach for image fusion]

📑[ReCoNet: Recurrent Correction Network for Fast and Efficient Multi-modality Image Fusion]

📑[RFN-Nest: An end-to-end residual fusion network for infrared and visible images]

📑[SwinFuse: A Residual Swin Transformer Fusion Network for Infrared and Visible Images]

📑[SwinFusion: Cross-domain Long-range Learning for General Image Fusion via Swin Transformer]

📑[(MFEIF)Learning a Deep Multi-Scale Feature Ensemble and an Edge-Attention Guidance for Image Fusion]

📑[DenseFuse: A fusion approach to infrared and visible images]

📑[DeepFuse: A Deep Unsupervised Approach for Exposure Fusion with Extreme Exposure Image Pair]

📑[GANMcC: A Generative Adversarial Network With Multiclassification Constraints for IVIF]

📑[DIDFuse: Deep Image Decomposition for Infrared and Visible Image Fusion]

📑[IFCNN: A general image fusion framework based on convolutional neural network]

📑[(PMGI) Rethinking the image fusion: A fast unified image fusion network based on proportional maintenance of gradient and intensity]

📑[SDNet: A Versatile Squeeze-and-Decomposition Network for Real-Time Image Fusion]

📑[DDcGAN: A Dual-Discriminator Conditional Generative Adversarial Network for Multi-Resolution Image Fusion]

📑[FusionGAN: A generative adversarial network for infrared and visible image fusion]

📑[PIAFusion: A progressive infrared and visible image fusion network based on illumination aw]

📑[CDDFuse: Correlation-Driven Dual-Branch Feature Decomposition for Multi-Modality Image Fusion]

📑[U2Fusion: A Unified Unsupervised Image Fusion Network]

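📑Survey: [Visible and Infrared Image Fusion Using Deep Learning]

📚Summary of image fusion paper baselines

📑Other papers

📑[3D object detection survey: Multi-Modal 3D Object Detection in Autonomous Driving: A Survey]

🎈Other roundups

🎈[CVPR 2023 and ICCV 2023 paper titles and word-frequency statistics]

✨Featured posts

✨[The most complete collection of image fusion papers and code]

✨[Roundup of common image fusion datasets]

Questions? Contact: 420269520@qq.com

Writing these posts takes effort. Following, bookmarking, and liking are what keep me updating. Wishing you all early papers and a smooth graduation~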
