安装

Home · eggnogdb/eggnog-mapper Wiki · GitHub

git clone https://github.com/eggnogdb/eggnog-mapper.git

cd eggnog-mapper

python setup.py install

# 如果您的 eggnog-mapper 路径是/home/user/eggnog-mapper

export PATH=/home/user/eggnog-mapper:/home/user/eggnog-mapper/eggnogmapper/bin:"$PATH"

# 设置database安装路径

export EGGNOG_DATA_DIR=/home/user/eggnog-mapper-data

# 下载默认数据库

download_eggnog_data.py

# 构建特定物种数据库

create_dbs.py -m diamond --dbname Viruses --taxids 2 #细菌的

#hmmer太慢了,舍弃

########################################################################################

# 下载数据库(我这里下载了hmmer的病毒数据库加上默认数据库)

# 更多可见 http://eggnog5.embl.de/#/app/downloads 和 查看download_eggnog_data.py --help

#download_eggnog_data.py -y -P -H -d 10239 --dbname Viruses

# 顺便把hmmer的其他几个关键数据库下载了

#nohup download_eggnog_data.py -y -H -d 2 --dbname Bacteria

#nohup download_eggnog_data.py -y -H -d 2759 --dbname Eukaryota

#nohup download_eggnog_data.py -y -H -d 2157 --dbname Archaea

使用

python ~/Software/eggnog-mapper/emapper.py -i A4.2.1_wanzheng.faa -o 13 --cpu 90 --usemem --override --evalue 1e-5 --score 50 -m hmmer -d Viruses-i A4.2.1_wanzheng.faa:输入文件,应为一个包含蛋白质序列的FASTA文件。-o 13:输出文件的前缀,这里是13。EggNOG-mapper会生成多个输出文件,如13.emapper.annotations。--cpu 90:使用的CPU核心数,这里是90。--usemem:这个参数表示在搜索过程中使用尽可能多的内存。--override:如果输出文件已经存在,这个参数会让EggNOG-mapper覆盖它们。--evalue 1e-5:设置E值阈值,这里是1e-5。E值越小,表示匹配结果的可信度越高。--score 50:设置比对得分阈值,这里是50。得分越高,表示匹配结果的相似度越高

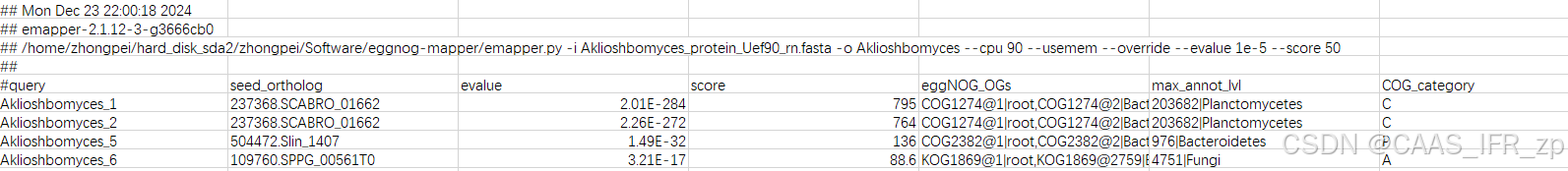

结果文件是emapper.annotations结尾的这个

参考别人的解释:基因组注释(6)——在线版eggNOG-mapper注释功能基因 - 我的小破站

我想写一个代码,对多个进行了eggnog注释的结果文件,根据结果的不同列,进行合并和计数

我想写一个代码,对多个进行了eggnog注释的结果文件,根据结果的不同列,进行合并和计数

#! /usr/bin/env python

#########################################################

# make eggnog result together

# written by PeiZhong in IFR of CAAS

import argparse

import os

import pandas as pd

parser = argparse.ArgumentParser(description='TPM count file combine')

parser.add_argument('--input_dir', "-dir", required=True, help='Path to eggnog result files')

parser.add_argument('--marker', "-m", nargs='+', required=True, help='marker that can identify result files')

parser.add_argument('--out_prefix', required=True, help='output file prefix')

parser.add_argument('--level', '-l', type=str, required=True, help='COG_category, Description, GOs, EC, KEGG_ko, KEGG_Pathway, KEGG_Module, CAZy, PFAMs, only use one in a time')

args = parser.parse_args()

input_dir = args.input_dir

markers = args.marker

out_prefix = args.out_prefix

level = args.level

ls_level = ["COG_category","Description","GOs","EC","KEGG_ko","KEGG_Pathway","KEGG_Module","CAZy","PFAMs"]

if level not in ls_level:

raise ValueError("Invalid level specified. Use COG_category, Description, GOs, EC, KEGG_ko, KEGG_Pathway, KEGG_Module, CAZy, PFAMs.")

result_ls = []

# Step 1: find result files

print(f"Scanning input directory: {input_dir}")

all_files = os.listdir(input_dir)

filtered_files = [

os.path.join(input_dir, file)

for file in all_files

if all(marker in file for marker in markers)

]

if not filtered_files:

print(f"No files matched the makers: {' '.join(markers)} in directory {input_dir}")

exit(1)

# Step 2: Read and process files

result_df = pd.DataFrame()

temp_files = [] # List to track temporary files

for file in filtered_files:

print(f"Preprocessing file: {file}")

temp_file = f"{file}.temp"

temp_files.append(temp_file)

with open(file, "r") as infile, open(temp_file, "w") as outfile:

for line in infile:

if line.startswith("#query"):

outfile.write(line.lstrip("#"))

else:

outfile.write(line)

sample_name = os.path.basename(file) # Use the filename as the sample name

print(f"Processing file: {temp_file}")

df = pd.read_csv(temp_file, sep='\t', comment='#') # Use the preprocessed temporary file

if level not in df.columns:

print(f"Level '{level}' not found in file {temp_file}. Skipping.")

continue

# Split comma-separated annotations into separate rows

df = df[[level]].dropna()

df[level] = df[level].str.split(',') # Split annotations by commas

df = df.explode(level) # Expand the DataFrame so each annotation has its own row

df[level] = df[level].str.strip("\n") # Remove extra spaces if present

# Count annotations for this sample

count_df = df[level].value_counts().reset_index()

count_df.columns = [level, sample_name]

# Merge the count table into the result DataFrame

if result_df.empty:

result_df = count_df

else:

result_df = pd.merge(result_df, count_df, on=level, how='outer')

# Step 3: Fill missing values with 0 and output result

result_df.fillna(0, inplace=True) # Replace NaN with 0 for counts

result_df = result_df.sort_values(by=level) # Optional: Sort by annotation name

output_file = f"{out_prefix}_{level}_counts_matrix.csv"

print(f"Writing output to {output_file}")

result_df.to_csv(output_file, index=False)

# Step 4: Clean up temporary files

print("Cleaning up temporary files...")

for temp_file in temp_files:

if os.path.exists(temp_file):

os.remove(temp_file)

print("Done!")

eggnog_level_together.py -dir . -m emapper.annotations --out_prefix 16_ANF_genes -l CAZy结果:

2694

2694

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?