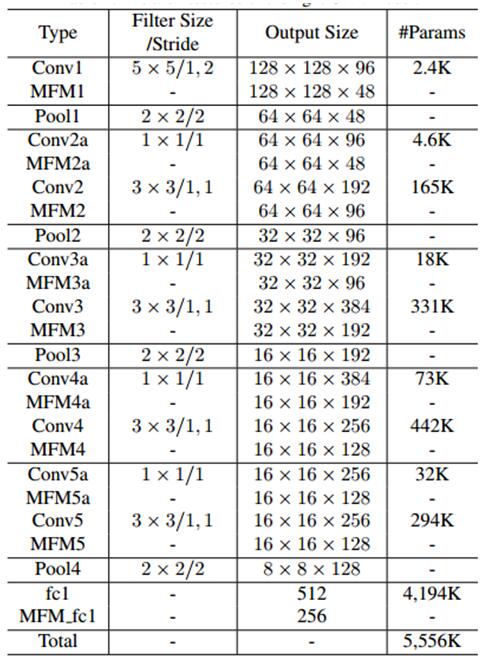

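light_cnn comes from Xiang Wu et al., "A Light CNN for Deep Face Representation with Noisy Labels" (2016, CVPR).

Its advantages are a very small model combined with a very good recognition rate. The main reasons are: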

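(1) The author uses maxout as the activation function, in a form called MFM (Max-Feature-Map): the convolution outputs are split into two halves and only the element-wise maximum of the two halves is kept. This competition between feature maps filters out noise while preserving useful signals, which produces better feature maps. I think this is a very good idea; I applied it to a center_loss model and got an improvement of roughly 0.5%. In Caffe this maxout is simply a Slice layer followed by an Eltwise (MAX) layer, and these two layers have two advantages: first, they introduce no trainable parameters; second, they take almost no time to run. It is essentially a free accuracy gain.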
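The MFM operation described above can be sketched in a few lines. This is a pure-Python illustration, not the author's Caffe definition: it assumes the feature maps are given as a list of channels (each channel a flat list of activations) with an even channel count, and the function name `mfm` is hypothetical. The two steps correspond to the Slice and Eltwise (MAX) layers.

```python
from typing import List


def mfm(channels: List[List[float]]) -> List[List[float]]:
    """Max-Feature-Map: split 2n channels into two halves (the 'Slice' step)
    and keep the element-wise maximum of corresponding channels (the
    'Eltwise MAX' step). Returns n channels and has no trainable parameters."""
    if len(channels) % 2 != 0:
        raise ValueError("MFM needs an even number of channels")
    half = len(channels) // 2
    first, second = channels[:half], channels[half:]
    return [[max(a, b) for a, b in zip(c1, c2)]
            for c1, c2 in zip(first, second)]


if __name__ == "__main__":
    # 4 input channels with 3 activations each -> 2 output channels
    x = [[1.0, -2.0, 3.0],
         [0.5, 0.5, 0.5],
         [-1.0, 4.0, 2.0],
         [2.0, 0.0, -3.0]]
    print(mfm(x))  # [[1.0, 4.0, 3.0], [2.0, 0.5, 0.5]]
```

Because the operation is only a comparison per element, its cost is negligible next to the convolutions around it, which matches the "free gain" observation above.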

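(2) The author uses NIN (Network in Network), i.e. 1x1 convolutions, to reduce parameters and improve accuracy. The released model A has no NIN layers, while model B does. Both models were trained on CASIA, yet B performs about 0.5% better, at the cost of some extra parameters. Still, compared with other network architectures, using NIN keeps the model considerably smaller. The architecture described in the paper corresponds to models B and C.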
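To see why adding a 1x1 layer costs relatively few parameters, here is a small Python sketch that counts convolution parameters and shows what a 1x1 convolution computes at a single pixel. The helper names and the channel counts (96 and 192) are hypothetical, chosen only for illustration, not taken from the released models.

```python
from typing import List


def conv_params(k: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Trainable parameters of a k x k convolution: one k*k*c_in filter per
    output channel, plus one bias per output channel."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def conv1x1(pixel: List[float], weights: List[List[float]],
            bias: List[float]) -> List[float]:
    """1x1 convolution at a single spatial position: every output channel is
    a weighted sum of the input channels at that same pixel."""
    return [sum(w * x for w, x in zip(row, pixel)) + b
            for row, b in zip(weights, bias)]


if __name__ == "__main__":
    # Hypothetical channel counts, for illustration only
    print(conv_params(1, 96, 96))    # 1x1 layer added in front: 9312
    print(conv_params(3, 96, 192))   # 3x3 layer it precedes: 166080
    print(conv1x1([1.0, 2.0], [[1.0, 1.0], [0.5, -1.0]], [0.0, 1.0]))  # [3.0, -0.5]
```

With these numbers the 1x1 layer adds under 6% of the parameters of the 3x3 layer it precedes, which is consistent with "extra parameters, but a still-small model".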

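Model comparison:

The recognition rate reported in the paper is 98.80%, which is very close to my own test result. For testing I used the alignment method provided by the author.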

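The S model here is a slightly modified Light CNN shared by another expert. It replaces the fully connected layer with convolutional layers to reduce the number of parameters. To keep the output a 256-dimensional feature, the first convolutional layer's stride is doubled, which halves the width and height of its output feature maps. This reduces the model's parameters, and the doubled stride also cuts the convolution work by more than half. On a GPU, where the computation runs in parallel, the speedup may not be noticeable, but in CPU mode the drop in running time is clear.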
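The effect of doubling the stride follows from the convolution output-size formula. The Python sketch below assumes a hypothetical first layer (128x128 input, 5x5 kernel, padding 2) that is not necessarily the S model's actual configuration; it uses floor rounding as Caffe's Convolution layer does.

```python
def conv_out_size(size: int, kernel: int, stride: int = 1, pad: int = 0) -> int:
    """Output width/height of a convolution with floor rounding."""
    return (size + 2 * pad - kernel) // stride + 1


def output_positions(size: int, kernel: int, stride: int, pad: int) -> int:
    """Output positions per channel; a convolution's cost scales with this."""
    side = conv_out_size(size, kernel, stride, pad)
    return side * side


if __name__ == "__main__":
    # Hypothetical first layer: 128x128 input, 5x5 kernel, padding 2
    s1 = output_positions(128, 5, stride=1, pad=2)  # 128 * 128 = 16384
    s2 = output_positions(128, 5, stride=2, pad=2)  # 64 * 64 = 4096
    print(s1, s2, s2 / s1)  # 16384 4096 0.25
```

Halving each side leaves a quarter of the positions, so the first layer itself does about 1/4 of the work, and every later layer also runs on smaller maps.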

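The author's alignment program, with my modifications:

The basic idea is still an affine transformation (as with warpAffine): rotate the image according to the eyes, scale it according to the distance between the eye midpoint and the mouth midpoint, then crop.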
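The rotation and scaling geometry can be restated in Python as a sketch. The function names are hypothetical; `rotate_point` mirrors the MATLAB `transform` helper (rotate about the source image center, then re-center on the rotated canvas), and `ec_mc_y = 48` is the value the test script uses.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def eye_angle_deg(left_eye: Point, right_eye: Point) -> float:
    """Tilt of the eye line in degrees: atan of its slope, as in align()."""
    (x1, y1), (x2, y2) = left_eye, right_eye
    return math.degrees(math.atan((y1 - y2) / (x1 - x2)))


def rotate_point(x: float, y: float, ang_rad: float,
                 src_hw: Tuple[int, int], dst_hw: Tuple[int, int]) -> Point:
    """Express the point relative to the source image center, rotate it by
    ang_rad, then re-center it on the (possibly larger) rotated image."""
    x0 = x - src_hw[1] / 2
    y0 = y - src_hw[0] / 2
    return (x0 * math.cos(ang_rad) - y0 * math.sin(ang_rad) + dst_hw[1] / 2,
            x0 * math.sin(ang_rad) + y0 * math.cos(ang_rad) + dst_hw[0] / 2)


def scale_factor(eye_y: float, mouth_y: float, ec_mc_y: float = 48) -> float:
    """Resize factor that makes the eye-center-to-mouth-center distance ec_mc_y."""
    return ec_mc_y / abs(mouth_y - eye_y)


if __name__ == "__main__":
    print(eye_angle_deg((30, 30), (70, 70)))  # about 45 degrees
    print(scale_factor(50, 146))              # 0.5
```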

```matlab
function [res, eyec2, cropped, resize_scale] = align(img, f5pt, ec_mc_y, ec_y, crop_size)
% Align a face using 5 landmarks. Rows of f5pt: left eye, right eye, nose,
% left mouth corner, right mouth corner; columns: x, y.
% ec_mc_y: target vertical distance (pixels) from eye center to mouth center
% ec_y:    target row of the eye center inside the crop
f5pt = double(f5pt);

% Angle (degrees) that makes the line through the two eyes horizontal
ang_tan = (f5pt(1,2)-f5pt(2,2))/(f5pt(1,1)-f5pt(2,1));
ang = atan(ang_tan) / pi * 180;
img_rot = imrotate(img, ang, 'bicubic');   % 'loose' by default: the canvas grows

% Eye center, mapped into the rotated image
x = (f5pt(1,1)+f5pt(2,1))/2;
y = (f5pt(1,2)+f5pt(2,2))/2;
ang = -ang/180*pi;
[xx, yy] = transform(x, y, ang, size(img), size(img_rot));
eyec = round([xx yy]);

% Mouth center, mapped into the rotated image
x = (f5pt(4,1)+f5pt(5,1))/2;
y = (f5pt(4,2)+f5pt(5,2))/2;
[xx, yy] = transform(x, y, ang, size(img), size(img_rot));
mouthc = round([xx yy]);

% Scale so that the eye-to-mouth vertical distance becomes ec_mc_y
resize_scale = ec_mc_y/abs(mouthc(2)-eyec(2));
img_resize = imresize(img_rot, resize_scale);
res = img_resize;

% Eye center after resizing (scaling is about the image center)
eyec2 = (eyec - [size(img_rot,2)/2 size(img_rot,1)/2]) * resize_scale + [size(img_resize,2)/2 size(img_resize,1)/2];
eyec2 = round(eyec2);

% Crop window: eye center horizontally centered, ec_y rows below the top
img_crop = zeros(crop_size, crop_size, size(img_rot,3));
crop_y = eyec2(2) - ec_y;
crop_y_end = crop_y + crop_size - 1;
crop_x = eyec2(1) - floor(crop_size/2);
crop_x_end = crop_x + crop_size - 1;

% Clamp x against the image width and y against the image height (the
% original clamped both against the height); parts of the window outside
% the image stay zero
box = [guard([crop_x crop_x_end], size(img_resize,2)) ...
       guard([crop_y crop_y_end], size(img_resize,1))];
img_crop(box(3)-crop_y+1:box(4)-crop_y+1, box(1)-crop_x+1:box(2)-crop_x+1, :) = ...
    img_resize(box(3):box(4), box(1):box(2), :);

cropped = img_crop/255;
end

function r = guard(x, N)
% Clamp indices to the valid range [1, N]
x(x<1) = 1;
x(x>N) = N;
r = x;
end

function [xx, yy] = transform(x, y, ang, s0, s1)
% Map point (x, y) of the original image into the rotated image
% ang: rotation angle in radians
% s0:  size of the original image
% s1:  size of the rotated (target) image
x0 = x - s0(2)/2;
y0 = y - s0(1)/2;
xx = x0*cos(ang) - y0*sin(ang) + s1(2)/2;
yy = x0*sin(ang) + y0*cos(ang) + s1(1)/2;
end
```
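The crop step can be checked in isolation with this Python sketch. It mirrors the crop window in `align` and clamps x against the image width and y against the image height separately; the helper names are hypothetical, and indices are 1-based and inclusive to match MATLAB.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]


def crop_window(eye_center: Tuple[int, int], crop_size: int = 128,
                ec_y: int = 40) -> Box:
    """(x_start, x_end, y_start, y_end), 1-based and inclusive: the eye center
    is centered horizontally and sits ec_y rows below the top of the crop."""
    ex, ey = eye_center
    x0 = ex - crop_size // 2
    y0 = ey - ec_y
    return x0, x0 + crop_size - 1, y0, y0 + crop_size - 1


def clamp(v: int, n: int) -> int:
    """Clamp an index to the valid 1-based range [1, n], like guard()."""
    return min(max(v, 1), n)


def clamped_box(box: Box, width: int, height: int) -> Box:
    """Clamp x against the image width and y against the image height."""
    x0, x1, y0, y1 = box
    return clamp(x0, width), clamp(x1, width), clamp(y0, height), clamp(y1, height)


if __name__ == "__main__":
    box = crop_window((100, 90))                     # (36, 163, 50, 177)
    print(clamped_box(box, width=150, height=200))   # (36, 150, 50, 177)
```

In this example only the right edge exceeds the image, so only `x_end` is clamped; the uncovered columns of the 128x128 crop stay zero.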

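Test program:

The data format of list.txt is as follows (one image per line; consecutive lines form a verification pair):

path/image label left_eye_x left_eye_y right_eye_x right_eye_y nose_x nose_y mouth_left_x mouth_left_y mouth_right_x mouth_right_y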
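For checking a list file before running the MATLAB script, a line in the format above can be parsed with this Python sketch. It assumes whitespace-separated fields in exactly the order listed (so paths must not contain spaces); the function name and the sample path are hypothetical. The MATLAB script indexes `imagelist.data` columns by position, so it is worth confirming that those columns line up with your file.

```python
from typing import List, Tuple


def parse_list_line(line: str) -> Tuple[str, str, List[Tuple[float, float]]]:
    """Split one list.txt line into (path, label, five (x, y) landmarks):
    left eye, right eye, nose, left mouth corner, right mouth corner."""
    parts = line.split()
    if len(parts) < 12:
        raise ValueError("expected path, label and 10 coordinates")
    coords = [float(v) for v in parts[2:12]]
    points = [(coords[i], coords[i + 1]) for i in range(0, 10, 2)]
    return parts[0], parts[1], points


if __name__ == "__main__":
    print(parse_list_line("faces/a.jpg 7 30 40 70 40 50 60 35 80 65 80"))
```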

```matlab
clear;
clc;

% Each line of list.txt: image path, label, then 10 landmark coordinates
imagelist = importdata('list.txt');
addpath('/home/caffe/matlab');
caffe.reset_all();

% Load the face model and create the network
caffe.set_device(0);
caffe.set_mode_gpu();

%------- 1
model = 'LightenedCNN_A_deploy.prototxt';
weights = 'LightenedCNN_A.caffemodel';
%------- 2
% model = 'LightenedCNN_B_deploy.prototxt';
% weights = 'LightenedCNN_B.caffemodel';
%------- 3
% model = 'LightenedCNN_C_deploy.prototxt';
% weights = 'LightenedCNN_C.caffemodel';

net = caffe.Net(model, weights, 'test');

crop_size = 128;  % network input size
ec_mc_y = 48;     % eye-center to mouth-center distance after alignment
ec_y = 40;        % row of the eye center inside the crop

features = [];
for k = 1:size(imagelist.textdata,1)
    % Load the image (img was otherwise undefined in the loop)
    img = imread(imagelist.textdata{k});
    f5pt = [imagelist.data(k,3), imagelist.data(k,5), imagelist.data(k,7), imagelist.data(k,9), imagelist.data(k,11); ...
            imagelist.data(k,2), imagelist.data(k,4), imagelist.data(k,6), imagelist.data(k,8), imagelist.data(k,10)];
    f5pt = f5pt';

    [img2, eyec, img_cropped, resize_scale] = align(img, f5pt, ec_mc_y, ec_y, crop_size);
    img_final = imresize(img_cropped, [crop_size crop_size], 'Method', 'bicubic');

    % The models take one gray channel: convert while the image is still RGB
    % (swapping to BGR first would also fail on single-channel images)
    if size(img_final,3) > 1
        img_final = rgb2gray(img_final);
    end
    % Caffe's MATLAB interface expects width x height
    img_final = permute(img_final, [2,1,3]);

    tic
    res = net.forward({img_final});
    toc
    features = [features; res{1,1}'];
end

% Rows (1,2), (3,4), ... form pairs. pdist2 'cosine' returns
% 1 - cosine similarity; other built-in metrics include 'euclidean' and 'cityblock'
score = [];
for i = 1:2:size(features,1)
    scoretmp = pdist2(features(i,:), features(i+1,:), 'cosine');
    score = [score; scoretmp];
end

figure, plot(score);
caffe.reset_all();
```
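The scoring loop computes a cosine distance for each consecutive pair of feature rows. A Python sketch of the same computation follows; the helper names are hypothetical, and unlike the MATLAB loop it skips a trailing unpaired row instead of failing on `i+1`. A lower distance means the two faces are more likely the same person; choosing a decision threshold is left to the reader's validation data.

```python
import math
from typing import List, Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity, the quantity pdist2(..., 'cosine') returns."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def pair_scores(features: List[Sequence[float]]) -> List[float]:
    """Score rows (0, 1), (2, 3), ... as verification pairs."""
    return [cosine_distance(features[i], features[i + 1])
            for i in range(0, len(features) - 1, 2)]


if __name__ == "__main__":
    feats = [[1, 0], [1, 0], [1, 0], [0, 1]]
    print(pair_scores(feats))  # [0.0, 1.0]
```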

Training prototxt files: http://download.csdn.net/detail/qq_14845119/9790137

reference:

https://github.com/AlfredXiangWu/face_verification_experiment
