Paper之EfficientDet: 《Scalable and Efficient Object Detection—可扩展和高效的目标检测》的翻译及其解读

导读:2019年11月21日,谷歌大脑团队发布了论文 EfficientDet: Scalable and Efficient Object Detection 。

Google Brain 团队的三位 Auto ML 大佬 Mingxing Tan Ruoming Pang Quoc V. Le 最近在 Arxiv 上发表了该文章,有网友猜测是投到 CVPR 2020。通过改进 FPN 中多尺度特征融合的结构和借鉴 EfficientNet 模型缩放方法,提出了一种模型可缩放且高效的目标检测算法 EfficientDet。

这篇工作可以看做是中了 ICML 2019 Oral 的 EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks 扩展,从分类任务扩展到检测任务(Object Detection)。

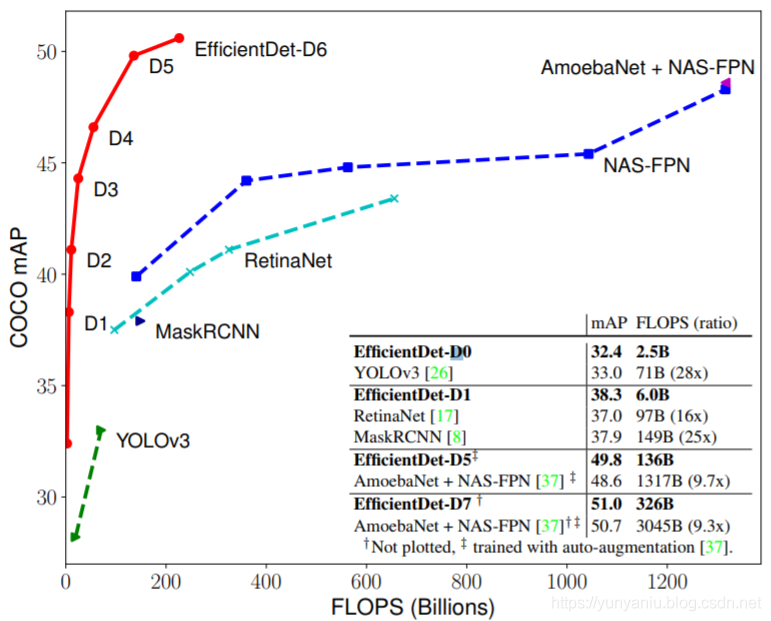

从图表1中,就能看出,神经网络的FLOPS速度和mAP精度之间根据场景需求存在某种平衡,从 EfficientDet D1 ~ EfficientDet D7的曲线可知,FLOPS逐渐变慢,同时mAP逐渐提高。

目录

Scalable and Efficient Object Detection的翻译及其解读

Scalable and Efficient Object Detection的翻译及其解读

论文地址:https://arxiv.org/pdf/1911.09070.pdf

论文作者:Mingxing Tan Ruoming Pang Quoc V. Le Google Research, Brain Team {tanmingxing, rpang, qvl}@google.com

Abstract

| Model efficiency has become increasingly important in computer vision. In this paper, we systematically study various neural network architecture design choices for object detection and propose several key optimizations to improve efficiency. First, we propose a weighted bi-directional feature pyramid network (BiFPN), which allows easy and fast multi-scale feature fusion; Second, we propose a compound scaling method that uniformly scales the resolution, depth, and width for all backbone, feature network, and box/class prediction networks at the same time. Based on these optimizations, we have developed a new family of object detectors, called EfficientDet, which consistently achieve an order-of-magnitude better efficiency than prior art across a wide spectrum of resource constraints. In particular, without bells and whistles, our EfficientDet-D7 achieves stateof-the-art 51.0 mAP on COCO dataset with 52M parameters and 326B FLOPS1 , being 4x smaller and using 9.3x fewer FLOPS yet still more accurate (+0.3% mAP) than the best previous detector. | 模型效率在计算机视觉中越来越重要。在本文中,我们系统地研究了用于目标检测的各种神经网络体系结构的设计选择,并提出了提高效率的几个关键优化方案。首先,我们提出了一种加权双向特征金字塔网络(BiFPN),它可以方便、快速地融合多尺度特征;其次,我们提出了一种混合缩放方法,可以同时对所有主干、特征网络和box/class预测网络的分辨率、深度和宽度进行均匀缩放。基于这些优化,我们开发了一个新的对象检测器系列,称为EfficientDet,在广泛的资源约束范围内,它始终能够达到比现有技术更好的数量级效率。特别是,在没有任何附加功能的情况下,我们的EfficientDet-D7在COCO数据集上实现了最先进的51.0 mAP,参数为52M, FLOPS1为326B,比之前最好的检测器小4倍,少用9.3倍的FLOPS,但仍然比之前的检测器更精确(+0.3% mAP)。 |

1. Introduction

|

Figure 1: Model FLOPS vs COCO accuracy – All numbers are for single-model single-scale. Our EfficientDet achieves much better accuracy with fewer computations than other detectors. In particular, EfficientDet-D7 achieves new state-of-the-art 51.0% COCO mAP with 4x fewer parameters and 9.3x fewer FLOPS. Details are in Table 2. | |

| Tremendous progresses have been made in recent years towards more accurate object detection; meanwhile, stateof-the-art object detectors also become increasingly more expensive. For example, the latest AmoebaNet-based NASFPN detector [37] requires 167M parameters and 3045B FLOPS (30x more than RetinaNet [17]) to achieve state-ofthe-art accuracy. The large model sizes and expensive computation costs deter their deployment in many real-world applications such as robotics and self-driving cars where model size and latency are highly constrained. Given these real-world resource constraints, model efficiency becomes increasingly important for object detection. | 近年来,在提高目标检测精度方面取得了巨大的进展;与此同时,最先进的物体探测器也变得越来越昂贵。例如,最新的基于AmoebaNet的NASFPN探测器[37]需要1.67亿个参数和3045B FLOPS(比RetinaNet[17]多30倍)才能达到最新的精度。大型模型尺寸和昂贵的计算成本阻碍了它们在机器人和自动驾驶汽车等许多现实世界应用程序中的部署,这些应用程序的模型尺寸和延迟都受到高度限制。考虑到这些现实的资源约束,模型效率对于对象检测变得越来越重要。 |

| There have been many previous works aiming to develop more efficient detector architectures, such as onestage [20, 25, 26, 17] and anchor-free detectors [14, 36, 32],or compress existing models [21, 22]. Although these methods tend to achieve better efficiency, they usually sacrifice accuracy. Moreover, most previous works only focus on a specific or a small range of resource requirements, but the variety of real-world applications, from mobile devices to datacenters, often demand different resource constraints. | 之前有许多致力于开发更高效的探测器架构的工作,如onestage[20,25,26,17]和无锚探测器[14,36,32],或压缩现有模型[21,22]。虽然这些方法趋向于获得更好的效率,但它们通常会牺牲准确性。此外,以前的大多数工作只关注特定的或小范围的资源需求,但是从移动设备到数据中心的各种实际应用程序常常需要不同的资源约束。 |

A natural question is: Is it possible to build a scalable detection architecture with both higher accuracy and better efficiency across a wide spectrum of resource constraints (e.g., from 3B to 300B FLOPS)? This paper aims to tackle this problem by systematically studying various design choices of detector architectures. Based on the onestage detector paradigm, we examine the design choices for backbone, feature fusion, and class/box network, and identify two main challenges:

| 一个很自然的问题是:是否有可能构建一个可伸缩的检测架构,该架构具有更高的准确性和更大的效率,可以跨越各种资源约束(例如,从3B到300B FLOPS)?本文旨在通过系统地研究探测器结构的各种设计选择来解决这一问题。基于onestage检测器范例,我们检查了主干、特征融合和类/盒网络的设计选择,并确定了两个主要挑战:

|

| Finally, we also observe that the recently introduced EfficientNets [31] achieve better efficiency than previous commonly used backbones (e.g., ResNets [9], ResNeXt [33], and AmoebaNet [24]). Combining EfficientNet backbones with our propose BiFPN and compound scaling, we have developed a new family of object detectors, named EfficientDet, which consistently achieve better accuracy with an order-of-magnitude fewer parameters and FLOPS than previous object detectors. Figure 1 and Figure 4 show the performance comparison on COCO dataset [18]. Under similar accuracy constraint, our EfficientDet uses 28x fewer FLOPS than YOLOv3 [26], 30x fewer FLOPS than RetinaNet [17], and 19x fewer FLOPS than the recent NASFPN [5]. In particular, with single-model and single testtime scale, our EfficientDet-D7 achieves state-of-the-art 51.0 mAP with 52M parameters and 326B FLOPS, being 4x smaller and using 9.3x fewer FLOPS yet still more accurate (+0.3% mAP) than the best previous models [37]. Our EfficientDet models are also up to 3.2x faster on GPU and 8.1x faster on CPU than previous detectors, as shown in Figure 4 and Table 2. | 最后,我们还观察到,最近推出的EfficientNets [31]比之前常用的骨干(例如,ResNets [9], ResNeXt [33], AmoebaNet[24])的效率更高。我们将effecentnet主干与我们提出的BiFPN和复合标度相结合,开发了一个新的对象检测器家族,命名为efficient entdet,与以前的对象检测器相比,它始终能够在较少数量级的参数和错误的情况下获得更好的准确性。图1和图4显示了对COCO数据集[18]的性能比较。在类似的精度约束下,我们的effecentdet使用的FLOPS比YOLOv3[26]少28倍,比RetinaNet[17]少30倍,比最近的NASFPN[5]少19倍。特别地,在单模型和单测试时间尺度的情况下,我们的效率测点- d7在52M参数和326B FLOPS的情况下,实现了最先进的51.0 mAP,比以前最好的模型[37]小4倍,减少了9.3倍的FLOPS,但仍然比以前的模型更精确(+0.3% mAP)。我们的EfficientDet模型在GPU上比以前的检测器快3.2倍,在CPU上比以前的检测器快8.1倍,如图4和表2所示。 |

| Our contributions can be summarized as: • We proposed BiFPN, a weighted bidirectional feature network for easy and fast multi-scale feature fusion. • We proposed a new compound scaling method, which jointly scales up backbone, feature network, box/class network, and resolution, in a principled way. • Based on BiFPN and compound scaling, we developed EfficientDet, a new family of detectors with significantly better accuracy and efficiency across a wide spectrum of resource constraints. | 我们的贡献可以总结为: •我们提出了一个加权的双向特征网络BiFPN,用于方便快速的多尺度特征融合。•我们提出了一种新的复合标度方法,可以原则性地对主干、feature network、box/class network、resolution进行联合标度。•基于BiFPN和复合标度,我们开发了EfficientDet,这是一种新的探测器家族,在广泛的资源约束范围内具有更高的准确性和效率。 |

2. Related Work

| One-Stage Detectors: Existing object detectors are mostly categorized by whether they have a region-ofinterest proposal step (two-stage [6, 27, 3, 8]) or not (onestage [28, 20, 25, 17]). While two-stage detectors tend to be more flexible and more accurate, one-stage detectors are often considered to be simpler and more efficient by leveraging predefined anchors [11]. Recently, one-stage detectors have attracted substantial attention due to their efficiency and simplicity [14, 34, 36]. In this paper, we mainly follow the one-stage detector design, and we show it is possible to achieve both better efficiency and higher accuracy with optimized network architectures. | |

| Multi-Scale Feature Representations: One of the main difficulties in object detection is to effectively represent and process multi-scale features. Earlier detectors often directly perform predictions based on the pyramidal feature hierarchy extracted from backbone networks [2, 20, 28]. As one of the pioneering works, feature pyramid network (FPN) [16] proposes a top-down pathway to combine multi-scale features. Following this idea, PANet [19] adds an extra bottom-up path aggregation network on top of FPN; STDL [35] proposes a scale-transfer module to exploit cross-scale features; M2det [34] proposes a U-shape module to fuse multi-scale features, and G-FRNet [1] introduces gate units for controlling information flow across features. More recently, NAS-FPN [5] leverages neural architecture search to automatically design feature network topology. Although it achieves better performance, NAS-FPN requires thousands of GPU hours during search, and the resulting feature network is irregular and thus difficult to interpret. In this paper, we aim to optimize multi-scale feature fusion with a more intuitive and principled way. | |

| Model Scaling: In order to obtain better accuracy, it is common to scale up a baseline detector by employing bigger backbone networks (e.g., from mobile-size models [30, 10] and ResNet [9], to ResNeXt [33] and AmoebaNet [24]), or increasing input image size (e.g., from 512x512 [17] to 1536x1536 [37]). Some recent works [5, 37] show that increasing the channel size and repeating feature networks can also lead to higher accuracy. These scaling methods mostly focus on single or limited scaling dimensions. Recently, [31] demonstrates remarkable model efficiency for image classification by jointly scaling up network width, depth, and resolution. Our proposed compound scaling method for object detection is mostly inspired by [31]. |

3、BiFPN

| In this section, we first formulate the multi-scale feature fusion problem, and then introduce the two main ideas for our proposed BiFPN: efficient bidirectional cross-scale connections and weighted feature fusion. | |

|

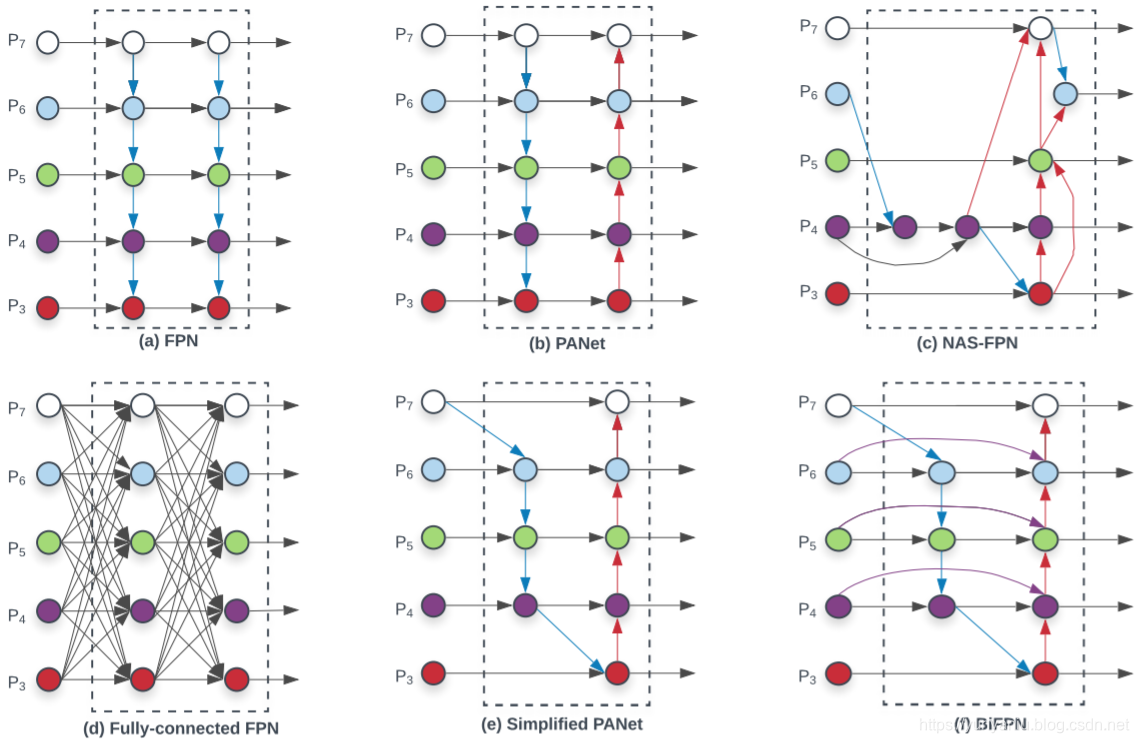

Figure 2: Feature network design – (a) FPN [16] introduces a top-down pathway to fuse multi-scale features from level 3 to 7 (P3 - P7); (b) PANet [19] adds an additional bottom-up pathway on top of FPN; (c) NAS-FPN [5] use neural architecture search to find an irregular feature network topology; (d)-(f) are three alternatives studied in this paper. (d) adds expensive connections from all input feature to output features; (e) simplifies PANet by removing nodes if they only have one input edge; (f) is our BiFPN with better accuracy and efficiency trade-offs. | |

3.1. Problem Formulation

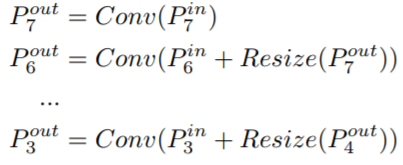

| Multi-scale feature fusion aims to aggregate features at different resolutions. Formally, given a list of multi-scale features P~ in = (P in l1 , Pin l2 , ...), where P in li represents the feature at level li , our goal is to find a transformation f that can effectively aggregate different features and output a list of new features: P~ out = f(P~ in). As a concrete example, Figure 2(a) shows the conventional top-down FPN [16]. It takes level 3-7 input features P~ in = (P in 3 , ...Pin 7 ), where P in i represents a feature level with resolution of 1/2 i of the input images. For instance, if input resolution is 640x640, then P in 3 represents feature level 3 (640/2 3 = 80) with resolution 80x80, while P in 7 represents feature level 7 with resolution 5x5. The conventional FPN aggregates multi-scale features in a top-down manner:

|

3.2. Cross-Scale Connections

| Conventional top-down FPN is inherently limited by the one-way information flow. To address this issue, PANet [19] adds an extra bottom-up path aggregation network, as shown in Figure 2(b). Cross-scale connections are further studied in [13, 12, 34]. Recently, NAS-FPN [5] employs neural architecture search to search for better cross-scale feature network topology, but it requires thousands of GPU hours during search and the found network is irregular and difficult to interpret or modify, as shown in Figure 2(c). | |

| By studying the performance and efficiency of these three networks (Table 4), we observe that PANet achieves better accuracy than FPN and NAS-FPN, but with the cost of more parameters and computations. To improve model efficiency, this paper proposes several optimizations for cross-scale connections: First, we remove those nodes that only have one input edge. Our intuition is simple: if a node has only one input edge with no feature fusion, then it will have less contribution to feature network that aims at fusing different features. This leads to a simplified PANet as shown in Figure 2(e); Second, we add an extra edge from the original input to output node if they are at the same level, in order to fuse more features without adding much cost, as shown in Figure 2(f); Third, unlike PANet [19] that only has one top-down and one bottom-up path, we treat each bidirectional (top-down & bottom-up) path as one feature network layer, and repeat the same layer multiple times to enable more high-level feature fusion. Section 4.2 will discuss how to determine the number of layers for different resource constraints using a compound scaling method. With these optimizations, we name the new feature network as bidirectional feature pyramid network (BiFPN), as shown in Figure 2(f) and 3. |

3.3. Weighted Feature Fusion

| When fusing multiple input features with different resolutions, a common way is to first resize them to the same resolution and then sum them up. Pyramid attention network [15] introduces global self-attention upsampling to recover pixel localization, which is further studied in [5]. | |

| Previous feature fusion methods treat all input features equally without distinction. However, we observe that since different input features are at different resolutions, they usually contribute to the output feature unequally. To address this issue, we propose to add an additional weight for each input during feature fusion, and let the network to learn the importance of each input feature. Based on this idea, we consider three weighted fusion approaches: | |

| Unbounded fusion: O = P i wi · Ii , where wi is a learnable weight that can be a scalar (per-feature), a vector (per-channel), or a multi-dimensional tensor (per-pixel). We find a scale can achieve comparable accuracy to other approaches with minimal computational costs. However, since the scalar weight is unbounded, it could potentially cause training instability. Therefore, we resort to weight normalization to bound the value range of each weight. | |

| Softmax-based fusion: O = P i e wi P j e wj · Ii . An intuitive idea is to apply softmax to each weight, such that all weights are normalized to be a probability with value range from 0 to 1, representing the importance of each input. However, as shown in our ablation study in section 6.3, the extra softmax leads to significant slowdown on GPU hardware. To minimize the extra latency cost, we further propose a fast fusion approach. |

678

678

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?