
1. Introduction

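The standard YOLOv5 model has difficulty detecting small objects. This article therefore adds a small-object detection head. Small-object features are learned from a shallow, high-resolution feature map and then concatenated with deeper feature maps. This lets the deeper layers receive small-object information as well, so the network pays more attention to small objects and detection improves. The only drawback is that the extra layers add computation, which slows down inference.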

2. How to compute layer output sizes

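The output width (or height) of a convolution layer is

$$H_2 = \left\lfloor \frac{H_1 - K + 2P}{S} \right\rfloor + 1$$

where $H_1$ is the input width, $K$ is the kernel size, $P$ is the zero padding, and $S$ is the stride.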

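- Conv: convolution. With stride 2 it usually halves the width and height, but the result depends on its arguments.

- Upsample: upsampling, which doubles the width and height.

- Concat: concatenation. When the widths and heights match, the channel counts are added.

- With stride S = 2, the width and height are generally halved, because the formula divides by 2.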
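The rules above can be checked with a minimal Python sketch of the output-size formula. The sketch assumes square inputs and dilation 1. The helper names `conv_out` and `upsample_out` are hypothetical. Integer floor division mirrors how PyTorch-style convolutions round when the division is not exact. The 3×3 stride-2 case assumes YOLOv5's default padding of K // 2 = 1.

```python
def conv_out(h_in: int, k: int, s: int, p: int) -> int:
    """Output width/height of a convolution (dilation 1), rounded down."""
    return (h_in - k + 2 * p) // s + 1


def upsample_out(h_in: int, scale: int = 2) -> int:
    """Nearest-neighbour upsampling multiplies width/height by the scale factor."""
    return h_in * scale


# Layer 0 of YOLOv5: Conv(k=6, s=2, p=2) on a 640x640 image
print(conv_out(640, k=6, s=2, p=2))   # 320
# A 3x3 stride-2 Conv with padding k // 2 = 1 halves the size
print(conv_out(320, k=3, s=2, p=1))   # 160
# A 1x1 stride-1 Conv keeps the size
print(conv_out(20, k=1, s=1, p=0))    # 20
# nn.Upsample(scale_factor=2) doubles it
print(upsample_out(20))               # 40
```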

3. YOLOv5 small-object improvement 1

3.1 Configuration file

Original yolov5l.yaml:

```yaml
# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

# Parameters
nc: 80  # number of classes
depth_multiple: 1.0  # model depth multiple
width_multiple: 1.0  # layer channel multiple
anchors:
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32

# YOLOv5 v6.0 backbone
backbone:
  # [from, number, module, args]
  # args: channel, kernel_size, stride, padding, bias, etc.
  [[-1, 1, Conv, [64, 6, 2, 2]],  # 0-P1/2
   [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
   [-1, 3, C3, [128]],
   [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
   [-1, 6, C3, [256]],
   [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
   [-1, 9, C3, [512]],
   [-1, 1, Conv, [1024, 3, 2]],  # 7-P5/32
   [-1, 3, C3, [1024]],
   [-1, 1, SPPF, [1024, 5]],  # 9
  ]

# YOLOv5 v6.0 head
head:
  [[-1, 1, Conv, [512, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 6], 1, Concat, [1]],  # cat backbone P4
   [-1, 3, C3, [512, False]],  # 13

   [-1, 1, Conv, [256, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 4], 1, Concat, [1]],  # cat backbone P3
   [-1, 3, C3, [256, False]],  # 17 (P3/8-small)

   [-1, 1, Conv, [256, 3, 2]],
   [[-1, 14], 1, Concat, [1]],  # cat head P4
   [-1, 3, C3, [512, False]],  # 20 (P4/16-medium)

   [-1, 1, Conv, [512, 3, 2]],
   [[-1, 10], 1, Concat, [1]],  # cat head P5
   [-1, 3, C3, [1024, False]],  # 23 (P5/32-large)

   [[17, 20, 23], 1, Detect, [nc, anchors]],  # Detect(P3, P4, P5)
  ]
```

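This adds one more small-object detection layer. The default model has three detection heads; the modified model has four.

Create yolov5l-4P.yaml with the following content: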

```yaml
# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

# Parameters
nc: 80  # number of classes
depth_multiple: 1.0  # model depth multiple
width_multiple: 1.0  # layer channel multiple
anchors:
  - [ 10,13, 16,30, 33,23 ]  # P2/4
  - [ 30,61, 62,45, 59,119 ]  # P3/8
  - [ 116,90, 156,198, 373,326 ]  # P4/16
  - [ 436,615, 739,380, 925,792 ]  # P5/32

# YOLOv5 v6.0 backbone
backbone:
  # [from, number, module, args]
  # args: channel, kernel_size, stride, padding, bias, etc.
  [[-1, 1, Conv, [64, 6, 2, 2]],  # 0-P1/2
   [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
   [-1, 3, C3, [128]],
   [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
   [-1, 6, C3, [256]],
   [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
   [-1, 9, C3, [512]],
   [-1, 1, Conv, [1024, 3, 2]],  # 7-P5/32
   [-1, 3, C3, [1024]],
   [-1, 1, SPPF, [1024, 5]],  # 9
  ]

# YOLOv5 v6.0 head
head:
  [[-1, 1, Conv, [512, 1, 1]],  # 10 [512,20,20]
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],  # 11 [512,40,40]
   [[-1, 6], 1, Concat, [1]],  # 12 cat backbone P4 [1024,40,40]
   [-1, 3, C3, [512, False]],  # 13 [512,40,40]

   [-1, 1, Conv, [512, 1, 1]],  # 14 [512,40,40]
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],  # 15 [512,80,80]
   [[-1, 4], 1, Concat, [1]],  # 16 cat backbone P3 [768,80,80]
   [-1, 3, C3, [512, False]],  # 17 [512,80,80]

   [-1, 1, Conv, [256, 1, 1]],  # 18 [256,80,80]
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],  # 19 [256,160,160]
   [[-1, 2], 1, Concat, [1]],  # 20 cat backbone P2 [384,160,160]
   [-1, 3, C3, [256, False]],  # 21 (P2/4-xsmall) [256,160,160]

   [-1, 1, Conv, [256, 3, 2]],  # 22 [256,80,80]
   [[-1, 18], 1, Concat, [1]],  # 23 cat head P3 [512,80,80]
   [-1, 3, C3, [256, False]],  # 24 (P3/8-small) [256,80,80]

   [-1, 1, Conv, [256, 3, 2]],  # 25 [256,40,40]
   [[-1, 14], 1, Concat, [1]],  # 26 cat head P4 [768,40,40]
   [-1, 3, C3, [512, False]],  # 27 (P4/16-medium) [512,40,40]

   [-1, 1, Conv, [512, 3, 2]],  # 28 [512,20,20]
   [[-1, 10], 1, Concat, [1]],  # 29 cat head P5 [1024,20,20]
   [-1, 3, C3, [1024, False]],  # 30 (P5/32-large) [1024,20,20]

   [[21, 24, 27, 30], 1, Detect, [nc, anchors]],  # 31 Detect(P2, P3, P4, P5)
  ]
```
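You can double-check the shape comments in yolov5l-4P.yaml by tracing the head in a few lines of plain Python. This is a simplified sketch, not YOLOv5's own `parse_model`. It makes the following assumptions:

- width_multiple = 1 and a 640×640 input.
- The backbone shapes match those listed in section 3.3.
- Each head row uses a hand-written compact form `(from, module, args)`, where Conv args are `(out_channels, stride)`.

```python
# Simplified shape tracer for the yolov5l-4P.yaml head (assumes width_multiple = 1,
# 640x640 input). Shapes are (channels, height, width).
shapes = {2: (128, 160, 160), 4: (256, 80, 80), 6: (512, 40, 40), 9: (1024, 20, 20)}

# (from, module, args) for layers 10..30, hand-copied from the head section
head = [
    (-1, "Conv", (512, 1)), (-1, "Upsample", ()), ([-1, 6], "Concat", ()), (-1, "C3", (512,)),
    (-1, "Conv", (512, 1)), (-1, "Upsample", ()), ([-1, 4], "Concat", ()), (-1, "C3", (512,)),
    (-1, "Conv", (256, 1)), (-1, "Upsample", ()), ([-1, 2], "Concat", ()), (-1, "C3", (256,)),
    (-1, "Conv", (256, 2)), ([-1, 18], "Concat", ()), (-1, "C3", (256,)),
    (-1, "Conv", (256, 2)), ([-1, 14], "Concat", ()), (-1, "C3", (512,)),
    (-1, "Conv", (512, 2)), ([-1, 10], "Concat", ()), (-1, "C3", (1024,)),
]

for i, (frm, module, args) in enumerate(head, start=10):
    # resolve -1 to the previous layer index
    srcs = [i - 1 if f == -1 else f for f in (frm if isinstance(frm, list) else [frm])]
    c, h, w = shapes[srcs[0]]
    if module == "Conv":            # 1x1 s1 keeps the size, 3x3 s2 (padding 1) halves it
        c_out, s = args
        shapes[i] = (c_out, h // s, w // s)
    elif module == "Upsample":      # nearest, scale factor 2
        shapes[i] = (c, h * 2, w * 2)
    elif module == "Concat":        # channels add; spatial sizes must match
        ins = [shapes[j] for j in srcs]
        assert all(x[1:] == ins[0][1:] for x in ins), f"layer {i}: size mismatch {ins}"
        shapes[i] = (sum(x[0] for x in ins), h, w)
    elif module == "C3":            # keeps the spatial size, sets the output channels
        shapes[i] = (args[0], h, w)

for i in (16, 20, 21, 24, 27, 30):
    print(i, shapes[i])
```

If a `from` index points at a layer of the wrong resolution, the Concat assertion fails. That is the same kind of mistake that surfaces at model-build time in YOLOv5.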

3.2 Architecture diagrams

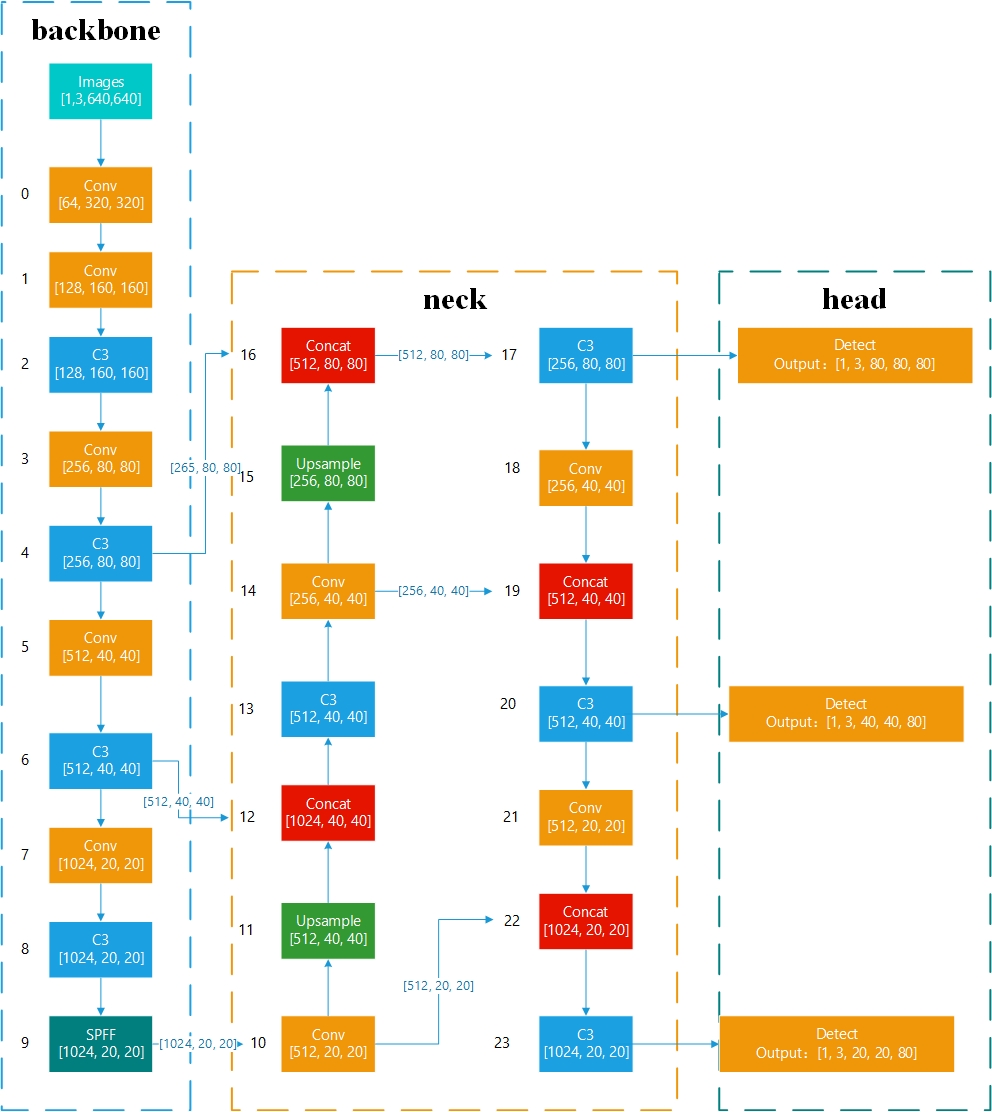

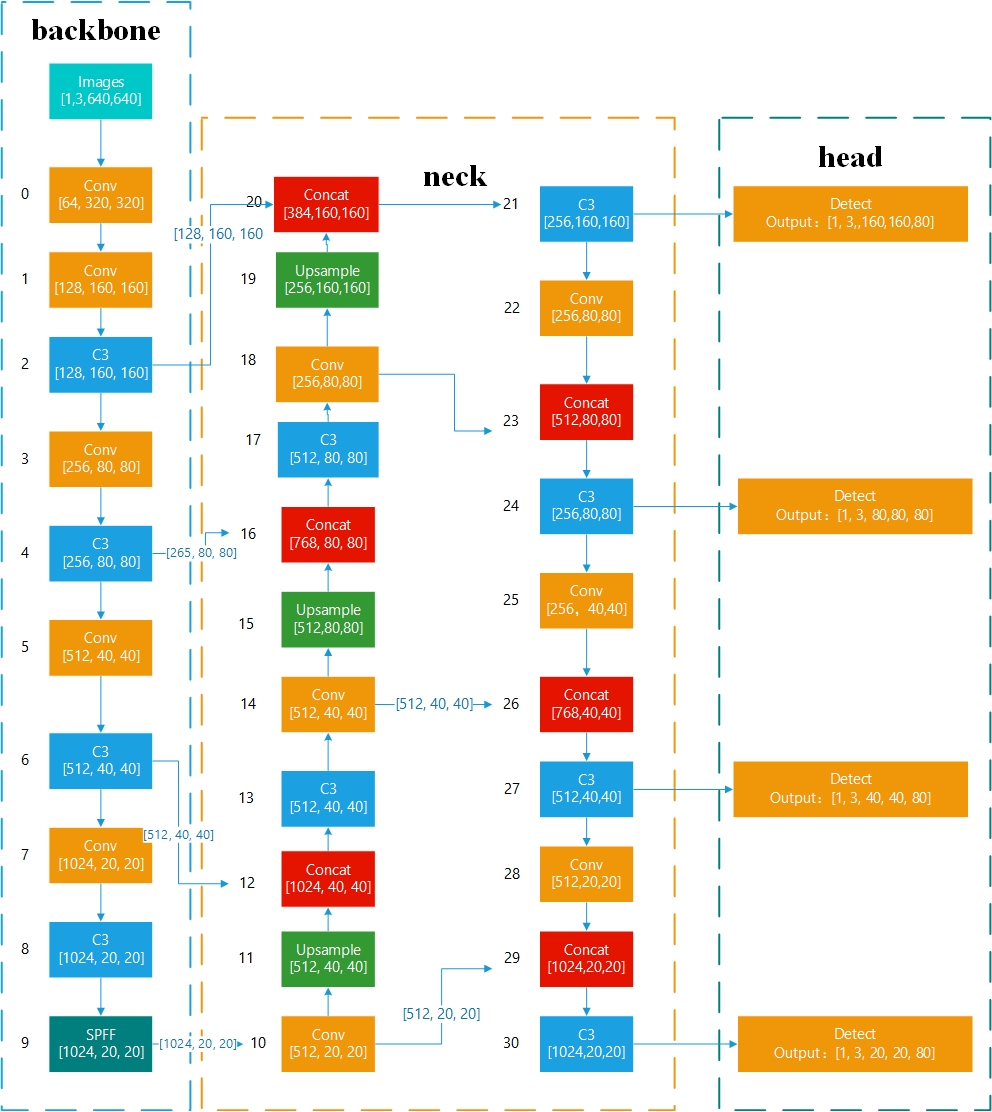

改进前

改进后

3.3 Parameter comparison

3.3.1 yolov5l.yaml layer breakdown

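1. Note: in yolov5l.yaml, depth_multiple and width_multiple are both 1.

2. Output sizes are computed with the formula from section 2: $H_2 = \lfloor (H_1 - K + 2P)/S \rfloor + 1$.

3. args: channel, kernel_size, stride, padding, bias, etc.
   - In the tables, the printed arguments are prefixed with the input channel count. For example, Conv [3, 64, 6, 2, 2] means [c_in, c_out, kernel_size, stride, padding].
   - For C3, the third value is the number of bottleneck repeats n, and False disables the shortcut.

4. Example for layer 0: $(640 - 6 + 2 \times 2)/2 + 1 = 320$.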
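The repeat count n (the third C3 argument) and the channel counts in the tables below follow from how YOLOv5 scales each YAML row. The sketch reproduces the rule used in YOLOv5's `parse_model`:

- n becomes `max(round(n * depth_multiple), 1)` when n > 1.
- Channels are multiplied by width_multiple and rounded up to a multiple of 8.

The function names here are our own. The yolov5s values (0.33 / 0.50) are included only for comparison.

```python
import math


def scale_depth(n: int, depth_multiple: float) -> int:
    """Number of repeats actually built for a YAML row (rule used in YOLOv5's parse_model)."""
    return max(round(n * depth_multiple), 1) if n > 1 else n


def scale_width(c: int, width_multiple: float, divisor: int = 8) -> int:
    """Output channels after width scaling, rounded up to a multiple of `divisor`."""
    return math.ceil(c * width_multiple / divisor) * divisor


# C3 repeat counts in the backbone rows of yolov5l.yaml: 3, 6, 9, 3
for n in (3, 6, 9, 3):
    print(n, scale_depth(n, 1.0), scale_depth(n, 0.33))
# depth_multiple 1.0 -> 3, 6, 9, 3    depth_multiple 0.33 -> 1, 2, 3, 1

print(scale_width(1024, 1.0), scale_width(1024, 0.50))  # 1024 512
```

With depth_multiple = 1.0, every C3 keeps its YAML repeat count. The head C3 rows (n = 3 in the YAML) therefore also build 3 bottlenecks.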

| Layer | from | module | arguments | input | output |
|---|---|---|---|---|---|
| 0 | -1 | Conv | [3, 64, 6, 2, 2] | [3, 640, 640] | [64, 320, 320] |
| 1 | -1 | Conv | [64, 128, 3, 2] | [64, 320, 320] | [128, 160, 160] |
| 2 | -1 | C3 | [128, 128, 3] | [128, 160, 160] | [128, 160, 160] |
| 3 | -1 | Conv | [128, 256, 3, 2] | [128, 160, 160] | [256, 80, 80] |
| 4 | -1 | C3 | [256, 256, 6] | [256, 80, 80] | [256, 80, 80] |
| 5 | -1 | Conv | [256, 512, 3, 2] | [256, 80, 80] | [512, 40, 40] |
| 6 | -1 | C3 | [512, 512, 9] | [512, 40, 40] | [512, 40, 40] |
| 7 | -1 | Conv | [512, 1024, 3, 2] | [512, 40, 40] | [1024, 20, 20] |
| 8 | -1 | C3 | [1024, 1024, 3] | [1024, 20, 20] | [1024, 20, 20] |
| 9 | -1 | SPPF | [1024, 1024, 5] | [1024, 20, 20] | [1024, 20, 20] |
| 10 | -1 | Conv | [1024, 512, 1, 1] | [1024, 20, 20] | [512, 20, 20] |
| 11 | -1 | Upsample | [None, 2, 'nearest'] | [512, 20, 20] | [512, 40, 40] |
| 12 | [-1, 6] | Concat | [512+512] | [1, 512, 40, 40], [1, 512, 40, 40] | [1024, 40, 40] |
| 13 | -1 | C3 | [1024, 512, 3, False] | [1024, 40, 40] | [512, 40, 40] |
| 14 | -1 | Conv | [512, 256, 1, 1] | [512, 40, 40] | [256, 40, 40] |
| 15 | -1 | Upsample | [None, 2, 'nearest'] | [256, 40, 40] | [256, 80, 80] |
| 16 | [-1, 4] | Concat | [256+256] | [1, 256, 80, 80], [1, 256, 80, 80] | [512, 80, 80] |
| 17 | -1 | C3 | [512, 256, 3, False] | [512, 80, 80] | [256, 80, 80] |
| 18 | -1 | Conv | [256, 256, 3, 2] | [256, 80, 80] | [256, 40, 40] |
| 19 | [-1, 14] | Concat | [256+256] | [1, 256, 40, 40], [1, 256, 40, 40] | [512, 40, 40] |
| 20 | -1 | C3 | [512, 512, 3, False] | [512, 40, 40] | [512, 40, 40] |
| 21 | -1 | Conv | [512, 512, 3, 2] | [512, 40, 40] | [512, 20, 20] |
| 22 | [-1, 10] | Concat | [512+512] | [1, 512, 20, 20], [1, 512, 20, 20] | [1024, 20, 20] |
| 23 | -1 | C3 | [1024, 1024, 3, False] | [1024, 20, 20] | [1024, 20, 20] |
| 24 | [17, 20, 23] | Detect | [80, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [256, 512, 1024]] | [1, 256, 80, 80], [1, 512, 40, 40], [1, 1024, 20, 20] | [1, 3, 80, 80, 85], [1, 3, 40, 40, 85], [1, 3, 20, 20, 85] |
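Each Detect output has shape [batch, na, H/stride, W/stride, nc + 5]. Here na is the number of anchors per grid cell. For every anchor, the head predicts 4 box values, 1 objectness score and nc class scores. The short sketch below computes these training-mode shapes for the three-head yolov5l with nc = 80 and a 640×640 input. The helper name `detect_shapes` is hypothetical.

```python
def detect_shapes(img_size: int, strides, nc: int = 80, na: int = 3, batch: int = 1):
    """Training-mode Detect output shapes: [batch, na, H/stride, W/stride, nc + 5]."""
    no = nc + 5  # x, y, w, h, objectness + one score per class
    return [[batch, na, img_size // s, img_size // s, no] for s in strides]


print(detect_shapes(640, strides=(8, 16, 32)))
# [[1, 3, 80, 80, 85], [1, 3, 40, 40, 85], [1, 3, 20, 20, 85]]
```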

3.3.2 yolov5l-4P.yaml layer breakdown

Note: yolov5l-4P.yaml also uses depth_multiple = width_multiple = 1.

| Layer | from | module | arguments | input | output |
|---|---|---|---|---|---|
| 0 | -1 | Conv | [3, 64, 6, 2, 2] | [3, 640, 640] | [64, 320, 320] |
| 1 | -1 | Conv | [64, 128, 3, 2] | [64, 320, 320] | [128, 160, 160] |
| 2 | -1 | C3 | [128, 128, 3] | [128, 160, 160] | [128, 160, 160] |
| 3 | -1 | Conv | [128, 256, 3, 2] | [128, 160, 160] | [256, 80, 80] |
| 4 | -1 | C3 | [256, 256, 6] | [256, 80, 80] | [256, 80, 80] |
| 5 | -1 | Conv | [256, 512, 3, 2] | [256, 80, 80] | [512, 40, 40] |
| 6 | -1 | C3 | [512, 512, 9] | [512, 40, 40] | [512, 40, 40] |
| 7 | -1 | Conv | [512, 1024, 3, 2] | [512, 40, 40] | [1024, 20, 20] |
| 8 | -1 | C3 | [1024, 1024, 3] | [1024, 20, 20] | [1024, 20, 20] |
| 9 | -1 | SPPF | [1024, 1024, 5] | [1024, 20, 20] | [1024, 20, 20] |
| 10 | -1 | Conv | [1024, 512, 1, 1] | [1024, 20, 20] | [512, 20, 20] |
| 11 | -1 | Upsample | [None, 2, 'nearest'] | [512, 20, 20] | [512, 40, 40] |
| 12 | [-1, 6] | Concat | [512+512] | [1, 512, 40, 40], [1, 512, 40, 40] | [1024, 40, 40] |
| 13 | -1 | C3 | [1024, 512, 3, False] | [1024, 40, 40] | [512, 40, 40] |
| 14 | -1 | Conv | [512, 512, 1, 1] | [512, 40, 40] | [512, 40, 40] |
| 15 | -1 | Upsample | [None, 2, 'nearest'] | [512, 40, 40] | [512, 80, 80] |
| 16 | [-1, 4] | Concat | [512+256] | [1, 512, 80, 80], [1, 256, 80, 80] | [768, 80, 80] |
| 17 | -1 | C3 | [768, 512, 3, False] | [768, 80, 80] | [512, 80, 80] |
| 18 | -1 | Conv | [512, 256, 1, 1] | [512, 80, 80] | [256, 80, 80] |
| 19 | -1 | Upsample | [None, 2, 'nearest'] | [256, 80, 80] | [256, 160, 160] |
| 20 | [-1, 2] | Concat | [256+128] | [1, 256, 160, 160], [1, 128, 160, 160] | [384, 160, 160] |
| 21 | -1 | C3 | [384, 256, 3, False] | [384, 160, 160] | [256, 160, 160] |
| 22 | -1 | Conv | [256, 256, 3, 2] | [256, 160, 160] | [256, 80, 80] |
| 23 | [-1, 18] | Concat | [256+256] | [1, 256, 80, 80], [1, 256, 80, 80] | [512, 80, 80] |
| 24 | -1 | C3 | [512, 256, 3, False] | [512, 80, 80] | [256, 80, 80] |
| 25 | -1 | Conv | [256, 256, 3, 2] | [256, 80, 80] | [256, 40, 40] |
| 26 | [-1, 14] | Concat | [256+512] | [1, 256, 40, 40], [1, 512, 40, 40] | [768, 40, 40] |
| 27 | -1 | C3 | [768, 512, 3, False] | [768, 40, 40] | [512, 40, 40] |
| 28 | -1 | Conv | [512, 512, 3, 2] | [512, 40, 40] | [512, 20, 20] |
| 29 | [-1, 10] | Concat | [512+512] | [1, 512, 20, 20], [1, 512, 20, 20] | [1024, 20, 20] |
| 30 | -1 | C3 | [1024, 1024, 3, False] | [1024, 20, 20] | [1024, 20, 20] |
| 31 | [21, 24, 27, 30] | Detect | [80, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326], [436, 615, 739, 380, 925, 792]], [256, 256, 512, 1024]] | [1, 256, 160, 160], [1, 256, 80, 80], [1, 512, 40, 40], [1, 1024, 20, 20] | [1, 3, 160, 160, 85], [1, 3, 80, 80, 85], [1, 3, 40, 40, 85], [1, 3, 20, 20, 85] |
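The extra P2 head is where the additional inference cost mentioned in the introduction shows up most directly. A rough way to see it is to count the candidate boxes produced per 640×640 image, assuming 3 anchors per grid cell and one head per stride. This sketch counts outputs only, not FLOPs, and the helper `num_predictions` is illustrative.

```python
def num_predictions(img_size: int, strides, na: int = 3) -> int:
    """Total candidate boxes = sum over heads of na * (img_size / stride)^2."""
    return sum(na * (img_size // s) ** 2 for s in strides)


three_heads = num_predictions(640, (8, 16, 32))     # P3, P4, P5
four_heads = num_predictions(640, (4, 8, 16, 32))   # P2, P3, P4, P5
print(three_heads, four_heads)  # 25200 102000
```

The 160×160 P2 grid alone contributes 76800 of the 102000 boxes, roughly four times the three-head total.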

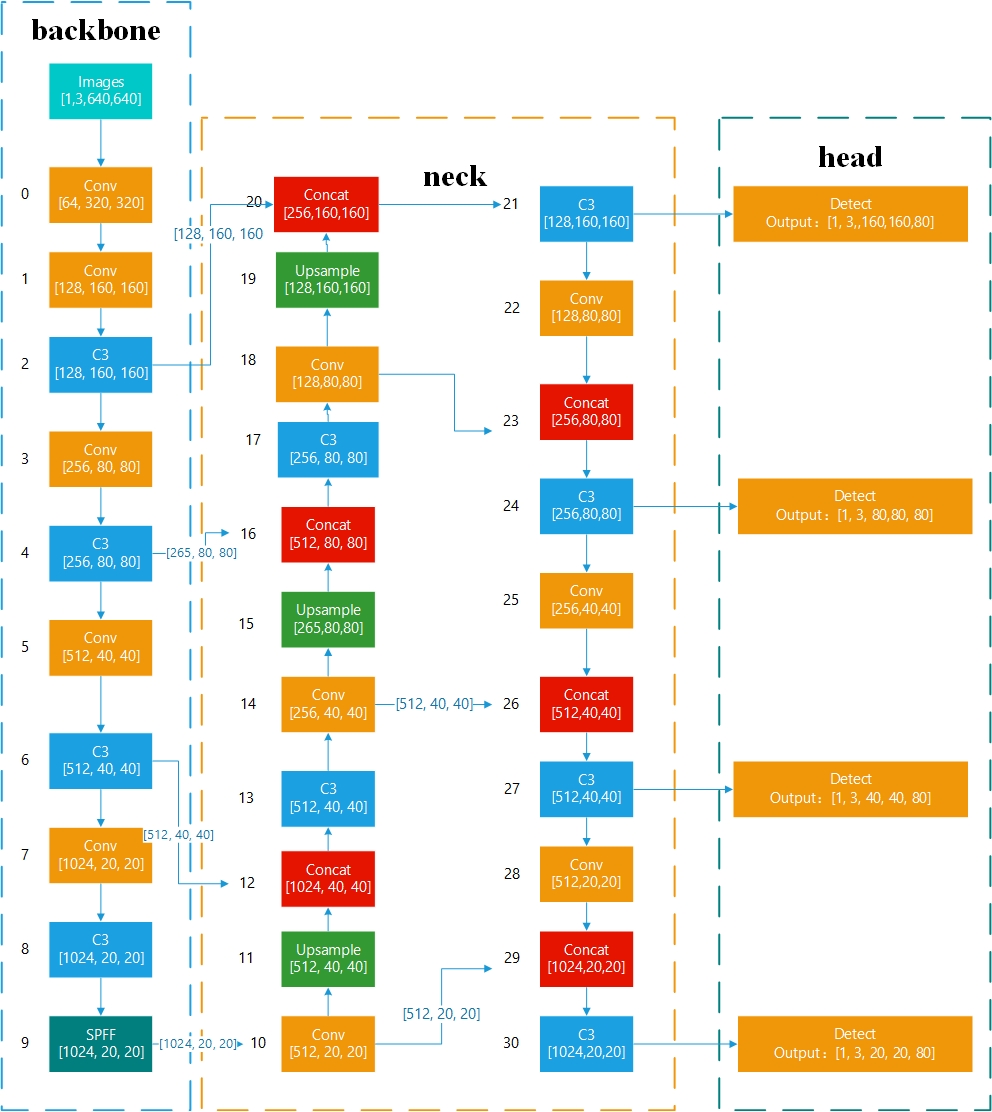

4. YOLOv5 small-object improvement 2

4.1 Configuration file

Four heads (P2, P3, P4, P5) with AutoAnchor

```yaml
# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

# Parameters
nc: 80  # number of classes
depth_multiple: 1.0  # model depth multiple
width_multiple: 1.0  # layer channel multiple
anchors: 3  # AutoAnchor evolves 3 anchors per P output layer

# YOLOv5 v6.0 backbone
backbone:
  # [from, number, module, args]
  [[-1, 1, Conv, [64, 6, 2, 2]],  # 0-P1/2
   [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
   [-1, 3, C3, [128]],
   [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
   [-1, 6, C3, [256]],
   [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
   [-1, 9, C3, [512]],
   [-1, 1, Conv, [1024, 3, 2]],  # 7-P5/32
   [-1, 3, C3, [1024]],
   [-1, 1, SPPF, [1024, 5]],  # 9
  ]

# YOLOv5 v6.0 head with (P2, P3, P4, P5) outputs
head:
  [[-1, 1, Conv, [512, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 6], 1, Concat, [1]],  # cat backbone P4
   [-1, 3, C3, [512, False]],  # 13

   [-1, 1, Conv, [256, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 4], 1, Concat, [1]],  # cat backbone P3
   [-1, 3, C3, [256, False]],  # 17 (P3/8-small)

   [-1, 1, Conv, [128, 1, 1]],
   [-1, 1, nn.Upsample, [None, 2, 'nearest']],
   [[-1, 2], 1, Concat, [1]],  # cat backbone P2
   [-1, 1, C3, [128, False]],  # 21 (P2/4-xsmall)

   [-1, 1, Conv, [128, 3, 2]],
   [[-1, 18], 1, Concat, [1]],  # cat head P3
   [-1, 3, C3, [256, False]],  # 24 (P3/8-small)

   [-1, 1, Conv, [256, 3, 2]],
   [[-1, 14], 1, Concat, [1]],  # cat head P4
   [-1, 3, C3, [512, False]],  # 27 (P4/16-medium)

   [-1, 1, Conv, [512, 3, 2]],
   [[-1, 10], 1, Concat, [1]],  # cat head P5
   [-1, 3, C3, [1024, False]],  # 30 (P5/32-large)

   [[21, 24, 27, 30], 1, Detect, [nc, anchors]],  # Detect(P2, P3, P4, P5)
  ]
```
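When editing the head, the easiest mistake is an off-by-one error in the `from` indices. Layer numbering continues from the backbone (layers 0-9) into the head (layers 10 onward). The sketch below is written for the P2/P3/P4/P5 head above. It resolves every `-1` to an absolute index, checks that every layer reads only from earlier layers, and checks that each Detect input is a C3 block. The `(from, module)` list is hand-copied from the YAML, and the check itself is a hypothetical helper, not part of YOLOv5.

```python
# (from, module) for the head of the P2-P5 model above; the backbone occupies layers 0-9
head = [
    (-1, "Conv"), (-1, "Upsample"), ([-1, 6], "Concat"), (-1, "C3"),   # 10-13
    (-1, "Conv"), (-1, "Upsample"), ([-1, 4], "Concat"), (-1, "C3"),   # 14-17
    (-1, "Conv"), (-1, "Upsample"), ([-1, 2], "Concat"), (-1, "C3"),   # 18-21
    (-1, "Conv"), ([-1, 18], "Concat"), (-1, "C3"),                    # 22-24
    (-1, "Conv"), ([-1, 14], "Concat"), (-1, "C3"),                    # 25-27
    (-1, "Conv"), ([-1, 10], "Concat"), (-1, "C3"),                    # 28-30
    ([21, 24, 27, 30], "Detect"),                                       # 31
]

modules = {}
for i, (frm, module) in enumerate(head, start=10):
    sources = [i - 1 if f == -1 else f for f in (frm if isinstance(frm, list) else [frm])]
    assert all(s < i for s in sources), f"layer {i} reads from a later layer: {sources}"
    modules[i] = module
    if module == "Detect":
        # each detection input should be the C3 block that finishes a pyramid level
        assert all(modules.get(s) == "C3" for s in sources), f"Detect inputs {sources} are not all C3"
        print("Detect at layer", i, "reads", sources)  # Detect at layer 31 reads [21, 24, 27, 30]
```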

4.2 Architecture diagram

5. YOLOv5 small-object improvement 3

5.1 Configuration file

Four heads with BiFPN (P3, P4, P5, P6)

```yaml
# parameters
nc: 80  # number of classes
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.50  # layer channel multiple

# anchors
anchors:
  - [ 19,27, 44,40, 38,94 ]  # P3/8
  - [ 96,68, 86,152, 180,137 ]  # P4/16
  - [ 140,301, 303,264, 238,542 ]  # P5/32
  - [ 436,615, 739,380, 925,792 ]  # P6/64

# YOLOv5 backbone
backbone:
  # [from, number, module, args]
  [ [-1, 1, Conv, [64, 6, 2, 2]],  # 0-P1/2
    [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
    [-1, 3, C3, [128]],
    [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
    [-1, 6, C3, [256]],
    [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
    [-1, 9, C3, [512]],
    [-1, 1, Conv, [768, 3, 2]],  # 7-P5/32
    [-1, 3, C3, [768]],
    [-1, 1, Conv, [1024, 3, 2]],  # 9-P6/64
    [-1, 3, C3, [1024]],
    [-1, 1, SPPF, [1024, 5]],  # 11
  ]

# YOLOv5 head
head:
  [ [ -1, 1, Conv, [ 768, 1, 1 ] ],  # 12 head
    [ -1, 1, nn.Upsample, [ None, 2, 'nearest' ] ],
    [ [ -1, 8 ], 1, BiFPN_Add2, [ 384,384] ],  # cat backbone P5
    [ -1, 3, C3, [ 768, False ] ],  # 15

    [ -1, 1, Conv, [ 512, 1, 1 ] ],
    [ -1, 1, nn.Upsample, [ None, 2, 'nearest' ] ],
    [ [ -1, 6 ], 1, BiFPN_Add2, [ 256,256] ],  # cat backbone P4
    [ -1, 3, C3, [ 512, False ] ],  # 19

    [ -1, 1, Conv, [ 256, 1, 1 ] ],
    [ -1, 1, nn.Upsample, [ None, 2, 'nearest' ] ],
    [ [ -1, 4 ], 1, BiFPN_Add2, [ 128,128] ],  # cat backbone P3
    [ -1, 3, C3, [ 256, False ] ],  # 23 (P3/8-small)

    [ -1, 1, Conv, [ 512, 3, 2 ] ],
    [ [ -1, 6, 19 ], 1, BiFPN_Add3, [ 256 , 256 ] ],  # cat head P4
    [ -1, 3, C3, [ 512, False ] ],  # 26 (P4/16-medium)

    [ -1, 1, Conv, [ 768, 3, 2 ] ],
    [ [ -1, 8, 15 ], 1, BiFPN_Add3, [ 384,384 ] ],  # cat head P5
    [ -1, 3, C3, [ 768, False ] ],  # 29 (P5/32-large)

    [ -1, 1, Conv, [ 1024, 3, 2 ] ],  # 30
    [ [ -1, 11 ], 1, BiFPN_Add2, [ 512, 512] ],  # cat head P6
    [ -1, 3, C3, [ 1024, False ] ],  # 32 (P6/64-xlarge)

    [ [ 23, 26, 29, 32 ], 1, Detect, [ nc, anchors ] ],  # Detect(P3, P4, P5, P6)
  ]
```
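`BiFPN_Add2` and `BiFPN_Add3` are not part of stock YOLOv5. They have to be defined in the project's own code, and this article does not show them. A common approach, assumed here, is EfficientDet-style "fast normalized fusion":

- Each input gets a learnable weight, clamped to be non-negative.
- The output is the weighted sum divided by the sum of the weights.

The plain-Python sketch below shows only that fusion arithmetic on flattened toy feature values. A real module would operate on tensors and typically follows the sum with an activation and a convolution.

```python
from typing import List


def fast_normalized_fusion(inputs: List[List[float]], weights: List[float],
                           eps: float = 1e-4) -> List[float]:
    """Weighted add: sum_i(w_i * x_i) / (sum_i w_i + eps), with each w_i clamped to >= 0."""
    assert len(inputs) == len(weights)
    length = len(inputs[0])
    # Unlike Concat, an add needs every input to have exactly the same shape (channels too)
    assert all(len(x) == length for x in inputs), "fused inputs must have equal shapes"
    w = [max(wi, 0.0) for wi in weights]   # ReLU keeps the fusion weights non-negative
    norm = sum(w) + eps
    return [sum(wi * x[j] for wi, x in zip(w, inputs)) / norm for j in range(length)]


top_down = [1.0, 2.0, 3.0]   # toy stand-in for an upsampled deeper feature
lateral = [3.0, 2.0, 1.0]    # toy stand-in for the backbone feature at the same level
print(fast_normalized_fusion([top_down, lateral], weights=[1.0, 1.0]))
# equal weights give (almost exactly) the average: values close to 2.0
```

Because the inputs are added rather than concatenated, their channel counts must match. The args in the YAML above ([384, 384], [256, 256], [128, 128], [512, 512]) pair equal channel counts accordingly, after width_multiple = 0.5 is applied.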

6. YOLOv5 small-object improvement 4

6.1 Configuration file

Two heads (P3, P4)

```yaml
# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

# Parameters
nc: 80  # number of classes
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.50  # layer channel multiple
anchors: 3  # AutoAnchor evolves 3 anchors per P output layer

# YOLOv5 v6.0 backbone
backbone:
  # [from, number, module, args]
  [ [ -1, 1, Conv, [ 64, 6, 2, 2 ] ],  # 0-P1/2
    [ -1, 1, Conv, [ 128, 3, 2 ] ],  # 1-P2/4
    [ -1, 3, C3, [ 128 ] ],
    [ -1, 1, Conv, [ 256, 3, 2 ] ],  # 3-P3/8
    [ -1, 6, C3, [ 256 ] ],
    [ -1, 1, Conv, [ 512, 3, 2 ] ],  # 5-P4/16
    [ -1, 9, C3, [ 512 ] ],
    [ -1, 1, Conv, [ 1024, 3, 2 ] ],  # 7-P5/32
    [ -1, 3, C3, [ 1024 ] ],
    [ -1, 1, SPPF, [ 1024, 5 ] ],  # 9
  ]

# YOLOv5 v6.0 head with (P3, P4) outputs
head:
  [ [ -1, 1, Conv, [ 512, 1, 1 ] ],
    [ -1, 1, nn.Upsample, [ None, 2, 'nearest' ] ],
    [ [ -1, 6 ], 1, Concat, [ 1 ] ],  # cat backbone P4
    [ -1, 3, C3, [ 512, False ] ],  # 13

    [ -1, 1, Conv, [ 256, 1, 1 ] ],
    [ -1, 1, nn.Upsample, [ None, 2, 'nearest' ] ],
    [ [ -1, 4 ], 1, Concat, [ 1 ] ],  # cat backbone P3
    [ -1, 3, C3, [ 256, False ] ],  # 17 (P3/8-small)

    [ -1, 1, Conv, [ 256, 3, 2 ] ],
    [ [ -1, 14 ], 1, Concat, [ 1 ] ],  # cat head P4
    [ -1, 3, C3, [ 512, False ] ],  # 20 (P4/16-medium)

    [ [ 17, 20 ], 1, Detect, [ nc, anchors ] ],  # Detect(P3, P4)
  ]
```

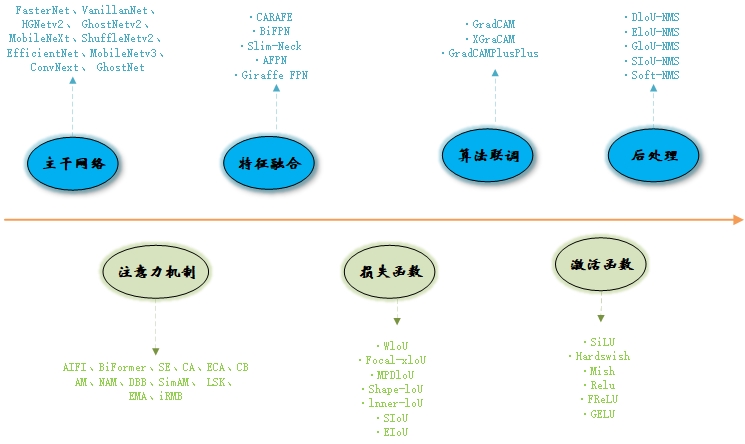

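7. Further optimizations

7.1 Other ideas for improving the model

7.2 How to use deeper networks

Deeper networks are already provided in the models/hub directory. They are generally intended for high-resolution images.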

8. Problems encountered

RuntimeError: The size of tensor a (3) must match the size of tensor b (4) at non-singleton dimension 0

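Cause:

- Detect expects 4 sets of anchors (one per output layer), but only 3 are defined, so one is missing.

- An extra output layer was added, but no anchors were defined for it.

Solutions:

- Add an extra set of anchors for the new layer.

- Or let the anchors be generated automatically by setting anchors: 3 (AutoAnchor evolves 3 anchors per P output layer). This generates 3 anchors for every output feature layer.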

```yaml
# Original:
anchors:
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32
```

```yaml
# With the new anchors added:
anchors:
  - [ 10,13, 16,30, 33,23 ]  # P2/4
  - [ 30,61, 62,45, 59,119 ]  # P3/8
  - [ 116,90, 156,198, 373,326 ]  # P4/16
  - [ 436,615, 739,380, 925,792 ]  # P5/32
```
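The error arises because Detect builds one anchor group per input layer. The number of rows under anchors: must therefore equal the number of layers in Detect's `from` list. Both fixes follow from a small sketch of that rule; the function name `resolve_anchors` is hypothetical.

- When anchors is an integer, YOLOv5's `parse_model` replaces it with one placeholder row of 2 × anchors values per detection layer, so the count always matches. AutoAnchor is then expected to replace the placeholders with anchors computed from the training labels.
- With an explicit list, the lengths must agree.

```python
def resolve_anchors(anchors, detect_from):
    """Return one anchor row per detection layer, following the rule described above."""
    n_layers = len(detect_from)
    if isinstance(anchors, int):
        # 'anchors: 3' -> a placeholder row of 2 * 3 numbers for every detection layer
        return [list(range(anchors * 2))] * n_layers
    if len(anchors) != n_layers:
        raise ValueError(f"{len(anchors)} anchor rows for {n_layers} detection layers")
    return anchors


original = [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]]
four_heads = [21, 24, 27, 30]

try:
    resolve_anchors(original, four_heads)        # 3 rows for 4 heads -> mismatch
except ValueError as err:
    print("error:", err)                          # error: 3 anchor rows for 4 detection layers

print(len(resolve_anchors(3, four_heads)))        # 4 (AutoAnchor placeholders)
print(len(resolve_anchors(original + [[436, 615, 739, 380, 925, 792]], four_heads)))  # 4
```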

