How it works

video: https://www.youtube.com/watch?v=nc65CF1W9B0

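- First, a 3D reconstruction of the scene is built.

- The user selects the object to align, then provides an initial alignment with three clicks.

- The rest is done automatically.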

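Data generation pipeline

paper: https://arxiv.org/abs/1707.04796

- RGBD data collection

- Dense 3D reconstruction

- Object mesh generation

- Human-assisted annotation

- Rendering of labeled images and object poses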

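RGBD data collection

The camera can be mounted on a robot arm or simply held by hand.

The sensor is an Asus Xtion Pro running at 30 Hz; a typical log lasts about 120 seconds.

Dense 3D reconstruction

ElasticFusion is used for the 3D reconstruction and runs in real time on an NVIDIA GTX 1080 GPU. It also tracks the camera pose relative to the local reconstruction frame, which the pipeline relies on when rendering the labeled images.

The amount of geometric features and RGB texture in the scene affects the quality of the reconstruction.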

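Object mesh generation

One of the preprocessing steps of the pipeline is obtaining a mesh for each object.

The mesh can come from a scanner, from a hand-built geometric model, or from one of the RGBD-camera-based mesh reconstruction methods; it only needs to be good enough for ICP registration.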

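Human-assisted annotation

Manual clicks produce a rough pose, which ICP then refines into an accurate pose.

Rendering of labeled images and object poses

The 3D object is projected into the 2D RGB image, so every frame comes with both the object pose and its 2D projection.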
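The projection step can be illustrated with a minimal sketch. It assumes an ideal pinhole camera with no skew or lens distortion, and the intrinsics below are hypothetical values chosen only for illustration; this is not LabelFusion's own rendering code, which renders the full mesh rather than single points.

```python
def project_point(point_cam, fx, fy, cx, cy):
    """Project a 3D point given in the camera frame onto the image plane.

    Assumes an ideal pinhole camera (no skew, no distortion) looking along +Z.
    Returns the pixel coordinates (u, v) as floats.
    """
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v


# Hypothetical intrinsics, for illustration only.
fx = fy = 500.0
cx, cy = 320.0, 240.0

# A point on the optical axis lands on the principal point (cx, cy).
print(project_point((0.0, 0.0, 1.0), fx, fy, cx, cy))

# A point 0.1 m to the right at 1 m depth shifts by fx * 0.1 pixels in u.
print(project_point((0.1, 0.0, 1.0), fx, fy, cx, cy))
```

To project a mesh vertex given in the object frame, it would first have to be transformed into the camera frame using the per-frame object pose.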

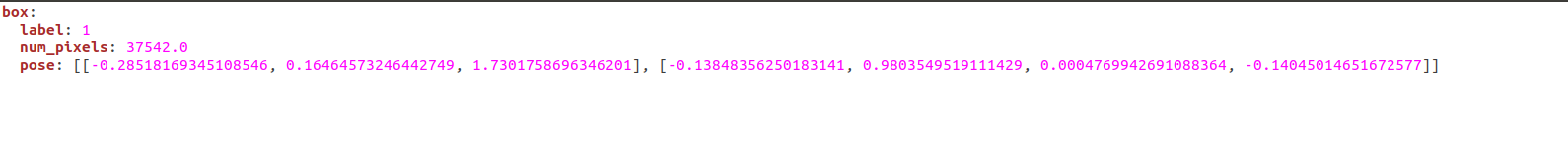

LabelFusion data organization

Reference: https://github.com/RobotLocomotion/LabelFusion/blob/master/docs/data_organization.rst

Mount the folder that holds your data into the docker container; inside the container it appears at /root/labelfusion/data.

LabelFusion/docker/docker_run.sh /path/to/data-folder

Top-level directory structure

- logs* - A number of logs folders, described in the next section

- dataset_status.csv - Overall status script, described in Section 4.

- object-meshes - Contains the meshes for all objects across all datasets

- object_data.yaml - Critical file which is a dictionary of all objects in the database, their pixel label (i.e. "1" is the oil_bottle), and the mesh (.vtp or .obj, etc) that is used for this object.

Object Meshes

LabelFusion needs object meshes to perform model alignment and rendering. By default the object meshes are stored at

data/

  object-meshes/

    drill_mesh.vtp

When the alignment tool runs, the software needs to know where the mesh for a given object is stored. In particular, calling gr.launchObjectAlignment(<objectName>) looks up the mesh for <objectName>. This information is kept in object_data.yaml, located at

data/

  object_data.yaml

Each entry in this yaml file has the following format:

drill:

mesh: object-meshes/handheld-scanner/drill_decimated_mesh.vtp

label: 1

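The mesh entry gives the location of the mesh relative to the top-level data folder. The label entry is the global label for that object: when the grayscale mask image is rendered, pixels with value 1 correspond to the drill (as I understand it, the labeled object). The value 0 always denotes the background, so it cannot be used as an object label.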
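As a small illustration of these rules, the sketch below builds a label-to-object lookup from a dictionary shaped like the parsed contents of object_data.yaml. The second entry and the helper name build_label_map are hypothetical, and the uniqueness check is my own assumption about what "global label" implies rather than something LabelFusion is known to enforce.

```python
def build_label_map(object_data):
    """Map each pixel label to its object name.

    Rejects label 0 (reserved for the background) and, as an assumption,
    rejects labels that are used by more than one object.
    """
    label_to_name = {}
    for name, entry in object_data.items():
        label = entry["label"]
        if label == 0:
            raise ValueError(f"{name}: label 0 is reserved for the background")
        if label in label_to_name:
            raise ValueError(
                f"{name}: label {label} is already used by {label_to_name[label]}"
            )
        label_to_name[label] = name
    return label_to_name


# Same structure a YAML parser would produce for object_data.yaml.
# "example_object" is a hypothetical entry added for illustration.
object_data = {
    "drill": {
        "mesh": "object-meshes/handheld-scanner/drill_decimated_mesh.vtp",
        "label": 1,
    },
    "example_object": {"mesh": "object-meshes/example_object.vtp", "label": 2},
}

print(build_label_map(object_data))
```

A lookup like this makes it easy to turn a pixel value read from a rendered mask back into an object name.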

Log high-level organization

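The purpose of each logs* directory is:

- logs_test - All of our logs live here. The name is a bit of a misnomer, but it is kept for historical reasons.

- logs_arch - Logs that are imperfect (wrong set of objects, too shaky for ElasticFusion, etc.) but that we do not want to delete yet.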

Each log organization

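Each log should have a name that is unique across all datasets. One option is a date-based name of the form:

- YYYY-MM-DD-##

where ## is the index of the log recorded on that day.

The log's subdirectory should be named after the log.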
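The naming scheme is simple enough to automate. The helper below is a hypothetical sketch (next_log_name is not part of LabelFusion) that picks the next free YYYY-MM-DD-## name for a given day; it assumes numbering starts at 00, as in the status listings later in these notes.

```python
import re

# Matches names of the form YYYY-MM-DD-## and captures the two-digit index.
LOG_NAME = re.compile(r"^\d{4}-\d{2}-\d{2}-(\d{2})$")


def next_log_name(date, existing):
    """Return the next unused log name for `date` (a 'YYYY-MM-DD' string).

    `existing` is an iterable of log names that are already taken.
    Numbering is assumed to start at 00.
    """
    used = []
    for name in existing:
        match = LOG_NAME.match(name)
        if match and name.startswith(date + "-"):
            used.append(int(match.group(1)))
    index = max(used) + 1 if used else 0
    if index > 99:
        raise ValueError("no two-digit index left for " + date)
    return f"{date}-{index:02d}"


existing = ["2017-05-04-00", "2017-05-04-01", "2017-05-25-00"]
print(next_log_name("2017-05-04", existing))
print(next_log_name("2017-06-07", existing))
```

In practice `existing` could be filled from the names of the subdirectories of every logs* folder, so that names stay unique across all datasets.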

Each log should start out with the following files and directories:

logs_test/

2017-06-07-01/

*lcmlog*

After all scripts have been run, the following files and directories will have been created:

logs_test/

2017-06-07-01/

info.yaml

original_log.lcmlog

trimmed_log.lcmlog # if trimmed

images/

..._rgb.png

..._color_labels.png

..._labels.png

..._utime.txt

..._poses.yaml

resized_images/ # downsized to 480 x 360 for training

..._rgb.png

..._labels.png

above_table_pointcloud.vtp

reconstructed_pointcloud.vtp

converted_to_ascii.ply

converted_to_ascii_modified_header.ply

posegraph.posegraph

registration_result.yaml

transforms.yaml

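Dataset status script

dataset_update_status provides a way to track the status of each log.

A log can be annotated with a comment by adding a line to info.yaml, for example: this log looks good

Note that only the first few characters of the comment are displayed, to keep the terminal output nicely formatted.

For each step, the dataset_update_status script checks for the existence of the following: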

run_trim - check for info.yaml

run_prep - check for reconstructed_pointcloud.vtp

run_alignment_tool - check for registration_result.yaml

run_create_data - check for images/0000000001_color_labels.png

run_resize - check for resized_images/0000000001_labels.png

Notes:

- The script checks each of these 5 steps and writes an x or _ for each one.

- The number of images is also counted and displayed.
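The per-step check described above can be sketched in a few lines. This is only an illustration of the idea, not the actual dataset_update_status script; the function name log_status is hypothetical, and the sketch assumes the resized images live in resized_images/, matching the directory listing earlier.

```python
import os

# Step name -> file whose presence marks the step as done.
STEP_MARKERS = [
    ("run_trim", "info.yaml"),
    ("run_prep", "reconstructed_pointcloud.vtp"),
    ("run_alignment_tool", "registration_result.yaml"),
    ("run_create_data", os.path.join("images", "0000000001_color_labels.png")),
    ("run_resize", os.path.join("resized_images", "0000000001_labels.png")),
]


def log_status(log_dir):
    """Return one 'x' or '_' per step, depending on whether its marker file exists."""
    return [
        "x" if os.path.exists(os.path.join(log_dir, marker)) else "_"
        for _step, marker in STEP_MARKERS
    ]


def format_status(log_dir):
    """Format one status line, e.g. 'logs_test/2017-05-04-00 x x _ _ _'."""
    return log_dir + " " + " ".join(log_status(log_dir))
```

Calling format_status on each log directory in turn would give a listing in the same spirit as the real script's output.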

The script creates dataset_status.csv and cats the output to the terminal, for example:

dataset_update_status

logs_test/2017-05-04-00 x x _ _ imgs ----- | |

logs_test/2017-05-04-01 x x x x imgs 01880 | |

logs_test/2017-05-04-02 x x x x imgs 01730 |looks good |

logs_test/2017-05-04-03 x x x x imgs 00172 | |

logs_test/2017-05-04-04 x x x x imgs 01821 | |

logs_test/2017-05-04-05 x x _ _ imgs ----- | |

logs_test/2017-05-04-06 x x _ _ imgs ----- | |

logs_test/2017-05-04-07 x x x _ imgs ----- | |

logs_test/2017-05-25-00 _ _ _ _ imgs ----- | |

logs_test/2017-05-25-01 x x x _ imgs ----- |ready for alignment |

Passing the -o argument also lists which objects are present in each log:

dataset_update_status -o

logs_test/2017-05-04-00 x x _ _ imgs ----- | | []

logs_test/2017-05-04-01 x x x x imgs 01880 | | |['phone', 'robot']|

logs_test/2017-05-04-02 x x x x imgs 01730 |looks good | |['phone', 'robot']|

logs_test/2017-05-04-03 x x x x imgs 00172 | | |['phone', 'robot', 'tissue_box']|

logs_test/2017-05-04-04 x x x x imgs 01821 | | |['phone', 'robot', 'tissue_box']|

logs_test/2017-05-04-05 x x _ _ imgs ----- | | []

logs_test/2017-05-04-06 x x _ _ imgs ----- | | []

logs_test/2017-05-04-07 x x x _ imgs ----- | | |['phone', 'robot']|

logs_test/2017-05-25-00 _ _ _ _ imgs ----- | | []

logs_test/2017-05-25-01 x x x _ imgs ----- |ready for alignment | |['oil_bottle', 'phone']|

Inspecting Data from LabelFusion

- Install nvidia-docker.

- Download the project:

  - If you use nvidia-docker2, use this fork (Ubuntu 18.04 probably no longer supports the original nvidia-docker):

    git clone https://github.com/ianre657/LabelFusion.git

  - On systems that use the original nvidia-docker, use the upstream project:

    https://github.com/RobotLocomotion/LabelFusion

- Run docker:

  LabelFusion/docker/docker_run.sh /path/to/data-folder

  My own example:

  cd ./LabelFusion/docker

  ./docker_run.sh /home/stone/data/LabelFusion_Sample_Data

- Inside the docker container, navigate to a log directory and run the alignment tool. Even if the data has already been labeled, you can still inspect the results:

  cd ~/labelfusion/data/logs/2017-06-13-29

  run_alignment_tool

- Inspect the labeled images (cd path-to-labelfusion-data/logs_test/2017-06-16-20/images and browse the images).

- Run the script that prints the overall status of the dataset (note this may take ~10-20 seconds to run for the full dataset):

  dataset_update_status -o

LabelFusion provides training data that can be used to train a variety of perception systems, including:

- semantic segmentation (pixelwise classification)

- 2D object detection (bounding box + classification) – note that we provide the segmentation masks, not the bounding boxes, but the bounding boxes could be computed from the masks

- 6 DoF object poses

- 3D object detection (bounding box + classification) – the 3D bounding box can be computed from the 6 DoF object poses together with their mesh.

- 6 DoF camera pose - this is provided without any labeling, just through the use of the dense SLAM method we use, ElasticFusion
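Since the dataset ships segmentation masks rather than 2D bounding boxes, here is a minimal sketch of deriving a box from a label mask. The mask is represented as a plain list of rows to keep the example dependency-free; with real ..._labels.png files you would first load the image into an array. The function name and the toy mask are illustrative assumptions.

```python
def bounding_box(mask, label):
    """Return (min_row, min_col, max_row, max_col) for pixels equal to `label`.

    `mask` is a list of rows of integer labels. Returns None if the label
    does not appear in the mask. Bounds are inclusive.
    """
    rows = [r for r, row in enumerate(mask) if label in row]
    if not rows:
        return None
    cols = [c for row in mask for c, value in enumerate(row) if value == label]
    return min(rows), min(cols), max(rows), max(cols)


# Toy 4 x 5 mask: 0 is background, 1 and 2 are object labels.
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 2, 0],
    [0, 0, 0, 2, 0],
]

print(bounding_box(mask, 1))
print(bounding_box(mask, 2))
print(bounding_box(mask, 3))
```

Note that a box computed this way covers only the visible pixels, so it shrinks when the object is partly occluded.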

Creating new labeled data with LabelFusion

https://github.com/RobotLocomotion/LabelFusion/blob/master/docs/pipeline.rst

Only the parts relevant to my current use are listed here; see the original page for anything else.

Reference: https://zhuanlan.zhihu.com/p/163324089

Q1:

Failed to get BotCamTrans for camera: OPENNI_FRAME_D

Failed to get coord_frame for camera: OPENNI_FRAME_D

https://github.com/RobotLocomotion/LabelFusion/issues/30

Q2:

error: GLSL 3.30 is not supported

GLSL Shader compilation failed: /root/ElasticFusion/Core/src/Shaders/draw_global_surface.vert:

0:19(10): error: GLSL 3.30 is not supported. Supported versions are: 1.10, 1.20, 1.30, 1.00 ES, and 3.00 ES

GLSL Shader compilation failed: /root/ElasticFusion/Core/src/Shaders/draw_global_surface.geom:

;

GLSL Shader compilation failed: /root/ElasticFusion/Core/src/Shaders/draw_global_surface_phong.frag:

0:19(10): error: GLSL 3.30 is not supported. Supported versions are: 1.10, 1.20, 1.30, 1.00 ES, and 3.00 ES

GLSL Shader compilation failed: /root/ElasticFusion/Core/src/Shaders/empty.vert:

0:19(10): error: GLSL 3.30 is not supported. Supported versions are: 1.10, 1.20, 1.30, 1.00 ES, and 3.00 ES

GLSL Shader compilation failed: /root/ElasticFusion/Core/src/Shaders/quad.geom:

, 0.0, 1.0);

GLSL Shader compilation failed: /root/ElasticFusion/Core/src/Shaders/fxaa.frag:

0:19(10): error: GLSL 3.30 is not supported. Supported versions are: 1.10, 1.20, 1.30, 1.00 ES, and 3.00 ES

OpenGL Error: XX (1280)

In: /root/ElasticFusion/Pangolin/include/pangolin/gl/gl.hpp, line 192

GLSL Shader compilation failed: /root/ElasticFusion/Core/src/Shaders/empty.vert:

0:19(10): error: GLSL 3.30 is not supported. Supported versions are: 1.10, 1.20, 1.30, 1.00 ES, and 3.00 ES

GLSL Shader compilation failed: /root/ElasticFusion/Core/src/Shaders/quad.geom:

, 0.0, 1.0);

GLSL Shader compilation failed: /root/ElasticFusion/Core/src/Shaders/resize.frag:

0:19(10): error: GLSL 3.30 is not supported. Supported versions are: 1.10, 1.20, 1.30, 1.00 ES, and 3.00 ES

GLSL Shader compilation failed: /root/ElasticFusion/Core/src/Shaders/empty.vert:

0:19(10): error: GLSL 3.30 is not supported. Supported versions are: 1.10, 1.20, 1.30, 1.00 ES, and 3.00 ES

Source: https://github.com/RobotLocomotion/LabelFusion/issues/94

########################

# run_create_data

########################

cd ~/labelfusion/modules/labelfusion/

vim rendertrainingimages.py

view.setFixedSize(672, 376)

def setCameraInstrinsicsAsus(view):

principalX = 326.785

principalY = 168.432

focalLength = 338.546630859375 # fx = fy = focalLength

setCameraIntrinsics(view, principalX, principalY, focalLength)

########################

# camera config

########################

cd ~/labelfusion/config/

vim bot_frames.cfg

width = 672;

height= 376;

pinhole = [338.546630859375, 338.546630859375, 0, 326.785, 168.432]; # fx fy skew cx cy

########################

# ElasticFusion

########################

# for cuda

cd ~/ElasticFusion/Core/src/

vim CMakeLists.txt

# delete the following line:

# set(CUDA_ARCH_BIN "30 35 50 52 61" CACHE STRING "Specify 'real' GPU arch to build binaries for, BIN(PTX) format is supported. Example: 1.3 2.1(1.3) or 13 21(13)")

cd ~/ElasticFusion/Core/

rm -rf build && mkdir build && cd build && cmake ../src/ && make -j20

# for camera params

cd ~/ElasticFusion/GUI/src/

vim MainController.cpp

Resolution::getInstance(672, 376);

Intrinsics::getInstance(338.546630859375, 338.546630859375, 326.785, 168.432);

cd ~/ElasticFusion/GUI/

rm -rf build && mkdir build && cd build && cmake ../src/ && make -j20

########################

# pipeline

########################

cd ~/labelfusion/data/logs/006_mustard_bottle_01/

run_trim

run_prep

run_alignment_tool

gr.rotateReconstructionToStandardOrientation()

gr.segmentTable()

gr.saveAboveTablePolyData()

gr.launchObjectAlignment("006_mustard_bottle")

gr.saveRegistrationResults()

########################

# create data

########################

run_create_data -d

- nvidia-docker2 https://github.com/RobotLocomotion/LabelFusion/issues/84

https://github.com/RobotLocomotion/LabelFusion/issues/48

https://hub.docker.com/repository/docker/ianre657/labelfusion

https://github.com/RobotLocomotion/LabelFusion/pull/40

Q3:

error: XDG_RUNTIME_DIR not set in the environment.

Q4:

Your GPU "GeForce GTX 1080 Ti" isn't in the ICP Step performance database, please add it

Your GPU "GeForce GTX 1080 Ti" isn't in the RGB Step performance database, please add it

Your GPU "GeForce GTX 1080 Ti" isn't in the RGB Res performance database, please add it

Your GPU "GeForce GTX 1080 Ti" isn't in the SO3 Step performance database, please add it

Solution:

root@7d2e80fbee5d:~/ElasticFusion/GPUTest/build# ./GPUTest ../

GeForce GTX 1080 Ti

Searching for the best thread/block configuration for your GPU...

Your GPU "GeForce GTX 1080 Ti" isn't in the ICP Step performance database, please add it

Your GPU "GeForce GTX 1080 Ti" isn't in the RGB Step performance database, please add it

Your GPU "GeForce GTX 1080 Ti" isn't in the RGB Res performance database, please add it

Your GPU "GeForce GTX 1080 Ti" isn't in the SO3 Step performance database, please add it

Best: 3.164, 100%

icpStepMap["GeForce GTX 1080 Ti"] = std::pair<int, int>(128, 112);

rgbStepMap["GeForce GTX 1080 Ti"] = std::pair<int, int>(96, 112);

rgbResMap["GeForce GTX 1080 Ti"] = std::pair<int, int>(128, 416);

so3StepMap["GeForce GTX 1080 Ti"] = std::pair<int, int>(64, 160);

vim ~/ElasticFusion/Core/src/Utils/GPUConfig.h

Add the following entries:

icpStepMap["GeForce GTX 1080 Ti"] = std::pair<int, int>(128, 112);

rgbStepMap["GeForce GTX 1080 Ti"] = std::pair<int, int>(96, 112);

rgbResMap["GeForce GTX 1080 Ti"] = std::pair<int, int>(128, 416);

so3StepMap["GeForce GTX 1080 Ti"] = std::pair<int, int>(64, 160);

icpStepMap["GeForce GTX 1050 Ti"] = std::pair<int, int>(96, 240);

rgbStepMap["GeForce GTX 1050 Ti"] = std::pair<int, int>(80, 272);

rgbResMap["GeForce GTX 1050 Ti"] = std::pair<int, int>(176, 160);

so3StepMap["GeForce GTX 1050 Ti"] = std::pair<int, int>(256, 16);

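Finally, once the poses have been obtained, take care when reprojecting:

quat = pose[6], pose[3], pose[4], pose[5] # quat data from file is ordered as x, y, z, w

The quaternion ordering does not match: the first component of the quaternion has to be moved to the last position.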
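The reordering itself is a one-liner, but it is easy to apply in the wrong direction, so a small sketch may help. The two helper names are hypothetical, and which convention a given pose file or library uses is something to verify against your own data rather than take from this example.

```python
def wxyz_to_xyzw(quat):
    """Move the first component (w) to the end: (w, x, y, z) -> (x, y, z, w)."""
    w, x, y, z = quat
    return (x, y, z, w)


def xyzw_to_wxyz(quat):
    """Move the last component (w) to the front: (x, y, z, w) -> (w, x, y, z)."""
    x, y, z, w = quat
    return (w, x, y, z)


# The identity rotation in both conventions.
identity_wxyz = (1.0, 0.0, 0.0, 0.0)
print(wxyz_to_xyzw(identity_wxyz))

# Converting there and back returns the original quaternion.
print(xyzw_to_wxyz(wxyz_to_xyzw(identity_wxyz)) == identity_wxyz)
```

A quick sanity check is that an unrotated object should produce the identity quaternion, whose only non-zero component is w; its position in the tuple tells you which convention the data follows.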
