多情应笑我早生华发

- 0. Concepts

- 1. Installing the system (flashing)

- 2. JetPack SDK

- 3. NVIDIA SDK Manager

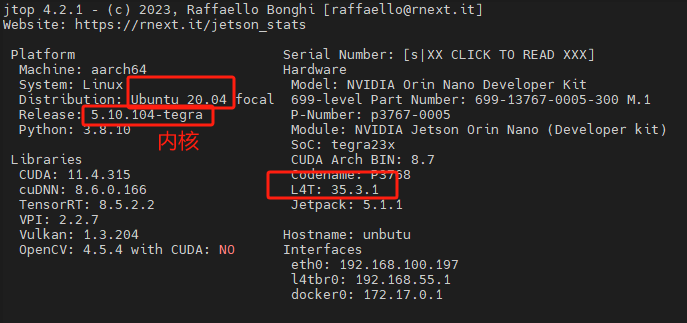

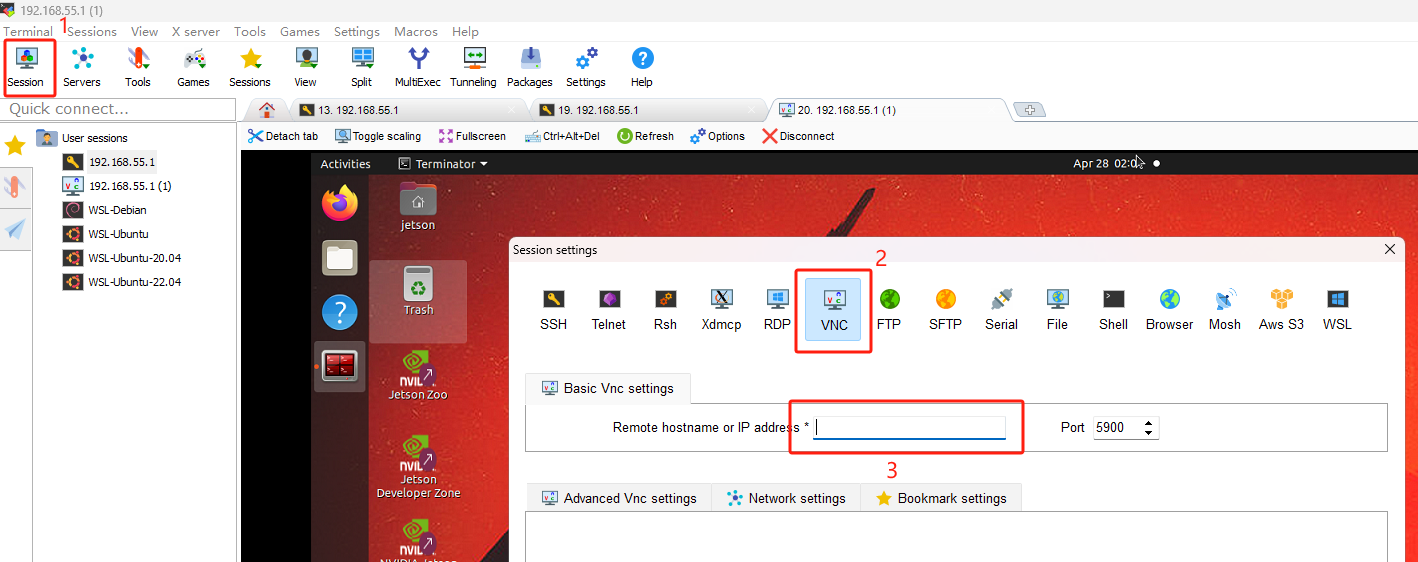

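- 4. SSH remote login, file transfer, and VNC remote desktop

- 5. Installing and using jtop

- 6. Installing Intel RealSense SDK 2.0

- 7. Installing ViSP

- 8. Installing VS Code

- 9. visp: grabbing D455 video frames through the RealSense SDK

- 10. visp: grabbing D455 video frames through OpenCV

- 11. visp: calibrating the D455 camera intrinsics

- 12. visp-d455: blob tracking

- 13. visp-d455: keypoint (feature point) tracking

- 14. visp-d455: edge tracking

- 15. visp-d455: generic model-based object detection and tracking with an RGB camera

- 16. visp-d455: generic model-based object detection and tracking with an RGB-D camera

- 17. visp-d455: AprilTag tracking, which enables camera pose estimation

- 18. visp-d455: [ViSP + the MegaPose deep learning algorithm] object tracking and pose estimation

- 19. visp-d455: AprilTag detection for pose estimation

- 20. Setting a static IP address

- 21++. See the follow-up posts for more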

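0. Concepts

- Forum

For asking questions and sharing projects.

- As a beginner, which should I read first: Developers, Documentation, Getting Started, or User Guide?

Start with Getting Started.

Then read the User Guide.

During development, consult the Documentation as needed.

Look through the use cases for development ideas.

For questions and troubleshooting, go to the Forum.

For illustrated and video tutorials, browse Tech.

For downloads, go to Download.

- Introduction to the Jetson Orin Nano developer kit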

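- Some useful navigation entries

These lead to most of what you may need: resources, tutorials, the community, related projects, use cases, downloads, and so on.

  - The Resource section of the Developers page

  - The Community section of the Developers ~> Jetson developer kits page

- Tutorials and video tutorials

For the Jetson Orin Nano developer kit, the tutorials can be reached as follows:

Developers

~> Browse by Solution Areas

~> Autonomous Machines

~> Jetson developer kits

~> Community

~> Tech, Forum

- What is the JetPack SDK?

JetPack provides a complete development environment for building AI applications.

JetPack contains the following components (modules):

  - An Ubuntu system

  This Ubuntu system is made up of the modules below. The Ubuntu version and the Jetson Linux version depend on the JetPack version.

  (1) Jetson Linux

  (2) The Linux kernel

  (3) The bootloader

  (4) The Ubuntu file system

  - GPU-accelerated computer vision libraries

  - SDKs for AI computing, such as CUDA, TensorRT, cuDNN, and VPI

  - Samples and documentation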

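- And what is NVIDIA SDK Manager?

As the name suggests, it is a tool for managing NVIDIA SDKs. For example, it can install the JetPack SDK or an operating system onto a developer kit.

  - It supports the different families of NVIDIA developer kits.

  - It can install different operating systems onto a developer kit.

  - It sets up the development environment quickly and conveniently.

  - A central location for all your software development needs.

  - Coordination of SDKs, library and driver combinations, and any needed compatibilities or dependencies.

  - Notifications for software updates keep your system up-to-date with the latest releases.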

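- SD Card image

The image file used to install the operating system on the developer kit for the first time; in essence it is JetPack.

- pre-configured SD card image

A customized image file for first-time installation of the operating system, which can come with packages such as ROS and OpenCV pre-installed.

- Which onboard (edge) AI platforms does the Jetson family include?

For example, the Jetson Orin Nano developer kit, among others.

- The Jetson ecosystem

Vendors that partner with the Jetson platform, compatible sensors, hardware devices, and so on.

- Indoor VSLAM with ROS + an Intel RealSense camera

Jetson Isaac ROS Visual SLAM Tutorial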

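1. Installing the system (flashing)

1.1 Flashing with an SD card

- Reference: Getting Started

- Summary: write the SD Card Image to an SD card, then boot the developer kit from that card to install the system for the first time.

- In essence: this installs the JetPack SDK onto the Jetson developer kit through the SD card. The JetPack SDK includes an Ubuntu system adapted to NVIDIA developer kits.

- This installation is the same process, with the same result, as installing JetPack from the SD Card Image, described in the next section.

1.2 Flashing with an SSD

- Reference: https://www.yahboom.com/study/Jetson-Orin-NANO

- Summary: write the SD Card Image to the SSD, then boot the developer kit from the SSD to install the system for the first time.

1.3 How to install plain Ubuntu?

How can plain Ubuntu be installed instead of Jetson Linux?

To be continued…

1.4 Expanding the partition

Install GParted and use it to resize the partition:

sudo apt install gparted

sudo gparted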

2. JetPack SDK

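2.1 Concepts

- JetPack overview

- JetPack documentation

- The JetPack SDK provides a complete development environment for building AI applications.

- JetPack contains the following components (modules):

  - An Ubuntu system

  This Ubuntu system is made up of the modules below. The Ubuntu version and the Jetson Linux version depend on the JetPack version.

    - Jetson Linux

    - The Linux kernel

    - The bootloader

    - The Ubuntu file system

  - GPU-accelerated computer vision libraries

  - SDKs for AI computing, such as CUDA, TensorRT, cuDNN, and VPI

  - Samples and documentation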

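- Difference between full JetPack and runtime JetPack:

full JetPack includes the samples and documentation, which helps during development, so it suits development environments. runtime JetPack leaves them out, is leaner, and suits production environments.

- How the Ubuntu system, the Linux kernel, Jetson Linux, and the L4T version relate

Ubuntu, Red Hat, Fedora, and so on are Linux distributions.

Linux itself is an open-source operating system kernel, and Ubuntu is one of the operating systems built on it. A "Linux version" usually means the Linux kernel version.

Ubuntu = Linux kernel + user-space software (system tools, package management, desktop environment).

Jetson Linux is NVIDIA's board support package for Jetson modules: it bundles a Linux kernel customized for the hardware with the bootloader, NVIDIA drivers, and an Ubuntu-based root file system.

L4T stands for Linux for Tegra. Jetson modules are built around NVIDIA Tegra SoCs, so L4T can be understood as the Linux operating system customized for Jetson; concretely, a customized Ubuntu.

The L4T version is therefore the Jetson Linux version.

- JetPack Archive release notes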
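To see which Jetson Linux (L4T) release a board is running, the first line of /etc/nv_tegra_release can be inspected. The sketch below is a minimal, non-authoritative helper: the function name l4t_version_from is made up for illustration, and it assumes the header of that file has the form "# R<major> (release), REVISION: <minor>, ...", which may differ between releases.

```shell
# Hypothetical helper: turn the first line of /etc/nv_tegra_release
# into a dotted L4T version such as 35.3.1.
# Assumes a header like "# R35 (release), REVISION: 3.1, GCID: ..."
l4t_version_from() {
  printf '%s\n' "$1" |
    sed -n 's/^# R\([0-9][0-9]*\) (release), REVISION: \([0-9][0-9.]*\),.*/\1.\2/p'
}

# On a Jetson board the file exists; elsewhere this branch is skipped.
if [ -r /etc/nv_tegra_release ]; then
  l4t_version_from "$(head -n 1 /etc/nv_tegra_release)"
fi
```

The kernel version is reported separately by uname -r; the cmake output later in this post shows 5.10.104-tegra, for example.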

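2.2 Installation and upgrade

- Installing the full JetPack with apt

Jetson Linux 36.2 must already be installed on the developer kit.

Reference: Package Management Tool

For example:

sudo apt show nvidia-jetpack

sudo apt update

sudo apt install nvidia-jetpack

- Installing selected JetPack packages with apt

An Ubuntu system must already be installed. Note that the cross-compilation packages in the example (cuda-cross-aarch64-12-2, vpi3-cross-aarch64-l4t) and the title of the reference suggest that this package set targets an Ubuntu host PC.

Reference: JetPack Debian Packages on Host

For example:

sudo apt-get install cuda-toolkit-12-2 cuda-cross-aarch64-12-2 nvsci libnvvpi3 vpi3-dev vpi3-cross-aarch64-l4t python3.9-vpi3 vpi3-samples vpi3-python-src nsight-systems-2023.4.3 nsight-graphics-for-embeddedlinux-2023.3.0.0

- List of JetPack Debian packages

Reference: List of JetPack Debian Packages

- Installing JetPack from the SD Card Image

Ubuntu does not need to be installed beforehand, because JetPack includes an Ubuntu system.

Reference: Installing JetPack by SD Card Image

- Installing JetPack with NVIDIA SDK Manager

Reference: Installing JetPack by NVIDIA SDK Manager

- Upgrading

Upgrading from JetPack 5 to JetPack 6 with apt is not supported.

Upgrade with NVIDIA SDK Manager, or uninstall and reinstall.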

2.3 Related links

- Jetson Software Documentation

- JetPack 6.0 Release Notes and Documentation

- Jetson Linux 36.2 Release Notes

- Jetson Linux Developer Guide

- Jetson AGX Orin Developer Kit User Guide

- Jetson Orin Nano Developer Kit User Guide

3. NVIDIA SDK Manager

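Reference: NVIDIA Software Development Kit (SDK) Manager

As the name suggests, NVIDIA SDK Manager is a tool for managing NVIDIA SDKs. For example, it can install the JetPack SDK or an operating system onto a developer kit. Its main uses include:

- It supports the different families of NVIDIA developer kits.

- It can install different operating systems onto a developer kit.

- It sets up the development environment quickly and conveniently.

- A central location for all your software development needs.

- Coordination of SDKs, library and driver combinations, and any needed compatibilities or dependencies.

- Notifications for software updates keep your system up-to-date with the latest releases.

To be continued…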

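4. SSH remote login, file transfer, and VNC remote desktop

- SSH: Secure Shell (also called Secure Socket Shell) is a protocol and Unix command interface for securely accessing a remote machine. Network administrators use it widely to control networks and other services remotely.

- Purpose: from Windows, log in to the onboard computer's shell over the network (for example with PuTTY), and transfer files in both directions between the local Windows PC and the remote Linux machine.

Common remote login tools include PuTTY, SSH, and Xshell. Which one works best?

First choice: MobaXterm. Pros: the Home Edition is free, it can SSH over either USB or the LAN, and it has an IP scanner. Cons: the Professional Edition is paid.

Second choice: PuTTY. Pros: open source and minimal. Cons, as far as the author found: SSH only over the LAN.

MobaXterm website.

PuTTY website.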

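4.1 SSH with MobaXterm

- SSH over a USB connection

  - Download and install MobaXterm.

  - Connect the PC to the Jetson Orin Nano developer kit with a USB cable.

  - Open MobaXterm.

  - Session ~> SSH ~> Remote host: the IP address is fixed at 192.168.55.1 ~> OK

- SSH over the LAN

  - Connect the PC and the Jetson Orin Nano developer kit to the same LAN, by Ethernet cable or Wi-Fi.

  - Find the IP address of the Jetson Orin Nano. Depending on the situation, there are three ways:

    - Open MobaXterm ~> Tools ~> Network scanner: a device with a green check mark in the SSH column accepts SSH connections.

    - Log in to the router and look it up.

    - If a monitor is attached to the Jetson Orin Nano, run the ifconfig command on it.

  - Open MobaXterm.

  - Session ~> SSH ~> Remote host: the IP address of the Jetson Orin Nano ~> OK

- With the factory image on the Yahboom SSD, the user name is jetson and the password is yahboom.

Notes

- The ll command is not recognized in the MobaXterm SSH terminal, or directories are not shown in blue?

Run source ~/.bashrc.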

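4.2 VNC remote desktop with MobaXterm + x11vnc

What is VNC for? VNC stands for Virtual Network Computing. Much like the Sunlogin (向日葵) remote-control software on Windows, it provides remote desktop control.

- Install and configure x11vnc

Reference: https://blog.csdn.net/jiexijihe945/article/details/126222983

- Start the VNC service

  - (1) First connect over SSH with MobaXterm.

  - (2) Start the VNC service:

sudo systemctl start x11vnc

  - (3) Check the VNC status:

systemctl status x11vnc

- Remote desktop in MobaXterm

USB connection, IP: 192.168.55.1

LAN connection, IP: the IP address of the developer kit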

4.3 File transfer

Drag the files from Windows into the blue-outlined area of the MobaXterm window.

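4.4 SSH with VS Code and opening a remote directory

- openssh-server must be installed on the remote Linux host:

sudo apt-get install openssh-server

- In VS Code on Windows, do the following:

  - Install the Remote - SSH extension

  - Connect for the first time

  - Check the configuration

  - Connect

  - Open the working directory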
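The Remote - SSH extension reads host entries from the OpenSSH client configuration file (typically ~/.ssh/config). As a rough sketch of what such entries could look like for the two connection modes used in this post, the helper below prints a Host block. The function name and the aliases jetson-usb and jetson-lan are hypothetical, the user name jetson is the Yahboom factory-image account mentioned earlier, and 192.168.1.50 is only a placeholder LAN address.

```shell
# Hypothetical helper: print an OpenSSH client config entry.
# $1: alias, $2: host name or IP address, $3: user name
ssh_host_block() {
  printf 'Host %s\n    HostName %s\n    User %s\n' "$1" "$2" "$3"
}

# USB connection: the address is fixed, as noted in section 4.1.
ssh_host_block jetson-usb 192.168.55.1 jetson
# LAN connection: 192.168.1.50 is a placeholder for the board's real address.
ssh_host_block jetson-lan 192.168.1.50 jetson
```

Appending this output to ~/.ssh/config should make the aliases selectable in Remote - SSH and usable from a terminal as ssh jetson-usb.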

5. Installing and using jtop

https://www.yahboom.com/build.html?id=6453&cid=590

6. Installing Intel RealSense SDK 2.0

Reference: https://blog.csdn.net/weixin_43321489/article/details/138284219

Disconnect the D455 camera from the developer kit first.

# Update the system. Better left commented out, since it can affect other installed software.

#sudo apt-get update && sudo apt-get upgrade && sudo apt-get dist-upgrade

# Install the build tools

sudo apt-get install git wget cmake build-essential

# Install the dependencies

sudo apt-get install libssl-dev libusb-1.0-0-dev pkg-config libgtk-3-dev

# To build the SDK examples with OpenGL support, also install these dependencies

sudo apt-get install libglfw3-dev libgl1-mesa-dev libglu1-mesa-dev

# Download the source; check out a suitable version if needed

mkdir -p ~/shd && cd ~/shd

git clone https://github.com/IntelRealSense/librealsense.git

cd librealsense

# RealSense permissions script (udev rules)

./scripts/setup_udev_rules.sh

# Build

mkdir build && cd build

cmake .. -DBUILD_EXAMPLES=true -DCMAKE_BUILD_TYPE=release -DBUILD_WITH_CUDA=true

make -j$(($(nproc)-1))

# Install; the default prefix is /usr/local

sudo make install

To verify the installation, run:

rs-depth or realsense-viewer
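The build command above uses $(nproc)-1 jobs to leave one core free. On a machine that reports a single core this expression evaluates to 0, and GNU make rejects -j0. A small guard, sketched below with a hypothetical function name, keeps the job count at 1 or more.

```shell
# Hypothetical helper: number of parallel make jobs, one less than the
# core count but never below 1.
# $1: number of cores (for example the output of nproc)
build_jobs() {
  if [ "$1" -gt 1 ]; then
    echo $(( $1 - 1 ))
  else
    echo 1
  fi
}

build_jobs 6   # prints 5
build_jobs 1   # prints 1

# Intended use inside the build directory (not run here):
#   make -j"$(build_jobs "$(nproc)")"
```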

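7. Installing ViSP

Reference: the ViSP guides for installing on Ubuntu and for installing on Jetson.

Steps:

- Install OpenCV

OpenCV is a dependency of ViSP.

If the latest OpenCV is not required, the prebuilt package can be installed directly with apt, for example: sudo apt-get install libopencv-dev

If the latest OpenCV is required, build it from source. This is the recommended approach; see the OpenCV post for the installation.

This post installs OpenCV built from source.

- Install the other dependencies

# Build tools

sudo apt-get install build-essential cmake-curses-gui git wget

# X11

sudo apt-get install libx11-dev

# Linear algebra

sudo apt-get install liblapack-dev libblas-dev libeigen3-dev libopenblas-dev libatlas-base-dev libgsl-dev

# Video and image acquisition

sudo apt-get install libv4l-dev libzbar-dev libpthread-stubs0-dev nlohmann-json3-dev

- Install libdc1394

libdc1394 is a ViSP dependency used to access IEEE 1394 (FireWire) cameras.

Note the difference between libdc1394-dev and libdc1394-22-dev: libdc1394-22 is the older package and only supports systems before Ubuntu 21.04.

Choose libdc1394-dev or libdc1394-22-dev according to what apt reports.

For example, if installing one of them prompts apt to remove OpenCV or other libraries, that package is incompatible with the current system; install the other one.

sudo apt-get install libdc1394-22-dev

- Install MAVSDK 1.4

The latest MAVSDK (v2.x) currently breaks the ViSP build, at least with the latest ViSP at the time of writing (3.6.x, per the cmake output below). Whether later ViSP releases fix this is unknown. The author worked around the problem; see the chapter "visp-d455: IBVS-based visual servoing of a Pixhawk drone".

To be safe, this post installs MAVSDK 1.4:

cd ~/shd

sudo apt-get update

sudo apt-get install build-essential cmake git

git clone https://github.com/mavlink/MAVSDK.git -b v1.4

cd MAVSDK

git branch

git submodule update --init --recursive

cmake -DCMAKE_BUILD_TYPE=Release -Bbuild/default -H.

sudo cmake --build build/default --target install -j4

- Download the ViSP source and build it

echo "export VISP_WS=$HOME/shd/visp-ws" >> ~/.bashrc

source ~/.bashrc

mkdir -p $VISP_WS

cd ~/shd/visp-ws

git clone https://github.com/lagadic/visp.git

mkdir -p ~/shd/visp-ws/visp-build

cd ~/shd/visp-ws/visp-build

cmake ../visp -DUSE_BLAS/LAPACK=GSL

make -j$(nproc)

Set the environment variable VISP_DIR:

echo "export VISP_DIR=$VISP_WS/visp-build" >> ~/.bashrc

source ~/.bashrc

Verify the installation:

cd ~/shd/visp-ws/visp-build/tutorial/image

./tutorial-image-display-scaled-auto

Reference cmake configuration output: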

jetson@unbutu:~/shd/visp-ws/visp-build$ cmake ../visp -DUSE_BLAS/LAPACK=GSL

-- Detected processor: aarch64

-- Could NOT find Python3 (missing: Python3_LIBRARIES Python3_INCLUDE_DIRS Development) (found version "3.9.5")

-- Required CMake version for Python bindings is 3.19.0,

but you have 3.16.3.

Python bindings generation will be deactivated.

-- Could NOT find MKL (missing: MKL_LIBRARIES MKL_INCLUDE_DIRS MKL_INTERFACE_LIBRARY MKL_SEQUENTIAL_LAYER_LIBRARY MKL_CORE_LIBRARY)

-- Found OpenBLAS libraries: /usr/lib/aarch64-linux-gnu/liblapack.so

-- Found OpenBLAS include: /usr/include/aarch64-linux-gnu

-- Found Atlas (include: /usr/include/aarch64-linux-gnu, library: /usr/lib/aarch64-linux-gnu/libatlas.so)

-- Could NOT find OpenGL (missing: OPENGL_opengl_LIBRARY OPENGL_glx_LIBRARY OPENGL_INCLUDE_DIR)

-- Try C99 flag = [-std=gnu99]

-- Performing Test C99_FLAG_DETECTED

-- Performing Test C99_FLAG_DETECTED - Success

-- mavsdk found

-- v4l2 found

-- Could NOT find FTIITSDK (missing: FTIITSDK_FT_LIBRARY FTIITSDK_COMMUNICATION_LIBRARY FTIITSDK_INCLUDE_DIR)

-- openmp found

-- eigen3 found

Package libusb was not found in the pkg-config search path.

Perhaps you should add the directory containing `libusb.pc'

to the PKG_CONFIG_PATH environment variable

No package 'libusb' found

-- libusb_1 found

-- realsense2 found

-- threads found

-- xml2 found

-- Found CUDA: /usr/local/cuda-11.4 (found suitable exact version "11.4")

-- opencv found

-- zlib found

-- x11 found

-- jpeg found

-- png found

-- zbar found

-- Found CUDA: /usr/local/cuda-11.4 (found version "11.4")

-- tensorrt found

-- Performing Test HAVE_FUNC_ISNAN

-- Performing Test HAVE_FUNC_ISNAN - Success

-- Performing Test HAVE_FUNC_STD_ISNAN

-- Performing Test HAVE_FUNC_STD_ISNAN - Success

-- Performing Test HAVE_FUNC__ISNAN

-- Performing Test HAVE_FUNC__ISNAN - Failed

-- Performing Test HAVE_FUNC_ISINF

-- Performing Test HAVE_FUNC_ISINF - Success

-- Performing Test HAVE_FUNC_STD_ISINF

-- Performing Test HAVE_FUNC_STD_ISINF - Success

-- Performing Test HAVE_FUNC_ROUND

-- Performing Test HAVE_FUNC_ROUND - Success

-- Performing Test HAVE_FUNC_STD_ROUND

-- Performing Test HAVE_FUNC_STD_ROUND - Success

-- Performing Test HAVE_FUNC_ERFC

-- Performing Test HAVE_FUNC_ERFC - Success

-- Performing Test HAVE_FUNC_STD_ERFC

-- Performing Test HAVE_FUNC_STD_ERFC - Success

-- Performing Test HAVE_FUNC_ISFINITE

-- Performing Test HAVE_FUNC_ISFINITE - Success

-- Performing Test HAVE_FUNC_STD_ISFINITE

-- Performing Test HAVE_FUNC_STD_ISFINITE - Success

-- Performing Test HAVE_FUNC__FINITE

-- Performing Test HAVE_FUNC__FINITE - Failed

-- Performing Test HAVE_FUNC_LOG1P

-- Performing Test HAVE_FUNC_LOG1P - Success

-- Could NOT find Doxygen (missing: DOXYGEN_EXECUTABLE)

-- Could NOT find JNI (missing: JAVA_AWT_LIBRARY JAVA_JVM_LIBRARY JAVA_INCLUDE_PATH JAVA_INCLUDE_PATH2 JAVA_AWT_INCLUDE_PATH)

--

-- ==========================================================

-- General configuration information for ViSP 3.6.1

--

-- Version control: v3.6.0-880-g659128117

--

-- Platform:

-- Timestamp: 2024-05-13T15:47:41Z

-- Host: Linux 5.10.104-tegra aarch64

-- CMake: 3.16.3

-- CMake generator: Unix Makefiles

-- CMake build tool: /usr/bin/make

-- Configuration: Release

--

-- System information:

-- Number of CPU logical cores: 6

-- Number of CPU physical cores: 1

-- Total physical memory (in MiB): 6480

-- OS name: Linux

-- OS release: 5.10.104-tegra

-- OS version: #1 SMP PREEMPT Sun Mar 19 07:55:28 PDT 2023

-- OS platform: aarch64

-- CPU name: Unknown family

-- Is the CPU 64-bit? yes

-- Does the CPU have FPU? no

-- CPU optimization: NEON

--

-- C/C++:

-- Built as dynamic libs?: yes

-- C++ Compiler: /usr/bin/c++ (ver 9.4.0)

-- C++ flags (Release): -Wall -Wextra -fopenmp -pthread -std=c++17 -fvisibility=hidden -fPIC -O3 -DNDEBUG

-- C++ flags (Debug): -Wall -Wextra -fopenmp -pthread -std=c++17 -fvisibility=hidden -fPIC -g

-- C Compiler: /usr/bin/cc

-- C flags (Release): -Wall -Wextra -fopenmp -pthread -std=c++17 -fvisibility=hidden -fPIC -O3 -DNDEBUG

-- C flags (Debug): -Wall -Wextra -fopenmp -pthread -std=c++17 -fvisibility=hidden -fPIC -g

-- Linker flags (Release):

-- Linker flags (Debug):

-- Use cxx standard: 17

--

-- ViSP modules:

-- To be built: core gui imgproc io java_bindings_generator klt me sensor ar blob robot visual_features vs vision detection mbt tt tt_mi

-- Disabled: -

-- Disabled by dependency: -

-- Unavailable: java

--

-- Python 3:

-- Interpreter: /usr/bin/python3.9 (ver 3.9.5)

--

-- Java:

-- ant: no

-- JNI: no

--

-- Python3 bindings: no

-- Requirements:

-- Python version > 3.7.0: python not found or too old (3.9.5)

-- Python in Virtual environment or conda:

-- ok

-- Pybind11 found: failed

-- CMake > 3.19.0: failed (3.16.3)

-- C++ standard > 201703L: ok (201703L)

--

-- Build options:

-- Build deprecated: yes

-- Build with moment combine: no

--

-- OpenCV:

-- Version: 4.9.0

-- Modules: calib3d core dnn features2d flann gapi highgui imgcodecs imgproc ml objdetect photo stitching video videoio alphamat aruco bgsegm bioinspired ccalib cudaarithm cudabgsegm cudacodec cudafeatures2d cudafilters cudaimgproc cudalegacy cudaobjdetect cudaoptflow cudastereo cudawarping cudev datasets dnn_objdetect dnn_superres dpm face fuzzy hdf hfs img_hash intensity_transform line_descriptor mcc optflow phase_unwrapping plot quality rapid reg rgbd saliency shape signal stereo structured_light superres surface_matching text tracking videostab wechat_qrcode xfeatures2d ximgproc xobjdetect xphoto

-- OpenCV dir: /usr/local/lib/cmake/opencv4

--

-- Mathematics:

-- Blas/Lapack: yes

-- \- Use MKL: no

-- \- Use OpenBLAS: no

-- \- Use Atlas: no

-- \- Use Netlib: no

-- \- Use GSL: yes (ver 2.5)

-- \- Use Lapack (built-in): no

-- Use Eigen3: yes (ver 3.3.7)

-- Use OpenCV: yes (ver 4.9.0)

--

-- Simulator:

-- Ogre simulator:

-- \- Use Ogre3D: no

-- \- Use OIS: no

-- Coin simulator:

-- \- Use Coin3D: no

-- \- Use SoWin: no

-- \- Use SoXt: no

-- \- Use SoQt: no

-- \- Use Qt5: no

-- \- Use Qt4: no

-- \- Use Qt3: no

--

-- Media I/O:

-- Use JPEG: yes (ver 80)

-- Use PNG: yes (ver 1.6.37)

-- \- Use ZLIB: yes (ver 1.2.11)

-- Use OpenCV: yes (ver 4.9.0)

-- Use stb_image (built-in): yes (ver 2.27.0)

-- Use TinyEXR (built-in): yes (ver 1.0.2)

-- Use simdlib (built-in): yes

--

-- Real robots:

-- Use Afma4: no

-- Use Afma6: no

-- Use Franka: no

-- Use Viper650: no

-- Use Viper850: no

-- Use ur_rtde: no

-- Use Kinova Jaco: no

-- Use aria (Pioneer): no

-- Use PTU46: no

-- Use Biclops PTU: no

-- Use Flir PTU SDK: no

-- Use MAVSDK: yes (ver 1.4.18)

-- Use Parrot ARSDK: no

-- \-Use ffmpeg: no

-- Use Virtuose: no

-- Use qbdevice (built-in): yes (ver 2.6.0)

-- Use takktile2 (built-in): yes (ver 1.0.0)

-- Use pololu (built-in): yes (ver 1.0.0)

--

-- GUI:

-- Use X11: yes

-- Use GTK: no

-- Use OpenCV: yes (ver 4.9.0)

-- Use GDI: no

-- Use Direct3D: no

--

-- Cameras:

-- Use DC1394-2.x: no

-- Use CMU 1394: no

-- Use V4L2: yes (ver 1.18.0)

-- Use directshow: no

-- Use OpenCV: yes (ver 4.9.0)

-- Use FLIR Flycapture: no

-- Use Basler Pylon: no

-- Use IDS uEye: no

--

-- RGB-D sensors:

-- Use Realsense: no

-- Use Realsense2: yes (ver 2.55.1)

-- Use Occipital Structure: no

-- Use Kinect: no

-- \- Use libfreenect: no

-- \- Use libusb-1: yes (ver 1.0.23)

-- \- Use std::thread: yes

-- Use PCL: no

-- \- Use VTK: no

--

-- F/T sensors:

-- Use atidaq (built-in): no

-- Use comedi: no

-- Use IIT SDK: no

--

-- Mocap:

-- Use Qualisys: no

-- Use Vicon: no

--

-- Detection:

-- Use zbar: yes (ver 0.23)

-- Use dmtx: no

-- Use AprilTag (built-in): yes (ver 3.1.1)

-- \- Use AprilTag big family: no

--

-- Misc:

-- Use Clipper (built-in): yes (ver 6.4.2)

-- Use pugixml (built-in): yes (ver 1.9.0)

-- Use libxml2: yes (ver 2.9.10)

-- Use json (nlohmann): no

--

-- Optimization:

-- Use OpenMP: yes

-- Use std::thread: yes

-- Use pthread: yes

-- Use pthread (built-in): no

-- Use simdlib (built-in): yes

--

-- DNN:

-- Use CUDA Toolkit: yes (ver 11.4)

-- Use TensorRT: yes (ver 8.5.2.2)

--

-- Documentation:

-- Use doxygen: no

-- \- Use mathjax: no

--

-- Tests and samples:

-- Use catch2 (built-in): yes (ver 2.13.7)

-- Tests: yes

-- Apps: yes

-- Demos: yes

-- Examples: yes

-- Tutorials: yes

-- Dataset found: no

--

-- Library dirs:

-- Eigen3 include dir: /usr/lib/cmake/eigen3

-- OpenCV dir: /usr/local/lib/cmake/opencv4

--

-- Install path: /usr/local

--

-- ==========================================================

-- Configuring done

-- Generating done

-- Build files have been written to: /home/jetson/shd/visp-ws/visp-build

jetson@unbutu:~/shd/visp-ws/visp-build$

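8. Installing VS Code

Download vscode.deb (the arm64 build, since the Jetson is an aarch64 machine).

Copy vscode.deb to the Ubuntu system with MobaXterm.

Install VS Code: sudo dpkg -i vscode.deb

Launch VS Code: type code on the command line.

Stop newly opened files from replacing the current editor tab: search the settings for workbench.editor.enablePreview (Workbench > Editor: Enable Preview) and untick it.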

9. visp: grabbing D455 video frames through the RealSense SDK

Run the ViSP tutorial:

cd ~/shd/visp-ws/visp-build/tutorial/grabber

./tutorial-grabber-realsense --seqname I%04d.pgm --record 1

Note: --seqname and --record must be used together; otherwise an "invalid directory" error is reported.
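The --seqname value is a printf-style pattern: %04d is replaced by the frame index, zero-padded to four digits. The shell's own printf can be used to preview the file names a pattern would yield. The function name below is hypothetical, and nothing is assumed here about which index the grabber starts from.

```shell
# Hypothetical helper: expand a printf-style sequence pattern for one frame index.
# $1: pattern containing a single %0Nd placeholder, $2: frame index
expand_seqname() {
  # The pattern is deliberately used as the printf format string.
  printf "$1\n" "$2"
}

expand_seqname 'I%04d.pgm' 1             # prints I0001.pgm
expand_seqname 'chessboard-%02d.jpg' 10  # prints chessboard-10.jpg
```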

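10. visp: grabbing D455 video frames through OpenCV

Running the tutorial-grabber-opencv example fails. A preliminary diagnosis is that the kernel patching step was skipped during the RealSense SDK installation.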

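11. visp: calibrating the D455 camera intrinsics

Reference: 8.4 Camera intrinsic calibration

- Capture a suitable number of images; 10 to 15 is a good range

cd ~/shd/visp-ws/visp-build/tutorial/grabber

mkdir ~/shd/visp-ws/cali-cam-d455

./tutorial-grabber-realsense --seqname ~/shd/visp-ws/cali-cam-d455/chessboard-%02d.jpg --record 1

This also produces a sample calibration file, ~/shd/visp-ws/cali-cam-d455/camera.xml, whose parameters are not correct. Delete this sample file before calibrating.

- Calibrate

cd ~/shd/visp-ws/cali-cam-d455

cp ~/shd/visp-ws/visp-build/example/calibration/calibrate-camera ./

cp ~/shd/visp-ws/visp-build/example/calibration/default-chessboard.cfg ./

rm camera.xml

./calibrate-camera default-chessboard.cfg

If the calibration program stops with an error here (the original post showed two possible error messages as screenshots), the cause is that no third-party library such as MKL, OpenBLAS, Atlas, GSL, or Netlib is being used to accelerate matrix operations. After cmake ../visp -DUSE_BLAS/LAPACK=GSL finishes, make sure the configuration summary reports "Blas/Lapack: yes" and "Use GSL: yes".

OpenBLAS, Atlas, and Netlib were all tried without success; GSL worked; MKL was not tested.

For installing and selecting MKL, OpenBLAS, Atlas, GSL, or Netlib, see: 8.1 Basic matrix and vector operations

- Install GSL, a third-party library that can speed up matrix operations

sudo apt install libgsl-dev

- Select GSL for matrix operations, then rebuild all of ViSP or only the calibrate-camera example

cd ~/shd/visp-ws/visp-build

rm -rf *

cmake ../visp -DUSE_BLAS/LAPACK=GSL

make -j$(nproc) calibrate-camera

or

make -j$(nproc)

- Successful calibration

cd ~/shd/visp-ws/cali-cam-d455

rm calibrate-camera camera.xml

cp ~/shd/visp-ws/visp-build/example/calibration/calibrate-camera ./

./calibrate-camera default-chessboard.cfg

The calibration results px, py, u0, v0 are saved in ~/shd/visp-ws/cali-cam-d455/camera.xml: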

<?xml version="1.0"?>

<root>

<!--This file stores intrinsic camera parameters used

in the vpCameraParameters Class of ViSP available

at https://visp.inria.fr/download/ .

It can be read with the parse method of

the vpXmlParserCamera class.-->

<camera>

<!--Name of the camera-->

<name>Camera</name>

<!--Size of the image on which camera calibration was performed-->

<image_width>640</image_width>

<image_height>480</image_height>

<!--Intrinsic camera parameters computed for each projection model-->

<model>

<!--Projection model type-->

<type>perspectiveProjWithoutDistortion</type>

<!--Pixel ratio-->

<px>398.84641712787538</px>

<py>398.68682503969529</py>

<!--Principal point-->

<u0>322.99756859139262</u0>

<v0>248.6004154301479</v0>

</model>

<model>

<!--Projection model type-->

<type>perspectiveProjWithDistortion</type>

<!--Pixel ratio-->

<px>388.57370857483153</px>

<py>388.41874618866353</py>

<!--Principal point-->

<u0>325.65955938183913</u0>

<v0>248.12836717904835</v0>

<!--Undistorted to distorted distortion parameter-->

<kud>-0.039473814232162549</kud>

<!--Distorted to undistorted distortion parameter-->

<kdu>0.040460699526659552</kdu>

</model>

<!--Additional information-->

<additional_information>

<date>2024/05/13 11:40:55</date>

<nb_calibration_images>7</nb_calibration_images>

<calibration_pattern_type>Chessboard</calibration_pattern_type>

<board_size>9x6</board_size>

<square_size>0.025</square_size>

<global_reprojection_error>

<without_distortion>0.191072</without_distortion>

<with_distortion>0.105808</with_distortion>

</global_reprojection_error>

<camera_poses>

<cMo>-0.1224468879 -0.09066443142 0.2851836326 0.396193237 0.0252419678 0.05725736687</cMo>

<cMo>-0.1122749815 -0.07131476187 0.2850666327 0.07173676775 -0.4428100689 0.03359819038</cMo>

<cMo>-0.1252028762 -0.09066211225 0.2824452077 0.1124871165 -0.555598502 0.05762187366</cMo>

<cMo>-0.1741429871 -0.05962608505 0.3464753725 0.2196880035 -0.3503487077 -0.1767877418</cMo>

<cMo>-0.02636216889 -0.0568963793 0.4076841021 0.01830059873 0.6156466408 0.210153884</cMo>

<cMo>-0.06688647124 -0.1001443402 0.2733689325 0.2880379529 0.01325446236 0.4713165184</cMo>

<cMo>-0.1100997782 -0.1000982268 0.3432905219 -0.07095469824 0.2366782342 0.393682507</cMo>

<cMo_dist>-0.1242547143 -0.09017472147 0.2748890938 0.3974270231 0.0157942535 0.05879537619</cMo_dist>

<cMo_dist>-0.1142069932 -0.0709658145 0.2755640618 0.07116784978 -0.4471194347 0.03401523573</cMo_dist>

<cMo_dist>-0.126982605 -0.09026828346 0.2720565051 0.1118477587 -0.5628451697 0.05778465003</cMo_dist>

<cMo_dist>-0.1761208319 -0.05913361779 0.3334596217 0.2209953356 -0.3609393418 -0.1755910688</cMo_dist>

<cMo_dist>-0.02911523232 -0.05641192436 0.3968714019 0.0171518882 0.6106532922 0.2093655367</cMo_dist>

<cMo_dist>-0.06871420006 -0.09981702989 0.2645287482 0.2850990662 0.009511734891 0.4721361553</cMo_dist>

<cMo_dist>-0.1126448284 -0.09979328033 0.3330543328 -0.07206900533 0.220845779 0.3932979184</cMo_dist>

</camera_poses>

</additional_information>

</camera>

</root>
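Within ViSP this file is normally read with the vpXmlParserCamera class, as the comment inside the XML notes. For a quick look from the shell, the sketch below pulls one parameter of one projection model out of the file. It is only an illustration: the function name camera_param is hypothetical, and the awk script assumes one XML element per line, as in the file above, rather than doing real XML parsing.

```shell
# Hypothetical helper: print one intrinsic parameter from a ViSP camera.xml.
# $1: XML file, $2: projection model type, $3: tag name (px, py, u0, v0, kud, kdu)
# Assumes one element per line; this is not a general XML parser.
camera_param() {
  awk -v type="$2" -v tag="$3" '
    # Track whether the current <model> block has the requested type.
    /<type>/ { found_model = (index($0, "<type>" type "</type>") > 0) }
    # Inside that block, print the text of the first matching element.
    found_model && match($0, "<" tag ">[^<]*") {
      print substr($0, RSTART + length(tag) + 2, RLENGTH - length(tag) - 2)
      exit
    }
  ' "$1"
}

# Print the model with distortion if a camera.xml is present in this directory.
if [ -r camera.xml ]; then
  for p in px py u0 v0 kud kdu; do
    printf '%s = %s\n' "$p" "$(camera_param camera.xml perspectiveProjWithDistortion "$p")"
  done
fi
```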

Example calibration output:

jetson@unbutu:~/shd/visp-ws/cali-cam-d455$ ./calibrate-camera default-chessboard.cfg

Settings from config file: default-chessboard.cfg

grid width : 9

grid height: 6

square size: 0.025

pattern : CHESSBOARD

input seq : chessboard-%02d.jpg

tempo : 1

Settings from command line options:

Ouput xml file : camera.xml

Camera name : Camera

Initialize camera parameters with default values

Camera parameters used for initialization:

Camera parameters for perspective projection without distortion:

px = 600 py = 600

u0 = 320 v0 = 240

Process frame: chessboard-04.jpg, grid detection status: 1, image used as input data

Process frame: chessboard-05.jpg, grid detection status: 1, image used as input data

Process frame: chessboard-06.jpg, grid detection status: 1, image used as input data

Process frame: chessboard-07.jpg, grid detection status: 1, image used as input data

Process frame: chessboard-08.jpg, grid detection status: 1, image used as input data

Process frame: chessboard-09.jpg, grid detection status: 1, image used as input data

Process frame: chessboard-10.jpg, grid detection status: 1, image used as input data

Calibration without distortion in progress on 7 images...

Camera parameters for perspective projection without distortion:

px = 398.8464171 py = 398.686825

u0 = 322.9975686 v0 = 248.6004154

Image chessboard-04.jpg reprojection error: 0.2249227586

Image chessboard-05.jpg reprojection error: 0.1894938163

Image chessboard-06.jpg reprojection error: 0.1602951323

Image chessboard-07.jpg reprojection error: 0.1386196645

Image chessboard-08.jpg reprojection error: 0.148481482

Image chessboard-09.jpg reprojection error: 0.2398637129

Image chessboard-10.jpg reprojection error: 0.2111191561

Global reprojection error: 0.191072441

Camera parameters without distortion successfully saved in "camera.xml"

Calibration with distortion in progress on 7 images...

Camera parameters for perspective projection with distortion:

px = 388.5737086 py = 388.4187462

u0 = 325.6595594 v0 = 248.1283672

kud = -0.03947381423

kdu = 0.04046069953

Image chessboard-04.jpg reprojection error: 0.1195661908

Image chessboard-05.jpg reprojection error: 0.1193498118

Image chessboard-06.jpg reprojection error: 0.1057798103

Image chessboard-07.jpg reprojection error: 0.08989710063

Image chessboard-08.jpg reprojection error: 0.09226062422

Image chessboard-09.jpg reprojection error: 0.1115839283

Image chessboard-10.jpg reprojection error: 0.09794506681

Global reprojection error: 0.1058083044

This tool computes the line fitting error (mean distance error) on image points extracted from the raw distorted image.

chessboard-04.jpg line 1 fitting error on distorted points: 0.180956872 ; on undistorted points: 0.02950599891

chessboard-04.jpg line 2 fitting error on distorted points: 0.1228108594 ; on undistorted points: 0.01468993169

chessboard-04.jpg line 3 fitting error on distorted points: 0.07217472377 ; on undistorted points: 0.01824309706

chessboard-04.jpg line 4 fitting error on distorted points: 0.02996954372 ; on undistorted points: 0.02391568145

chessboard-04.jpg line 5 fitting error on distorted points: 0.02206760241 ; on undistorted points: 0.02048522567

chessboard-04.jpg line 6 fitting error on distorted points: 0.1117242205 ; on undistorted points: 0.06897936988

This tool computes the line fitting error (mean distance error) on image points extracted from the undistorted image (vpImageTools::undistort()).

chessboard-04.jpg undistorted image, line 1 fitting error: 0.05715642892

chessboard-04.jpg undistorted image, line 2 fitting error: 0.03421786

chessboard-04.jpg undistorted image, line 3 fitting error: 0.02696133557

chessboard-04.jpg undistorted image, line 4 fitting error: 0.02182783737

chessboard-04.jpg undistorted image, line 5 fitting error: 0.01848111712

chessboard-04.jpg undistorted image, line 6 fitting error: 0.07628415665

This tool computes the line fitting error (mean distance error) on image points extracted from the raw distorted image.

chessboard-05.jpg line 1 fitting error on distorted points: 0.07842274598 ; on undistorted points: 0.04073278419

chessboard-05.jpg line 2 fitting error on distorted points: 0.02894332818 ; on undistorted points: 0.03381049647

chessboard-05.jpg line 3 fitting error on distorted points: 0.03171134573 ; on undistorted points: 0.03028086031

chessboard-05.jpg line 4 fitting error on distorted points: 0.08958890743 ; on undistorted points: 0.08256816471

chessboard-05.jpg line 5 fitting error on distorted points: 0.07121212097 ; on undistorted points: 0.05129840474

chessboard-05.jpg line 6 fitting error on distorted points: 0.09545491062 ; on undistorted points: 0.03808806659

This tool computes the line fitting error (mean distance error) on image points extracted from the undistorted image (vpImageTools::undistort()).

chessboard-05.jpg undistorted image, line 1 fitting error: 0.03642440367

chessboard-05.jpg undistorted image, line 2 fitting error: 0.03209987361

chessboard-05.jpg undistorted image, line 3 fitting error: 0.0281838577

chessboard-05.jpg undistorted image, line 4 fitting error: 0.08309325865

chessboard-05.jpg undistorted image, line 5 fitting error: 0.04991418802

chessboard-05.jpg undistorted image, line 6 fitting error: 0.0292585384

This tool computes the line fitting error (mean distance error) on image points extracted from the raw distorted image.

chessboard-06.jpg line 1 fitting error on distorted points: 0.05561697668 ; on undistorted points: 0.0662133449

chessboard-06.jpg line 2 fitting error on distorted points: 0.05927166434 ; on undistorted points: 0.02312656332

chessboard-06.jpg line 3 fitting error on distorted points: 0.02796679038 ; on undistorted points: 0.03354572908

chessboard-06.jpg line 4 fitting error on distorted points: 0.05702547089 ; on undistorted points: 0.05405971444

chessboard-06.jpg line 5 fitting error on distorted points: 0.0584693254 ; on undistorted points: 0.04685735151

chessboard-06.jpg line 6 fitting error on distorted points: 0.03431135262 ; on undistorted points: 0.03052371163

This tool computes the line fitting error (mean distance error) on image points extracted from the undistorted image (vpImageTools::undistort()).

chessboard-06.jpg undistorted image, line 1 fitting error: 0.06296087909

chessboard-06.jpg undistorted image, line 2 fitting error: 0.04294818457

chessboard-06.jpg undistorted image, line 3 fitting error: 0.05168819921

chessboard-06.jpg undistorted image, line 4 fitting error: 0.0556851262

chessboard-06.jpg undistorted image, line 5 fitting error: 0.05056370979

chessboard-06.jpg undistorted image, line 6 fitting error: 0.04425359694

This tool computes the line fitting error (mean distance error) on image points extracted from the raw distorted image.

chessboard-07.jpg line 1 fitting error on distorted points:
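The global reprojection error in the log above appears to be the root mean square (RMS) of the per-image errors rather than their plain average (which would be about 0.1875). That is what one would expect if every image contributes the same number of chessboard corners. This reading is inferred from the numbers in the log, not taken from the ViSP sources. The check below recomputes it for the calibration without distortion; the function name is hypothetical.

```shell
# Hypothetical helper: root mean square of the numbers given as arguments.
rms() {
  printf '%s\n' "$@" | awk '{ s += $1 * $1 } END { printf "%.6f\n", sqrt(s / NR) }'
}

# Per-image reprojection errors (without distortion) copied from the log above.
rms 0.2249227586 0.1894938163 0.1602951323 0.1386196645 \
    0.148481482 0.2398637129 0.2111191561
# prints 0.191072, matching "Global reprojection error: 0.191072441"
```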
