Version used

- https://github.com/ultralytics/yolov5/tree/v5.0

Installing onnx

    torch.onnx.export(model, img, f, verbose=False, opset_version=12, input_names=['images'],
                      output_names=['classes', 'boxes'] if y is None else ['output'],
                      dynamic_axes={'images': {0: 'batch', 2: 'height', 3: 'width'},  # size(1,3,640,640)
                                    'output': {0: 'batch', 2: 'y', 3: 'x'}} if opt.dynamic else None)

The export call uses opset_version=12, so install an onnx release that supports that opset (1.12.0 is used here).
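
The conditional arguments in the export call above are easy to misread: the trailing "if opt.dynamic else None" applies to the whole dynamic_axes dictionary, not only to its 'output' entry. As a rough sketch, the hypothetical helper below (export_kwargs is not part of YOLOv5) resolves the same expressions without needing torch:

```python
def export_kwargs(dynamic, dry_run_output_is_none=False):
    """Resolve the conditional arguments the way the export call does.

    Hypothetical helper for illustration only; it builds plain dictionaries
    and never calls torch.onnx.export.
    """
    # `y is None` in export.py corresponds to dry_run_output_is_none here.
    output_names = ['classes', 'boxes'] if dry_run_output_is_none else ['output']
    # The conditional covers the entire dictionary literal.
    dynamic_axes = {
        'images': {0: 'batch', 2: 'height', 3: 'width'},
        'output': {0: 'batch', 2: 'y', 3: 'x'},
    } if dynamic else None
    return {
        'opset_version': 12,
        'input_names': ['images'],
        'output_names': output_names,
        'dynamic_axes': dynamic_axes,
    }


if __name__ == "__main__":
    print(export_kwargs(dynamic=False))
    print(export_kwargs(dynamic=True))
```

Without the dynamic option, dynamic_axes is None and the exported graph keeps the fixed input size of the dry-run image.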

    :~/Documents/pachong/yolov5$ pip install onnx==1.12.0
    Collecting onnx==1.12.0
      Downloading onnx-1.12.0-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (13.1 MB)
         ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 13.1/13.1 MB 32.9 kB/s eta 0:00:00
    Requirement already satisfied: numpy>=1.16.6 in /home/pdd/anaconda3/envs/yolo/lib/python3.7/site-packages (from onnx==1.12.0) (1.21.6)
    Requirement already satisfied: typing-extensions>=3.6.2.1 in /home/pdd/anaconda3/envs/yolo/lib/python3.7/site-packages (from onnx==1.12.0) (4.4.0)
    WARNING: Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ProtocolError('Connection aborted.', ConnectionResetError(104, '连接被对方重设'))': /simple/protobuf/
    Collecting protobuf<=3.20.1,>=3.12.2
      Downloading protobuf-3.20.1-cp37-cp37m-manylinux_2_5_x86_64.manylinux1_x86_64.whl (1.0 MB)
         ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.0/1.0 MB 26.1 kB/s eta 0:00:00
    Installing collected packages: protobuf, onnx
      Attempting uninstall: protobuf
        Found existing installation: protobuf 3.20.3
        Uninstalling protobuf-3.20.3:
          Successfully uninstalled protobuf-3.20.3
      Attempting uninstall: onnx
        Found existing installation: onnx 1.13.0
        Uninstalling onnx-1.13.0:
          Successfully uninstalled onnx-1.13.0
    ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.
    tensorboard 2.10.1 requires protobuf<3.20,>=3.9.2, but you have protobuf 3.20.1 which is incompatible.
    Successfully installed onnx-1.12.0 protobuf-3.20.1
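
The log ends with a dependency conflict: tensorboard 2.10.1 wants protobuf<3.20, while onnx 1.12.0 pulled in protobuf 3.20.1. The install still completes, but it is worth confirming which versions actually ended up in the environment. A minimal sketch, assuming Python 3.8 or newer (importlib.metadata is not in the standard library of the Python 3.7 environment shown in the log); installed_versions is a hypothetical helper name:

```python
from importlib import metadata  # standard library from Python 3.8 onward


def installed_versions(packages):
    """Return {package name: installed version, or None if not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    report = installed_versions(["onnx", "protobuf", "tensorboard"])
    for name, version in report.items():
        print(f"{name}: {version or 'not installed'}")
```

On Python 3.7, running pip show for the same three packages gives the same information.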

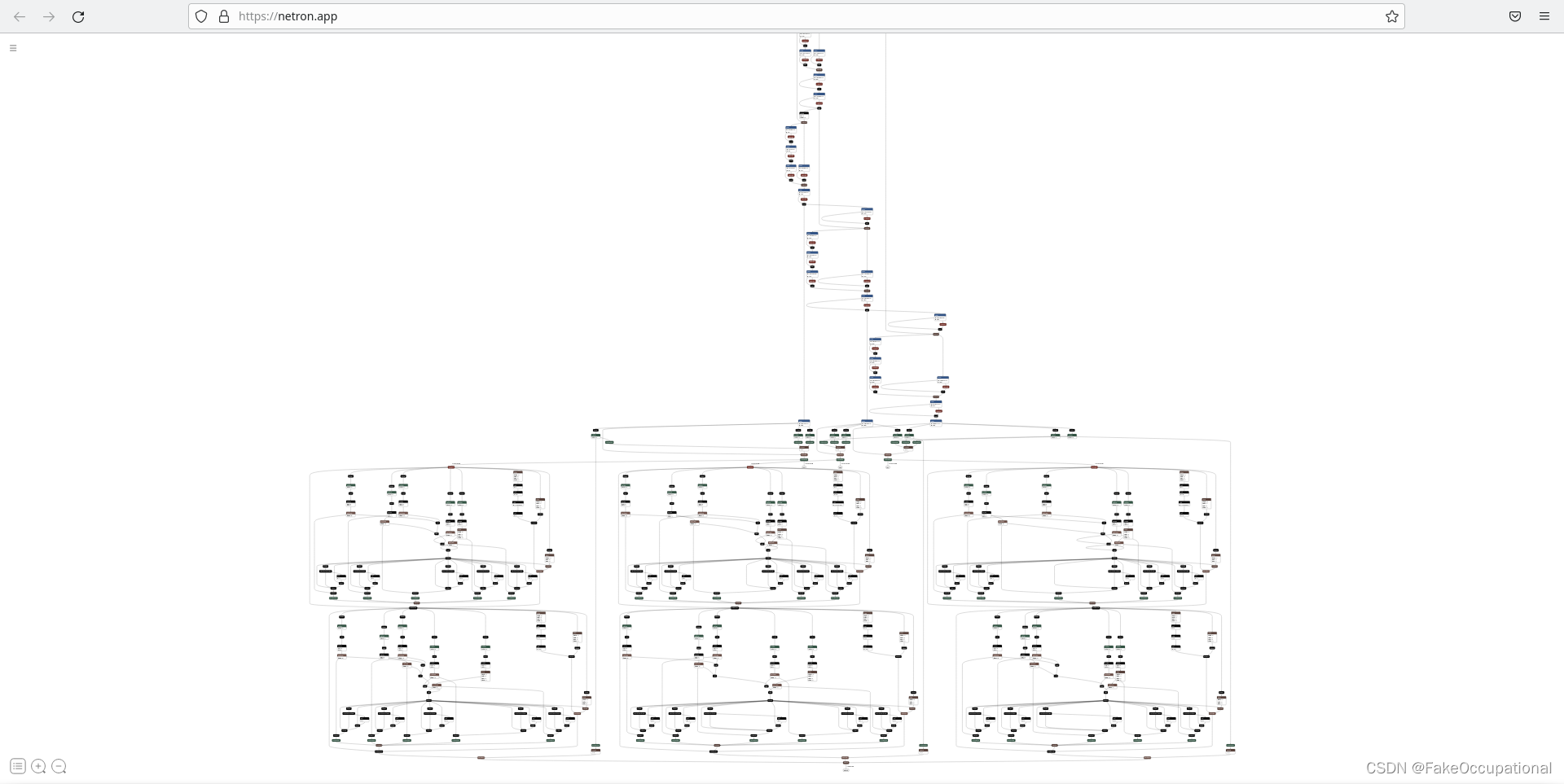

Exporting the model

    python ./models/export.py --weights ./weights/yolov5s.pt --img 640 --batch 1

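Given a 640x640 input image, the model outputs the following three tensors, one per detection scale (strides 8, 16, and 32). Each has shape (batch, anchors per scale, grid height, grid width, 5 + number of classes), so 85 = 5 + 80 for the 80 COCO classes.

Source: https://medium.com/axinc-ai/yolov5-the-latest-model-for-object-detection-b13320ec516b

    (1, 3, 80, 80, 85)  # scale 0, stride 8
    (1, 3, 40, 40, 85)  # scale 1, stride 16
    (1, 3, 20, 20, 85)  # scale 2, stride 32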
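
These shapes follow directly from the input size. The sketch below recomputes them; head_shapes is a hypothetical helper, and the strides (8, 16, 32), three anchors per scale, and 80 classes are assumed defaults for yolov5s rather than values read from the weights file:

```python
def head_shapes(img_size=640, strides=(8, 16, 32), na=3, nc=80, batch=1):
    """Shapes of the raw per-scale outputs for a square input image.

    na: anchors per scale, nc: number of classes (assumed defaults).
    """
    no = nc + 5  # x, y, w, h, objectness + class scores
    return [(batch, na, img_size // s, img_size // s, no) for s in strides]


if __name__ == "__main__":
    for shape in head_shapes():
        print(shape)
```

Changing img_size shows how the grid dimensions scale with the --img argument while the last dimension stays fixed.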

With --grid, the YOLOv5 output grids are flattened and concatenated into a single output:

    python ./models/export.py --weights ./weights/yolov5s.pt --img 640 --batch 1 --grid
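
The size of that single output can be worked out by hand: each scale contributes anchors x grid height x grid width rows, and the rows from all scales are concatenated. A small sketch under the same assumed defaults as before (strides 8, 16, 32, three anchors per scale, 80 classes); flattened_shape is a hypothetical helper:

```python
def flattened_shape(img_size=640, strides=(8, 16, 32), na=3, nc=80, batch=1):
    """Shape of the concatenated --grid output for a square input image."""
    # One row per (anchor, grid cell) pair, summed over all detection scales.
    rows = sum(na * (img_size // s) ** 2 for s in strides)
    return (batch, rows, nc + 5)


if __name__ == "__main__":
    print(flattened_shape())  # 3 * (80*80 + 40*40 + 20*20) rows of 85 values
```

For a 640x640 input this gives 3 x (6400 + 1600 + 400) = 25200 rows, each holding 85 values.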

Where --grid takes effect

    # Input
    img = torch.zeros(opt.batch_size, 3, *opt.img_size).to(device)  # image size(1,3,320,192) iDetection
    # Update model
    for k, m in model.named_modules():
        m._non_persistent_buffers_set = set()  # pytorch 1.6.0 compatibility
        if isinstance(m, models.common.Conv):  # assign export-friendly activations
            if isinstance(m.act, nn.Hardswish):
                m.act = Hardswish()
            elif isinstance(m.act, nn.SiLU):
                m.act = SiLU()
        # elif isinstance(m, models.yolo.Detect):
        #     m.forward = m.forward_export  # assign forward (optional)
    model.model[-1].export = not opt.grid  # set Detect() layer grid export
    y = model(img)  # dry run
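
The line that sets model.model[-1].export interacts with "self.training |= self.export" in Detect.forward (listed at the end of this post): when --grid is absent, export is True, the layer behaves as if it were training, and it returns the raw per-scale tensors. The sketch below reproduces only that boolean logic; detect_returns is a hypothetical name, and it assumes the model was loaded in eval mode:

```python
def detect_returns(grid, module_training=False):
    """Describe what Detect.forward returns for a given --grid setting.

    module_training stands in for self.training before forward runs
    (assumed False, i.e. the model is in eval mode).
    """
    export = not grid                      # model.model[-1].export = not opt.grid
    training = module_training or export   # self.training |= self.export
    if training:
        return "raw per-scale tensors"
    return "decoded concatenated predictions plus raw tensors"


if __name__ == "__main__":
    for flag in (False, True):
        print(f"--grid={flag}: {detect_returns(flag)}")
```

So the three separate tensors appear without --grid, and the single concatenated output appears with it.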

The export attribute, which is specific to Detect

    class Detect(nn.Module):
        stride = None  # strides computed during build
        export = False  # onnx export
        def __init__(self, nc=80, anchors=(), ch=()):  # detection layer
            super(Detect, self).__init__()
            self.nc = nc  # number of classes
            self.no = nc + 5  # number of outputs per anchor
            self.nl = len(anchors)  # number of detection layers
            self.na = len(anchors[0]) // 2  # number of anchors
            self.grid = [torch.zeros(1)] * self.nl  # init grid
            a = torch.tensor(anchors).float().view(self.nl, -1, 2)
            self.register_buffer('anchors', a)  # shape(nl,na,2)
            self.register_buffer('anchor_grid', a.clone().view(self.nl, 1, -1, 1, 1, 2))  # shape(nl,1,na,1,1,2)
            self.m = nn.ModuleList(nn.Conv2d(x, self.no * self.na, 1) for x in ch)  # output conv

Other details

    class Focus(nn.Module):
        # Focus wh information into c-space
        def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True):  # ch_in, ch_out, kernel, stride, padding, groups
            super(Focus, self).__init__()
            self.conv = Conv(c1 * 4, c2, k, s, p, g, act)
            # self.contract = Contract(gain=2)
        # def forward(self, x):  # x(b,c,w,h) -> y(b,4c,w/2,h/2)
        #     return self.conv(torch.cat([x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]], 1))
        #     # return self.conv(self.contract(x))
        def forward(self, x):  # x(b,c,w,h) -> y(b,4c,w/2,h/2)
            if torch.onnx.is_in_onnx_export():  # TODO
                a, b = x[..., ::2, :].transpose(-2, -1), x[..., 1::2, :].transpose(-2, -1)
                c = torch.cat([a[..., ::2, :], b[..., ::2, :], a[..., 1::2, :], b[..., 1::2, :]], 1).transpose(-2, -1)
                return self.conv(c)
            else:
                return self.conv(torch.cat([x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]], 1))
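
The export-time branch of Focus.forward reaches the same four sub-grids as the regular branch, but it only ever strides along one axis at a time, transposing in between. The check below illustrates this on a single 4x4 channel using nested lists in place of tensors; it is a pure-Python sketch with hypothetical helper names, not the torch code itself:

```python
def transpose(m):
    """Swap the two axes of a 2D nested list."""
    return [list(row) for row in zip(*m)]


def focus_direct(x):
    """Regular branch: slice rows and columns with stride 2 in one step."""
    return [
        [row[::2] for row in x[::2]],     # x[..., ::2, ::2]
        [row[::2] for row in x[1::2]],    # x[..., 1::2, ::2]
        [row[1::2] for row in x[::2]],    # x[..., ::2, 1::2]
        [row[1::2] for row in x[1::2]],   # x[..., 1::2, 1::2]
    ]


def focus_export(x):
    """Export branch: stride over rows, transpose, stride again, transpose back."""
    a = transpose(x[::2])    # x[..., ::2, :].transpose(-2, -1)
    b = transpose(x[1::2])   # x[..., 1::2, :].transpose(-2, -1)
    parts = [a[::2], b[::2], a[1::2], b[1::2]]
    return [transpose(p) for p in parts]


if __name__ == "__main__":
    x = [[r * 4 + c for c in range(4)] for r in range(4)]
    print(focus_direct(x) == focus_export(x))
```

Both functions return the four 2x2 sub-grids in the same order, which is why the rewritten forward produces the same input to self.conv.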

simplifier

- I did not actually end up using this, because exporting to ONNX again after installing it caused problems.

- https://github.com/daquexian/onnx-simplifier

    pip install onnx-simplifier -i https://pypi.tuna.tsinghua.edu.cn/simple
    python -m onnxsim ./weights/yolov5s.onnx ./weights/yolov5.onnx

Export with ONNX Simplifier fails when --grid is used: https://github.com/ultralytics/yolov5/issues/2558

    python ./models/export.py --weights ./weights/best.pt --img 640 --batch 1

Reference: https://www.cnblogs.com/ryzemagic/p/17089528.html

CG

- python models/export.py --grid --simplify https://github.com/ultralytics/yolov5/issues/1597

- b[:, 4] += math.log(8 / (640 / s) ** 2) # obj (8 objects per 640 image) raises "RuntimeError: a view of a leaf Variable that requires grad is being used in an in-place operation." https://blog.csdn.net/zhangxiangweide/article/details/125781044

- Many blog posts seem to use this version of export.py: https://huggingface.co/spaces/darylfunggg/xray-hand-joint-detection/blob/main/yolov5/export.py

First, install onnx-simplifier with pip install onnx-simplifier.

The simplification code is then:

    # ONNX export
    try:
        import onnx
        from onnxsim import simplify
        print('\nStarting ONNX export with onnx %s...' % onnx.__version__)
        f = opt.weights.replace('.pt', '.onnx')  # filename
        torch.onnx.export(model, img, f, verbose=False, opset_version=12, input_names=['images'],
                          output_names=['output'] if y is None else ['output'])
        # Checks
        onnx_model = onnx.load(f)  # load onnx model
        model_simp, check = simplify(onnx_model)
        assert check, "Simplified ONNX model could not be validated"
        onnx.save(model_simp, f)
        # print(onnx.helper.printable_graph(onnx_model.graph))  # print a human readable model
        print('ONNX export success, saved as %s' % f)
    except Exception as e:
        print('ONNX export failure: %s' % e)

    class Detect(nn.Module):
        stride = None  # strides computed during build
        export = False  # onnx export
        def __init__(self, nc=80, anchors=(), ch=()):  # detection layer
            super(Detect, self).__init__()
            self.nc = nc  # number of classes
            self.no = nc + 5  # number of outputs per anchor
            self.nl = len(anchors)  # number of detection layers
            self.na = len(anchors[0]) // 2  # number of anchors
            self.grid = [torch.zeros(1)] * self.nl  # init grid
            a = torch.tensor(anchors).float().view(self.nl, -1, 2)
            self.register_buffer('anchors', a)  # shape(nl,na,2)
            self.register_buffer('anchor_grid', a.clone().view(self.nl, 1, -1, 1, 1, 2))  # shape(nl,1,na,1,1,2)
            self.m = nn.ModuleList(nn.Conv2d(x, self.no * self.na, 1) for x in ch)  # output conv
        def forward(self, x):
            # x = x.copy()  # for profiling
            z = []  # inference output
            self.training |= self.export
            for i in range(self.nl):
                x[i] = self.m[i](x[i])  # conv
                bs, _, ny, nx = x[i].shape  # x(bs,255,20,20) to x(bs,3,20,20,85)
                x[i] = x[i].view(bs, self.na, self.no, ny, nx).permute(0, 1, 3, 4, 2).contiguous()
                if not self.training:  # inference
                    if self.grid[i].shape[2:4] != x[i].shape[2:4]:
                        self.grid[i] = self._make_grid(nx, ny).to(x[i].device)
                    y = x[i].sigmoid()
                    y[..., 0:2] = (y[..., 0:2] * 2. - 0.5 + self.grid[i]) * self.stride[i]  # xy
                    y[..., 2:4] = (y[..., 2:4] * 2) ** 2 * self.anchor_grid[i]  # wh
                    z.append(y.view(bs, -1, self.no))
            return x if self.training else (torch.cat(z, 1), x)
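
The two decoding lines in forward (marked xy and wh) are what the --grid export bakes into the ONNX graph. For a single prediction they reduce to the scalar arithmetic below. This is a sketch: decode_box is a hypothetical helper, and the grid cell, stride, and anchor size in the usage lines are example values, not ones read from a model:

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def decode_box(raw_xywh, grid_xy, stride, anchor_wh):
    """Decode one raw (x, y, w, h) prediction into pixel units.

    grid_xy: (column, row) of the grid cell, stride: pixels per cell,
    anchor_wh: anchor size in pixels, as stored in anchor_grid.
    """
    sx, sy, sw, sh = (sigmoid(v) for v in raw_xywh)
    gx, gy = grid_xy
    aw, ah = anchor_wh
    x = (sx * 2.0 - 0.5 + gx) * stride   # y[..., 0:2] line in forward
    y = (sy * 2.0 - 0.5 + gy) * stride
    w = (sw * 2.0) ** 2 * aw             # y[..., 2:4] line in forward
    h = (sh * 2.0) ** 2 * ah
    return x, y, w, h


if __name__ == "__main__":
    # All-zero logits land in the cell centre and reproduce the anchor size.
    print(decode_box((0.0, 0.0, 0.0, 0.0), grid_xy=(3, 4), stride=8, anchor_wh=(10, 13)))
```

Because the sigmoid output lies in (0, 1), the centre can move from 0.5 cells before the cell origin to 1.5 cells past it, and the width and height stay below four times the anchor size.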
