1. Overview

Features:

- batch processing, not streaming
- open source
- pipelines defined in Python
- useful web UI
- integrations with external systems

architecture

lifecycle

DAG

DAG stands for Directed Acyclic Graph:

- nodes: tasks (executed in dependency order)
- edges: dependencies between tasks

Both the nodes and the edges are defined in Python.
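The node/edge idea can be made concrete without installing Airflow. The sketch below uses Python's standard-library `graphlib` (Python 3.9+) and is only an illustration of the concept; Airflow does not use `graphlib` internally. The task names match the later `task1`/`task2`/`task3` example. `execution_order` and `is_acyclic` are hypothetical helper names.

```python
from graphlib import CycleError, TopologicalSorter

# Each key is a node (task); its value is the set of nodes it depends on,
# i.e. the edges pointing into it.
dag = {
    "task1": set(),
    "task2": {"task1"},
    "task3": {"task2"},
}


def execution_order(graph):
    """Return one valid execution order: every task comes after its dependencies."""
    return list(TopologicalSorter(graph).static_order())


def is_acyclic(graph):
    """A graph with a cycle has no valid order, so TopologicalSorter raises CycleError."""
    try:
        execution_order(graph)
        return True
    except CycleError:
        return False


print(execution_order(dag))                  # ['task1', 'task2', 'task3']
print(is_acyclic({"a": {"b"}, "b": {"a"}}))  # False
```

The "acyclic" requirement is what guarantees that such an order exists. If the edges form a loop, no task in the loop can ever run first.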

2. Working with Python

Defining a DAG in Python

This Airflow pipeline implements a simple behavior:

print a greeting -> print the current time -> repeat after 5 seconds
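The code for that pipeline is not reproduced here. The following plain-Python sketch only illustrates the intended behavior and is not Airflow code. `print_greeting`, `print_time`, and `run_loop` are hypothetical names.

```python
import time
from datetime import datetime


def print_greeting():
    print("Hello!")


def print_time():
    print(datetime.now().strftime("%H:%M:%S"))


def run_loop(iterations, interval_seconds=5.0, sleep=time.sleep):
    """Run greeting -> time, waiting interval_seconds between rounds.

    Returns the names of the executed steps, in order.
    """
    executed = []
    for i in range(iterations):
        for step in (print_greeting, print_time):  # fixed order: greeting, then time
            step()
            executed.append(step.__name__)
        if i < iterations - 1:  # no wait after the last round
            sleep(interval_seconds)
    return executed


if __name__ == "__main__":
    run_loop(iterations=3)
```

In Airflow, each step would be a task, and the repetition would come from the DAG's schedule rather than from a `sleep` call. The injectable `sleep` parameter only makes the loop easy to test.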

Here is another example:

```python
# import the libraries
from datetime import timedelta

# The DAG object; we'll need this to instantiate a DAG
from airflow import DAG

# Operators; we need this to write tasks!
# (Airflow 2.x module path; airflow.operators.bash_operator is the deprecated 1.x location)
from airflow.operators.bash import BashOperator

# This makes scheduling easy
# (days_ago is deprecated in recent 2.x releases and removed in Airflow 3)
from airflow.utils.dates import days_ago

# defining DAG arguments
# You can override them on a per-task basis during operator initialization
default_args = {
    'owner': 'Ramesh Sannareddy',
    'start_date': days_ago(0),
    'email': ['ramesh@somemail.com'],
    'email_on_failure': False,
    'email_on_retry': False,
    'retries': 1,
    'retry_delay': timedelta(minutes=5),
}

# defining the DAG
dag = DAG(
    'dummy_dag',
    default_args=default_args,
    description='My first DAG',
    # run once per minute (Airflow 2.4+ also accepts schedule=; Airflow 3 removed schedule_interval)
    schedule_interval=timedelta(minutes=1),
)

# define the tasks

# define the first task
task1 = BashOperator(
    task_id='task1',
    bash_command='sleep 1',
    dag=dag,
)

# define the second task
task2 = BashOperator(
    task_id='task2',
    bash_command='sleep 2',
    dag=dag,
)

# define the third task
task3 = BashOperator(
    task_id='task3',
    bash_command='sleep 3',
    dag=dag,
)

# task pipeline: task1 runs first, then task2, then task3
task1 >> task2 >> task3
```
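The `task1 >> task2 >> task3` line works because Python lets a class overload `>>` through `__rshift__`. The toy model below shows the chaining trick. The `Task` class is hypothetical and is not Airflow's operator class, although Airflow's operators behave similarly and also accept lists of tasks.

```python
class Task:
    """Toy stand-in for an Airflow operator (illustrative only)."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.downstream = []  # tasks that run after this one

    def __rshift__(self, other):
        # a >> b records b as downstream of a, then returns b,
        # so a >> b >> c is evaluated as (a >> b) >> c == b >> c.
        self.downstream.append(other)
        return other


task1, task2, task3 = Task("task1"), Task("task2"), Task("task3")
task1 >> task2 >> task3

print([t.task_id for t in task1.downstream])  # ['task2']
print([t.task_id for t in task2.downstream])  # ['task3']
```

Because `>>` is left-associative and returns its right operand, one line can declare a whole chain of edges.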

Once the file is written, copy it into Airflow's dags directory:

```bash
cp dummy_dag.py $AIRFLOW_HOME/dags
```
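The destination in that command depends on `AIRFLOW_HOME`. The sketch below resolves that folder in Python. It assumes the default configuration, in which `AIRFLOW_HOME` falls back to `~/airflow` when unset and the dags folder is `AIRFLOW_HOME/dags`. Both can be changed in `airflow.cfg`, and `dags_folder` here is a hypothetical helper, not an Airflow API.

```python
import os
from pathlib import Path


def dags_folder(environ=None):
    """Return the folder that `cp dummy_dag.py $AIRFLOW_HOME/dags` copies into.

    Assumes default settings: AIRFLOW_HOME defaults to ~/airflow and the
    dags folder is AIRFLOW_HOME/dags.
    """
    env = os.environ if environ is None else environ
    home = Path(env.get("AIRFLOW_HOME", str(Path.home() / "airflow"))).expanduser()
    return home / "dags"


print(dags_folder())  # where the current environment would look
```

Passing a dictionary instead of reading `os.environ` directly keeps the function easy to check against different settings.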

Scheduler

- deploys tasks to an array of workers
- follows the dependencies defined in your DAG
- triggers the first DAG run
- triggers subsequent runs according to the schedule
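When the first and later runs happen can be surprising. With a `timedelta` schedule, Airflow 2 triggers each run at the *end* of the interval it covers. So the first run fires one interval after `start_date`, not at `start_date` itself. The sketch below models that rule and assumes catchup is enabled (the Airflow 2 default), so every missed interval gets a run. `due_runs` is a hypothetical helper, not part of Airflow.

```python
from datetime import datetime, timedelta


def due_runs(start_date, interval, now):
    """List (interval_start, interval_end) for every run triggered by `now`.

    Each run covers one interval and is triggered at interval_end.
    """
    if interval <= timedelta(0):
        raise ValueError("interval must be positive")
    runs = []
    interval_start = start_date
    while interval_start + interval <= now:
        runs.append((interval_start, interval_start + interval))
        interval_start += interval
    return runs


start = datetime(2024, 1, 1, 0, 0)
for begin, end in due_runs(start, timedelta(minutes=1), now=datetime(2024, 1, 1, 0, 3)):
    print(f"run for {begin:%H:%M}-{end:%H:%M}, triggered at {end:%H:%M}")
# run for 00:00-00:01, triggered at 00:01
# run for 00:01-00:02, triggered at 00:02
# run for 00:02-00:03, triggered at 00:03
```

For `dummy_dag` above (one-minute interval), this means no run appears during the first minute after the start date.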

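3. CLI and Web UI

The web UI is largely self-explanatory, so I won't go into it here.

Once Airflow is running, the following CLI commands are useful: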

```bash
# List all DAGs
airflow dags list

# List all tasks of the DAG named `example_bash_operator`
airflow tasks list example_bash_operator

# Unpause (start) a DAG
airflow dags unpause tutorial

# Pause a DAG
airflow dags pause tutorial
```
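Pausing and unpausing are also exposed by the Airflow 2 stable REST API: `PATCH /api/v1/dags/{dag_id}` with an `is_paused` field. The sketch below only *builds* such a request with the standard library. It assumes the webserver listens on the default `http://localhost:8080` and that an API auth backend is configured. Authentication is left out, and `set_paused_request` is a hypothetical helper name.

```python
import json
import urllib.request


def set_paused_request(dag_id, paused, base_url="http://localhost:8080"):
    """Build (but do not send) the REST call equivalent to `airflow dags pause/unpause`."""
    body = json.dumps({"is_paused": paused}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/api/v1/dags/{dag_id}",
        data=body,
        method="PATCH",
        headers={"Content-Type": "application/json"},
    )


req = set_paused_request("tutorial", paused=False)  # same effect as `airflow dags unpause tutorial`
print(req.get_method(), req.full_url)  # PATCH http://localhost:8080/api/v1/dags/tutorial
```

Actually sending the request with `urllib.request.urlopen(req)` would also require credentials. Newer Airflow releases use a different API version path, so check the API reference for your version.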

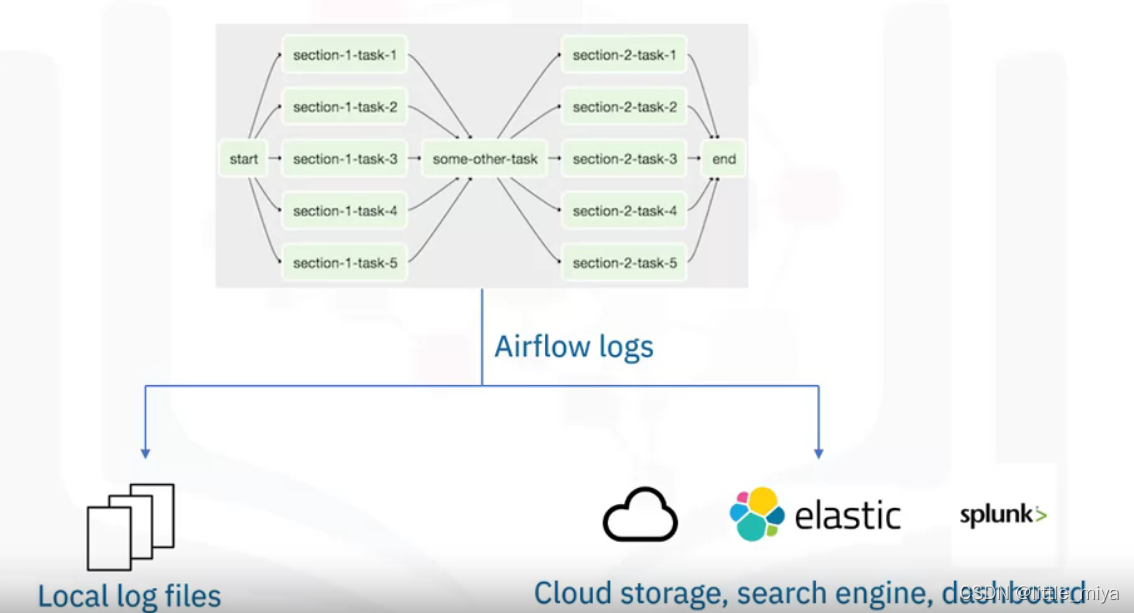

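4. Airflow monitoring and logging

The default log file naming scheme is as follows:

Logs can be stored locally, in cloud storage, in search engines, or in log analysis tools.

Monitoring metrics

Monitoring workflow: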
