Source paper first, then a reproduction of it:

1 Overview

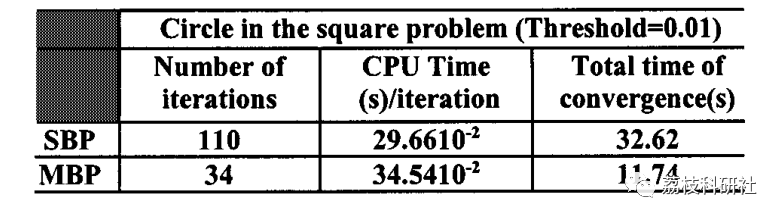

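The standard backpropagation (SBP) algorithm has become the standard algorithm for training multilayer perceptrons, as shown in Figure 1. It is a generalized least mean squares (LMS) algorithm that minimizes a criterion equal to the sum of squared errors between the actual and desired outputs. This criterion is:

Link to the full paper: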
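The equation itself did not survive in this copy of the post. As a hedged reconstruction, a sum-of-squared-errors criterion of the kind described usually takes the form below. The symbols $d_j$ (desired output of output neuron $j$), $y_j$ (actual output) and $n_L$ (number of output neurons) are notation assumed here, not taken from the paper.

```latex
E_p = \sum_{j=1}^{n_L} \left( d_j - y_j \right)^2
```

For the single-output 3-8-1 network used in the code, this would reduce to one squared error per training sample, which matches the accumulation `eSq = eSq + (e^2)` in the script.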

https://ieeexplore.ieee.org/document/914537

2 Results

W1 =
-0.1900 -0.7425 -2.9507
-0.3955 0.2059 -0.8937
-0.4751 0.5315 -1.2644
-2.5390 -1.8319 3.2741
-1.0816 -0.6534 0.7954
-1.4622 -0.0331 -0.4283
-0.3125 0.0840 -0.8470
-0.6496 -0.2922 -0.6272

b1 =
9.4243
1.5431
0.1860
2.4630
0.1097
0.5390
1.6335
-0.4229

W2 =
-1.6282 0.5796 0.2008 1.0366 0.9238 -0.3099 0.6122 -0.0681

b2 = 0.3461

Mean Error Square at Iter = 2000

eSq = 0.0016

eSq_v = 0.0022

eSq_t = 0.0017

Trained

Elapsed time is 1540.739160 seconds.

No. of Iterations = 2001

Final Mean Squared Error at Iter = 2001

eSq = 0.0016

Partial code:
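As a quick sanity check on the 3-8-1 structure, the reported weights can be plugged into a single forward pass. This is only an illustrative sketch: the input vector `x` is hypothetical (it is not taken from the data files), and `logsigFn` and `poslinFn` are local stand-ins for the toolbox functions `logsig` and `poslin`, defined here so the snippet runs without the toolbox.

```matlab
% Weights and biases as printed in the run above (3 inputs, 8 hidden units, 1 output).
W1 = [-0.1900, -0.7425, -2.9507;
      -0.3955,  0.2059, -0.8937;
      -0.4751,  0.5315, -1.2644;
      -2.5390, -1.8319,  3.2741;
      -1.0816, -0.6534,  0.7954;
      -1.4622, -0.0331, -0.4283;
      -0.3125,  0.0840, -0.8470;
      -0.6496, -0.2922, -0.6272];
b1 = [9.4243; 1.5431; 0.1860; 2.4630; 0.1097; 0.5390; 1.6335; -0.4229];
W2 = [-1.6282, 0.5796, 0.2008, 1.0366, 0.9238, -0.3099, 0.6122, -0.0681];
b2 = 0.3461;

% Stand-ins for the toolbox activations (assumed equivalents of logsig and poslin).
logsigFn = @(n) 1 ./ (1 + exp(-n));   % logistic sigmoid
poslinFn = @(n) max(n, 0);            % positive linear (clips negatives to 0)

% Hypothetical input: three NIR/red band ratios, for illustration only.
x = [1.2; 1.1; 1.0];

a1 = logsigFn(W1 * x + b1);   % hidden layer output, 8-by-1
a2 = poslinFn(W2 * a1 + b2);  % network output, scalar

fprintf('Estimated weed fraction for the sample input: %.4f\n', a2);
```

The matrix shapes are the useful part here: `W1` is 8-by-3, so each input is a 3-by-1 column, exactly as `pg(:,p)` is used in the script.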

%****Load the Input File******

load ./nnm_train.txt

redData = nnm_train(:,2);

nir1Data = [nnm_train(:,3) ./ redData]';

nir2Data = [nnm_train(:,4) ./ redData]';

nir3Data = [nnm_train(:,5) ./ redData]';

pg = [ nir1Data; nir2Data; nir3Data];

targetData = nnm_train(:,7) ;

%*******Validate Data*******

load ./nnm_validate.txt

redData_v = nnm_validate(:,2);

nir1Data_v = [nnm_validate(:,3) ./ redData_v]';

nir2Data_v = [nnm_validate(:,4) ./ redData_v]';

nir3Data_v = [nnm_validate(:,5) ./ redData_v]';

targetData_v = nnm_validate(:,7) ;

pValidate = [nir1Data_v; nir2Data_v; nir3Data_v];

%*******Test Data*******

load ./nnm_test.txt

redData_t = nnm_test(:,2);

nir1Data_t = [nnm_test(:,3) ./ redData_t]';

nir2Data_t = [nnm_test(:,4) ./ redData_t]';

nir3Data_t = [nnm_test(:,5) ./ redData_t]';

targetData_t = nnm_test(:,7) ;

pTest = [nir1Data_t; nir2Data_t; nir3Data_t];

%---Plot the Original Function----

pa = 1 : length(targetData_t);

actLine = 0:0.1:0.8;

subplot(2,1,2), plot (actLine, actLine); legend('Actual');%scatter(targetData_t, targetData_t,'^b');

hold on

%-----Randomized First Layer Weights & Bias-------

fprintf( 'Initial Weights and Biases\n');

%****3-8-1******

W1 = rand(8, 3) - 0.5; %Uniform distribution [-0.5 0.5]

b1 = rand(8, 1) - 0.5;

W2 = rand(1, 8) - 0.5;

%-----Randomized Second Layer Bias------

b2 = [ -0.5+rand ];

if (lambda == 0) % Save the Weights and Bias on SBP

W1_initial = W1;

b1_initial = b1;

W2_initial = W2;

b2_initial = b2;

else % Reuse the Weights and Bias on MBP

W1 = W1_initial;

b1 = b1_initial;

W2 = W2_initial;

b2 = b2_initial;

end

%-----RandPermutation of Input Training Set-------

j = randperm(length(targetData));

j_v = randperm(length(targetData_v));

j_t = randperm(length(targetData_t));

%--Set Max. Iterations---

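maxIter = 2000;

earlyStopCount = 0; % Reset the early-stopping counter before each run (assumed; the excerpt does not show where it is initialized)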

tic

for train = 1 : maxIter +1

eSq = 0; eSq_v = 0; eSq_t = 0;

% **** Mean Square Error ****

%if ( train <= maxIter )

for p = 1 : length(targetData)

n1 = W1*pg(:,p)+ b1 ;

a1 = logsig(n1);

a2 = poslin( W2 * a1 + b2 );

e = targetData(p) - a2 ;

eSq = eSq + (e^2);

end

eSq = eSq/length(targetData);

%*******Validate Error**********

for p = 1 : length(targetData_v)

n1 = W1*pValidate(:,p)+ b1 ;

a1 = logsig(n1);

a2 = poslin( W2 * a1 + b2 );

e = targetData_v(p) - a2 ;

eSq_v = eSq_v + (e^2);

end

eSq_v = eSq_v/length(targetData_v);

%********Use Validate Error for Early Stopping********

if ( train > 200 )

earlyStopCount = earlyStopCount + 1;

% fprintf('EarlyStop = %d', earlyStopCount);

if (earlyStopCount == 50)

if ( (prev_eSq_v - eSq_v) < 0 )

W2 = W2_25;

b2 = b2_25;

W1 = W1_25;

b1 = b1_25;

break;

end

prev_eSq_v = eSq_v; % Store previous validation error

earlyStopCount = 0; % Reset Early Stopping

%----Save the weights and biases-------

disp('Saving'); eSq

W2_25 = W2;

b2_25 = b2;

W1_25 = W1;

b1_25 = b1;

end

else

% ----Initialize the Weights----

if ( train == 200 )

W2_25 = W2;

b2_25 = b2;

W1_25 = W1;

b1_25 = b1;

end

prev_eSq_v = eSq_v; % Store previous validation error

end

%*******Test Error**********

for p = 1 : length(targetData_t)

n1 = W1*pTest(:,p)+ b1 ;

a1 = logsig(n1);

a2 = poslin( W2 * a1 + b2 );

e = targetData_t(p) - a2 ;

eSq_t = eSq_t + (e^2);

end

eSq_t = eSq_t/length(targetData_t);

if (train == 1 || mod (train, 100) == 0 )

fprintf( 'Weights and Biases at Iter = %d\n',train);

fprintf('W1');

(W1)

fprintf('b1')

b1

fprintf('W2')

W2

fprintf('b2')

b2

fprintf( 'Mean Error Square at Iter = %d',train);

eSq

eSq_v

eSq_t

end

subplot(2,1,1),

xlabel('No. of Iterations');

ylabel('Mean Square Error');

title('Convergence Characteristics ');

loglog(train, eSq, '*r'); hold on

loglog(train, eSq_v, '*g'); hold on

loglog(train, eSq_t, '*c'); hold on

legend('Training Error', 'Validation Error', 'Testing Error');

%************Train Data**********************

% Update only when the error is decreasing

% if ( earlyStopCount == 0 )

for p = 1 : length(targetData)

%----Output of the 1st Layer-----------

n1 = W1*pg(:,j(p))+ b1 ;

a1 = logsig(n1) ;

%-----Output of the 2nd Layer----------

n2 = W2 * a1 + b2;

a2 = (poslin( n2 ));

%a2 = (logsig( n2 ));

%-----Error-----

t = targetData(j(p));

e = t - a2;

%******CALCULATE THE SENSITIVITIES************

%-----Derivative of logsig function----

%f1 = dlogsig(n1,a1)

% f1 = [(1-a1(1))*a1(1) 0; 0 (1-a1(2))*a1(2)] ;

f1 = diag((1-a1).*a1);

%-----Derivative of the output activation (taken as 1, as for purelin; exact for poslin only when n2 > 0)---

f2 = 1;

%f2 = diag((1-a2).*a2);

%------Last Layer (2nd) Sensitivity----

S2 = -2 * f2 * e;

S2mbp = -((t)-n2); % Negated so that subtracting this term in the updates below reduces the linear error (t - n2)

%------First Layer Sensitivity---------

S1 = f1 *(W2' * S2);

S1mbp = f1 * (W2' * S2mbp);

%******UPDATE THE WEIGHTS**********************

%-----Second Layer Weights & Bias------

W2 = W2 - (alpha * S2*(a1)') - (alpha * lambda * S2mbp *(a1)');

b2 = b2 - alpha * S2 - (alpha * lambda * S2mbp);

%-----First Layer Weights & Bias-------

W1 = W1 - alpha * S1*(pg(:,j(p)))' - (alpha * lambda * S1mbp *(pg(:,j(p)))');

b1 = b1 - alpha * S1 - (alpha * lambda * S1mbp );

% end

end

%end

% End of 21 Input Training Sets

% ********** Function Approx. *****************

if (train == 1 || mod (train, 100) == 0 || train == maxIter )

disp('Trained');

subplot(2,1,2),

xlabel('Actual Fraction of Weeds in 3 sq feet of grass area');

ylabel('Estimated Fraction of Weeds in 3 sq feet of grass area');

title('Correlation of Estimated Value with respect to the Actual Function using Standard Backpropagation');

legend('Estimated');

for p = 1 : length(targetData_t)

n1 = W1*pTest(:,p)+ b1 ; % Test Data

a1 = logsig(n1) ;

a2(p) = (poslin( W2 * a1 + b2 ));

end %end for

%scatter(targetData_t, a2); hold on;

end

end

toc

%-------End of Iterations------------

%***Plot of Final Function******

subplot(2,1,2),

for p = 1 : length(targetData_t)

n1 = W1*pTest(:,p)+ b1 ; % Test Data

a1 = logsig(n1) ;

a2(p) = (poslin( W2 * a1 + b2 ));

end %end for
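The per-sample update inside the training loop above can be isolated into a small self-contained sketch. It covers only the standard backpropagation terms (the `lambda` terms of the modified algorithm are left out), and every number in it, including the weights, the input `x`, the target `t` and the learning rate `alpha`, is an illustrative assumption. `logsigFn` and `poslinFn` are local stand-ins for `logsig` and `poslin`.

```matlab
% Stand-ins for the toolbox activations (assumed equivalents of logsig and poslin).
logsigFn = @(n) 1 ./ (1 + exp(-n));
poslinFn = @(n) max(n, 0);

% Toy 3-8-1 network with deterministic, made-up initial values.
W1 = 0.1 * ones(8, 3);
b1 = zeros(8, 1);
W2 = 0.1 * ones(1, 8);
b2 = 0.1;

x     = [1.2; 1.1; 1.0];  % hypothetical input sample
t     = 0.5;              % hypothetical target
alpha = 0.05;             % hypothetical learning rate

% Forward pass and error before the update.
a1 = logsigFn(W1 * x + b1);
a2 = poslinFn(W2 * a1 + b2);
eBefore = t - a2;

% Sensitivities, mirroring the script: S2 = -2 * f2 * e and S1 = f1 * (W2' * S2).
f1 = diag((1 - a1) .* a1);   % derivative of the logistic sigmoid
f2 = 1;                      % output derivative taken as 1
S2 = -2 * f2 * eBefore;
S1 = f1 * (W2' * S2);

% Gradient-descent updates for both layers.
W2 = W2 - alpha * S2 * a1';
b2 = b2 - alpha * S2;
W1 = W1 - alpha * S1 * x';
b1 = b1 - alpha * S1;

% Forward pass and error after the update.
a1 = logsigFn(W1 * x + b1);
a2 = poslinFn(W2 * a1 + b2);
eAfter = t - a2;

fprintf('|e| before: %.4f, |e| after: %.4f\n', abs(eBefore), abs(eAfter));
```

With a small step size, one update on a sample should reduce that sample's error, which makes this a convenient check before running the full 2000-iteration loop.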

3 References

[1] S. Abid, F. Fnaiech and M. Najim, "A fast feedforward training algorithm using a modified form of the standard backpropagation algorithm," in IEEE Transactions on Neural Networks, vol. 12, no. 2, pp. 424-430, March 2001, doi: 10.1109/72.914537.
