结构运动-摄像机运动估计

目标

在本教程中,您将学习如何使用重建api的摄像机运动估计:

- 加载文件与跟踪二维点,并建立容器的所有帧。

- libmv重建传递途径运行。

- 使用即显示结果。

从运动中恢复结构SfM模块安装

使用情况和结果

为了运行这个示例,我们需要指定的路径跟踪点文件,相机的焦距长度除了中心投影坐标(以像素为单位)。 你可以找到一个示例文件/数据/ desktop_trakcks.txt样品

./example_sfm_trajectory_reconstruction desktop_tracks.txt 1914 640 360

./sfm_test ../desktop_tracks.txt 1914 640 360

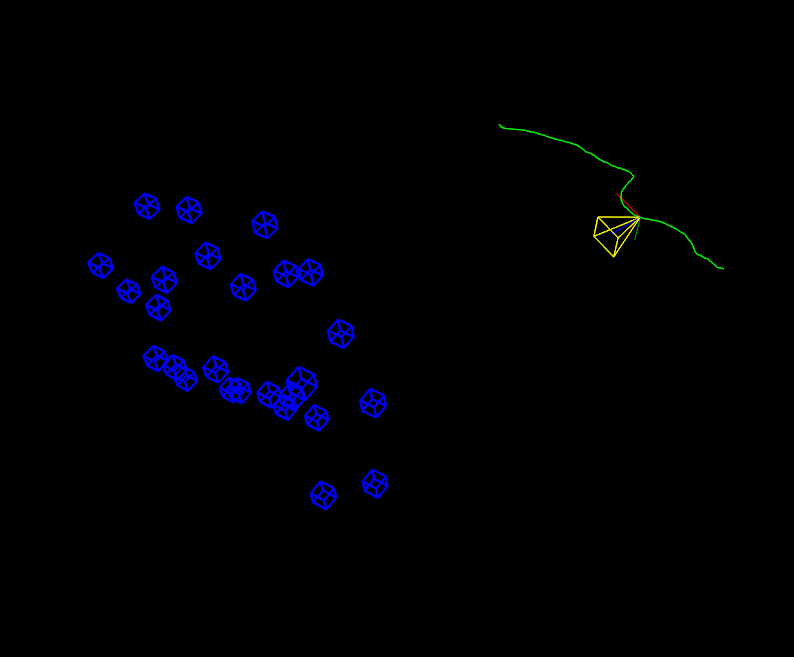

下面的图片显示了获得相机运动从追踪获得2 d点:

Code

代码

#define CERES_FOUND 1

#include <opencv2/sfm.hpp>

#include <opencv2/core.hpp>

#include <opencv2/sfm.hpp>

#include <opencv2/viz.hpp>

#include <opencv2/sfm/reconstruct.hpp>

#include <opencv2/sfm/simple_pipeline.hpp>

#include "/usr/local/include/opencv2/sfm/reconstruct.hpp"

//#include "reconstruct2.hpp"

#include <opencv2/calib3d.hpp>

#include <opencv2/core.hpp>

#include <iostream>

#include <fstream>

#include <opencv2/core.hpp>

#include <opencv2/sfm.hpp>

#include <opencv2/viz.hpp>

#include <iostream>

#include <fstream>

using namespace std;

using namespace cv;

using namespace cv::sfm;

static void help() {

cout

<< "\n------------------------------------------------------------------\n"

<< " This program shows the camera trajectory reconstruction capabilities\n"

<< " in the OpenCV Structure From Motion (SFM) module.\n"

<< " \n"

<< " Usage:\n"

<< " example_sfm_trajectory_reconstruction <path_to_tracks_file> <f> <cx> <cy>\n"

<< " where: is the tracks file absolute path into your system. \n"

<< " \n"

<< " The file must have the following format: \n"

<< " row1 : x1 y1 x2 y2 ... x36 y36 for track 1\n"

<< " row2 : x1 y1 x2 y2 ... x36 y36 for track 2\n"

<< " etc\n"

<< " \n"

<< " i.e. a row gives the 2D measured position of a point as it is tracked\n"

<< " through frames 1 to 36. If there is no match found in a view then x\n"

<< " and y are -1.\n"

<< " \n"

<< " Each row corresponds to a different point.\n"

<< " \n"

<< " f is the focal lenght in pixels. \n"

<< " cx is the image principal point x coordinates in pixels. \n"

<< " cy is the image principal point y coordinates in pixels. \n"

<< "------------------------------------------------------------------\n\n"

<< endl;

}

/* Build the following structure data

*

* frame1 frame2 frameN

* track1 | (x11,y11) | -> | (x12,y12) | -> | (x1N,y1N) |

* track2 | (x21,y11) | -> | (x22,y22) | -> | (x2N,y2N) |

* trackN | (xN1,yN1) | -> | (xN2,yN2) | -> | (xNN,yNN) |

*

*

* In case a marker (x,y) does not appear in a frame its

* values will be (-1,-1).

*/

void

parser_2D_tracks(const String &_filename, std::vector<Mat> &points2d )

{

ifstream myfile(_filename.c_str());

if (!myfile.is_open())

{

cout << "Unable to read file: " << _filename << endl;

exit(0);

} else {

double x, y;

string line_str;

int n_frames = 0, n_tracks = 0;

// extract data from text file

vector<vector<Vec2d> > tracks;

for ( ; getline(myfile,line_str); ++n_tracks)

{

istringstream line(line_str);

vector<Vec2d> track;

for ( n_frames = 0; line >> x >> y; ++n_frames)

{

if ( x > 0 && y > 0)

track.push_back(Vec2d(x,y));

else

track.push_back(Vec2d(-1));

}

tracks.push_back(track);

}

// embed data in reconstruction api format

for (int i = 0; i < n_frames; ++i)

{

Mat_<double> frame(2, n_tracks);

for (int j = 0; j < n_tracks; ++j)

{

frame(0,j) = tracks[j][i][0];

frame(1,j) = tracks[j][i][1];

}

points2d.push_back(Mat(frame));

}

myfile.close();

}

}

/* Keyboard callback to control 3D visualization

*/

bool camera_pov = false;

void keyboard_callback(const viz::KeyboardEvent &event, void* cookie)

{

if ( event.action == 0 &&!event.symbol.compare("s") )

camera_pov = !camera_pov;

}

/* Sample main code

*/

//void cv::sfm::reconstruct(InputArrayOfArrays points2d, OutputArray Rs, OutputArray Ts, InputOutputArray K,OutputArray points3d, bool is_projective = false);

int main(int argc, char** argv)

{

// Read input parameters

if ( argc != 5 )

{

help();

exit(0);

}

// Read 2D points from text file

std::vector<Mat> points2d;

parser_2D_tracks( argv[1], points2d );

// Set the camera calibration matrix

const double f = atof(argv[2]),

cx = atof(argv[3]), cy = atof(argv[4]);

Matx33d K = Matx33d( f, 0, cx,

0, f, cy,

0, 0, 1);

bool is_projective = true;

vector<Mat> Rs_est, ts_est, points3d_estimated;

cv::sfm::reconstruct(points2d, Rs_est, ts_est, K, points3d_estimated, is_projective);

// Print output

cout << "\n----------------------------\n" << endl;

cout << "Reconstruction: " << endl;

cout << "============================" << endl;

cout << "Estimated 3D points: " << points3d_estimated.size() << endl;

cout << "Estimated cameras: " << Rs_est.size() << endl;

cout << "Refined intrinsics: " << endl << K << endl << endl;

cout << "3D Visualization: " << endl;

cout << "============================" << endl;

viz::Viz3d window_est("Estimation Coordinate Frame");

window_est.setBackgroundColor(); // black by default

window_est.registerKeyboardCallback(&keyboard_callback);

// Create the pointcloud

cout << "Recovering points ... ";

// recover estimated points3d

vector<Vec3f> point_cloud_est;

for (int i = 0; i < points3d_estimated.size(); ++i)

point_cloud_est.push_back(Vec3f(points3d_estimated[i]));

cout << "[DONE]" << endl;

cout << "Recovering cameras ... ";

vector<Affine3d> path_est;

for (size_t i = 0; i < Rs_est.size(); ++i)

path_est.push_back(Affine3d(Rs_est[i],ts_est[i]));

cout << "[DONE]" << endl;

cout << "Rendering Trajectory ... ";

cout << endl << "Press: " << endl;

cout << " 's' to switch the camera pov" << endl;

cout << " 'q' to close the windows " << endl;

if ( path_est.size() > 0 )

{

// animated trajectory

int idx = 0, forw = -1, n = static_cast<int>(path_est.size());

while(!window_est.wasStopped())

{

for (size_t i = 0; i < point_cloud_est.size(); ++i)

{

Vec3d point = point_cloud_est[i];

Affine3d point_pose(Mat::eye(3,3,CV_64F), point);

char buffer[50];

sprintf (buffer, "%d", static_cast<int>(i));

viz::WCube cube_widget(Point3f(0.1,0.1,0.0), Point3f(0.0,0.0,-0.1), true, viz::Color::blue());

cube_widget.setRenderingProperty(viz::LINE_WIDTH, 2.0);

window_est.showWidget("Cube"+String(buffer), cube_widget, point_pose);

}

Affine3d cam_pose = path_est[idx];

viz::WCameraPosition cpw(0.25); // Coordinate axes

viz::WCameraPosition cpw_frustum(K, 0.3, viz::Color::yellow()); // Camera frustum

if ( camera_pov )

window_est.setViewerPose(cam_pose);

else

{

// render complete trajectory

window_est.showWidget("cameras_frames_and_lines_est", viz::WTrajectory(path_est, viz::WTrajectory::PATH, 1.0, viz::Color::green()));

window_est.showWidget("CPW", cpw, cam_pose);

window_est.showWidget("CPW_FRUSTUM", cpw_frustum, cam_pose);

}

// update trajectory index (spring effect)

forw *= (idx==n || idx==0) ? -1: 1; idx += forw;

// frame rate 1s

window_est.spinOnce(1, true);

window_est.removeAllWidgets();

}

}

return 0;

}

解释

首先,我们需要加载这个文件包含2 d点跟踪所有帧和构造容器重建api。 在这种情况下,跟踪2 d点会有以下结构,2 d点的向量数组,每个数组内代表一个不同的帧。 每一帧由2 d点的列表,例如第一个点在坐标系1点在坐标系2相同。 如果没有在一帧分配的值将被(-1,-1):

/* Build the following structure data

*

* frame1 frame2 frameN

* track1 | (x11,y11) | -> | (x12,y12) | -> | (x1N,y1N) |

* track2 | (x21,y11) | -> | (x22,y22) | -> | (x2N,y2N) |

* trackN | (xN1,yN1) | -> | (xN2,yN2) | -> | (xNN,yNN) |

*

*

* In case a marker (x,y) does not appear in a frame its

* values will be (-1,-1).

*/

...

for (int i = 0; i < n_frames; ++i)

{

Mat_<double> frame(2, n_tracks);

for (int j = 0; j < n_tracks; ++j)

{

frame(0,j) = tracks[j][i][0];

frame(1,j) = tracks[j][i][1];

}

points2d.push_back(Mat(frame));

}

Secondly, the built container will be used to feed the reconstruction api. It is important outline that the estimated results must be stored in a vector<Mat>:

其次,建立集装箱将被用来喂重建api。 重要的是大纲,必须存储在一个向量的估计结果<<Mat>:

bool is_projective =true;

vector<Mat> Rs_est, ts_est, points3d_estimated;

reconstruct(points2d, Rs_est, ts_est, K, points3d_estimated, is_projective(投影的));

// Print output

cout << "\n----------------------------\n" << endl;

cout << "Reconstruction: " << endl;

cout << "============================" << endl;

cout << "Estimated 3D points: " << points3d_estimated.size() << endl;

cout << "Estimated cameras: " << Rs_est.size() << endl;

cout << "Refined intrinsics: " << endl << K << endl << endl;

Finally, the obtained results will be shown in Viz, in this case reproducing(复制) the camera with an oscillation(振荡) effect.

最后,获得的结果将即所示,在这种情况下相机的重建效果。

cmake 文件

project(sfm_test)

CMAKE_MINIMUM_REQUIRED(VERSION 2.6)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11")

set("OpenCV_DIR" "/usr/local/share/OpenCV/")

find_package( OpenCV REQUIRED )

MESSAGE("OpenCV vesion: ${OpenCV_VERSION}")

find_package(Ceres REQUIRED)

find_package(Gflags QUIET)

find_package(Ceres QUIET)

# include(CheckCXXCompilerFlag)

AUX_SOURCE_DIRECTORY(. DIR_SRCS)

ADD_EXECUTABLE(sfm_test ${DIR_SRCS} )

target_link_libraries( sfm_test ${OpenCV_LIBS} )reconstruct()说明

§ reconstruct() [1/4]

| void cv::sfm::reconstruct | ( | InputArrayOfArrays | points2d, |

| OutputArray | Ps, | ||

| OutputArray | points3d, | ||

| InputOutputArray | K, | ||

| bool | is_projective = false | ||

| ) |

在执行自动校准时从2d对应重建3d点。

参数

| points2d | 2d点矢量的输入矢量(内部矢量是每个图像)。 |

| PS | 输出向量与每个图像的3x4投影矩阵。 |

| points3d | 具有估计的3d点的输出数组。 |

| ķ | 输入/输出相机矩阵\(K = \ vecthreethree {f_x} {0} {c_x} {0} {f_y} {c_y} {0} {0} {1} \)。 输入参数用作初始猜测。 |

| is_projective | 如果是真的,相机应该是投射的。 |

该方法调用以下签名并从估计的K,R和t中提取投影矩阵。

注意

- 轨道必须尽可能精确。 它不处理异常值并且对它们非常敏感。

§ reconstruct() [2/4]

| void cv::sfm::reconstruct | ( | InputArrayOfArrays | points2d, |

| OutputArray | Rs, | ||

| OutputArray | Ts, | ||

| InputOutputArray | K, | ||

| OutputArray | points3d, | ||

| bool | is_projective = false | ||

| ) |

在执行自动校准时从2d对应重建3d点。

参数

通过实例化SFMLibmvEuclideanReconstruction类,在内部调用libmv简单管道例程和一些默认参数。 注意

| |||||||||||||

问题

'reconstruct' is not a member of 'cv::sfm'

This is because of the Mismatch of Required Ceres, Eigen version from the SFM Module. The CERES_FOUND flag is set to 0 internally because of the newer Ceres version!

You can manually set this flag to 1 like this. before including the SFM modules.

#define CERES_FOUND 1

#include <opencv2/sfm.hpp>

1万+

1万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?