I. Recording the bag

I use a ZED 2i camera.

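rosbag record -O 32 /zed2i/zed_node/imu/data /zed2i/zed_node/imu/data_raw /zed2i/zed_node/left/image_rect_color /zed2i/zed_node/right/image_rect_color /zed2i/zed_node/left_raw/image_raw_color /zed2i/zed_node/right_raw/image_raw_color

I recorded the stereo images both as rectified (undistorted) by the camera itself and as the original raw images. If you also use a ZED camera, I recommend reading this blog post to understand what each topic means:

Binocular Stereo Vision (3): ZED2 & ROS Melodic, Publishing RGB Images and Depth Information (CSDN blog)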

My machine runs Ubuntu 18.04, CUDA 11.4, and ZED SDK 3.8.

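The image resolution is set to 1280×720 at 10 Hz and the IMU runs at 300 Hz. I recorded for about 1 minute. The official site says it is best to lower the image rate to 4 Hz, but in my earlier calibrations it also worked without changing it. If you do want to change it, you can use the following command.

Example:

rosrun topic_tools throttle messages /zed2i/zed_node/right_raw/image_raw_color 4.0 /right/image_raw

Below are my configuration files, together with some notes from my own trial and error that I hope will help you avoid pitfalls: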

1. zed2i.yaml

# params/zed2i.yaml
# Parameters for Stereolabs ZED2 camera
---

general:
    camera_model: 'zed2i'

depth:
    min_depth: 0.7 # Min: 0.2, Max: 3.0 - Default 0.7 - Note: reducing this value will require more computational power and GPU memory
    max_depth: 10.0 # Max: 40.0

pos_tracking:
    imu_fusion: true # enable/disable IMU fusion. When set to false, only the optical odometry will be used.

sensors:
    sensors_timestamp_sync: false # Synchronize Sensors messages timestamp with latest received frame. Do not enable this: it drastically lowers the IMU rate
    max_pub_rate: 200. # max frequency of publishing of sensors data. MAX: 400. - MIN: grab rate. This is the IMU rate; when set to 400 the actual value is around 360
    publish_imu_tf: true # publish `IMU -> <cam_name>_left_camera_frame` TF

object_detection:
    od_enabled: false # True to enable Object Detection [not available for ZED]
    model: 0 # '0': MULTI_CLASS_BOX - '1': MULTI_CLASS_BOX_ACCURATE - '2': HUMAN_BODY_FAST - '3': HUMAN_BODY_ACCURATE - '4': MULTI_CLASS_BOX_MEDIUM - '5': HUMAN_BODY_MEDIUM - '6': PERSON_HEAD_BOX
    confidence_threshold: 50 # Minimum value of the detection confidence of an object [0,100]
    max_range: 15. # Maximum detection range
    object_tracking_enabled: true # Enable/disable the tracking of the detected objects
    body_fitting: false # Enable/disable body fitting for 'HUMAN_BODY_X' models
    mc_people: true # Enable/disable the detection of persons for 'MULTI_CLASS_BOX_X' models
    mc_vehicle: true # Enable/disable the detection of vehicles for 'MULTI_CLASS_BOX_X' models
    mc_bag: true # Enable/disable the detection of bags for 'MULTI_CLASS_BOX_X' models
    mc_animal: true # Enable/disable the detection of animals for 'MULTI_CLASS_BOX_X' models
    mc_electronics: true # Enable/disable the detection of electronic devices for 'MULTI_CLASS_BOX_X' models
    mc_fruit_vegetable: true # Enable/disable the detection of fruits and vegetables for 'MULTI_CLASS_BOX_X' models
    mc_sport: true # Enable/disable the detection of sport-related objects for 'MULTI_CLASS_BOX_X' models

2. common.yaml

# params/common.yaml

# Common parameters to Stereolabs ZED and ZED mini cameras

---

# Dynamic parameters cannot have a namespace

brightness: 4 # Dynamic

contrast: 4 # Dynamic

hue: 0 # Dynamic

saturation: 4 # Dynamic

sharpness: 4 # Dynamic

gamma: 8 # Dynamic - Requires SDK >=v3.1

auto_exposure_gain: true # Dynamic

gain: 100 # Dynamic - works only if `auto_exposure_gain` is false

exposure: 100 # Dynamic - works only if `auto_exposure_gain` is false

auto_whitebalance: true # Dynamic

whitebalance_temperature: 42 # Dynamic - works only if `auto_whitebalance` is false

depth_confidence: 30 # Dynamic

depth_texture_conf: 100 # Dynamic

pub_frame_rate: 10.0 # Dynamic - frequency of publishing of video and depth data

point_cloud_freq: 10.0 # Dynamic - frequency of the pointcloud publishing (equal or less to `grab_frame_rate` value)

general:
    camera_name: zed # A name for the camera (can be different from camera model and node name and can be overwritten by the launch file)
    zed_id: 0
    serial_number: 0
    resolution: 2 # '0': HD2K, '1': HD1080, '2': HD720, '3': VGA
    grab_frame_rate: 10 # Frequency of frame grabbing for internal SDK operations
    gpu_id: -1
    base_frame: 'base_link' # must be equal to the frame_id used in the URDF file
    verbose: false # Enable info message by the ZED SDK
    svo_compression: 2 # `0`: LOSSLESS, `1`: AVCHD, `2`: HEVC
    self_calib: true # enable/disable self calibration at starting
    camera_flip: false

video:
    img_downsample_factor: 1 # Resample factor for images [0.01,1.0] The SDK works with native image sizes, but publishes rescaled image. Scale factor: for example, 0.5 halves both the image width and height
    extrinsic_in_camera_frame: true # if `false` extrinsic parameter in `camera_info` will use ROS native frame (X FORWARD, Z UP) instead of the camera frame (Z FORWARD, Y DOWN) [`true` use old behavior as for version < v3.1]

depth:
    quality: 3 # '0': NONE, '1': PERFORMANCE, '2': QUALITY, '3': ULTRA, '4': NEURAL
    # Controls the quality level of the depth map:
    # '0': NONE - no depth map is generated.
    # '1': PERFORMANCE - depth mode that favors speed, for applications that need a higher frame rate.
    # '2': QUALITY - depth mode that favors quality, for applications that need more accurate depth, at a possible cost in frame rate.
    # '3': ULTRA - highest-quality depth mode with the best depth information, but it requires high computing power.
    # '4': NEURAL - depth mode enhanced by a neural network, intended to give more accurate and detailed depth.
    sensing_mode: 0 # '0': STANDARD, '1': FILL (not use FILL for robotic applications)
    depth_stabilization: 1 # `0`: disabled, `1`: enabled
    openni_depth_mode: false # 'false': 32bit float meters, 'true': 16bit uchar millimeters
    depth_downsample_factor: 1 # Resample factor for depth data matrices [0.01,1.0] The SDK works with native data sizes, but publishes rescaled matrices (depth map, point cloud, ...)

pos_tracking:
    pos_tracking_enabled: false # True to enable positional tracking from start
    publish_tf: true # publish `odom -> base_link` TF
    publish_map_tf: true # publish `map -> odom` TF
    map_frame: 'map' # main frame
    odometry_frame: 'odom' # odometry frame
    area_memory_db_path: 'zed_area_memory.area' # file loaded when the node starts to restore the "known visual features" map.
    save_area_memory_db_on_exit: false # save the "known visual features" map when the node is correctly closed to the path indicated by `area_memory_db_path`
    area_memory: true # Enable to detect loop closure
    floor_alignment: false # Enable to automatically calculate camera/floor offset
    initial_base_pose: [0.0,0.0,0.0, 0.0,0.0,0.0] # Initial position of the `base_frame` -> [X, Y, Z, R, P, Y]
    init_odom_with_first_valid_pose: true # Enable to initialize the odometry with the first valid pose
    path_pub_rate: 2.0 # Camera trajectory publishing frequency
    path_max_count: -1 # use '-1' for unlimited path size
    two_d_mode: false # Force navigation on a plane. If true the Z value will be fixed to "fixed_z_value", roll and pitch to zero
    fixed_z_value: 0.00 # Value to be used for Z coordinate if `two_d_mode` is true

mapping:
    mapping_enabled: false # True to enable mapping and fused point cloud publication
    resolution: 0.05 # maps resolution in meters [0.01f, 0.2f]
    max_mapping_range: -1 # maximum depth range while mapping in meters (-1 for automatic calculation) [2.0, 20.0]
    fused_pointcloud_freq: 1.0 # frequency of the publishing of the fused colored point cloud
    clicked_point_topic: '/clicked_point' # Topic published by Rviz when a point of the cloud is clicked. Used for plane detection
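The notes on sensors_timestamp_sync and max_pub_rate in zed2i.yaml both concern the IMU rate that is actually published, so it can be worth measuring that rate before recording a long bag. The following is a minimal sketch in plain Python, not part of the ZED wrapper or Kalibr: the function name estimate_rate_hz is hypothetical, and the timestamps here are synthetic stand-ins for real IMU header stamps.

```python
from statistics import median


def estimate_rate_hz(stamps):
    """Estimate a topic's publish rate (Hz) from message timestamps in seconds."""
    if len(stamps) < 2:
        raise ValueError("need at least two timestamps")
    ordered = sorted(stamps)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    typical_gap = median(gaps)
    if typical_gap <= 0:
        raise ValueError("timestamps do not advance")
    # The median gap is less sensitive to a few dropped messages than the mean gap.
    return 1.0 / typical_gap


# Synthetic stand-in for IMU header stamps: one second of data at 300 Hz.
imu_stamps = [i / 300.0 for i in range(301)]
imu_rate = estimate_rate_hz(imu_stamps)
print("estimated IMU rate: %.1f Hz" % imu_rate)
```

In a ROS environment the timestamps could be collected from msg.header.stamp.to_sec() while iterating over rosbag.Bag(path).read_messages(topics=[...]), and rostopic hz on the IMU topic gives a comparable live reading.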

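II. Using Kalibr

1. Stereo camera calibration

There are many installation tutorials and installation has no obvious pitfalls, so you can search for one yourself, or directly build the version I have already adjusted: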

https://download.csdn.net/download/weixin_44760904/88902590?spm=1001.2014.3001.5503

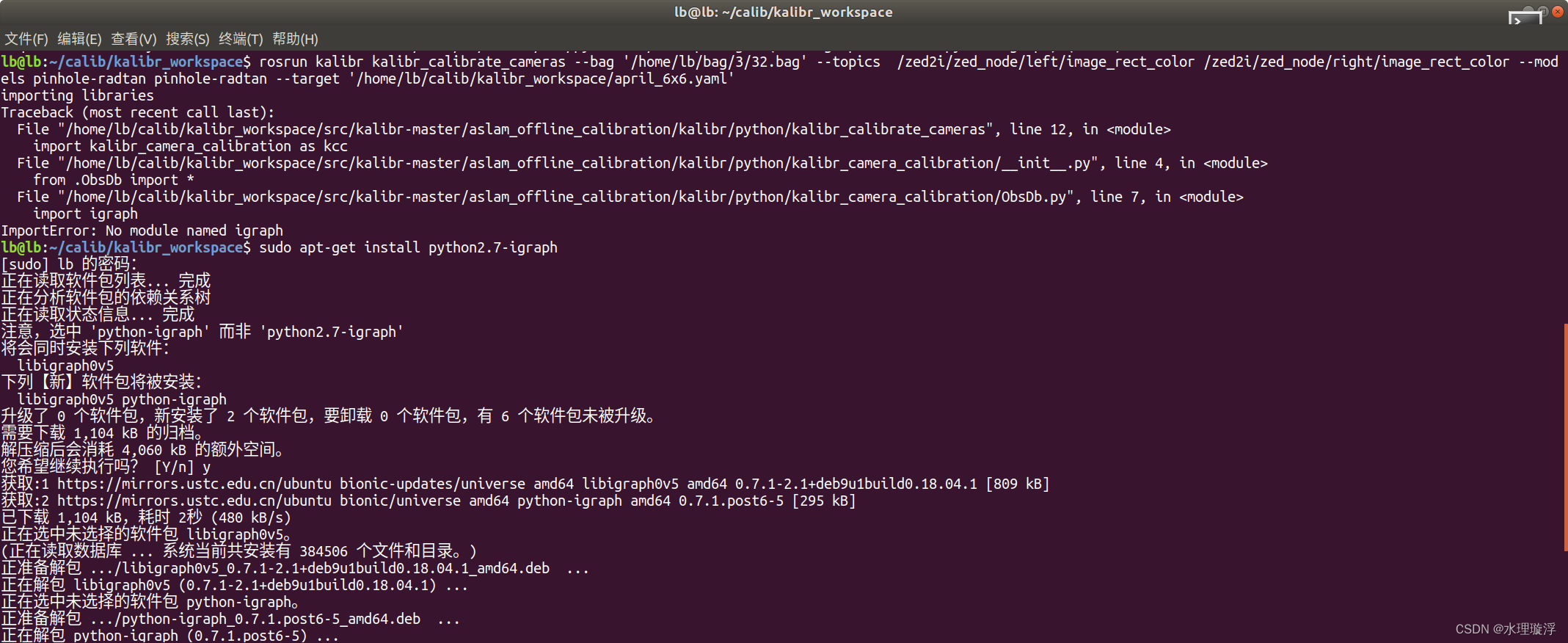

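Error: ImportError: No module named igraph

Fix: sudo apt-get install python2.7-igraph

(When using a checkerboard, the row and column counts in the YAML file must each be one less than the actual number of squares, because the values count the inner corners.)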
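The checkerboard rule can be sketched as a small helper that turns the number of squares on the printed board into the values for the target file. This is an illustrative sketch, not Kalibr code: the function names are hypothetical, the board size and square size in the example are made-up values, and the field names (target_type, targetRows, targetCols, rowSpacingMeters, colSpacingMeters) follow the checkerboard target format as I understand it from the Kalibr documentation.

```python
def checkerboard_target(square_rows, square_cols, square_size_m):
    """Build Kalibr-style checkerboard target fields from the number of squares."""
    if square_rows < 2 or square_cols < 2:
        raise ValueError("a checkerboard needs at least 2x2 squares")
    return {
        "target_type": "checkerboard",
        # Inner corners are one fewer than the squares along each direction.
        "targetRows": square_rows - 1,
        "targetCols": square_cols - 1,
        "rowSpacingMeters": square_size_m,
        "colSpacingMeters": square_size_m,
    }


def to_flat_yaml(fields):
    """Render a flat dict as 'key: value' lines, quoting string values."""
    lines = []
    for key, value in fields.items():
        rendered = "'%s'" % value if isinstance(value, str) else str(value)
        lines.append("%s: %s" % (key, rendered))
    return "\n".join(lines)


# Hypothetical board: 7 rows x 10 columns of squares, 3 cm per square.
target = checkerboard_target(7, 10, 0.03)
print(to_flat_yaml(target))
```

For this hypothetical board the file would list 6 rows and 9 columns, which is the "one less than actual" rule applied to each direction.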

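First calibrate the stereo pair:

rosrun kalibr kalibr_calibrate_cameras --bag '/home/lb/bag/3/32.bag' --topics /zed2i/zed_node/left/image_rect_color /zed2i/zed_node/right/image_rect_color --models pinhole-radtan pinhole-radtan --target '/home/lb/calib/kalibr_workspace/april_6x6.yaml'

Meaning of the command (generated by GPT):

rosrun: a ROS command that runs an executable from a ROS package.

kalibr: the name of the ROS package to run from.

kalibr_calibrate_cameras: the executable in the kalibr package to run; it performs the camera calibration.

--bag '/home/lb/bag/3/32.bag': the ROS bag file used for calibration. A bag is a convenient way to store ROS message data.

--topics /zed2i/zed_node/left/image_rect_color /zed2i/zed_node/right/image_rect_color: the topics in the bag used for calibration, here the left and right camera images.

--models pinhole-radtan pinhole-radtan: the camera model for each topic, here the pinhole projection model with radial-tangential distortion for both the left and right cameras.

--target '/home/lb/calib/kalibr_workspace/april_6x6.yaml': the YAML file describing the calibration target, a pattern or object of known geometry used to calibrate the cameras.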
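Since each entry in --models pairs with the topic at the same position in --topics, the command can also be assembled programmatically, which makes a mismatch easy to catch. The sketch below is a hypothetical convenience wrapper (build_camera_calib_cmd is not part of Kalibr) that only uses the flags and paths shown in the command above.

```python
import shlex


def build_camera_calib_cmd(bag, topics, models, target):
    """Assemble the kalibr_calibrate_cameras argument list used in this post."""
    if len(topics) != len(models):
        raise ValueError("one camera model is needed per image topic")
    return (
        ["rosrun", "kalibr", "kalibr_calibrate_cameras", "--bag", bag, "--topics"]
        + list(topics)
        + ["--models"]
        + list(models)
        + ["--target", target]
    )


cmd = build_camera_calib_cmd(
    bag="/home/lb/bag/3/32.bag",
    topics=[
        "/zed2i/zed_node/left/image_rect_color",
        "/zed2i/zed_node/right/image_rect_color",
    ],
    models=["pinhole-radtan", "pinhole-radtan"],
    target="/home/lb/calib/kalibr_workspace/april_6x6.yaml",
)
# Print a shell-safe version of the command for inspection.
print(" ".join(shlex.quote(part) for part in cmd))
```

In a terminal where the Kalibr workspace is sourced, the list could be passed to subprocess.run(cmd, check=True) instead of being printed.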

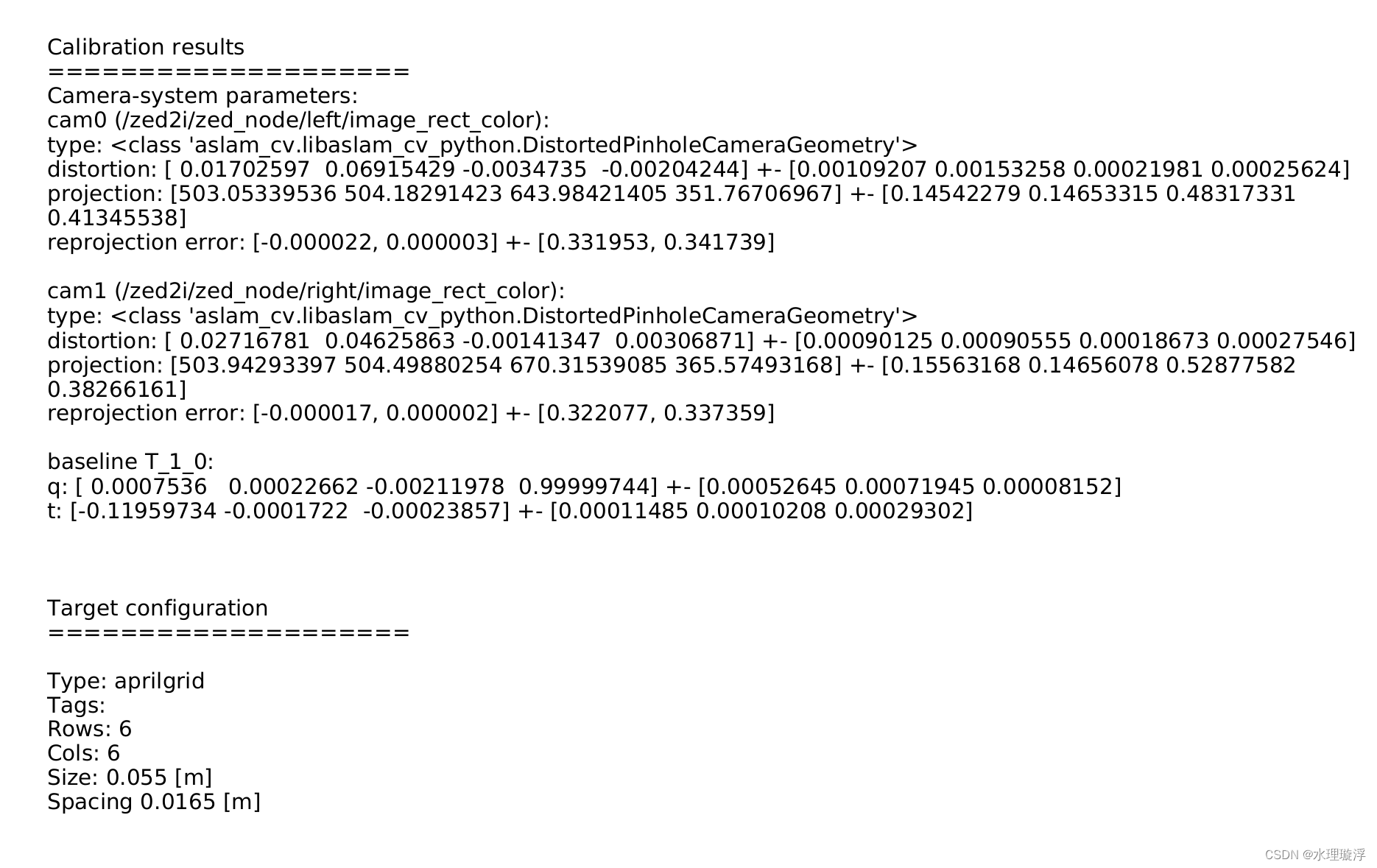

After waiting patiently:

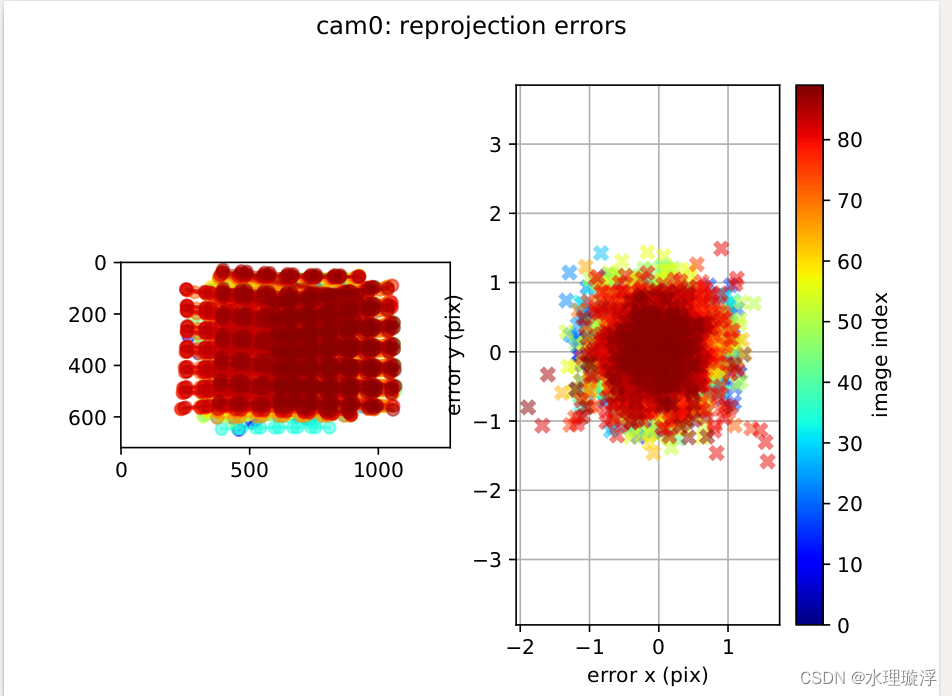

Result:

The reprojection error is around 1, which is usable.

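2. IMU calibration

I use imu_utils for the calibration. It depends on the ceres-solver optimization library, so install Ceres first.

In addition, imu_utils depends on code_utils, so build code_utils first, then download and build imu_utils.

Record for about 2 hours, keeping the camera stationary the whole time.

Result:

Create a new imu.yaml

and fill in: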

rostopic: /zed2i/zed_node/imu/data

update_rate: 300.0 #Hz

accelerometer_noise_density: 1.8370034577350359e-02

accelerometer_random_walk: 3.1364398099665192e-04

gyroscope_noise_density: 2.2804362845695965e-03

gyroscope_random_walk: 4.7394104123124287e-05

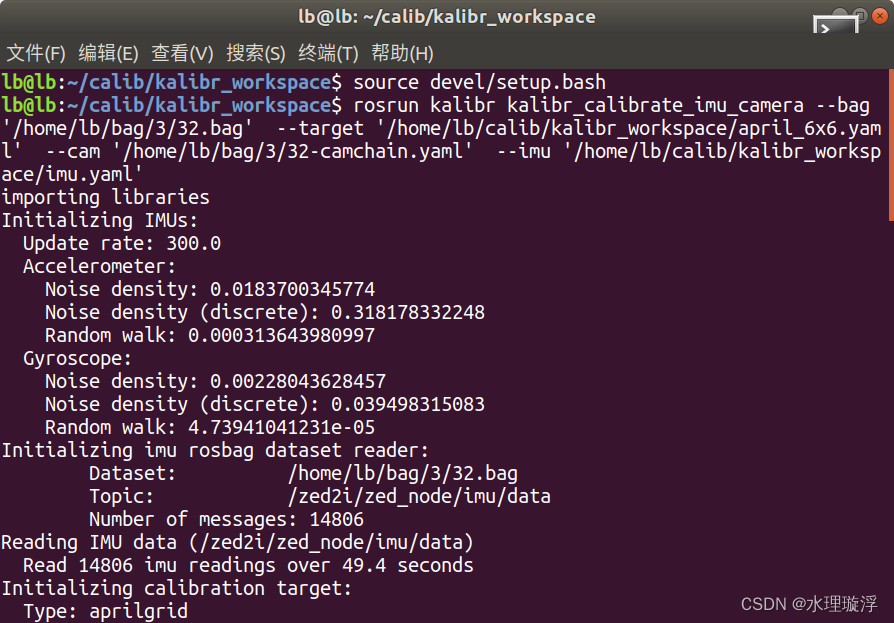
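Before starting the joint calibration, a quick sanity check of imu.yaml can catch a missing field or a mistyped number. The sketch below is an assumption-laden helper, not part of Kalibr or imu_utils: the function names are hypothetical, the parser only handles flat "key: value" lines like the file above, and the list of required keys is simply the set of fields shown in this post.

```python
REQUIRED_KEYS = (
    "rostopic",
    "update_rate",
    "accelerometer_noise_density",
    "accelerometer_random_walk",
    "gyroscope_noise_density",
    "gyroscope_random_walk",
)

IMU_YAML = """
rostopic: /zed2i/zed_node/imu/data
update_rate: 300.0 #Hz
accelerometer_noise_density: 1.8370034577350359e-02
accelerometer_random_walk: 3.1364398099665192e-04
gyroscope_noise_density: 2.2804362845695965e-03
gyroscope_random_walk: 4.7394104123124287e-05
"""


def parse_flat_yaml(text):
    """Parse flat 'key: value' lines, ignoring blank lines and '#' comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        key, sep, value = line.partition(":")
        if not sep:
            raise ValueError("not a 'key: value' line: %r" % raw)
        values[key.strip()] = value.strip()
    return values


def check_imu_yaml(text):
    """Return (topic, numeric fields) or raise ValueError if something is off."""
    values = parse_flat_yaml(text)
    missing = [key for key in REQUIRED_KEYS if key not in values]
    if missing:
        raise ValueError("missing keys: %s" % ", ".join(missing))
    numbers = {key: float(values[key]) for key in REQUIRED_KEYS if key != "rostopic"}
    for key, number in numbers.items():
        if number <= 0:
            raise ValueError("%s must be positive" % key)
    return values["rostopic"], numbers


topic, numbers = check_imu_yaml(IMU_YAML)
print(topic, numbers["update_rate"])
```

The same check could be pointed at the real file by reading it with open(path).read() and passing the text to check_imu_yaml.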

3. Joint camera-IMU calibration

rosrun kalibr kalibr_calibrate_imu_camera --bag '/home/lb/bag/3/32.bag' --target '/home/lb/calib/kalibr_workspace/april_6x6.yaml' --cam '/home/lb/bag/3/32-camchain.yaml' --imu '/home/lb/calib/kalibr_workspace/imu.yaml'


After waiting patiently:

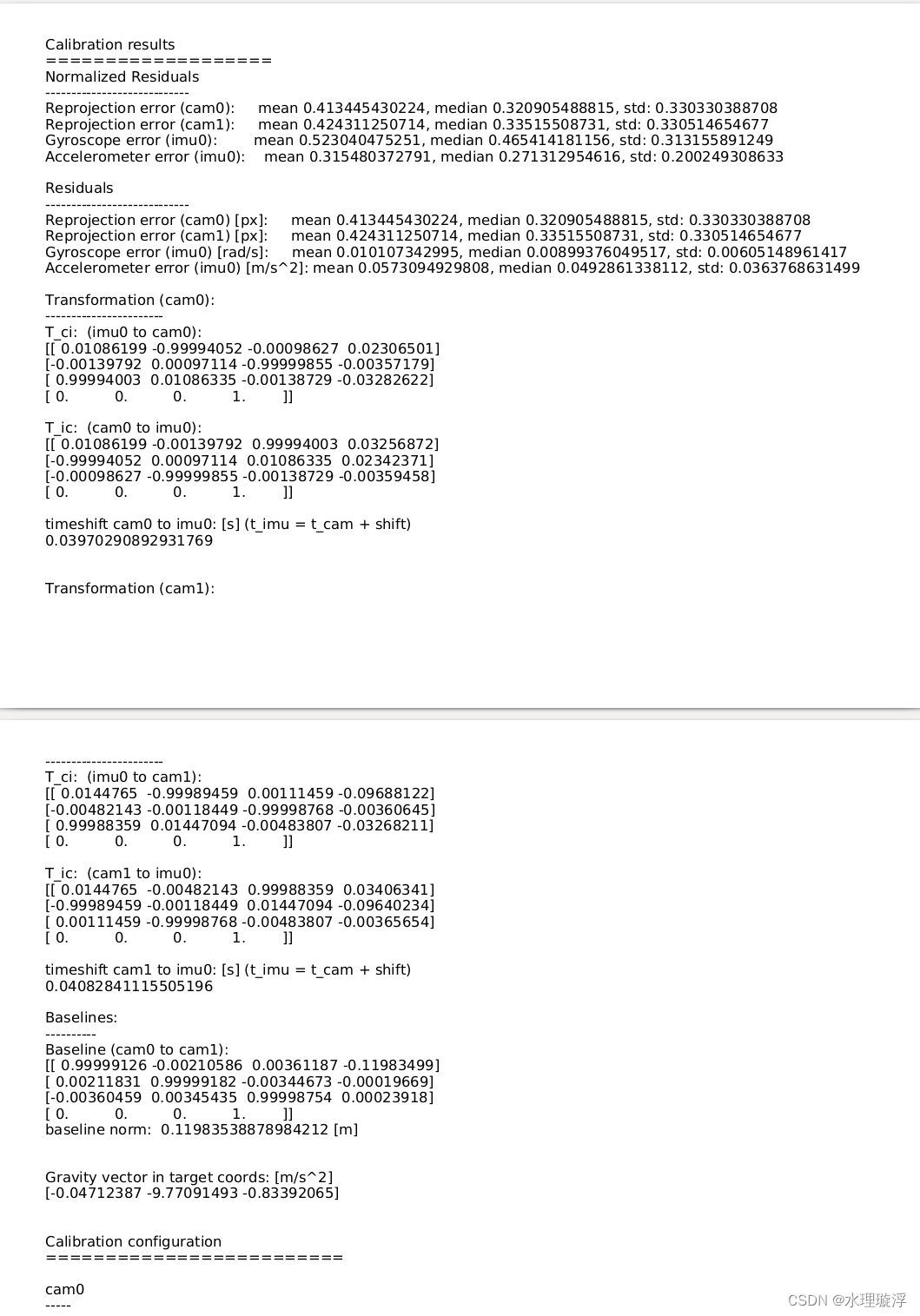

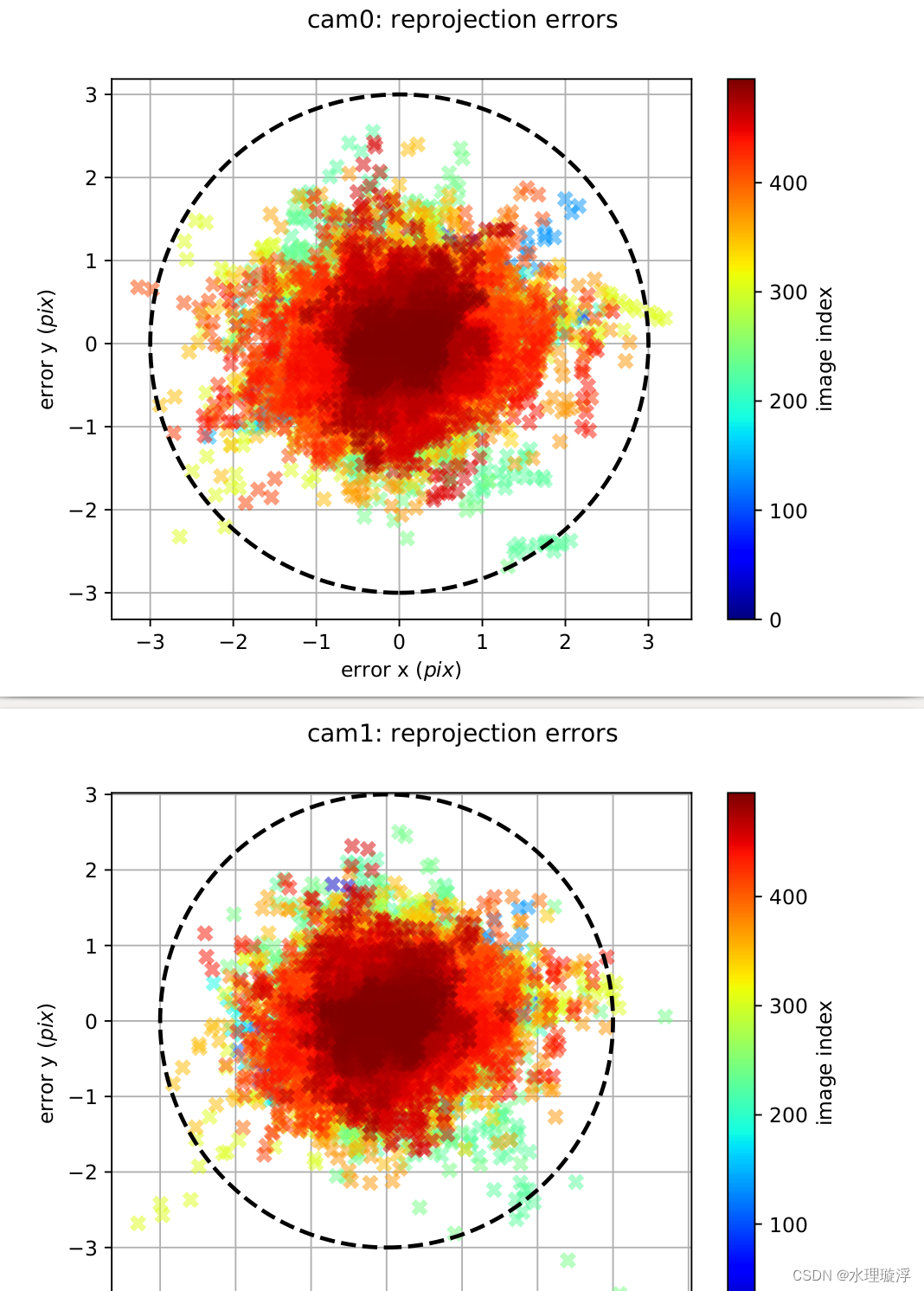

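Result:

The reprojection error looks somewhat large, but that is because I recorded too many frames. I verified the result by running it in an actual algorithm, and it turned out to be a fairly good one.

If the optimization fails with this error:

[ERROR] [timestamp]: Optimization failed!

open kalibr/aslam_offline_calibration/kalibr/python/kalibr_calibrate_imu_camera.py, search for timeOffsetPadding, and change its value directly to 0.3.

#timeOffsetPadding=parsed.timeoffset_padding, # original code

timeOffsetPadding=0.3, # after the change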

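This post describes in detail how to record a bag file with a ZED2 camera, including the camera parameter settings and the publishing of stereo RGB images and depth information, and how to use Kalibr for joint camera and IMU calibration, including the installation and calibration steps for imu_utils.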
