https://catlikecoding.com/unity/tutorials/rendering/part-18/

Reference: https://docs.unity3d.com/Manual/GIIntro.html

This is part 18 of a tutorial series about rendering. After wrapping up baked global illumination in part 17, we move on to supporting realtime GI. After that, we will also support light probe proxy volumes and cross-fading LOD groups.

From now on, this tutorial series is made with Unity 2017.1.0f3. It will not work with older versions, because we will end up using a new shader function.

1 Realtime Global Illumination

Baking light works very well for static geometry, and also pretty well for dynamic geometry thanks to light probes. However, it cannot deal with dynamic lights. Lights in mixed mode can get away with some realtime adjustments, but too much makes it obvious that the baked indirect light does not change. So when you have an outdoor scene, the sun has to be unchanging. It cannot travel across the sky like it does in real life, because that requires gradually changing GI. So the scene has to be frozen in time.

To make indirect lighting work with something like a moving sun, Unity uses the Enlighten system to calculate realtime global illumination. It works like baked indirect lighting, except that the lightmaps and probes are computed at runtime.

Figuring out indirect light requires knowledge of how light could bounce between static surfaces. The question is which surfaces are potentially affected by which other surfaces, and to what degree. In other words: which surfaces influence the current one.

Figuring out these relationships is a lot of work and cannot be done in real time. So this data is processed by the editor and stored for use at runtime. Enlighten then uses it to compute the realtime lightmaps and probe data. Even then, it is only feasible with low-resolution lightmaps.

1.1 enabling realtime gi

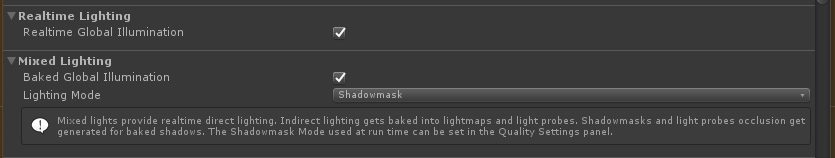

real time global illumination can be enabled independent of baked lighting. 可以和烘焙光独立开单独使用。u can have none, one, or both active at the same time. it is enabled via the checkbox in the realtime lighting section of the lighting window.

to see real time gi in action, set the mode of the main light in our test scene to real time. as we have no other lights, this effectively turns off baked lighting, event when it is enabled.

make sure that all objects in the scene use our white material. like last time, the spheres are all dynamic and everything else is static geometry.

it turns out that only the dynamic objects benefit from realtime gi. the static objects have become darker. that is because the light probes automatically incorporated the realtime gi. static objects have to sample the realtime lightmaps, which are not the same as the baked lightmaps. our shader does not do this yet.

1.2 Baking Realtime GI

Unity already generates the realtime lightmaps while in edit mode, so you can always see the realtime GI contribution. These maps are not retained when switching between edit and play mode, but they end up the same. You can inspect the realtime lightmaps via the Object maps tab of the Lighting window, with a lightmap-static object selected. Choose the Realtime Intensity visualization to see the realtime lightmap data.

(Note to self: so realtime GI also applies to static objects?)

Although realtime lightmaps are already baked, and they might appear correct, our meta pass actually uses the wrong coordinates. Realtime GI has its own lightmap coordinates, which can end up being different from those for static lightmaps. Unity generates these coordinates automatically, based on the lightmap and object settings. They are stored in the third mesh UV channel. So add this data to VertexData in My Lightmapping.

    struct VertexData
    {
        float4 vertex : POSITION;
        float4 uv : TEXCOORD0;
        float4 uv1 : TEXCOORD1;
        float4 uv2 : TEXCOORD2;
    };

Now MyLightmappingVertexProgram has to use either the second or third UV set, together with either the static or dynamic lightmap's scale and offset. We can rely on the UnityMetaVertexPosition function to use the right data.

    Interpolators MyLightmappingVertexProgram (VertexData v) {
        Interpolators i;
        i.pos = UnityMetaVertexPosition(
            v.vertex, v.uv1, v.uv2, unity_LightmapST, unity_DynamicLightmapST
        );
        i.uv.xy = TRANSFORM_TEX(v.uv, _MainTex);
        i.uv.zw = TRANSFORM_TEX(v.uv, _DetailTex);
        return i;
    }

Note that the meta pass is used for both baked and realtime lightmapping. So when realtime GI is used, it will also be included in builds.

1.3 Sampling Realtime Lightmaps

To actually sample the realtime lightmaps, we also have to add the third UV set to VertexData in My Lighting.

    struct VertexData {
        float4 vertex : POSITION;
        float3 normal : NORMAL;
        float4 tangent : TANGENT;
        float2 uv : TEXCOORD0;
        float2 uv1 : TEXCOORD1;
        float2 uv2 : TEXCOORD2;
    };

When a realtime lightmap is used, we have to add its lightmap coordinates to our interpolators. The standard shader combines both lightmap coordinate sets in a single interpolator, multiplexed with some other data, but we can get away with separate interpolators for both. We know that there is dynamic light data when the DYNAMICLIGHTMAP_ON keyword is defined. It is part of the keyword list of the multi_compile_fwdbase compiler directive.

    struct Interpolators
    {
        …
        #if defined(DYNAMICLIGHTMAP_ON)
            float2 dynamicLightmapUV : TEXCOORD7;
        #endif
    };

Fill the coordinates just like the static lightmap coordinates, except with the dynamic lightmap's scale and offset, made available via unity_DynamicLightmapST.

    Interpolators MyVertexProgram (VertexData v) {
        …
        #if defined(LIGHTMAP_ON) || ADDITIONAL_MASKED_DIRECTIONAL_SHADOWS
            i.lightmapUV = v.uv1 * unity_LightmapST.xy + unity_LightmapST.zw;
        #endif
        #if defined(DYNAMICLIGHTMAP_ON)
            i.dynamicLightmapUV =
                v.uv2 * unity_DynamicLightmapST.xy + unity_DynamicLightmapST.zw;
        #endif
        …
    }

Sampling the realtime lightmap is done in our CreateIndirectLight function. Duplicate the #if defined(LIGHTMAP_ON) code block and make a few changes. First, the new block is based on the DYNAMICLIGHTMAP_ON keyword. Also, it should use DecodeRealtimeLightmap instead of DecodeLightmap, because the realtime maps use a different color format. Because this data might be added to baked lighting, do not immediately assign to indirectLight.diffuse, but use an intermediate variable which is added to it at the end (that is, use += rather than =). Finally, we should only sample spherical harmonics when neither a baked nor a realtime lightmap is used.

    #if defined(LIGHTMAP_ON)
        indirectLight.diffuse =
            DecodeLightmap(UNITY_SAMPLE_TEX2D(unity_Lightmap, i.lightmapUV));
        #if defined(DIRLIGHTMAP_COMBINED)
            float4 lightmapDirection = UNITY_SAMPLE_TEX2D_SAMPLER(
                unity_LightmapInd, unity_Lightmap, i.lightmapUV
            );
            indirectLight.diffuse = DecodeDirectionalLightmap(
                indirectLight.diffuse, lightmapDirection, i.normal
            );
        #endif
        ApplySubtractiveLighting(i, indirectLight);
    // #else
    //     indirectLight.diffuse += max(0, ShadeSH9(float4(i.normal, 1)));
    #endif
    #if defined(DYNAMICLIGHTMAP_ON)
        float3 dynamicLightDiffuse = DecodeRealtimeLightmap(
            UNITY_SAMPLE_TEX2D(unity_DynamicLightmap, i.dynamicLightmapUV)
        );
        #if defined(DIRLIGHTMAP_COMBINED)
            float4 dynamicLightmapDirection = UNITY_SAMPLE_TEX2D_SAMPLER(
                unity_DynamicDirectionality, unity_DynamicLightmap,
                i.dynamicLightmapUV
            );
            indirectLight.diffuse += DecodeDirectionalLightmap(
                dynamicLightDiffuse, dynamicLightmapDirection, i.normal
            );
        #else
            indirectLight.diffuse += dynamicLightDiffuse;
        #endif
    #endif
    #if !defined(LIGHTMAP_ON) && !defined(DYNAMICLIGHTMAP_ON)
        indirectLight.diffuse += max(0, ShadeSH9(float4(i.normal, 1)));
    #endif

Now realtime lightmaps are used by our shader. Initially, it might look the same as baked lighting with a mixed light, when using Distance Shadowmask mode. The difference becomes obvious when turning off the light while in play mode.

After disabling a mixed light, its indirect light will remain. In contrast, the indirect contribution of a realtime light disappears, and reappears, as it should. However, it might take a while before the new situation is fully baked. Enlighten incrementally adjusts the lightmaps and probes. How quickly this happens depends on the complexity of the scene and the Realtime Global Illumination CPU quality tier setting.

(Note to self: I do not fully understand this part yet.)

All realtime lights contribute to realtime GI. However, its typical use is with the main directional light only, representing the sun as it moves through the sky. It is fully functional for directional lights. Point lights and spotlights work too, but only unshadowed. So when using shadowed point lights or spotlights you can end up with incorrect lighting.

(Note: in other words, the typical setup is a main light that is directional and set to realtime. Point lights and spotlights only work correctly with realtime GI when they cast no shadows.)
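As a rough illustration of the moving-sun case, the minimal sketch below rotates a light a little every frame, which gives Enlighten a changing light to respond to. The component name `SunRotator` and the `degreesPerSecond` field are made up for this example and are not part of the tutorial.

```csharp
using UnityEngine;

// Hypothetical helper, not from the tutorial: slowly rotates the light it is
// attached to, imitating a sun that travels across the sky.
[RequireComponent(typeof(Light))]
public class SunRotator : MonoBehaviour {

    // Assumed tuning value; pick whatever speed suits the scene.
    public float degreesPerSecond = 10f;

    void Update () {
        // Rotate around the world X axis, scaled by the frame time so the
        // speed does not depend on the frame rate.
        transform.Rotate(
            Vector3.right, degreesPerSecond * Time.deltaTime, Space.World
        );
    }
}
```

Attached to the main directional light with its mode set to Realtime, this should make the indirect light on static geometry follow the sun, with some delay while the lightmaps are incrementally updated.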

If you want to exclude a realtime light from realtime GI, you can do so by setting its Indirect Multiplier for its light intensity to zero.
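The same thing can probably be done from a script. The sketch below assumes that `Light.bounceIntensity` is the scripting counterpart of the Indirect Multiplier field in the inspector; the component name `ExcludeFromRealtimeGI` is invented for this example.

```csharp
using UnityEngine;

// Hypothetical helper, not from the tutorial: removes the indirect
// contribution of the light it is attached to.
[RequireComponent(typeof(Light))]
public class ExcludeFromRealtimeGI : MonoBehaviour {

    void Start () {
        // Assumption: bounceIntensity corresponds to the inspector's
        // Indirect Multiplier. Zero means no bounced light.
        GetComponent<Light>().bounceIntensity = 0f;
    }
}
```

Setting the value in the inspector is simpler when it never changes. A script like this is mainly useful when lights are created or toggled at runtime.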

1.4 Emissive Light

Realtime GI can also be used for static objects that emit light. This makes it possible to vary their emission with matching realtime indirect light. Let us try this out. Add a static sphere to the scene and give it a material that uses our shader with a black albedo and white emission color. Initially, we can only see the indirect effects of the emitted light via the static lightmaps.

To bake emissive light into the static lightmap, we had to set the material's global illumination flags in our shader GUI. As we always set the flags to BakedEmissive, the light ends up in the baked lightmap. This is fine when emissive light is constant, but does not allow us to animate it.

To support both baked and realtime lighting for the emission, we have to make this configurable. We can do so by adding a choice for this to MyLightingShaderGUI, via the MaterialEditor.LightmapEmissionProperty method. Its single parameter is the property's indentation level.
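A minimal sketch of what this could look like, written as a standalone shader GUI rather than as the tutorial's actual MyLightingShaderGUI. The class name `EmissionGIExampleGUI` is hypothetical, and clearing the EmissiveIsBlack flag after a change is an assumption of this sketch, made so that the emission is not skipped by the GI system.

```csharp
using UnityEditor;
using UnityEngine;

// Hypothetical minimal shader GUI, not the tutorial's MyLightingShaderGUI.
// Editor scripts like this one belong in an Editor folder.
public class EmissionGIExampleGUI : ShaderGUI {

    public override void OnGUI (
        MaterialEditor editor, MaterialProperty[] properties
    ) {
        // Draw the default property fields first.
        base.OnGUI(editor, properties);

        EditorGUI.BeginChangeCheck();
        // Dropdown for the emission GI mode, at indentation level 2.
        editor.LightmapEmissionProperty(2);
        if (EditorGUI.EndChangeCheck()) {
            // Assumption of this sketch: always clear EmissiveIsBlack so
            // the chosen mode is actually used for every selected material.
            foreach (Material m in editor.targets) {
                m.globalIlluminationFlags &=
                    ~MaterialGlobalIlluminationFlags.EmissiveIsBlack;
            }
        }
    }
}
```

In the real shader GUI the call would sit next to the other emission controls, and the code that always forces the flags to BakedEmissive would have to go, otherwise it would overwrite the user's choice.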

The visual difference between baked and realtime GI is that the realtime lightmap usually has a much lower resolution than the baked one. So when the emission does not change and you use baked GI anyway, make sure to take advantage of its higher resolution.

1.5 Animating Emission

Realtime GI for emission is only possible for static objects. While the objects are static, the emission properties of their materials can be animated and will be picked up by the global illumination system. Let's try this out with a simple component that oscillates between a white and black emission color.

    using UnityEngine;

    public class EmissiveOscillator : MonoBehaviour {

        Material emissiveMaterial;

        void Start () {
            emissiveMaterial = GetComponent<MeshRenderer>().material;
        }

        void Update () {
            Color c = Color.Lerp(
                Color.white, Color.black,
                Mathf.Sin(Time.time * Mathf.PI) * 0.5f + 0.5f
            );
            emissiveMaterial.SetColor("_Emission", c);
        }
    }

Add this component to our emissive sphere. When in play mode, its emission will animate, but indirect light is not affected yet. We have to notify the realtime GI system that it has work to do. This can be done by invoking the Renderer.UpdateGIMaterials method of the appropriate mesh renderer.

    MeshRenderer emissiveRenderer;
    Material emissiveMaterial;

    void Start () {
        emissiveRenderer = GetComponent<MeshRenderer>();
        emissiveMaterial = emissiveRenderer.material;
    }

    void Update () {
        …
        emissiveMaterial.SetColor("_Emission", c);
        emissiveRenderer.UpdateGIMaterials();
    }

Invoking UpdateGIMaterials triggers a complete update of the object's emission, rendering it using its meta pass. This is necessary when the emission is more complex than a solid color, for example if we used a texture. If a solid color is sufficient, we can get away with a shortcut by invoking DynamicGI.SetEmissive with the renderer and the emission color. This is quicker than rendering the object with the meta pass, so take advantage of it when able.

    // emissiveRenderer.UpdateGIMaterials();
    DynamicGI.SetEmissive(emissiveRenderer, c);

2 Light Probe Proxy Volumes

Both baked and realtime GI are applied to dynamic objects via light probes. An object's position is used to interpolate light probe data, which is then used to apply GI. This works for fairly small objects, but is too crude for large ones.

As an example, add a long stretched cube to the test scene so that it is subject to varying lighting conditions. It should use our white material. As it is a dynamic cube, it ends up using a single point to determine its GI contribution. If we position it so that this point ends up shadowed, the entire cube becomes dark, which is obviously wrong. To make it very obvious, use a baked main light, so all lighting comes from the baked and realtime GI data.

To make light probes work for cases like this, we can use a light probe proxy volume, or LPPV for short.
