1 Introduction

In this work we study the combination of two recent ideas: residual connections and the Inception-v3 architecture. We replace the filter concatenation stage of the Inception architecture with residual connections.
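To make the difference between the two merge strategies concrete, here is a minimal channel-count sketch. The branch widths and the function names are hypothetical, and the 1x1 projection used to match the input depth before the addition is an assumption of this sketch rather than something stated in these notes.

```python
def concat_output_channels(branch_channels):
    """Filter concatenation: output depth is the sum of all branch depths."""
    return sum(branch_channels)


def residual_output_channels(in_channels, branch_channels):
    """Residual merge: the branch outputs are projected back to the input
    depth (assumed here to be a 1x1 convolution) and added to the input,
    so the output depth equals the input depth.

    Returns (output_channels, projection_weight_count).
    """
    merged = sum(branch_channels)
    # A 1x1 convolution from `merged` to `in_channels` channels (bias ignored).
    projection_weights = merged * in_channels
    return in_channels, projection_weights


# Hypothetical branch widths, chosen only for illustration.
branches = [32, 32, 64]
print(concat_output_channels(branches))
print(residual_output_channels(256, branches))
```

The point of the sketch is only that concatenation changes the depth of the activation, whereas an element-wise residual addition requires the branch output to have the same depth as the input.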

Besides a straightforward integration, we have also designed a new version named Inception-v4.

2 Related Work

However, the use of residual connections seems to improve the training speed greatly.

3 Architectural Choices

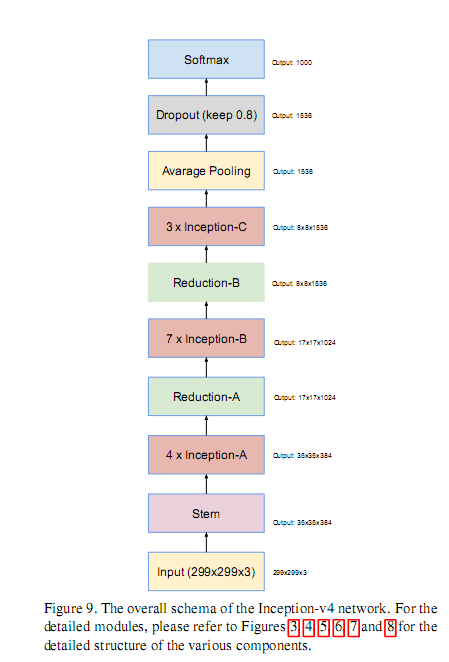

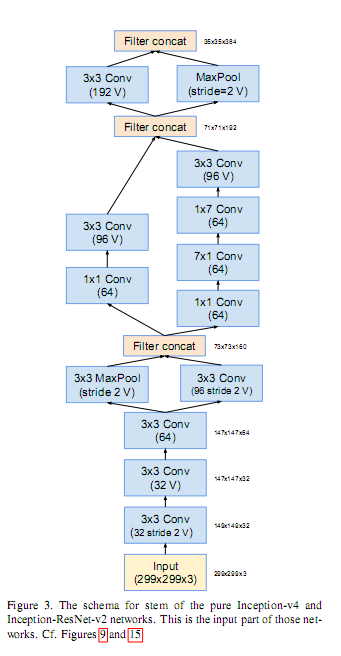

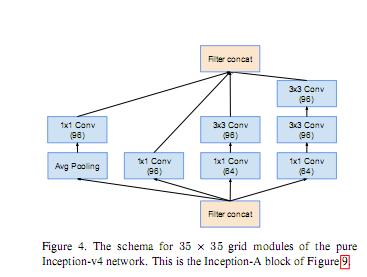

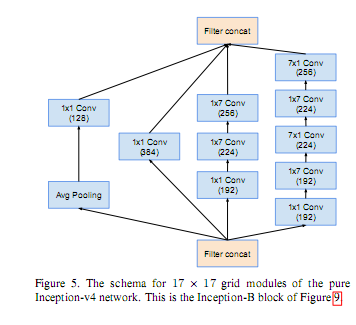

3.1 Inception-v4

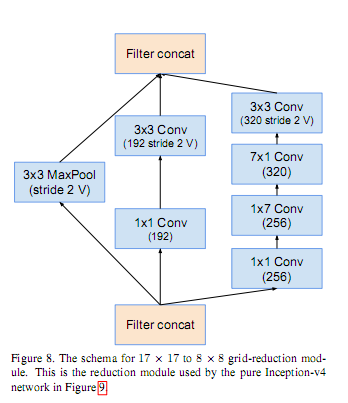

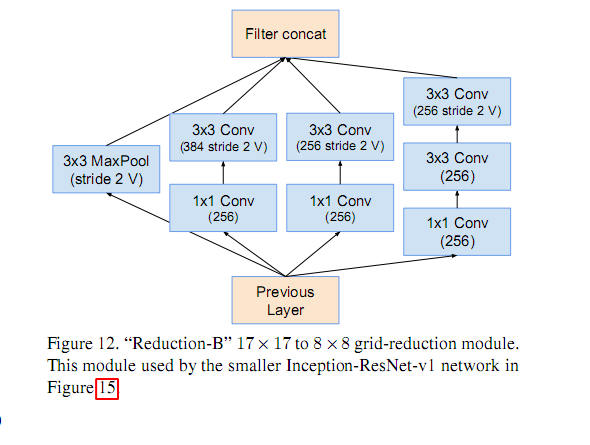

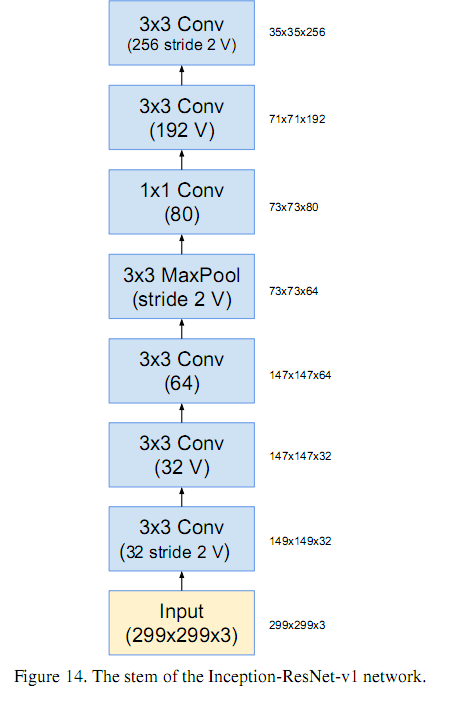

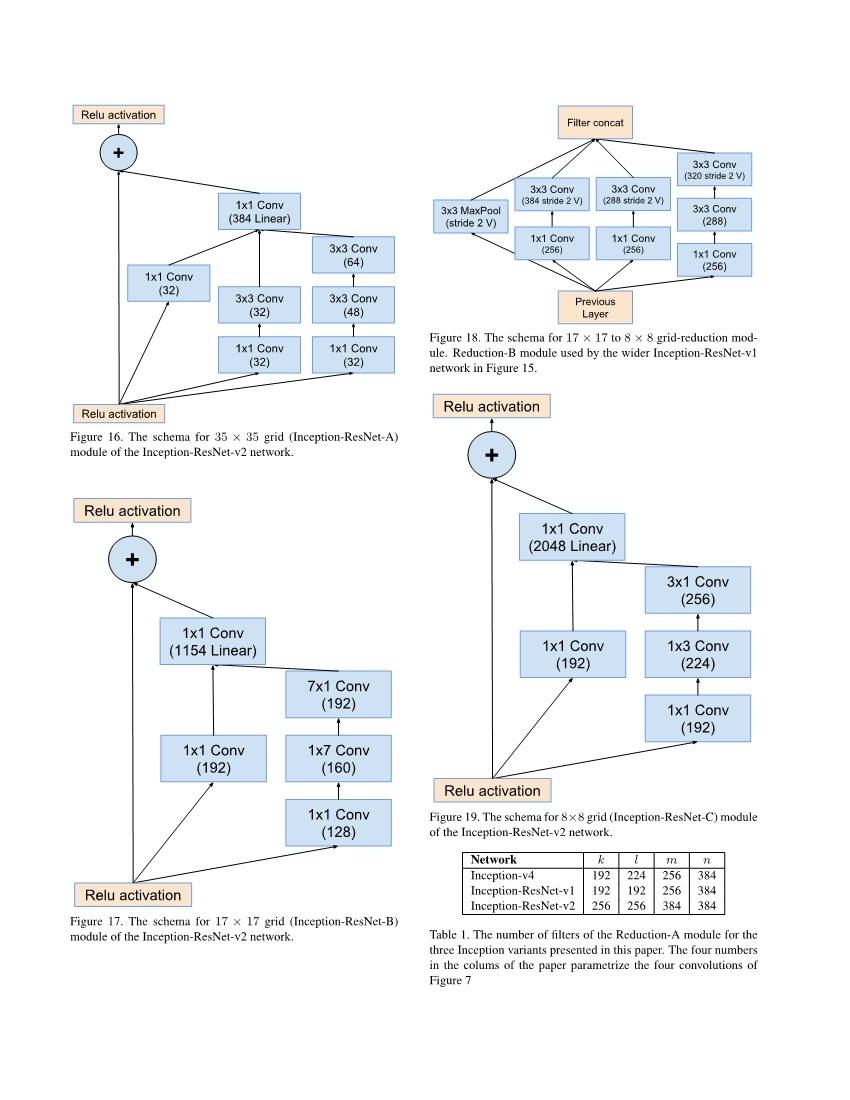

Convolutions marked with V are valid padded, meaning that the input patch of each unit is fully contained in the previous layer and the grid size of the output activation map is reduced accordingly.
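The effect of valid padding on the grid size can be worked out with a small helper. This is a sketch using the standard output-size formulas; the function name and the example sizes are illustrative, and the "same" mode is included only for contrast.

```python
import math


def conv_output_size(input_size, kernel_size, stride=1, padding="valid"):
    """Output size of a convolution along one spatial dimension."""
    if padding == "valid":
        # No zero padding: every input patch lies fully inside the previous
        # layer, so the grid shrinks by (kernel_size - 1) before striding.
        return (input_size - kernel_size) // stride + 1
    if padding == "same":
        # Zero padding is added so that only the stride reduces the grid.
        return math.ceil(input_size / stride)
    raise ValueError(f"unknown padding mode: {padding!r}")


# Illustrative sizes, not taken from the notes above.
print(conv_output_size(299, 3, stride=2, padding="valid"))  # 149
print(conv_output_size(35, 3, stride=1, padding="valid"))   # 33
print(conv_output_size(35, 3, stride=1, padding="same"))    # 35
```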

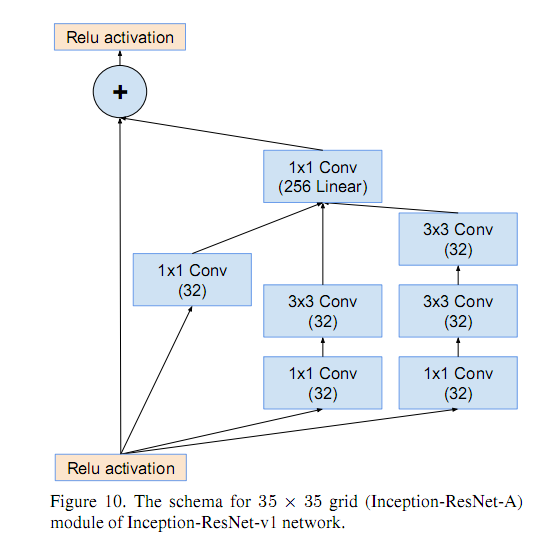

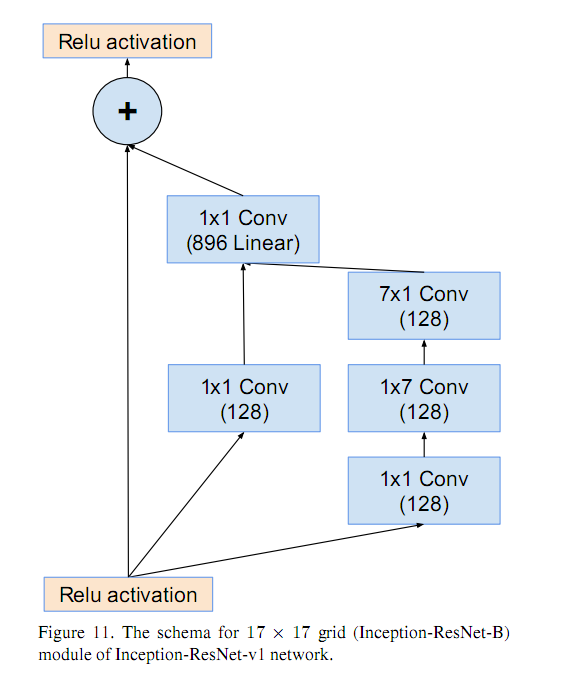

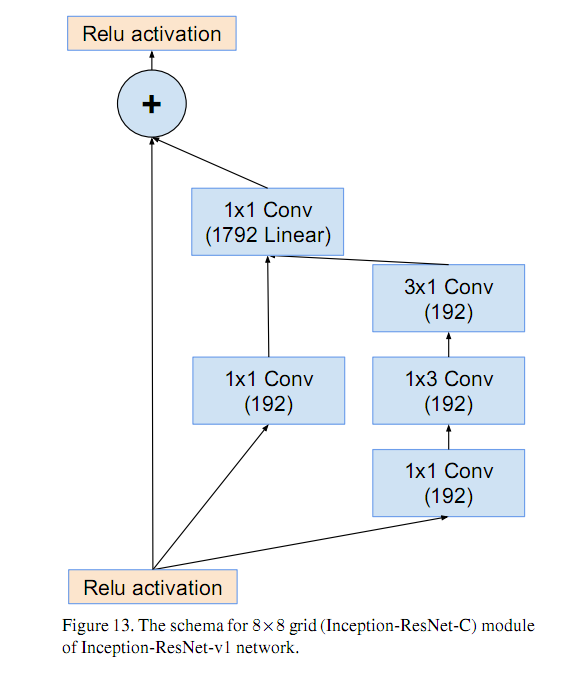

3.2 Residual Inception Blocks

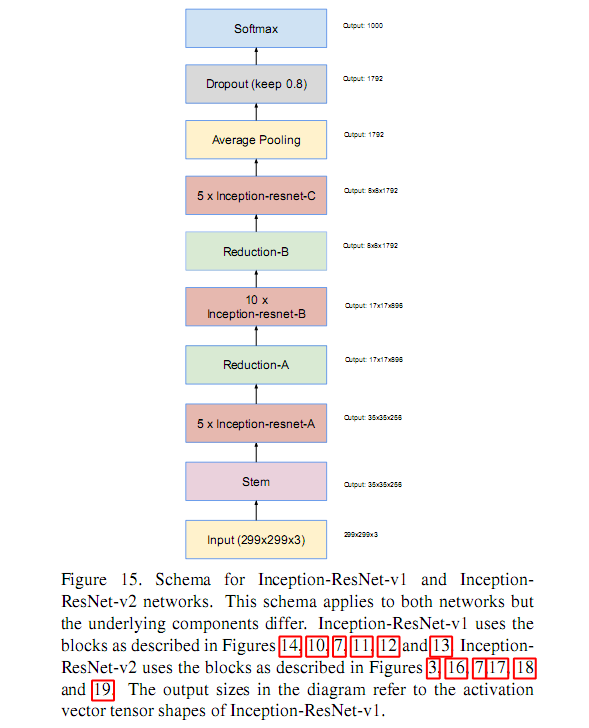

Inception-ResNet-v1 and Inception-ResNet-v2

Inception-ResNet-v1 has roughly the computational cost of Inception-v3, while Inception-ResNet-v2 matches the raw cost of the newly introduced Inception-v4. However, Inception-v4 proved to be significantly slower in practice.

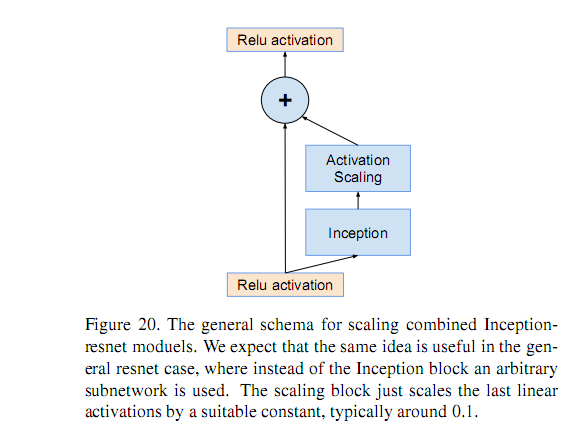

3.3 Scaling of the Residuals

We also found that if the number of filters exceeded 1000, the residual variants started to exhibit instabilities and the network simply 'died' early in training: the last layer before the average pooling started to produce only zeros after a few tens of thousands of iterations. This could not be prevented, either by lowering the learning rate or by adding an extra batch normalization to this layer.

We found that scaling down the residuals before adding them to the previous layer's activation seemed to stabilize the training. In general, we picked scaling factors between 0.1 and 0.3 to scale the residuals before adding them to the accumulated layer activations.

Even where the scaling was not strictly necessary, it never seemed to harm the final accuracy, but it helped to stabilize the training.
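The scaling step can be sketched in a few lines of plain Python. This is only an illustration of the arithmetic `shortcut + scale * residual` on flat lists; the function name, the default of 0.2, and the example values are assumptions, and a real network would apply the same operation to whole activation tensors.

```python
def scaled_residual_add(shortcut, residual, scale=0.2):
    """Return shortcut + scale * residual, element by element.

    `scale` is expected to lie roughly in the 0.1 to 0.3 range
    mentioned in the text; 0.2 is an arbitrary default for this sketch.
    """
    if len(shortcut) != len(residual):
        raise ValueError("shortcut and residual must have the same length")
    return [s + scale * r for s, r in zip(shortcut, residual)]


# Made-up values, purely for illustration.
shortcut = [1.0, -2.0, 0.5]     # accumulated activations on the skip path
residual = [10.0, 20.0, -30.0]  # output of the residual (Inception) branch
print(scaled_residual_add(shortcut, residual, scale=0.1))
```

With a small scale, a large residual branch output perturbs the accumulated activation only mildly, which is consistent with the stabilizing effect described above.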

A similar instability was observed by He et al., who suggest a two-phase training for very deep residual networks: first a warm-up phase with a lower learning rate, followed by a second phase with a high learning rate.
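A two-phase schedule of this kind can be written as a simple step function. The sketch below is an assumption-laden illustration: the function name, the warm-up length, and both learning-rate values are placeholders rather than settings given in these notes.

```python
def two_phase_lr(step, warmup_steps=500, warmup_lr=0.01, main_lr=0.1):
    """Learning rate for a given training step.

    Phase 1 (warm-up): a low learning rate for the first `warmup_steps` steps.
    Phase 2: a higher learning rate for the rest of training.
    All default values are hypothetical placeholders.
    """
    if step < 0:
        raise ValueError("step must be non-negative")
    return warmup_lr if step < warmup_steps else main_lr


# Print the learning rate around the phase boundary.
for step in (0, 499, 500, 10_000):
    print(step, two_phase_lr(step))
```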

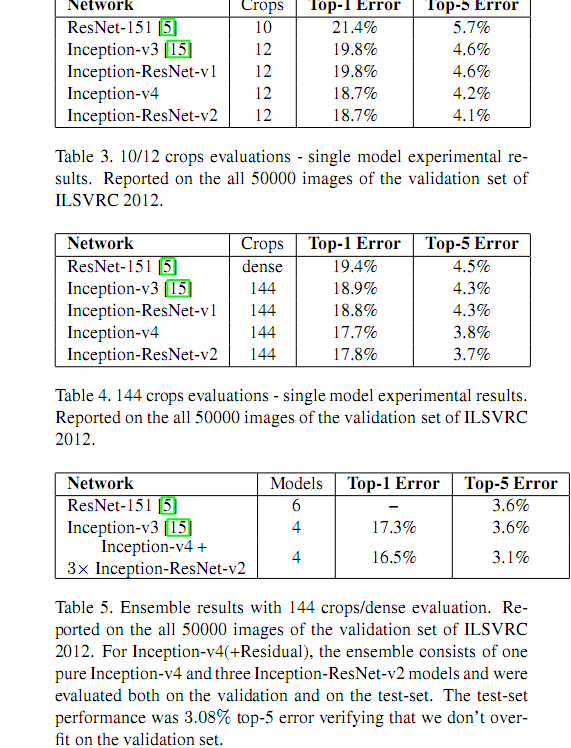

4 Results

5 Conclusion

- Inception-ResNet-v1: a hybrid Inception version that has a similar computational cost to Inception-v3.

- Inception-ResNet-v2: a costlier hybrid Inception version with significantly improved recognition performance.

- Inception-v4: roughly the same recognition performance as Inception-ResNet-v2.

Residual connections lead to dramatically improved training speed for the Inception architecture.

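This article explores combining residual connections with the Inception-v3 architecture and presents new variants such as Inception-v4. Experiments show that residual connections significantly speed up training, and that training can be stabilized by measures such as scaling the residuals.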
