LLMs:《Large Language Models A Survey综述调查》翻译与解读—概述了早期预训练语言模型发展史→介绍了3个流行的LLM家族及其它代表性模型→构建、增强和使用LLM的方法和技术→数据集和基准→未来方向和挑战

导读:这篇论文系统总结了LLM技术从统计模型到深度学习模型的发展历程,并概括当前三大主流LLM家族的代表模型及其特点,为读者提供了一个 LLMs 领域的全景视角。

背景:

>> 文章回顾了统计语言模型(SLMs)及早期神经网络语言模型(NLMs)。语言模型技术源于20世纪50年代,起初是基于n-gram统计机器学习算法。随后的神经网络语言模型(NLM)采用embedding技术解决了统计语言模型的数据稀疏性问题。

>> 随后提出预训练语言模型(PLMs)及大规模语言模型(LLMs)两个概念。预训练语言模型(PLM)是任务无关的,其学习得到的嵌入空间也是通用的。PLM采用预训练-微调范式进行训练。主流PLM有BERT、GPT系列、T5等。BERT使用双向训练,GPT采用单向自回归训练,T5提出将所有NLP任务统一为生成任务。

近年来LLM技术的成功主要因素:

>> 采用Transformer结构,可以进行高效预训练。

>> 预训练规模不断扩大,如PaLM以540B参数规模进行预训练。

>> 出现强化学习等新技术进行增强训练。

论文总结了三大主流LLM家族技术细节:

● GPT系列模型,以开放AI研发,175B的参数GPT-3首次展示强大语言理解能力。

● LLaMA系列,以元数据研发,13B参数模型性能超越GPT-3,开源可复现。

● PaLM系列,Facebook研发,以8B和62B参数两种规模,在多种任务上领先同级别模型。

核心要点:

>> 详细阐述了构建LLMs的各个环节,包括数据清理、分词方法、模型预训练、微调与指令微调等。

>> 系统介绍了LLMs的常用应用技巧,如提示设计以及外部知识增强等。

>> 文章选取多个重要数据集,对不同模型在这些数据集上的表现进行了对比,以观察模型在自然语言理解、推理、代码生成等多项能力上的优劣。

LLM的主要优势:如上下文学习能力、指令执行能力以及多步推理能力。

LLMs的主要挑战:比如模型效率问题、多模态处理能力、面对安全与伦理问题等。

未来研究方向:如模型效率、多模态、人机交互等。

该论文系统回顾了LLMs的历史发展与分类方法,并探讨了模型构建、应用及评估各个环节,对这一快速发展的研究领域给予了全面而深入的总结,对后续研究具有指导意义。

目录

《Large Language Models A Survey大型语言模型的综述调查》翻译与解读

四波浪潮:SLM(统计语言模型)→NLM(神经语言模型)→PLM(预训练语言模型)→LLM(大语言模型)

LLM:优于PLM的三大特点(规模更大/语言理解和生成能力更强/新兴能力【CoT/IF/MSR】),也可使用外部工具来增强和交互(收集反馈数据然后不断改进自身)

本文结构:第二部分(LLMs的最新技术)→第三部分(如何构建LLMs)→第四部分(LLMs的用途)→第五和第六部分(评估LLMs的流行数据集和基准)→第七部分(面临的挑战和未来方向)

II. LARGE LANGUAGE MODELS大型语言模型

TABLE I: High-level Overview of Popular Language Models

A. Early Pre-trained Neural Language Models早期预训练的神经语言模型

Bengio等人开发第一个NLM→Mikolov等人发布了RNNLM→基于RNN的变体(如LSTM和GRU),被广泛用于许多自然语言应用,包括机器翻译、文本生成和文本分类

诞生Transformer架构:NLM新的里程碑,比RNN更多的并行化+采用GPU+下游微调

基于Transformer的PLMs三大类:仅编码器、仅解码器和编码器-解码器模型

1)、仅编码器PLMs:只包含一个编码器网络、适合语言理解任务(比如文本分类)、使用掩码语言建模和下一句预测等目标进行预训练

BERT:3个模块(嵌入模块+Transformer编码器堆栈+全连接层)、2个任务(MLM和NSP),可添加一个分类器层实现多种语言理解任务,显著提高并成为仅编码器语言模型的基座

Fig. 3: Overall pre-training and fine-tuning procedures for BERT. Courtesy of [24]

ELECTRA(提出RTD替换MLM任务+小型生成器网络+训练一个判别模型)、XLM(两种方法将BERT扩展到跨语言语言模型=仅依赖于单语数据的无监督方法+利用平行数据的监督方法)

Fig. 7: High-level overview of GPT pretraining, and fine-tuning steps. Courtesy of OpenAI.

GPT-1:基于仅解码器的Transformer+生成式预训练【无标签语料库+自监督学习】+微调【下游任务有区别的微调】

GPT-2:基于数百万网页的WebText数据集+遵循GPT-1的模型设计+将层归一化移动到每个子块的输入处+自关注块之后添加额外的层归一化+修改初始化+词汇量扩展到5025+上下文扩展到1024

T5(将所有NLP任务都视为文本到文本生成任务)、mT5(基于T5的多语言变体+101种语言)

MASS(重构剩余部分句子片段+编码器将带有随机屏蔽片段句子作为输入+解码器预测被屏蔽的片段)、BART()

B. Large Language Model Families大型语言模型家族(基于Transformer的PLM+尺寸更大【数百亿到数千亿个参数】+表现更强):比如LLM的三大家族

Fig. 10: The high-level overview of RLHF. Courtesy of [59].

GPT家族(OpenAI开发+后期闭源):开源系列(GPT-1、GPT-2),闭源系列(GPT-3、InstrucGPT、ChatGPT、GPT-4、CODEX、WebGPT,只能API访问)

GPT-3(175B):1750亿参数+被视为史上第一个LLM(因其大规模尺寸+涌现能力)+仅需少量演示直接用于下游任务而无需任何微调+适应各种NLP任务

CODEX(2023年3月,即Copilot的底层技术):通用编程模型,解析自然语言并生成代码作为响应,采用GPT-3+微调GitHub的代码语料库

WebGPT:采用GPT-3+基于文本的网络浏览器回答+三步骤(学习模仿人类浏览行为+利用奖励函数来预测人类的偏好+强化学习和拒绝抽样来优化奖励函数)

InstructGPT:采用GPT-3+对人类反馈进行微调(RLHF对齐用户意图)+改善了真实性和有毒性

ChatGPT(2022年11月30日):一种聊天机器人+采用用户引导对话完成各种任务+基于GPT-3.5+为InstructGPT的兄弟模型

GPT-4(2023年3月):GPT家族中最新最强大的多模态LLM(呈现人类级别的性能)+基于大型文本语料库预训练+采用RLHF进行微调

Fig. 12: Training of LLaMA-2 Chat. Courtesy of [61].

LLaMA2(2023年7月/Meta与微软合作):预训练(公开可用)+监督微调+模型优化(RLHF/拒绝抽样/最近邻策略优化)

Alpaca:利用GPT-3.5生成52K指令数据+微调LLaMA-7B模型,比GPT-3.5相当但成本非常小

Guanaco:采用指令遵循数据+利用QLoRA微调LLaMA模型,单个48GB GPU上仅需24小时可微调65B参数模型,达到了ChatGPT的99.3%性能

Koala:采用指令遵循数据(侧重交互数据)+微调LLaMA模型

Mistral-7B:为了优越的性能和效率而设计,利用分组查询注意力来实现更快的推理,并配合滑动窗口注意力来有效地处理任意长度的序列

其它:Code LLaMA 、Gorilla、Giraffe、Vigogne、Tulu 65B、Long LLaMA、Stable Beluga

Fig. 14: Flan-PaLM finetuning consist of 473 datasets in above task categories. Courtesy of [74].

U-PaLM:使用UL2R(使用UL2的混合去噪目标来持续训练)在PaLM上进行训练+可节省约2倍的计算资源

Flan-PaLM:更多的任务(473个数据集/总共1836个任务)+更大的模型规模+链式思维数据

PaLM-2:计算效率更高+更好的多语言和推理能力+混合目标,快速、更高效的推理能力

Med-PaLM(特定领域的PaLM(医学领域问题回答),基于PaLM的指令提示调优)→Med-PaLM 2(医学领域微调和集成提示改进)

C. Other Representative LLMs其他代表性LLM

Fig. 15: comparison of instruction tuning with pre-train–finetune and prompting. Courtesy of [78].

Fig. 16: Model architecture details of Gopher with different number of parameters. Courtesy of [78].

Fig. 17: High-level model architecture of ERNIE 3.0. Courtesy of [81].

Fig. 20: Different OPT Models’ architecture details. Courtesy of [86].

Fig. 22: An overview of UL2 pretraining paradigm. Courtesy of [92].

Fig. 23: An overview of BLOOM architecture. Courtesy of [93].

BLOOM(基于ROOTS语料库+仅解码器的Transformer,176B)、GLM(双语(中英文)预训练语言模型+对飙100B级别的GPT-3,130B)、Pythia(由16个LLM组成的套件)

Orca(从GPT-4丰富的信号中学习+解释痕迹+多步思维过程,13B)、StarCoder(8K上下文长度,15B参数/1T的toekn)、KOSMOS(多模态大型语言模型+任意交错的模态数据)

Gemini(多模态模型,基于Transformer解码器+支持32k上下文长度,多个版本)

III. HOW LLMS ARE BUILT如何构建LLMs

数据准备(收集、清理、去重等)→分词→模型预训练(以自监督的学习方式)→指令调优→对齐

Fig. 25: This figure shows different components of LLMs.

A. Dominant LLM Architectures主流LLM架构(即基于Transformer)

最初是为使用GPU进行有效的并行计算而设计,核心是(自)注意机制,比递归和卷积机制更有效地捕获长期上下文

Fig. 26: High-level overview of transformer work. Courtesy of [44].

2) Encoder-Only:注意层都可以访问初始句子中的所有单词,适合需要理解整个序列的任务,例如句子分类、命名实体识别和抽取式问答,比如BERT

3) Decoder-Only(也被称为自回归模型):注意层只能访问句子中位于其前面的单词,最适合涉及文本生成的任务,比如GPT

4) Encoder-Decoder(也被称为序列到序列模型): 最适合基于给定输入生成新句子的任务,比如摘要、翻译或生成式问答

Falcon40B已证明仅对web数据进行适当过滤和去重就可以产生强大的模型,从CommonCrawl获得了5T的token+从REFINEDWEB数据集中提取了600B个token

数据过滤:目的(数据的高质量+模型的有效性),去除噪声、处理异常值、解决不平衡、文本预处理、处理歧义

去重:可提高泛化能力,NLP任务需要多样化和代表性的训练数据,主要依赖于高级特征之间的重叠比率来检测重复样本

C. Tokenizations分词:分词器依赖于词典、常用的是基于子词但存在OOV问题,三种流行的分词器

Tokenization是将文本序列分割成较小单元(token)的过程,对自然语言处理任务至关重要

BytePairEncoding:最初是一种数据压缩算法,主要是保持频繁词的原始形式,对不常用的词进行分解。通过识别字节级别的频繁模式来压缩数据,有效管理词汇表大小,同时很好地表示常见单词和形态形式

WordPieceEncoding:比如BERT和Electra等,确保训练数据中的所有字符都包含在词汇表中,以防止未知token,并根据频率进行标记化

SentencePieceEncoding:解决了具有嘈杂元素或非传统词边界的语言中的分词问题,不依赖于空格分隔

Fig. 28: Various positional encodings are employed in LLMs.

绝对位置编码 (APE):在Transformer模型中被用来保留序列顺序信息,通过在编码器和解码器堆栈底部将词的位置信息添加到输入嵌入中

相对位置编码(RPE):扩展了自注意力机制,考虑了输入元素之间的成对链接,以及作为密钥的附加组件和值矩阵的子组件。RPE将输入视为具有标签和有向边的完全连接图,并通过限制相对位置来进行预测

旋转位置编码(RoPE)通过使用旋转矩阵对词的绝对位置进行编码,并在自注意力中同时包含显式的相对位置细节,提供了灵活性、降低了词之间的依赖性,并能够改进线性自注意力

相对位置偏差(RPA,如ALiBi):旨在在推理时为解决训练中遇到的更长的序列进行外推。ALiBi在注意力分数中引入了一个偏差,对查询-键对的距离施加罚项,以促进在训练中未遇到的序列长度的外推

E. Model Pre-training预训练:LLM中的第一步,自监督训练,获得基本的语言理解能力

T1、自回归语言建模:模型尝试以自回归方式预测给定序列中的下一个标记,通常使用预测标记的对数似然作为损失函数

T2、掩码语言建模(或去噪自编码):通过遮蔽一些词,并根据周围的上下文预测被遮蔽的词

T3、混合专家:允许用较少的计算资源进行预训练,通过稀疏MoE层和门控网络或路由器来实现,路由器确定将哪些标记发送到哪个专家,并且可以将一个标记发送给多个专家

F. Fine-tuning and Instruction Tuning微调和指令调优

有监督微调SFT:早期语言模型(如BERT)经过自监督训练后,需要通过有标签数据进行特定任务的微调,以提高性能

微调的意义:微调不仅可以针对单一任务进行,还可以采用多任务微调的方法,这有助于提高结果并减少提示工程的复杂性

指令微调:对LLMs进行微调的重要原因之一是将其响应与人类通过提示提供的期望进行对齐,比如InstructGPT和Alpaca

RLHF(利用奖励模型从人类反馈中学习对齐)和RLAIF(从AI反馈中学习对齐)

贪婪搜索:它在每一步中选择最有可能的标记作为序列的下一个标记,简单快速,但可能失去一些时间连贯性和一致性

束搜索:它考虑N个最有可能的标记,直到达到预定义的最大序列长度或出现终止标记,选择具有最高总分数的标记序列作为输出

Top-k 采样:Top-k抽样从k个最可能的选项中随机选择一个标记,以概率分布的形式确定标记的优先级,并引入一定的随机性

Top-p抽样:Top-p抽样选择总概率超过阈值p的标记形成的“核心”,这种方法更适合于顶部k标记概率质量不大的情况,通常产生更多样化和创造性的输出

I. Cost-Effective Training/Inference/Adaptation/Compression高效的训练/推理/适应/压缩

优化训练—降存提速:ZeRO(优化内存)和RWKV(模型架构)是优化训练的两个主要框架,能够大幅提高训练速度和效率

Fig. 32: RWKV architecture. Courtesy of [141].

低秩自适应(LoRA)—减参提速:LoRA通过低秩矩阵近似差异化权重,显著减少了可训练参数数量,加快了训练速度,提高了模型效率,同时产生的模型体积更小,易于存储和共享

知识蒸馏—轻量级部署提速:知识蒸馏是从更大的模型中学习的过程,通过蒸馏多个模型的知识,创建更小的模型,从而在边缘设备上实现部署,有助于减小模型的体积和复杂度

Fig. 35: A generic knowledge distillation framework with student and teacher (Courtesy of [144]).

量化—降低精度提速:量化是指减小模型权重的精度,从而减小模型大小并加快推理速度,包括后训练量化和量化感知训练两种方法

IV. HOW LLMS ARE USED AND AUGMENTED如何使用和扩展LLMs

Fig. 36: How LLMs Are Used and Augmented.

限制:缺乏记忆、随机概率性的、缺乏实时信息、昂贵的GPU、存在幻觉问题

幻觉的分类:内在幻觉(与源材料直接冲突,引入事实错误或逻辑不一致)、外在幻觉(虽然不矛盾,但无法与源进行验证,包括推测性或不可验证的元素)

幻觉的衡量:需要结合统计和基于模型的度量方法,以及人工判断,例如使用ROUGE、BLEU等指标、基于信息提取模型的度量和基于自然语言推理数据集的度量

FactScore:最近的一个度量标准的例子,它既可以用于人类评估,也可以用于基于模型的评估

缓解LLM幻觉的挑战:包括产品设计和用户交互策略、数据管理与持续改进、提示工程和元提示设计,以及模型选择和配置

B. Using LLMs: Prompt Design and Engineering提示设计和工程

提示工程是塑造LLM及其他生成式人工智能模型交互和输出的迅速发展学科,需要结合领域知识、对模型的理解以及为不同情境量身定制提示的方法论

Prompt工程是一门不断发展的学科,通过设计最佳Prompt来实现特定目标

主要的Prompt工程方法包括:CoT、ToT、自我一致性、反思、专家提示、链条和轨道

8)自动提示工程:旨在自动化生成LLM的提示,利用LLM本身的能力生成和评估提示,进而创建更高质量的提示,更有可能引发期望的响应或结果

C. Augmenting LLMs through external knowledge - RAG通过外部知识扩充

Fig. 37: An example of synthesizing RAG with LLMs for question answering application [166].

Fig. 38: This is one example of synthesizing the KG as a retriever with LLMs [167].

FLARE:通过迭代地结合预测和信息检索,提高了大型语言模型的能力

使用外部工具是增强LLM功能的一种方式,不仅包括从外部知识源中检索信息,还包括访问各种外部服务或API

工具是LLM可以利用的外部功能或服务,扩展了LLM的任务范围,从基本的信息检索到与外部数据库或API的复杂交互,比如Toolformer

ART是一种将自动化的链式思维提示与外部工具使用相结合的提示工程技术,增强了LLM处理复杂任务的能力,尤其适用于需要内部推理和外部数据处理或检索的任务。

LLM代理是基于特定实例化的(增强的)LLM的系统,能够自主执行特定任务,通过与用户和环境的交互来做出决策,通常超出简单响应生成的范围

Prompt工程技术针对LLM代理的需要进行了专门的开发,例如ReWOO、ReAct、DERA等。这些技术旨在增强LLM代理的推理、行动和对话能力,使其能够处理各种复杂的决策和问题解决任务

ReWOO:目标是将推理过程与直接观察分离,让LLM先制定完整的推理框架与方案,然后在获取必要数据后执行

ReAct:会引导LLM同时产生推理解释与可执行行动,从而提升其动态解决问题的能力

DERA:利用多个专业化代理交互解决问题和做决定,每个代理有不同角色与职能,这种方式更高效地进行复杂决策

Fig. 39: HuggingGPT: An agent-based approach to use tools and planning [image courtesy of [171]]

Fig. 40: A LLM-based agent for conversational information seeking. Courtesy of [36].

V. POPULAR DATASETS FOR LLMS常用的数据集

Fig. 41: Dataset applications.

Fig. 42: Datasets licensed under different licenses.

TABLE II: LLM Datasets Overview.

基本任务数据集:用于评估LLM基本能力的基准和数据集,如自然问题、数学问题、代码生成等任务的数据集,包括Natural Questions、MMLU、MBPP等。

阅读理解数据集:用于阅读理解任务的数据集,如RACE、SQuAD、BoolQ等。

阅读推理数据集:包括MultiRC等,适用于需要跨句子推理的阅读理解任务的数据集

新兴任务数据集:评估LLM新兴能力的基准和数据集,包括GSM8K、MATH等,用于多步数学推理、解决数学问题等任务

常识推理数据集:如HellaSwag、AI2 Reasoning Challenge (ARC)等,用于评估LLM的常识推理能力,包括常识问题、科学推理等任务

自然常识问题数据集:例如PIQA、SIQA等,旨在评估LLM对社交情境和物理常识的推理能力

开放式问题回答数据集:包括OpenBookQA (OBQA)、TruthfulQA等,用于评估LLM在处理开放性问题时的能力。

指令元学习数据集:OPT-IML Bench,用于评估LLM在指令元学习方面的表现

VI. PROMINENT LLMS’ PERFORMANCE ON BENCHMARKS杰出LLM的基准表现

TABLE III: LLM categories and respective definitions.

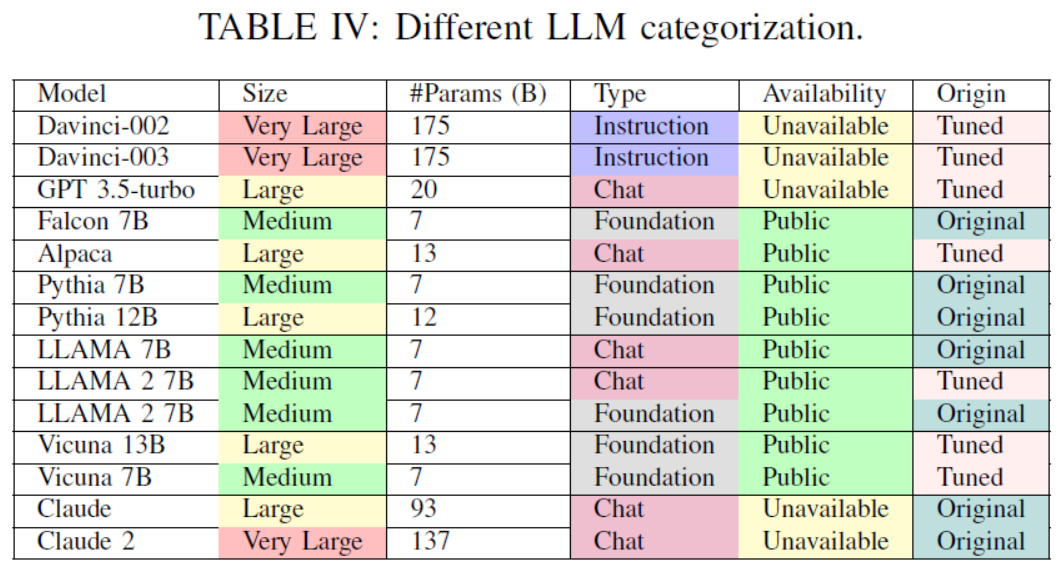

TABLE IV: Different LLM categorization.

TABLE V: Commonsense reasoning comparison.

TABLE VI: Symbolic reasoning comparison.

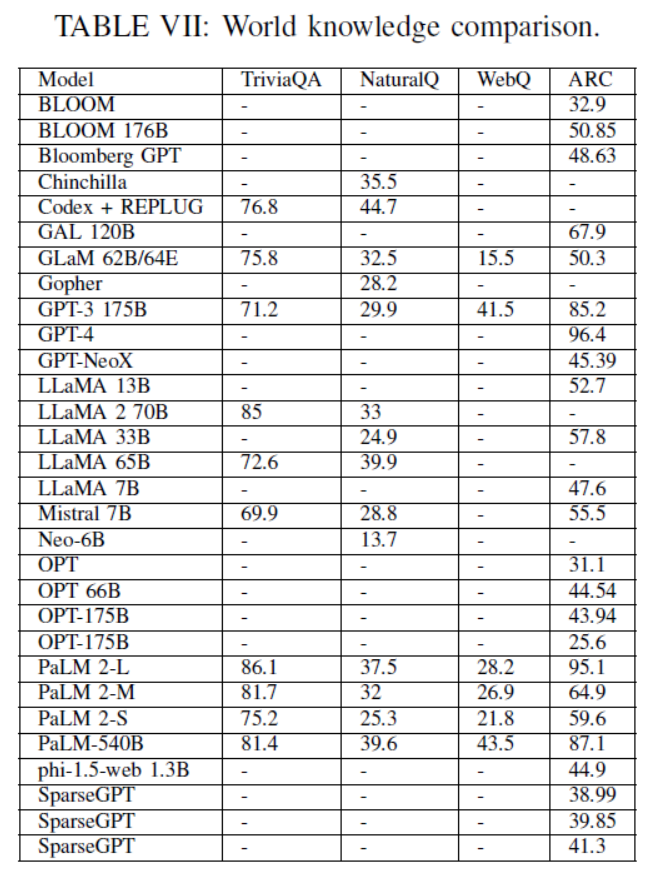

TABLE VII: World knowledge comparison.

TABLE VIII: Coding capability comparison.

TABLE IX: Arithmetic reasoning comparison.

TABLE X: Hallucination evaluation

A. Popular Metrics for Evaluating LLMs评估LLM的流行指标

代码生成需要使用不同的指标,如Pass@k和Exact Match (EM)

评估机器翻译等生成任务时,通常使用Rouge和BLEU等度量标准

LLM的分类和标签:将LLMs根据参数规模划分为小型、中型、大型和超大型4类;按预训练目的划分为基础模型、指令模型和聊天模型3类。此外,还区分原始模型和调优模型,以及公共模型和私有模型

B. LLMs’ Performance on Different Tasks在不同任务上的表现

LLMs在常识推理、世界知识、编码能力、算术推理和幻觉检测等方面表现出不同的性能

GPT-4在HellaSwag常识数据集上表现最好;Davinci-003在OBQA问答数据集上表现最佳

VII. CHALLENGES AND FUTURE DIRECTIONS挑战与未来方向

A. Smaller and more efficient Language Models更小、更高效的语言模型:如Phi系列小语言模型

针对大型语言模型的高成本和低效率,出现了对小型语言模型(SLMs)的研究趋势,如Phi-1、Phi-1.5和Phi-2

未来预计将继续研究如何训练更小、更高效的模型,使用参数有效的微调(PEFT)、师生学习和其他形式的蒸馏等技术

B. New Post-attention Architectural Paradigms新的后注意力机制的架构范式:探索注意力机制之外的新架构,如状态空间模型和MoE混合专家模型

传统的Transformer模块在当前LLM框架中起着关键作用,但越来越多的研究开始探索替代方案,被称为后注意力模型

结构状态空间模型(SSM)是一类重要的后注意力模型,如Mamba、Hyena和Striped Hyena

后注意力模型解决了传统基于注意力的架构在支持更大上下文窗口方面的挑战,为处理更长上下文提供了更有效的方法

MoE机制已经存在多年,但近年来在Transformer模型和LLMs中越来越受欢迎,被应用于最先进和最具性能的模型中

MoEs允许训练极大的模型,而在推理过程中只部分实例化,其中一些专家被关闭。 MoEs已成为最先进LLMs的重要组成部分,例如GPT-4、Mixtral、GLaM

C. Multi-modal Models多模态模型:研发多模态语言模型,融合文本、图片、视频等多种数据类型

未来的LLMs预计将是多模态的,能够统一处理文本、图像、视频、音频等多种数据类型,如LLAVA、GPT-4等

D. Improved LLM Usage and Augmentation techniques改进LLM的使用和增强技术

通过高级提示工程(提升问答引导)、工具使用或其他增强技术,可以解决LLMs的一些缺陷和限制,如幻觉等

预计将在LLMs的应用和使用方面进行持续和加速的研究,如个性化推荐、多代理系统等

E. Security and Ethical/Responsible AI安全和道德/负责任的人工智能:保障LLM模型安全性,减少对抗攻击,并注重LLM的公平性和负责任

需要研究确保LLMs对抗攻击和其他漏洞的稳健性和安全性,以防止它们被用于操纵人们或传播错误信息

正在努力解决LLMs的道德关切和偏见问题,以确保它们公平、无偏见,并能够负责任地处理敏感信息

Open Source Toolkits For LLM Development and Deployment用于LLM开发和部署的开源工具包

A. LLM Training/Inference Frameworks训练/推理框架

DeepSpeed、Transformers、Megatron-LM、BMTrain

FastChat、Skypilot、vLLM、text-generation-inference、LangChain、Are context-aware

OpenLLM、Embedchain、Autogen、BabyAGI

Guidance、PromptTools、PromptBench、Promptfoo

Faiss、Milvus、Qdrant、Weaviate、LlamaIndex、Pinecone

《Large Language Models A Survey大型语言模型的综述调查》翻译与解读

| 地址 | |

| 时间 | 2024年2月9日 |

| 作者 | Shervin Minaee, Tomas Mikolov, Narjes Nikzad, Meysam Chenaghlu, Richard Socher, Xavier Amatriain, Jianfeng Gao |

| 总结 |

Abstract摘要

| Abstract—Large Language Models (LLMs) have drawn a lot of attention due to their strong performance on a wide range of natural language tasks, since the release of ChatGPT in November 2022. LLMs’ ability of general-purpose language understanding and generation is acquired by training billions of model’s parameters on massive amounts of text data, as predicted by scaling laws [1], [2]. The research area of LLMs, while very recent, is evolving rapidly in many different ways. In this paper, we review some of the most prominent LLMs, including three popular LLM families (GPT, LLaMA, PaLM), and discuss their characteristics, contributions and limitations. We also give an overview of techniques developed to build, and augment LLMs. We then survey popular datasets prepared for LLM training, fine-tuning, and evaluation, review widely used LLM evaluation metrics, and compare the performance of several popular LLMs on a set of representative benchmarks. Finally, we conclude the paper by discussing open challenges and future research directions. | 自2022年11月ChatGPT发布以来,大型语言模型(LLM)因其在广泛的自然语言任务上的出色表现而引起了广泛的关注。LLM的通用语言理解和生成能力是通过在大量文本数据上训练数十亿个模型的参数来获得的,正如缩放定律所预测的那样[1],[2]。LLMs的研究领域虽然很新,但在许多不同方面迅速发展。在本文中,我们回顾了一些最著名的LLM,包括三个流行的LLM家族(GPT, LLaMA, PaLM),并讨论了它们的特点、贡献和局限性。我们还概述了用于构建和增强LLMs的技术。然后,我们调查了为LLMs训练、微调和评估而准备的流行数据集,回顾了广泛使用的LLMs评估指标,并比较了几种流行LLMs在一组代表性基准上的性能。最后,对本文的研究方向和面临的挑战进行了展望。 |

I. INTRODUCTION引言

| Language modeling is a long-standing research topic, dating back to the 1950s with Shannon’s application of information theory to human language, where he measured how well simple n-gram language models predict or compress natural language text [3]. Since then, statistical language modeling became fundamental to many natural language understanding and generation tasks, ranging from speech recognition, machine translation, to information retrieval [4], [5], [6]. | 语言建模是一个长期存在的研究课题,可以追溯到20世纪50年代,香农将信息论应用于人类语言的时候,他测量了简单n-gram语言模型预测或压缩自然语言文本的效果[3]。自那时以来,统计语言建模成为许多自然语言理解和生成任务的基础,范围从语音识别、机器翻译到信息检索[4]、[5]、[6]。 |

| The recent advances on transformer-based large language models (LLMs), pretrained on Web-scale text corpora, significantly extended the capabilities of language models (LLMs). For example, OpenAI’s ChatGPT and GPT-4 can be used not only for natural language processing, but also as general task solvers to power Microsoft’s Co-Pilot systems, for instance, can follow human instructions of complex new tasks performing multi-step reasoning when needed. LLMs are thus becoming the basic building block for the development of general-purpose AI agents or artificial general intelligence (AGI). | 最近基于Transformer的大型语言模型(LLMs)在Web规模文本语料库上预训练的进展,显着扩展了语言模型的能力。例如,OpenAI的ChatGPT和GPT-4不仅可以用于自然语言处理,还可以作为通用任务解决器,为微软的Co-Pilot系统提供动力,例如,可以在需要时遵循人类对复杂新任务的指令执行多步推理。。因此,LLM正在成为开发通用人工智能代理或通用人工智能(AGI)的基本构建块。 |

| As the field of LLMs is moving fast, with new findings, models and techniques being published in a matter of months or weeks [7], [8], [9], [10], [11], AI researchers and practitioners often find it challenging to figure out the best recipes to build LLM-powered AI systems for their tasks. This paper gives a timely survey of the recent advances on LLMs. We hope this survey will prove a valuable and accessible resource for students, researchers and developers. | 由于LLMs领域发展迅速,新的发现、模型和技术以几个月甚至几周的速度发布,因此人工智能研究人员和从业者常常发现很难找出为其任务构建LLM驱动的人工智能系统的最佳方法。本文及时调查了LLMs的最新进展。我们希望这项调查能成为学生、研究人员和开发人员宝贵且易于获取的资源。 |

Fig. 1: LLM Capabilities.

四波浪潮:SLM(统计语言模型)→NLM(神经语言模型)→PLM(预训练语言模型)→LLM(大语言模型)

| LLMs are large-scale, pre-trained, statistical language models based on neural networks. The recent success of LLMs is an accumulation of decades of research and development of language models, which can be categorized into four waves that have different starting points and velocity: statistical language models, neural language models, pre-trained language models and LLMs. | LLM是基于神经网络的大规模、预训练的统计语言模型。LLMs的最近成功是对语言模型研究和开发数十年积累的成果,可以分为四波浪潮:统计语言模型、神经语言模型、预训练语言模型和LLM,它们有不同的起点和速度。 |

SLM

| Statistical language models (SLMs) view text as a sequence of words, and estimate the probability of text as the product of their word probabilities. The dominating form of SLMs are Markov chain models known as the n-gram models, which compute the probability of a word conditioned on its immediate proceeding n − 1 words. Since word probabilities are estimated using word and n-gram counts collected from text corpora, the model needs to deal with data sparsity (i.e., assigning zero probabilities to unseen words or n-grams) by using smoothing, where some probability mass of the model is reserved for unseen n-grams [12]. N-gram models are widely used in many NLP systems. However, these models are incomplete in that they cannot fully capture the diversity and variability of natural language due to data sparsity. | >> 统计语言模型(SLM)将文本视为一系列单词,并估计文本的概率为它们单词概率的乘积。将文本视为单词序列。SLM的主要形式是被称为n-gram模型的马尔可夫链模型,它计算一个单词在其直接前n-1个单词的条件下出现的概率。由于单词概率是使用从文本语料库中收集的单词和n-gram计数来估计的,因此模型需要通过使用平滑来处理数据稀疏性(即为未见过的单词或n-gram分配零概率),其中模型的一些概率质量为未见过的n-gram保留[12]。N-gram模型广泛应用于许多自然语言处理系统。然而,这些模型是不完整的,因为由于数据稀疏性,它们无法充分捕捉自然语言的多样性和可变性。 |

NLM

| Early neural language models (NLMs) [13], [14], [15], [16] deal with data sparsity by mapping words to low-dimensional continuous vectors (embedding vectors) and predict the next word based on the aggregation of the embedding vectors of its proceeding words using neural networks. The embedding vectors learned by NLMs define a hidden space where the semantic similarity between vectors can be readily computed as their distance. This opens the door to computing semantic similarity of any two inputs regardless their forms (e.g., queries vs. documents in Web search [17], [18], sentences in different languages in machine translation [19], [20]) or modalities (e.g., image and text in image captioning [21], [22]). Early NLMs are task-specific models, in that they are trained on task-specific data and their learned hidden space is task-specific. | >> 早期的神经语言模型(NLMs)[13],[14],[15],[16]通过将单词映射到低维连续向量(嵌入向量)来处理数据稀疏性,并使用神经网络基于其前一个单词的嵌入向量的聚合来预测下一个单词。NLM学习的嵌入向量定义了一个隐藏空间,在这个空间中,向量之间的语义相似度可以很容易地计算为它们之间的距离。这为计算任意两个输入的语义相似度打开了大门,而不管它们的形式(例如,网络搜索中的查询与文档,语言翻译中不同语言中的句子)还是模态(例如,图像和文本在图像字幕中)。早期的NLM是特定于任务的模型,因为它们是在特定于任务的数据上训练的,它们学习到的隐藏空间是特定于任务的。 |

PLM

| Pre-trained language models (PLMs), unlike early NLMs, are task-agnostic. This generality also extends to the learned hidden embedding space. The training and inference of PLMs follows the pre-training and fine-tuning paradigm, where language models with recurrent neural networks [23] or transformers [24], [25], [26] are pre-trained on Web-scale unlabeled text corpora for general tasks such as word prediction, and then finetuned to specific tasks using small amounts of (labeled) task-specific data. Recent surveys on PLMs include [8], [27], [28]. | >> 预训练语言模型(PLMs),与早期的NLMs不同,是任务无关的。这种通用性也延伸到了学习的隐藏嵌入空间。PLM的训练和推理遵循预训练和微调范式,其中使用RNN[23]或Transformer[24],[25],[26]的语言模型在web规模的未标记文本语料库上进行预训练,用于单词预测等一般任务,然后使用少量(标记的)特定任务数据对特定任务进行微调。最近关于PLM的调查包括[8],[27],[28]。 |

LLM:优于PLM的三大特点(规模更大/语言理解和生成能力更强/新兴能力【CoT/IF/MSR】),也可使用外部工具来增强和交互(收集反馈数据然后不断改进自身)

| Large language models (LLMs) mainly refer to transformer-based neural language models 1 that contain tens to hundreds of billions of parameters, which are pretrained on massive text data, such as PaLM [31], LLaMA [32], and GPT-4 [33], as summarized in Table III. Compared to PLMs, LLMs are not only much larger in model size, but also exhibit stronger language understanding and generation abilities, and more importantly, emergent abilities that are not present in smaller-scale language models. As illustrated in Fig. 1, these emergent abilities include (1) in-context learning, where LLMs learn a new task from a small set of examples presented in the prompt at inference time, (2) instruction following, where LLMs, after instruction tuning, can follow the instructions for new types of tasks without using explicit examples, and (3) multi-step reasoning, where LLMs can solve a complex task by breaking down that task into intermediate reasoning steps as demonstrated in the chain-of-thought prompt [34]. LLMs can also be augmented by using external knowledge and tools [35], [36] so that they can effectively interact with users and environment [37], and continually improve itself using feedback data collected through interactions (e.g. via reinforcement learning with human feedback (RLHF)). | >> 大型语言模型(Large language models, LLM)主要是指基于Transformer的神经语言模型,包含数百亿到数千亿个参数,这些模型在大规模文本数据上进行了预训练,例如PaLM [31],LLaMA [32]和GPT-4 [33],如表3所总结。与PLMs相比,LLMs不仅在模型规模上更大,而且在语言理解和生成能力上更强,更重要的是,在规模较小的语言模型中不存在的新兴能力。如图1所示,这些突现能力包括 (1)上下文学习,LLMs在推断时通过提示中呈现的少量示例学习新任务, (2)遵循指令,经过指令调整后,LLMs可以按照新类型任务的指令执行任务,而无需使用明确的示例,以及 (3)多步推理。LLMs可以通过将任务分解为中间推理步骤来解决复杂任务,如链式思维提示中所示[34]。 LLM也可以通过使用外部知识和工具来增强[35],[36],这样它们就可以有效地与用户和环境进行交互[37],并使用通过交互收集的反馈数据(例如通过人类反馈的强化学习(RLHF))不断改进自身。 |

基于LLM的AI代理

| Through advanced usage and augmentation techniques, LLMs can be deployed as so-called AI agents: artificial entities that sense their environment, make decisions, and take actions. Previous research has focused on developing agents for specific tasks and domains. The emergent abilities demonstrated by LLMs make it possible to build general-purpose AI agents based on LLMs. While LLMs are trained to produce responses in static settings, AI agents need to take actions to interact with dynamic environment. Therefore, LLM-based agents often need to augment LLMs to e.g., obtain updated information from external knowledge bases, verify whether a system action produces the expected result, and cope with when things do not go as expected, etc. We will discuss in detail LLM-based agents in Section IV. | 通过先进的使用和增强技术,LLM可以被部署为所谓的AI代理:感知环境、做出决策并采取行动的人工实体。以前的研究主要集中在开发特定任务和领域的代理。LLM所展示的涌现能力使得基于LLM构建通用人工智能代理成为可能。虽然LLMs经过训练以在静态设置中产生响应,但AI代理需要采取行动以与动态环境交互。因此,基于LLMs的代理通常需要增强LLMs,以获取来自外部知识库的更新信息,验证系统行动是否产生预期结果,并处理事情不如预期时的情况等。我们将在第四部分详细讨论基于LLMs的代理。 |

本文结构:第二部分(LLMs的最新技术)→第三部分(如何构建LLMs)→第四部分(LLMs的用途)→第五和第六部分(评估LLMs的流行数据集和基准)→第七部分(面临的挑战和未来方向)

| In the rest of this paper, Section II presents an overview of state of the art of LLMs, focusing on three LLM families (GPT, LLaMA and PaLM) and other representative models. Section III discusses how LLMs are built. Section IV discusses how LLMs are used, and augmented for real-world applications Sections V and VI review popular datasets and benchmarks for evaluating LLMs, and summarize the reported LLM evaluation results. Finally, Section VII concludes the paper by summarizing the challenges and future research directions. | 在本文的其余部分中,第二部分概述了LLMs的最新技术,重点介绍了三个LLMs系列(GPT、LLaMA和PaLM)和其他代表性模型。第三部分讨论了LLMs是如何构建的。第四部分讨论了LLMs的用途,并增强了用于现实世界应用的LLMs。第五和第六部分回顾了用于评估LLMs的流行数据集和基准,并总结了报告的LLMs评估结果。最后,第七部分总结了本文面临的挑战和未来的研究方向。 |

Fig. 2: The paper structure.

II. LARGE LANGUAGE MODELS大型语言模型

| In this section we start with a review of early pre-trained neural language models as they are the base of LLMs, and then focus our discussion on three families of LLMs: GPT, LlaMA, and PaLM. Table I provides an overview of some of these models and their characteristics. | 在本节中,我们首先回顾早期预训练的神经语言模型,因为它们是LLM的基础,然后重点讨论三个LLM家族:GPT, LlaMA和PaLM。表1提供了其中一些模型及其特征的概述。 |

TABLE I: High-level Overview of Popular Language Models

A. Early Pre-trained Neural Language Models早期预训练的神经语言模型

Bengio等人开发第一个NLM→Mikolov等人发布了RNNLM→基于RNN的变体(如LSTM和GRU),被广泛用于许多自然语言应用,包括机器翻译、文本生成和文本分类

| Language modeling using neural networks was pioneered by [38], [39], [40]. Bengio et al. [13] developed one of the first neural language models (NLMs) that are comparable to n-gram models. Then, [14] successfully applied NLMs to machine translation. The release of RNNLM (an open source NLM toolkit) by Mikolov [41], [42] helped significantly popularize NLMs. Afterwards, NLMs based on recurrent neural networks (RNNs) and their variants, such as long short-term memory (LSTM) [19] and gated recurrent unit (GRU) [20], were widely used for many natural language applications including machine translation, text generation and text classification [43]. | 使用神经网络进行语言建模是由[38],[39],[40]开创的。 >> Bengio等人[13]开发了第一个可与n-gram模型相媲美的神经语言模型(NLM)。然后,[14]成功地将NLM应用于机器翻译。 >> Mikolov[41],[42]发布的RNNLM(一个开源的NLM工具包)极大地促进了NLM的普及。 >> 之后,基于循环神经网络(RNN)及其变体的NLM,如长短期记忆(LSTM)和门控循环单元(GRU),被广泛用于许多自然语言应用,包括机器翻译、文本生成和文本分类。 |

诞生Transformer架构:NLM新的里程碑,比RNN更多的并行化+采用GPU+下游微调

| Then, the invention of the Transformer architecture [44] marks another milestone in the development of NLMs. By applying self-attention to compute in parallel for every word in a sentence or document an “attention score” to model the influence each word has on another, Transformers allow for much more parallelization than RNNs, which makes it possible to efficiently pre-train very big language models on large amounts of data on GPUs. These pre-trained language models (PLMs) can be fine-tuned for many downstream tasks. | 然后,Transformer架构的发明[44]标志着NLM发展的另一个里程碑。通过应用自注意力机制来并行计算句子中的每个单词,或者记录一个“注意力分数”来模拟每个单词对另一个单词的影响,Transformers允许比RNN更多的并行化,这使得可以在GPU上有效地对大量数据进行预训练非常大的语言模型。这些预训练语言模型(PLMs)可以针对许多下游任务进行微调。 |

基于Transformer的PLMs三大类:仅编码器、仅解码器和编码器-解码器模型

| We group early popular Transformer-based PLMs, based on their neural architectures, into three main categories: encoderonly, decoder-only, and encoder-decoder models. Comprehensive surveys of early PLMs are provided in [43], [28]. | 根据它们的神经结构,我们将早期流行的基于Transformer的PLMs分为三大类:仅编码器、仅解码器和编码器-解码器模型。文献[43]、[28]对早期PLM进行了全面调查。 |

1)、仅编码器PLMs:只包含一个编码器网络、适合语言理解任务(比如文本分类)、使用掩码语言建模和下一句预测等目标进行预训练

| 1) Encoder-only PLMs: As the name suggests, the encoderonly models only consist of an encoder network. These models are originally developed for language understanding tasks, such as text classification, where the models need to predict a class label for an input text. Representative encoder-only models include BERT and its variants, e.g., RoBERTa, ALBERT, DeBERTa, XLM, XLNet, UNILM, as to be described below. | 1)仅编码器PLMs:正如名称所示,仅编码器模型只包含一个编码器网络。这些模型最初是为语言理解任务开发的,例如文本分类,在这些任务中,模型需要预测输入文本的类别标签。代表性的仅编码器模型包括BERT及其变体,例如RoBERTa、ALBERT、DeBERTa、XLM、XLNet、UNILM等。如下所述。 |

BERT:3个模块(嵌入模块+Transformer编码器堆栈+全连接层)、2个任务(MLM和NSP),可添加一个分类器层实现多种语言理解任务,显著提高并成为仅编码器语言模型的基座

| BERT (Birectional Encoder Representations from Transformers) [24] is one of the most widely used encoder-only language models. BERT consists of three modules: (1) an embedding module that converts input text into a sequence of embedding vectors, (2) a stack of Transformer encoders that converts embedding vectors into contextual representation vectors, and (3) a fully connected layer that converts the representation vectors (at the final layer) to one-hot vectors. BERT is pre-trained uses two objectives: masked language modeling (MLM) and next sentence prediction. The pre-trained BERT model can be fine-tuned by adding a classifier layer for many language understanding tasks, ranging from text classification, question answering to language inference. A high-level overview of BERT framework is shown in Fig 3. As BERT significantly improved state of the art on a wide range of language understanding tasks when it was published, the AI community was inspired to develop many similar encoder-only language models based on BERT. | BERT (Birectional Encoder Representations from Transformers)[24]是最广泛使用的仅编码器语言模型之一。BERT由三个模块组成: (1)将输入文本转换为嵌入向量序列的嵌入模块, (2)一堆Transformer编码器,将嵌入向量转换为上下文表示向量,以及 (3)一个完全连接的层,将表示向量(在最终层)转换为one-hot向量。 BERT的预训练使用两个目标:掩模语言建模(MLM)和下一句预测(NSP)。 预训练的BERT模型可以通过添加一个分类器层进行许多语言理解任务(从文本分类、问答到语言推理)的微调。 BERT框架的高级概述如图3所示。由于BERT在发布时显著提高了广泛的语言理解任务的技术水平,人工智能社区受到启发,基于BERT开发了许多类似的仅编码器语言模型 |

Fig. 3: Overall pre-training and fine-tuning procedures for BERT. Courtesy of [24]

RoBERTa(提高鲁棒性=修改关键超参数+删除NSP+更大的微批量和学习率)、ALBERT(降耗提速=嵌入矩阵拆分+使用组间分割的重复层)、DeBERTa(两种技术【解缠注意力机制+增强的掩码解码器】+新颖的虚拟对抗训练方法来微调)

| RoBERTa [25] significantly improves the robustness of BERT using a set of model design choices and training strategies, such as modifying a few key hyperparameters, removing the next-sentence pre-training objective and training with much larger mini-batches and learning rates. ALBERT [45] uses two parameter-reduction techniques to lower memory consumption and increase the training speed of BERT: (1) splitting the embedding matrix into two smaller matrices, and (2) using repeating layers split among groups. DeBERTa (Decodingenhanced BERT with disentangled attention) [26] improves the BERT and RoBERTa models using two novel techniques. The first is the disentangled attention mechanism, where each word is represented using two vectors that encode its content and position, respectively, and the attention weights among words are computed using disentangled matrices on their contents and relative positions, respectively. Second, an enhanced mask decoder is used to incorporate absolute positions in the decoding layer to predict the masked tokens in model pre-training. In addition, a novel virtual adversarial training method is used for fine-tuning to improve models’ generalization. | >> RoBERTa[25]使用一组模型设计选择和训练策略显著提高了BERT的鲁棒性,例如修改几个关键的超参数,删除下一个句子的预训练目标以及使用更大的微批量和学习率进行训练。 >> ALBERT[45]使用两种参数约简技术来降低内存消耗并提高BERT的训练速度:(1)将嵌入矩阵拆分为两个较小的矩阵,(2)使用在各组之间分割的重复层。 >> DeBERTa (增强的解码BERT与解缠注意力)[26]利用两种新技术改进了BERT和RoBERTa模型。第一个是解缠注意力机制,其中每个单词使用两个向量来编码其内容和位置,而单词之间的注意力权重使用关于它们的内容和相对位置的解缠矩阵进行计算。其次,在解码层中使用了增强的掩码解码器来预测模型预训练中的掩码token。此外,还使用了一种新颖的虚拟对抗训练方法来进行微调,以提高模型的泛化能力。 |

ELECTRA(提出RTD替换MLM任务+小型生成器网络+训练一个判别模型)、XLM(两种方法将BERT扩展到跨语言语言模型=仅依赖于单语数据的无监督方法+利用平行数据的监督方法)

| ELECTRA [46] uses a new pre-training task, known as replaced token detection (RTD), which is empirically proven to be more sample-efficient than MLM. Instead of masking the input, RTD corrupts it by replacing some tokens with plausible alternatives sampled from a small generator network. Then, instead of training a model that predicts the original identities of the corrupted tokens, a discriminative model is trained to predict whether a token in the corrupted input was replaced by a generated sample or not. RTD is more sample-efficient than MLM because the former is defined over all input tokens rather than just the small subset being masked out, as illustrated in Fig 4. XLMs [47] extended BERT to cross-lingual language models using two methods: (1) a unsupervised method that only relies on monolingual data, and (2) a supervised method that leverages parallel data with a new cross-lingual language model objective, as illustrated in Fig 5. XLMs had obtained state-of-the-art results on cross-lingual classification, unsupervised and supervised machine translation, at the time they were proposed. | >> ELECTRA[46]使用了一种新的预训练任务,称为替换标记检测(RTD),经验上证明比MLM更具样本效率。RTD不是对输入进行屏蔽,而是用从小型生成器网络中采样的合理替代方案替换一些标记,从而破坏输入。然后,与其训练一个模型来预测受损标记的原始标识相反,训练了一个判别模型来预测损坏输入中的令牌是否被生成的样本取代。RTD比MLM更具样本效率,因为前者是基于所有输入标记而不仅仅是被屏蔽的小子集来定义的,如图4所示。 >> XLM[47]使用两种方法将BERT扩展到跨语言语言模型: (1)一种仅依赖于单语数据的无监督方法, (2)一种利用新的跨语言语言模型目标并利用平行数据的监督方法,如图5所示。在提出XLM时,它们已经在跨语言分类、无监督和有监督机器翻译方面获得了最先进的结果。 |

Fig. 4: A comparison between replaced token detection and masked language modeling. Courtesy of [46].

Fig. 5: Cross-lingual language model pretraining. The MLM objective is similar to BERT, but with continuous streams of text as opposed to sentence pairs. The TLM objective extends MLM to pairs of parallel sentences. To predict a masked English word, the model can attend to both the English sentence and its French translation, and is encouraged to align English and French representations. Courtesy of [47].

利用自回归(解码器)模型的优点的一些仅编码器:XLNet(基于Transformer-XL+预训练采用广义自回归+MLM中采用连续的文本流而非句子对+TLM将MLM扩展到平行句对)、UNILM(统一三种类型的语言建模任务=单向/双向/序列到序列预测)

| There are also encoder-only language models that leverage the advantages of auto-regressive (decoder) models for model training and inference. Two examples are XLNet and UNILM. XLNet [48] is based on Transformer-XL, pre-trained using a generalized autoregressive method that enables learning bidirectional contexts by maximizing the expected likelihood over Fig. 5: Cross-lingual language model pretraining. The MLM objective is similar to BERT, but with continuous streams of text as opposed to sentence pairs. The TLM objective extends MLM to pairs of parallel sentences. To predict a masked English word, the model can attend to both the English sentence and its French translation, and is encouraged to align English and French representations. Courtesy of [47]. all permutations of the factorization order. UNILM (UNIfied pre-trained Language Model) [49] is pre-trained using three types of language modeling tasks: unidirectional, bidirectional, and sequence-to-sequence prediction. This is achieved by employing a shared Transformer network and utilizing specific self-attention masks to control what context the prediction is conditioned on, as illustrated in Fig 6. The pre-trained model can be fine-tuned for both natural language understanding and generation tasks. Fig. 6: Overview of unified LM pre-training. The model parameters are shared across the LM objectives (i.e., bidirectional LM, unidirectional LM, and sequence-to-sequence LM). Courtesy of [49]. | 还有一些仅编码器的语言模型,利用自回归(解码器)模型的优点进行模型训练和推理。其中两个例子是XLNet和UNILM。 >> XLNet[48]基于Transformer-XL,使用广义自回归方法进行预训练,可以通过最大化图5:跨语言模型预训练的期望似然来学习双向上下文。MLM的目标类似于BERT,但是使用连续的文本流,而不是句子对。TLM的目标是将MLM扩展到平行句对。为了预测一个被屏蔽的英文单词,模型可以同时关注英文句子及其法语翻译,并被鼓励对齐英语和法语表示。由[47]提供。分解顺序的所有排列。 >> UNILM (UNIfied pre-trained Language Model,统一预训练语言模型)[49]使用三种类型的语言建模任务进行预训练:单向、双向和序列到序列预测。这是通过使用共享的Transformer网络和利用特定的自注意力掩码来控制预测所依赖的上下文实现的,如图6所示。预训练的模型可以对自然语言理解和生成任务进行微调。图6:统一LM预训练概述。模型参数在LM目标之间共享(即双向LM、单向LM和序列到序列LM)。由[49]提供。 |

Fig. 6: Overview of unified LM pre-training. The model parameters are shared across the LM objectives (i.e., bidirec-tional LM, unidirectional LM, and sequence-to-sequence LM). Courtesy of [49].

2)、仅解码器PLMs:

| 2) Decoder-only PLMs: Two of the most widely used decoder-only PLMs are GPT-1 and GPT-2, developed by OpenAI. These models lay the foundation to more powerful LLMs subsequently, i.e., GPT-3 and GPT-4. | 2)仅解码器PLMs:两个最广泛使用的纯解码器PLM是由OpenAI开发的GPT-1和GPT-2。这些模型为后来更强大的LLM,即GPT-3和GPT-4奠定了基础。 |

Fig. 7: High-level overview of GPT pretraining, and fine-tuning steps. Courtesy of OpenAI.

GPT-1:基于仅解码器的Transformer+生成式预训练【无标签语料库+自监督学习】+微调【下游任务有区别的微调】

| GPT-1 [50] demonstrates for the first time that good performance over a wide range of natural language tasks can be obtained by Generative Pre-Training (GPT) of a decoder-only Transformer model on a diverse corpus of unlabeled text in a self-supervised learning fashion (i.e., next word/token predic-tion), followed by discriminative fine-tuning on each specific downstream task (with much fewer samples), as illustrated in Fig 7. GPT-1 paves the way for subsequent GPT models, with each version improving upon the architecture and achieving better performance on various language tasks. | GPT-1[50]首次证明,在各种未标记文本语料库上,通过自监督学习方式(即下一个单词/token预测)对仅解码器的Transformer模型进行生成式预训练(GPT),可以在广泛的自然语言任务上获得良好的性能,然然后在每个具体的下游任务上进行有区别的微调(样本数量少的多),如图7所示。GPT-1为后续的GPT模型铺平了道路,每个版本都对架构进行了改进,并在各种语言任务上实现了更好的性能。 |

GPT-2:基于数百万网页的WebText数据集+遵循GPT-1的模型设计+将层归一化移动到每个子块的输入处+自关注块之后添加额外的层归一化+修改初始化+词汇量扩展到5025+上下文扩展到1024

| GPT-2 [51] shows that language models are able to learn to perform specific natural language tasks without any explicit supervision when trained on a large WebText dataset consisting of millions of webpages. The GPT-2 model follows the model designs of GPT-1 with a few modifications: Layer normalization is moved to the input of each sub-block, additional layer normalization is added after the final self-attention block, initialization is modified to account for the accumulation on the residual path and scaling the weights of residual layers, vocabulary size is expanded to 50,25, and context size is increased from 512 to 1024 tokens. | GPT-2[51]表明,在由数百万个网页组成的大型WebText数据集上训练时,语言模型能够在没有任何明确监督的情况下学习执行特定的自然语言任务。GPT-2模型遵循GPT-1的模型设计,但做了一些修改:将层归一化移动到每个子块的输入处,在最终的自关注块之后添加额外的层归一化,修改初始化以考虑残差路径上的积累和残差层的权重缩放,词汇量扩展到5025,上下文大小从512增加到1024。 |

3)、编码器-解码器PLMs:

| 3) Encoder-Decoder PLMs: In [52], Raffle et al. shows that almost all NLP tasks can be cast as a sequence-to-sequence generation task. Thus, an encoder-decoder language model, by design, is a unified model in that it can perform all natural language understanding and generation tasks. Representative encoder-decoder PLMs we will review below are T5, mT5, MASS, and BART. | 3)编码器-解码器PLMs:在[52]中,Raffle等人表明,几乎所有的NLP任务都可以转换为序列到序列的生成任务。因此,编码器-解码器语言模型在设计上是一个统一的模型,因为它可以执行所有自然语言理解和生成任务。我们将在下面回顾的编码器-解码器PLMs包括T5, mT5, MASS和BART。 |

T5(将所有NLP任务都视为文本到文本生成任务)、mT5(基于T5的多语言变体+101种语言)

| T5 [52] is a Text-to-Text Transfer Transformer (T5) model, where transfer learning is effectively exploited for NLP via an introduction of a unified framework in which all NLP tasks are cast as a text-to-text generation task. mT5 [53] is a multilingual variant of T5, which is pre-trained on a new Common Crawlbased dataset consisting of texts in 101 languages. | T5[52]是一个文本到文本Transformer(T5)模型,将所有NLP任务都视为文本到文本生成任务,有效地利用了迁移学习。 mT5[53]是T5的多语言变体,,它在一个包含101种语言文本的新型Common Crawl数据集上进行了预训练。 |

MASS(重构剩余部分句子片段+编码器将带有随机屏蔽片段句子作为输入+解码器预测被屏蔽的片段)、BART()

| MASS (MAsked Sequence to Sequence pre-training) [54] adopts the encoder-decoder framework to reconstruct a sentence fragment given the remaining part of the sentence. The encoder takes a sentence with randomly masked fragment (several consecutive tokens) as input, and the decoder predicts the masked fragment. In this way, MASS jointly trains the encoder and decoder for language embedding and generation, respectively. | MASS (mask Sequence to Sequence pre-training)[54]采用编码器-解码器框架对给定句子剩余部分的句子片段进行重构。编码器将带有随机屏蔽片段(几个连续的标记)的句子作为输入,解码器预测被屏蔽的片段。这样,MASS同时训练编码器和解码器,分别用于语言嵌入和语言生成。 |

| BART [55] uses a standard sequence-to-sequence translation model architecture. It is pre-trained by corrupting text with an arbitrary noising function, and then learning to reconstruct the original text. | BART[55]使用标准的序列到序列翻译模型架构。它通过使用任意的加噪函数对文本进行破坏性处理进行预训练,然后学习重建原始文本。 |

B. Large Language Model Families大型语言模型家族(基于Transformer的PLM+尺寸更大【数百亿到数千亿个参数】+表现更强):比如LLM的三大家族

| Large language models (LLMs) mainly refer to transformer-based PLMs that contain tens to hundreds of billions of parameters. Compared to PLMs reviewed above, LLMs are not only much larger in model size, but also exhibit stronger language understanding and generation and emergent abilities that are not present in smaller-scale models. In what follows, we review three LLM families: GPT, LLaMA, and PaLM, as illustrated in Fig 8. | 大型语言模型(LLM)主要是指基于Transformer的PLM,包含数百亿到数千亿个参数。与上面提到的PLM相比,LLM不仅模型尺寸更大,而且在语言理解和生成方面表现更强,并且具有在规模较小的模型中不存在的新兴能力。接下来,我们将回顾三个LLM家族:GPT、LLaMA和PaLM,如图8所示。 |

Fig. 8: Popular LLM Families.

Fig. 9: GPT-3 shows that larger models make increasingly efficient use of in-context information. It shows in-context learning performance on a simple task requiring the model to remove random symbols from a word, both with and without a natural language task description. Courtesy of [56].图9:GPT-3显示,更大的模型对上下文信息的利用越来越有效。它显示了在一个简单任务上的上下文学习性能,该任务要求模型从单词中删除随机符号,包括有和没有自然语言任务描述。由[56]提供。

Fig. 10: The high-level overview of RLHF. Courtesy of [59].

GPT家族(OpenAI开发+后期闭源):开源系列(GPT-1、GPT-2),闭源系列(GPT-3、InstrucGPT、ChatGPT、GPT-4、CODEX、WebGPT,只能API访问)

| 1) The GPT Family: Generative Pre-trained Transformers (GPT) are a family of decoder-only Transformer-based language models, developed by OpenAI. This family consists of GPT-1, GPT-2, GPT-3, InstrucGPT, ChatGPT, GPT-4, CODEX, and WebGPT. Although early GPT models, such as GPT-1 and GPT-2, are open-source, recent models, such as GPT-3 and GPT-4, are close-source and can only be accessed via APIs. GPT-1 and GPT-2 models have been discussed in the early PLM subsection. We start with GPT-3 below. | 1) GPT家族:生成预训练Transformer(GPT)是一个由OpenAI开发的仅基于解码器的Transformer语言模型家族。该家族包括GPT-1、GPT-2、GPT-3、InstrucGPT、ChatGPT、GPT-4、CODEX和WebGPT。虽然早期的GPT模型,如GPT-1和GPT-2,是开源的,但最近的模型,如GPT-3和GPT-4,是闭源的,只能通过API访问。GPT-1和GPT-2模型已经在早期的PLM小节中讨论过。我们从下面的GPT-3开始。 |

GPT-3(175B):1750亿参数+被视为史上第一个LLM(因其大规模尺寸+涌现能力)+仅需少量演示直接用于下游任务而无需任何微调+适应各种NLP任务

| GPT-3 [56] is a pre-trained autoregressive language model with 175 billion parameters. GPT-3 is widely considered as the first LLM in that it not only is much larger than previous PLMs, but also for the first time demonstrates emergent abilities that are not observed in previous smaller PLMs. GPT3 shows the emergent ability of in-context learning, which means GPT-3 can be applied to any downstream tasks without any gradient updates or fine-tuning, with tasks and few-shot demonstrations specified purely via text interaction with the model. GPT-3 achieved strong performance on many NLP tasks, including translation, question-answering, and the cloze tasks, as well as several ones that require on-the-fly reasoning or domain adaptation, such as unscrambling words, using a novel word in a sentence, 3-digit arithmetic. Fig 9 plots the performance of GPT-3 as a function of the number of examples in in-context prompts | GPT-3 [56] 是一个具有1750亿个参数的预训练自回归语言模型。GPT-3被广泛认为是第一个LLM,因为它不仅比以前的PLM大得多,而且第一次展示了在以前较小的PLM中没有观察到的涌现能力。GPT3显示了上下文学习的涌现能力,这意味着GPT-3可以应用于任何下游任务,而无需任何梯度更新或微调,任务和少量演示完全通过与模型的文本交互指定。GPT-3在许多NLP任务上取得了很好的表现,包括翻译、问答和完形填空任务,以及一些需要即时推理或领域适应的任务,如拼凑单词、在句子中使用新单词、3位数算术。图9以上下文提示中示例数量作为函数绘制了GPT-3的性能。 |

CODEX(2023年3月,即Copilot的底层技术):通用编程模型,解析自然语言并生成代码作为响应,采用GPT-3+微调GitHub的代码语料库

| CODEX [57], released by OpenAI in March 2023, is a general-purpose programming model that can parse natural language and generate code in response. CODEX is a descendant of GPT-3, fine-tuned for programming applications on code corpora collected from GitHub. CODEX powers Microsoft’s GitHub Copilot. | CODEX[57]是OpenAI于2023年3月发布的通用编程模型,可以解析自然语言并生成代码作为响应。CODEX是GPT-3的后代,对从GitHub收集的代码语料库进行了微调,用于编程应用程序。CODEX为微软的GitHub Copilot提供支持。 |

WebGPT:采用GPT-3+基于文本的网络浏览器回答+三步骤(学习模仿人类浏览行为+利用奖励函数来预测人类的偏好+强化学习和拒绝抽样来优化奖励函数)

| WebGPT [58] is another descendant of GPT-3, fine-tuned to answer open-ended questions using a text-based web browser, facilitating users to search and navigate the web. Specifically, WebGPT is trained in three steps. The first is for WebGPT to learn to mimic human browsing behaviors using human demonstration data. Then, a reward function is learned to predict human preferences. Finally, WebGPT is refined to optimize the reward function via reinforcement learning and rejection sampling. | WebGPT[58]是GPT-3的另一个后代,经过微调后,可以使用基于文本的网络浏览器回答开放性问题,帮助用户搜索和浏览网络。具体来说,WebGPT的训练分为三个步骤。首先是WebGPT学习模仿人类浏览行为,使用人类演示数据。然后,学习奖励函数来预测人类的偏好。最后,通过强化学习和拒绝抽样来优化奖励函数,对WebGPT进行了改进。 |

InstructGPT:采用GPT-3+对人类反馈进行微调(RLHF对齐用户意图)+改善了真实性和有毒性

| To enable LLMs to follow expected human instructions, InstructGPT [59] is proposed to align language models with user intent on a wide range of tasks by fine-tuning with human feedback. Starting with a set of labeler-written prompts and prompts submitted through the OpenAI API, a dataset of labeler demonstrations of the desired model behavior is collected. Then GPT-3 is fine-tuned on this dataset. Then, a dataset of human-ranked model outputs is collected to further fine-tune the model using reinforcement learning. The method is known Reinforcement Learning from Human Feedback (RLHF), as shown in 10. The resultant InstructGPT models have shown improvements in truthfulness and reductions in toxic output generation while having minimal performance regressions on public NLP datasets. | InstructGPT:为了使LLM能够遵循预期的人类指令,提出了InstructGPT[59],通过对人类反馈进行微调,使语言模型在广泛的任务上与用户意图保持一致。从一组分词器编写的提示和通过OpenAI API提交的提示开始,收集所需模型行为的分词器演示数据集。然后GPT-3在这个数据集上进行微调。接下来,收集了一个包含人类排名模型输出的数据集,以进一步使用强化学习对模型进行微调。这种方法被称为基于人类反馈的强化学习(RLHF),如图10所示。所得到的InstructGPT模型在真实性方面有所改善,并且在减少有毒输出生成方面表现良好,同时在公共NLP数据集上具有最小的性能回归。 |

ChatGPT(2022年11月30日):一种聊天机器人+采用用户引导对话完成各种任务+基于GPT-3.5+为InstructGPT的兄弟模型

| The most important milestone of LLM development is the launch of ChatGPT (Chat Generative Pre-trained Transformer) [60] on November 30, 2022. ChatGPT is chatbot that enables users to steer a conversation to complete a wide range of tasks such as question answering, information seeking, text summarization, and more. ChatGPT is powered by GPT-3.5 (and later by GPT-4), a sibling model to InstructGPT, which is trained to follow an instruction in a prompt and provide a detailed response. | ChatGPT:LLM发展最重要的里程碑是在2022年11月30日推出ChatGPT(聊天生成预训练Transformer)[60]。ChatGPT是一种聊天机器人,它使用户能够引导对话完成各种任务,如问题回答、信息搜索、文本摘要等。ChatGPT是由GPT-3.5(后来又由GPT-4)提供支持的,它是InstructGPT的兄弟模型,经过训练可以在提示中遵循指令并提供详细的响应。 |

GPT-4(2023年3月):GPT家族中最新最强大的多模态LLM(呈现人类级别的性能)+基于大型文本语料库预训练+采用RLHF进行微调

| GPT-4 [33] is the latest and most powerful LLM in the GPT family. Launched in March, 2023, GPT-4 is a multimodal LLM in that it can take image and text as inputs and produce text outputs. While still less capable than humans in some of the most challenging real-world scenarios, GPT-4 exhibits human-level performance on various professional and academic benchmarks, including passing a simulated bar exam with a score around the top 10% of test takers, as shown in Fig 11. Like early GPT models, GPT-4 was first pre-trained to predict next tokens on large text corpora, and then fine-tuned with RLHF to align model behaviors with human-desired ones. | GPT-4[33]是GPT家族中最新最强大的LLM。GPT-4于2023年3月推出,是一种多模态LLM,可以接受图像和文本作为输入,并产生文本输出。虽然在一些最具挑战性的现实场景中仍然不如人类,但GPT-4在各种专业和学术基准测试中表现出人类级别的性能,包括在模拟的司法考试中获得了约前10%考生的成绩,如图11所示。与早期的GPT模型一样,GPT-4首先被预训练以预测大型文本语料库上的下一个标记,然后使用RLHF进行微调,使模型行为与人类期望的行为保持一致。 |

Fig. 11: GPT-4 performance on academic and professional exams, compared with GPT 3.5. Courtesy of [33].

LLaMA家族(主要由Meta开发+开源)

| 2) The LLaMA Family: LLaMA is a collection of foundation language models, released by Meta. Unlike GPT models, LLaMA models are open-source, i.e., model weights are released to the research community under a noncommercial license. Thus, the LLaMA family grows rapidly as these models are widely used by many research groups to develop better open-source LLMs to compete the closed-source ones or to develop task-specific LLMs for mission-critical applications. | 2) LLaMA家族: LLaMA是Meta发布的一系列基础语言模型。与GPT模型不同,LLaMA模型是开源的,也就是说,模型权重是在非商业许可下发布给研究社区的。因此,随着这些模型被许多研究小组广泛用于开发更好的开源LLM以与闭源LLM竞争,或者为关键任务应用程序开发特定于任务的LLM,LLaMA家族发展迅速。 |

Fig. 12: Training of LLaMA-2 Chat. Courtesy of [61].

Fig. 13: Relative Response Quality of Vicuna and a few other well-known models by GPT-4. Courtesy of Vicuna Team.

LLaMA1(2023年2月):预训练语料(公开可用+万亿级token)+参照GPT-3的Transformer架构+部分修改(SwiGLU替代ReLU/旋转位置嵌入替代绝对位置嵌入/均方根层归一化替代标准层归一化),良好基准

| The first set of LLaMA models [32] was released in February 2023, ranging from 7B to 65B parameters. These models are pre-trained on trillions of tokens, collected from publicly available datasets. LLaMA uses the transformer architecture of GPT-3, with a few minor architectural modifications, including (1) using a SwiGLU activation function instead of ReLU, (2) using rotary positional embeddings instead of absolute positional embedding, and (3) using root-mean-squared layernormalization instead of standard layer-normalization. The open-source LLaMA-13B model outperforms the proprietary GPT-3 (175B) model on most benchmarks, making it a good baseline for LLM research. | 第一套LLaMA模型[32]于2023年2月发布,参数从7B到65B不等。这些模型是在数万亿个令牌上进行预训练的,这些令牌是从公开可用的数据集中收集的。LLaMA使用了GPT-3的Transformer架构,并进行了一些小的架构修改,包括(1)使用SwiGLU激活函数而不是ReLU,(2)使用旋转位置嵌入而不是绝对位置嵌入,以及(3)使用均方根层归一化而不是标准层归一化。开源的LLaMA-13B模型在大多数基准测试中优于专有的GPT-3 (175B)模型,使其成为LLM研究的良好基准。 |

LLaMA2(2023年7月/Meta与微软合作):预训练(公开可用)+监督微调+模型优化(RLHF/拒绝抽样/最近邻策略优化)

| In July 2023, Meta, in partnership with Microsoft, released the LLaMA-2 collection [61], which include both foundation language models and Chat models finetuned for dialog, known as LLaMA-2 Chat. The LLaMA-2 Chat models were reported to outperform other open-source models on many public benchmarks. Fig 12 shows the training process of LLaMA-2 Chat. The process begins with pre-training LLaMA-2 using publicly available online data. Then, an initial version of LLaMA-2 Chat is built via supervised fine-tuning. Subsequently, the model is iteratively refined using RLHF, rejection sampling and proximal policy optimization. In the RLHF stage, the accumulation of human feedback for revising the reward model is crucial to prevent the reward model from being changed too much, which could hurt the stability of LLaMA model training. | 在2023年7月,Meta与微软合作发布了LLaMA-2系列[61],其中包括基础语言模型和针对对话进行微调的聊天模型,称为LLaMA-2 Chat。据报道,LLaMA-2 Chat模型在许多公共基准测试中优于其他开源模型。图12为LLaMA-2 Chat的训练过程。这个过程从使用公开的在线数据对LLaMA-2进行预训练开始。然后,通过监督微调构建LLaMA-2 Chat的初始版本。然后,使用RLHF、拒绝抽样和最近邻策略优化对模型进行迭代改进。在RLHF阶段,人为反馈对奖励模型修正的积累至关重要,以防止奖励模型变化过大,影响LLaMA模型训练的稳定性。 |

Alpaca:利用GPT-3.5生成52K指令数据+微调LLaMA-7B模型,比GPT-3.5相当但成本非常小

| Alpaca [62] is fine-tuned from the LLaMA-7B model using 52K instruction-following demonstrations generated in the style of self-instruct using GPT-3.5 (text-davinci-003). Alpaca is very cost-effective for training, especially for academic research. On the self-instruct evaluation set, Alpaca performs similarly to GPT-3.5, despite that Alpaca is much smaller. | Alpaca [62]是通过使用GPT-3.5 (text- davincii -003)以自我指导的方式生成的52K指令跟随演示,对LLaMA-7B模型进行微调的。Alpaca是非常划算的训练,尤其是学术研究。在自我指导评估集上,Alpaca的表现与GPT-3.5相似,尽管Alpaca要小得多。 |

Vicuna:从ShareGPT收集用户对话数据+微调利用LLaMA+采用GPT4评估,达到ChatGPT和Bard的90%性能但仅为300美元

| The Vicuna team has developed a 13B chat model, Vicuna13B, by fine-tuning LLaMA on user-shared conversations collected from ShareGPT. Preliminary evaluation using GPT4 as a evaluator shows that Vicuna-13B achieves more than 90% quality of OpenAI’s ChatGPT, and Google’s Bard while outperforming other models like LLaMA and Stanford Alpaca in more than 90% of cases. 13 shows the relative response quality of Vicuna and a few other well-known models by GPT-4. Another advantage of Vicuna-13B is its relative limited computational demand for model training. The training cost of Vicuna-13B is merely $300. | Vicuna团队开发了一个13B聊天模型,Vicuna13B,通过对LLaMA对从ShareGPT收集的用户共享对话进行微调。使用GPT4作为评估器进行的初步评估表明,Vicuna-13B的质量达到了OpenAI的ChatGPT和Google的Bard的90%以上,而在90%以上的情况下优于LLaMA和Stanford Alpaca等其他模型。图13为GPT-4对Vicuna和其他几个知名模型的相对响应质量。Vicuna-13B的另一个优点是它对模型训练的计算需求相对有限。Vicuna-13B的训练费用仅为300美元。 |

Guanaco:采用指令遵循数据+利用QLoRA微调LLaMA模型,单个48GB GPU上仅需24小时可微调65B参数模型,达到了ChatGPT的99.3%性能

| Like Alpaca and Vicuna, the Guanaco models [63] are also finetuned LLaMA models using instruction-following data. But the finetuning is done very efficiently using QLoRA such that finetuning a 65B parameter model can be done on a single 48GB GPU. QLoRA back-propagates gradients through a frozen, 4-bit quantized pre-trained language model into Low Rank Adapters (LoRA). The best Guanaco model outperforms all previously released models on the Vicuna benchmark, reaching 99.3% of the performance level of ChatGPT while only requiring 24 hours of fine-tuning on a single GPU. | 与Alpaca和Vicuna一样,Guanaco模型[63]也是使用指令遵循数据进行微调的LLaMA模型。但是,使用QLoRA进行微调非常有效,因此可以在单个48GB GPU上完成65B参数模型的微调。QLoRA通过冻结的4位量化预训练语言模型将梯度反向传播到低秩适配器(Low Rank Adapters, LoRA)。在Vicuna基准测试中,最好的Guanaco型号的性能超过了之前发布的所有型号,达到了ChatGPT性能水平的99.3%,而只需要在单个GPU上进行24小时的微调。 |

Koala:采用指令遵循数据(侧重交互数据)+微调LLaMA模型

| Koala [64] is yet another instruction-following language model built on LLaMA, but with a specific focus on interaction data that include user inputs and responses generated by highly capable closed-source chat models such as ChatGPT. The Koala-13B model performs competitively with state-of-the-art chat models according to human evaluation based on realworld user prompts. | Koala[64]是另一个基于LLaMA的指令遵循语言模型,但它特别关注交互数据,包括用户输入和由功能强大的闭源聊天模型(如ChatGPT)生成的响应。根据基于真实用户提示的人类评估,Koala-13B模型在与最先进的聊天模型竞争时表现出色。 |

Mistral-7B:为了优越的性能和效率而设计,利用分组查询注意力来实现更快的推理,并配合滑动窗口注意力来有效地处理任意长度的序列

| Mistral-7B [65] is a 7B-parameter language model engineered for superior performance and efficiency. Mistral-7B outperforms the best open-source 13B model (LLaMA-2-13B) across all evaluated benchmarks, and the best open-source 34B model (LLaMA-34B) in reasoning, mathematics, and code generation. This model leverages grouped-query attention for faster inference, coupled with sliding window attention to effectively handle sequences of arbitrary length with a reduced inference cost. | Mistral-7B[65]是一种7b参数语言模型,设计用于优越的性能和效率。Mistral-7B在所有评估基准上优于最佳开源13B模型(LLaMA-2-13B),在推理、数学和代码生成方面优于最佳开源34B模型(LLaMA-34B)。该模型利用分组查询注意力来实现更快的推理,并配合滑动窗口注意力来有效地处理任意长度的序列,同时降低推理成本。 |

其它:Code LLaMA 、Gorilla、Giraffe、Vigogne、Tulu 65B、Long LLaMA、Stable Beluga

| The LLaMA family is growing rapidly, as more instructionfollowing models have been built on LLaMA or LLaMA2, including Code LLaMA [66], Gorilla [67], Giraffe [68], Vigogne [69], Tulu 65B [70], Long LLaMA [71], and Stable Beluga2 [72], just to name a few. | LLaMA家族正在迅速壮大,因为更多的指令跟随模型已经建立在LLaMA或LLaMA2上,包括Code LLaMA [66]、Gorilla [67]、Giraffe [68]、Vigogne [69]、Tulu 65B [70]、Long LLaMA [71]和Stable Beluga2 [72]等等。 |

PaLM家族(Google开发):

PaLM(2022年4月,2023年3月才对外公开):540B参数+基于Transformer+语料库(7800亿个令牌)+高效训练(采用Pathways系统在6144个TPU v4芯片)+与人类表现相当

| 3) The PaLM Family: The PaLM (Pathways Language Model) family are developed by Google. The first PaLM model [31] was announced in April 2022 and remained private until March 2023. It is a 540B parameter transformer-based LLM. The model is pre-trained on a high-quality text corpus consisting of 780 billion tokens that comprise a wide range of natural language tasks and use cases. PaLM is pre-trained on 6144 TPU v4 chips using the Pathways system, which enables highly efficient training across multiple TPU Pods. PaLM demonstrates continued benefits of scaling by achieving state-of-the-art few-shot learning results on hundreds of language understanding and generation benchmarks. PaLM540B outperforms not only state-of-the-art fine-tuned models on a suite of multi-step reasoning tasks, but also on par with humans on the recently released BIG-bench benchmark | 3) PaLM家族:PaLM (Pathways Language Model)家族是由Google开发的。第一款PaLM型号[31]于2022年4月公布,直到2023年3月才对外公开。它是一个基于Transformer的LLM,具有540B个参数。该模型是在一个高质量的文本语料库上进行预训练的,该语料库由7800亿个令牌组成,涵盖了广泛的自然语言任务和用例。PaLM使用Pathways系统在6144个 TPU v4芯片上进行预训练,从而实现跨多个TPU pod的高效训练。PaLM通过在数百种语言理解和生成基准上实现最先进的少量学习结果,展示了继续扩展的好处。PaLM540B不仅在一系列多步骤推理任务上优于最先进的微调模型,,而且在最近发布的BIG-bench基准测试中与人类表现相当。 |

Fig. 14: Flan-PaLM finetuning consist of 473 datasets in above task categories. Courtesy of [74].

U-PaLM:使用UL2R(使用UL2的混合去噪目标来持续训练)在PaLM上进行训练+可节省约2倍的计算资源

| The U-PaLM models of 8B, 62B, and 540B scales are continually trained on PaLM with UL2R, a method of continue training LLMs on a few steps with UL2’s mixture-of-denoiser objective [73]. An approximately 2x computational savings rate is reported. | 8B、62B和540B规模的U-PaLM模型正在不断地使用UL2R在PaLM上进行训练,UL2R是一种使用UL2的混合去噪目标来持续训练LLM的方法。据报道,这可以节省约2倍的计算资源。 |

Flan-PaLM:更多的任务(473个数据集/总共1836个任务)+更大的模型规模+链式思维数据

| U-PaLM is later instruction-finetuned as Flan-PaLM [74]. Compared to other instruction finetuning work mentioned above, Flan-PaLM’s finetuning is performed using a much larger number of tasks, larger model sizes, and chain-ofthought data. As a result, Flan-PaLM substantially outperforms previous instruction-following models. For instance, FlanPaLM-540B, which is instruction-finetuned on 1.8K tasks, outperforms PaLM-540B by a large margin (+9.4% on average). The finetuning data comprises 473 datasets, 146 task categories, and 1,836 total tasks, as illustrated in Fig 14. | U-PaLM后来被调整为Flan-PaLM[74]。与上面提到的其他指令调优工作相比,Flan-PaLM的微调使用了更多的任务、更大的模型规模和链式思维数据。因此,Flan-PaLM大大优于以前的指令遵循模型。例如,在1.8K任务上进行指令微调的FlanPaLM-540B的性能大大优于PaLM-540B(平均+9.4%)。调优数据包括473个数据集,146个任务类别,总共1836个任务,如图14所示。 |

PaLM-2:计算效率更高+更好的多语言和推理能力+混合目标,快速、更高效的推理能力

| PaLM-2 [75] is a more compute-efficient LLM with better multilingual and reasoning capabilities, compared to its predecessor PaLM. PaLM-2 is trained using a mixture of objectives. Through extensive evaluations on English, multilingual, and reasoning tasks, PaLM-2 significantly improves the model performance on downstream tasks across different model sizes, while simultaneously exhibiting faster and more efficient inference than PaLM. | 与其前身PaLM相比,PaLM-2[75]是一种计算效率更高的LLM,具有更好的多语言和推理能力。PaLM-2是使用混合目标进行训练的。通过对英语、多语言和推理任务的广泛评估,PaLM-2显著提高了不同模型规模下的下游任务性能,同时表现出比PaLM更快速、更高效的推理能力。 |

Med-PaLM(特定领域的PaLM(医学领域问题回答),基于PaLM的指令提示调优)→Med-PaLM 2(医学领域微调和集成提示改进)

| Med-PaLM [76] is a domain-specific PaLM, and is designed to provide high-quality answers to medical questions. Med-PaLM is finetuned on PaLM using instruction prompt tuning, a parameter-efficient method for aligning LLMs to new domains using a few exemplars. Med-PaLM obtains very encouraging results on many healthcare tasks, although it is still inferior to human clinicians. Med-PaLM 2 improves MedPaLM via med-domain finetuning and ensemble prompting [77]. Med-PaLM 2 scored up to 86.5% on the MedQA dataset (i.e., a benchmark combining six existing open question answering datasets spanning professional medical exams, research, and consumer queries), improving upon Med-PaLM by over 19% and setting a new state-of-the-art. | Med-PaLM[76]是一个特定领域的PaLM,旨在为医学问题提供高质量的答案。Med-PaLM使用指令提示调优在PaLM上进行调优,这是一种参数高效的方法,可以使用少量示例将LLM与新领域对齐。Med-PaLM在许多医疗保健任务中获得了非常令人鼓舞的结果,尽管它仍然不如人类临床医生。Med-PaLM 2通过医学领域微调和集成提示改进了Med-PaLM [77]。Med-PaLM 2在MedQA数据集上得分高达86.5%(即一个结合了六个现有开放问题回答数据集的基准测试,涵盖专业医学考试,研究和消费者查询),比Med-PaLM提高了超过19%,并设定了新的先进技术。 |

C. Other Representative LLMs其他代表性LLM

| In addition to the models discussed in the previous subsections, there are other popular LLMs which do not belong to those three model families, yet they have achieved great performance and have pushed the LLMs field forward. We briefly describe these LLMs in this subsection. | 除了前几节讨论的模型之外,还有其他流行的LLM,它们不属于这三个模型家族,但它们取得了很大的成绩,并推动了LLM领域的发展。我们在本小节中简要介绍这些LLM。 |

Fig. 15: comparison of instruction tuning with pre-train–finetune and prompting. Courtesy of [78].

Fig. 16: Model architecture details of Gopher with different number of parameters. Courtesy of [78].

Fig. 17: High-level model architecture of ERNIE 3.0. Courtesy of [81].

Fig. 18: Retro architecture. Left: simplified version where a sequence of length n = 12 is split into l = 3 chunks of size m = 4. For each chunk, we retrieve k = 2 neighbours of r = 5 tokens each. The retrieval pathway is shown on top. Right: Details of the interactions in the CCA operator. Causality is maintained as neighbours of the first chunk only affect the last token of the first chunk and tokens from the second chunk. Courtesy of [82].

Fig. 19: GLaM model architecture. Each MoE layer (the bottom block) is interleaved with a Transformer layer (the upper block). Courtesy of [84].

Fig. 20: Different OPT Models’ architecture details. Courtesy of [86].

Fig. 21: Sparrow pipeline relies on human participation to continually expand a training set. Courtesy of [90].

Fig. 22: An overview of UL2 pretraining paradigm. Courtesy of [92].

Fig. 23: An overview of BLOOM architecture. Courtesy of [93].

FLAN(通过指令调优来提高语言模型的零样本性能)、Gopher(探索基于Transformer的不同模型尺度上的性能+采用152个任务评估,280B个参数)、T0(将任何自然语言任务映射到人类可读提示形式)

| FLAN: In [78], Wei et al. explored a simple method for improving the zero-shot learning abilities of language models. They showed that instruction tuning language models on a collection of datasets described via instructions substantially improves zero-shot performance on unseen tasks. They take a 137B parameter pretrained language model and instruction tune it on over 60 NLP datasets verbalized via natural language instruction templates. They call this instruction-tuned model FLAN. Fig 15 provides a comparison of instruction tuning with pretrain–finetune and prompting. | FLAN:在[78]中,Wei等人探索了一种改进语言模型零样本学习能力的简单方法。他们表明,在通过指令描述的数据集集合上的指令调优语言模型大大提高了未见任务的零样本性能。他们采用了一个137B参数的预训练语言模型,并在60多个NLP数据集上进行了指令调整,这些数据集是通过自然语言指令模板口头描述的。他们称这个经过指令调整的模型为FLAN。图15比较了指令调整、预训练微调和提示之间的差异。 |

| Gopher: In [79], Rae et al. presented an analysis of Transformer-based language model performance across a wide range of model scales — from models with tens of millions of parameters up to a 280 billion parameter model called Gopher. These models were evaluated on 152 diverse tasks, achieving state-of-the-art performance across the majority. The number of layers, the key/value size, and other hyper-parameters of different model sizes are shown in Fig 16. | Gopher:在[79]中,Rae等人对基于Transformer的语言模型在各种模型尺度上的性能进行了分析——从具有数千万个参数的模型到一个名为Gopher的2800亿个参数的模型。这些模型在152个不同的任务上进行了评估,大多数任务的性能达到了最先进水平。图16显示了不同模型规模的层数、键/值大小和其他超参数。 |

| T0: In [80], Sanh et al. developed T0, a system for easily mapping any natural language tasks into a human-readable prompted form. They converted a large set of supervised datasets, each with multiple prompts with diverse wording. These prompted datasets allow for benchmarking the ability of a model to perform completely held-out tasks. Then, a T0 encoder-decoder model is developed to consume textual inputs and produces target responses. The model is trained on a multitask mixture of NLP datasets partitioned into different tasks. | T0:在[80]中,Sanh等人开发了T0,这是一个可以轻松地将任何自然语言任务映射到人类可读提示形式的系统。他们转换了一大批有监督数据集,每个数据集都有多个具有不同措辞的提示。这些提示数据集允许对模型执行完全未见任务的能力进行基准测试。然后,开发了一个T0编码器-解码器模型来处理文本输入并生成目标响应。该模型在多任务的NLP数据集上进行训练,这些数据集被分成不同的任务。 |

ERNIE 3.0(知识增强模型+融合了自回归网络和自编码网络+可轻松地适应自然语言理解和生成任务,4TB语料库/10B参数)、RETRO(基于与先前token的局部相似性,调节语料库中检索的文档块,增强了自回归语言模型,2T的token)、GLaM(采用一种稀疏激活的专家混合架构来扩展模型容量+1.2T参数)

| ERNIE 3.0: In [81], Sun et al. proposed a unified framework named ERNIE 3.0 for pre-training large-scale knowledge enhanced models. It fuses auto-regressive network and autoencoding network, so that the trained model can be easily tailored for both natural language understanding and generation tasks using zero-shot learning, few-shot learning or fine-tuning. They have trained ERNIE 3.0 with 10 billion parameters on a 4TB corpus consisting of plain texts and a large-scale knowledge graph. Fig 17 illustrates the model architecture of Ernie 3.0. | ERNIE 3.0:在[81]中,Sun等人提出了一个统一的框架ERNIE 3.0,用于预训练大规模的知识增强模型。它融合了自回归网络和自编码网络,以便训练后的模型可以轻松地适应自然语言理解和生成任务,使用零样本学习、少样本学习或微调。他们在由纯文本和大规模知识图组成的4TB语料库上训练了100亿个参数的ERNIE 3.0。图17展示了Ernie 3.0的模型架构。 |

| RETRO: In [82], Borgeaud et al. enhanced auto-regressive language models by conditioning on document chunks retrieved from a large corpus, based on local similarity with preceding tokens. Using a 2-trillion-token database, the RetrievalEnhanced Transformer (Retro) obtains comparable performance to GPT-3 and Jurassic-1 [83] on the Pile, despite using 25% fewer parameters. As shown in Fig 18, Retro combines a frozen Bert retriever, a differentiable encoder and a chunked cross-attention mechanism to predict tokens based on an order of magnitude more data than what is typically consumed during training. | RETRO:在[82]中,Borgeaud等人基于与先前标记的局部相似性,通过对从大型语料库中检索的文档块进行调节,增强了自回归语言模型。使用2万亿令牌数据库,检索增强Transformer (Retro)在Pile上获得与GPT-3和Jurassic-1[83]相当的性能,尽管使用的参数减少了25%。如图18所示,Retro结合了一个冻结的Bert检索器、一个可微的编码器和一个分块的交叉注意机制,以基于比训练期间典型消耗的数据量大一个数量级的数据来预测token。 |

| GLaM: In [84], Du et al. proposed a family of LLMs named GLaM (Generalist Language Model), which use a sparsely activated mixture-of-experts architecture to scale the model capacity while also incurring substantially less training cost compared to dense variants. The largest GLaM has 1.2 trillion parameters, which is approximately 7x larger than GPT3. It consumes only 1/3 of the energy used to train GPT-3 and requires half of the computation flops for inference, while still achieving better overall zero, one and few-shot performance across 29 NLP tasks. Fig 19 shows the high-level architecture of GLAM. | GLaM:在[84]中,Du等人提出了一系列名为GLaM(通用语言模型)的LLM,它们使用了一种稀疏激活的专家混合架构来扩展模型容量,同时与密集变体相比,训练成本大大降低。最大的GLaM具有1.2万亿参数,大约比GPT3大7倍。它的能耗仅为训练GPT-3所用能量的1/3,并且需要一半的计算FLOPS进行推断,同时在29个NLP任务中实现了更好的零、一和少样本性能。图19显示了GLAM的高级架构。 |

LaMDA(基于transformer的专门用于对话+能够查找外部知识来源+显著改善安全性和事实,137B参数/1.56T个单词)、OPT(仅解码器+与研究人员共享这些模型)、Chinchilla(提出模型大小与token个数的规模定律,70B参数)

| LaMDA: In [85], Thoppilan et al. presented LaMDA, a family of Transformer-based neural language models specialized for dialog, which have up to 137B parameters and are pre-trained on 1.56T words of public dialog data and web text. They showed that fine-tuning with annotated data and enabling the model to consult external knowledge sources can lead to significant improvements towards the two key challenges of safety and factual grounding. | LaMDA:在[85]中,Thoppilan等人提出了LaMDA,这是一组基于transformer的专门用于对话的神经语言模型,该模型具有多达137B个参数,并对公共对话数据和网络文本的1.56T个单词进行了预训练。 他们表明,使用带注释的数据进行微调,并使模型能够查找外部知识来源,可以显著改善安全性和事实基础这两个关键挑战。 |

| OPT: In [86], Zhang et al. presented Open Pre-trained Transformers (OPT), a suite of decoder-only pre-trained transformers ranging from 125M to 175B parameters, which they share with researchers. The OPT models’ parameters are shown in 20 | OPT:在[86]中,Zhang等人提出了开放预训练Transformer(OPT),这是一套仅解码器的预训练Transformer,参数范围从125M到175B,他们与研究人员共享这些模型。OPT模型参数见20 |

| Chinchilla: In [2], Hoffmann et al. investigated the optimal model size and number of tokens for training a transformer language model under a given compute budget. By training over 400 language models ranging from 70 million to over 16 billion parameters on 5 to 500 billion tokens, they found that for compute-optimal training, the model size and the number of training tokens should be scaled equally: for every doubling of model size the number of training tokens should also be doubled. They tested this hypothesis by training a predicted compute-optimal model, Chinchilla, that uses the same compute budget as Gopher but with 70B parameters and 4% more more data. | Chinchilla:在[2]中,Hoffmann等人研究了在给定计算预算下训练transformer语言模型的最佳模型大小和toekn数量。通过在5B至500B个toekn上训练超过400个语言模型,这些模型的参数范围从7000万到超过16B,他们发现,对于计算最优的训练,应该等比例地缩放模型大小和训练标记数量:模型大小每增加一倍,训练标记数量也应该增加一倍。他们通过训练一个预测的计算最优模型Chinchilla来验证这一假设,Chinchilla使用与Gopher相同的计算预算,但仅有70B个参数和多4%的数据。 |

Galactica(存储、组合和推理科学知识+训练数据【论文/参考资料/知识库等】)、CodeGen(基于自然语言和编程语言数据,16B参数+一个开放基准MTPB【由115个不同的问题集组成】)、AlexaTM(证明了多语言的seq2seq在混合去噪和因果语言建模(CLM)任务上比仅解码器更有效地少样本学习)

| Galactica: In [87], Taylor et al. introduced Galactica, a large language model that can store, combine and reason about scientific knowledge. They trained on a large scientific corpus of papers, reference material, knowledge bases and many other sources. Galactica performed well on reasoning, outperforming Chinchilla on mathematical MMLU by 41.3% to 35.7%, and PaLM 540B on MATH with a score of 20.4% versus 8.8% | Galactica: Taylor等人在[87]中介绍了Galactica,这是一个可以存储、组合和推理科学知识的大型语言模型。他们在大量科学论文、参考资料、知识库和许多其他来源上进行了训练。Galactica在推理方面表现出色,数学MMLU的表现超过Chinchilla,分别为41.3%和35.7%,在数学MATH方面与PaLM 540B相比,得分为20.4%对8.8%。 |

| CodeGen: In [88], Nijkamp et al. trained and released a family of large language models up to 16.1B parameters, called CODEGEN, on natural language and programming language data, and open sourced the training library JAXFORMER. They showed the utility of the trained model by demonstrating that it is competitive with the previous state-ofthe-art on zero-shot Python code generation on HumanEval. They further investigated the multi-step paradigm for program synthesis, where a single program is factorized into multiple prompts specifying sub-problems. They also constructed an open benchmark, Multi-Turn Programming Benchmark (MTPB), consisting of 115 diverse problem sets that are factorized into multi-turn prompts. | CodeGen:在[88]中,Nijkamp等人在自然语言和编程语言数据上训练并发布了一系列多达16.1B个参数的大型语言模型,称为CodeGen,并开源了训练库JAXFORMER。他们展示了经过训练的模型的实用性,证明它在HumanEval上的零样本Python代码生成方面与之前最先进的模型具有竞争力。他们进一步研究了程序合成的多步范式,其中一个程序被分解为指定子问题的多个提示。他们还构建了一个开放基准,名为多轮编程基准(MTPB),由115个不同的问题集组成,这些问题集被分解为多轮提示。 |

| AlexaTM: In [89], Soltan et al. demonstrated that multilingual large-scale sequence-to-sequence (seq2seq) models, pre-trained on a mixture of denoising and Causal Language Modeling (CLM) tasks, are more efficient few-shot learners than decoder-only models on various task. They trained a 20 billion parameter multilingual seq2seq model called Alexa Teacher Model (AlexaTM 20B) and showed that it achieves state-of-the-art (SOTA) performance on 1-shot summarization tasks, outperforming a much larger 540B PaLM decoder model. AlexaTM consist of 46 encoder layers, 32 decoder layers, 32 attention heads, and dmodel = 4096. | AlexaTM:在[89]中,Soltan等人证明了多语言大规模序列到序列(seq2seq)模型,在混合去噪和因果语言建模(CLM)任务上进行预训练,比仅解码器模型在各种任务上更有效地进行少样本学习。他们训练了一个200亿个参数的多语言seq2seq模型,称为Alexa教师模型(AlexaTM 20B),并表明它在单样本总结任务上达到了最先进的(SOTA)性能,优于更大的540B PaLM解码器模型。AlexaTM由46个编码器层,32个解码器层,32个注意头组成,dmodel = 4096。 |

Sparrow(一种信息搜索对话代理+更有帮助/更正确/更无害+RLHF+帮助人类评价代理行为)、Minerva(在通用自然语言数据上预训练+在技术内容上进一步训练+解决定量推理的困难)、MoD(将各种预训练范式结合在一起的预训练目标,即UL2框架)

| Sparrow: In [90], Glaese et al. presented Sparrow, an information-seeking dialogue agent trained to be more helpful, correct, and harmless compared to prompted language model baselines. They used reinforcement learning from human feedback to train their models with two new additions to help human raters judge agent behaviour. The high-level pipeline of Sparrow model is shown in Fig 21. | Sparrow:在[90]中,Glaese等人提出了Sparrow,这是一种信息搜索对话代理,与提示语言模型基线相比,它被训练得更有帮助、更正确、更无害。他们从人类反馈中使用强化学习来训练他们的模型,并添加了两个新功能,以帮助人类评价代理行为。Sparrow模型的高级流程如图21所示。 |

| Minerva: In [91], Lewkowycz et al. introduced Minerva, a large language model pretrained on general natural language data and further trained on technical content, to tackle previous LLM struggle with quantitative reasoning (such as solving mathematics, science, and engineering problems). | Minerva:在[91]中,Lewkowycz等人引入了Minerva,这是一个在通用自然语言数据上预训练,并在技术内容上进一步训练的大型语言模型,以解决以前LLM在定量推理方面的困难(如解决数学、科学和工程问题)。 |

| MoD: In [92], Tay et al. presented a generalized and unified perspective for self-supervision in NLP and show how different pre-training objectives can be cast as one another and how interpolating between different objectives can be effective. They proposed Mixture-of-Denoisers (MoD), a pretraining objective that combines diverse pre-training paradigms together. This framework is known as Unifying Language Learning (UL2). An overview of UL2 pretraining paradigm is shown in Fig 21. | MoD:在[92]中,Tay等人对NLP中的自监督提出了一个广义和统一的视角,并展示了不同的预训练目标是如何相互作用的,以及不同目标之间的插值是如何有效的。他们提出了混合去噪器(MoD),这是一种将各种预训练范式结合在一起的预训练目标。这个框架被称为统一语言学习(UL2)。UL2预训练范式概述如图21所示。 |

BLOOM(基于ROOTS语料库+仅解码器的Transformer,176B)、GLM(双语(中英文)预训练语言模型+对飙100B级别的GPT-3,130B)、Pythia(由16个LLM组成的套件)

| BLOOM: In [93], Scao et al. presented BLOOM, a 176B parameter open-access language model designed and built thanks to a collaboration of hundreds of researchers. BLOOM is a decoder-only Transformer language model trained on the ROOTS corpus, a dataset comprising hundreds of sources in 46 natural and 13 programming languages (59 in total). An overview of BLOOM architecture is shown in Fig 23. | BLOOM:在[93]中,Scao等人提出了BLOOM,这是一个由数百名研究人员合作设计和构建的176B参数的开放获取语言模型。BLOOM是在ROOTS语料库上训练的仅解码器的Transformer语言模型,ROOTS语料库是一个包含46种自然语言和13种编程语言(总共59种)的数百个源的数据集。BLOOM架构的概述如图23所示。 |

| GLM: In [94], Zeng et al. introduced GLM-130B, a bilingual (English and Chinese) pre-trained language model with 130 billion parameters. It was an attempt to open-source a 100B-scale model at least as good as GPT-3 (davinci) and unveil how models of such a scale can be successfully pretrained. | GLM: Zeng等人在[94]中介绍了GLM- 130b,这是一个包含130B个参数的双语(中英文)预训练语言模型。它试图开源至少与GPT-3(davinci)一样好的100B规模的模型,并揭示这种规模的模型如何成功地进行预训练。 |

| Pythia: In [95], Biderman et al. introduced Pythia, a suite of 16 LLMs all trained on public data seen in the exact same order and ranging in size from 70M to 12B parameters. We provide public access to 154 checkpoints for each one of the 16 models, alongside tools to download and reconstruct their exact training dataloaders for further study. | Pythia:在[95]中,Biderman等人介绍了Pythia,这是一个由16个LLM组成的套件,它们都是在完全相同的顺序上看到的公共数据上训练的,大小从70M到12B个参数不等。我们为16个模型中的每个模型提供154个检查点的公共访问,以及下载和重建其精确训练数据加载器的工具,以供进一步研究。 |

Orca(从GPT-4丰富的信号中学习+解释痕迹+多步思维过程,13B)、StarCoder(8K上下文长度,15B参数/1T的toekn)、KOSMOS(多模态大型语言模型+任意交错的模态数据)

| Orca: In [96], Mukherjee et al. develop Orca, a 13-billion parameter model that learns to imitate the reasoning process of large foundation models. Orca learns from rich signals from GPT-4 including explanation traces; step-by-step thought processes; and other complex instructions, guided by teacher assistance from ChatGPT. | Orca:在[96]中,Mukherjee等人开发了Orca,这是一个13B个参数的模型,可以学习模仿大型基础模型的推理过程。Orca从GPT-4丰富的信号中学习,包括解释痕迹;一步一步的思维过程;以及其他复杂的指导,由ChatGPT的教师协助指导。 |

| StarCoder: In [97], Li et al. introduced StarCoder and StarCoderBase. They are 15.5B parameter models with 8K context length, infilling capabilities and fast large-batch inference enabled by multi-query attention. StarCoderBase is trained on one trillion tokens sourced from The Stack, a large collection of permissively licensed GitHub repositories with inspection tools and an opt-out process. They fine-tuned StarCoderBase on 35B Python tokens, resulting in the creation of StarCoder. They performed the most comprehensive evaluation of Code LLMs to date and showed that StarCoderBase outperforms every open Code LLM that supports multiple programming languages and matches or outperforms the OpenAI code-cushman-001 model | StarCoder:在[97]中,Li等人介绍了StarCoder和StarCoderBase。它们是15.5B参数模型,具有8K上下文长度,填充能力和通过多查询注意机制实现的快速大批量推理。StarCoderBase是在来自The Stack的1T的toekn上进行培训练的,The Stack是一个具有检查工具和选择退出过程的许可许可GitHub存储库的大型集合。他们在35B Python令牌上对StarCoderBase进行了微调,从而创建了StarCoder。他们对迄今为止最全面的代码LLM进行了评估,结果表明,StarCoderBase优于所有支持多种编程语言的开放代码LLM,并匹配或优于OpenAI代码-cushman-001模型。 |

| KOSMOS: In [98], Huang et al. introduced KOSMOS-1, a Multimodal Large Language Model (MLLM) that can perceive general modalities, learn in context (i.e., few-shot), and follow instructions (i.e. zero-shot). Specifically, they trained KOSMOS-1 from scratch on web-scale multi-modal corpora, including arbitrarily interleaved text and images, image-caption pairs, and text data. Experimental results show that KOSMOS1 achieves impressive performance on (i) language understanding, generation, and even OCR-free NLP (directly fed with document images), (ii) perception-language tasks, including multimodal dialogue, image captioning, visual question answering, and (iii) vision tasks, such as image recognition with descriptions (specifying classification via text instructions). | KOSMOS:在[98]中,Huang等人介绍了KOSMOS-1,这是一种多模态大型语言模型(MLLM),可以感知一般模态,在上下文中学习(即few-shot),并遵循指令(即zero-shot)。具体来说,他们在网络规模的多模态语料库上从头开始训练KOSMOS-1,包括任意交错的文本和图像、图像-标题对和文本数据。实验结果表明,KOSMOS1在以下方面取得了令人印象深刻的表现: (i)语言理解、生成,甚至是无OCR的NLP(直接使用文档图像), (ii)感知语言任务,包括多模态对话、图像字幕、视觉问答,以及 (iii)视觉任务,如带有描述的图像识别(通过文本指令指定分类)。 |

Gemini(多模态模型,基于Transformer解码器+支持32k上下文长度,多个版本)

| Gemini: In [99], Gemini team introduced a new family of multimodal models, that exhibit promising capabilities across image, audio, video, and text understanding. Gemini family includes three versions: Ultra for highly-complex tasks, Pro for enhanced performance and deployability at scale, and Nano for on-device applications. Gemini architecture is built on top of Transformer decoders, and is trained to support 32k context length (via using efficient attention mechanisms). | Gemini:在[99]中,Gemini团队引入了一系列新的多模态模型,这些模型在图像、音频、视频和文本理解方面表现出了很好的能力。Gemini系列包括三个版本: Ultra用于高度复杂的任务, Pro用于增强性能和大规模部署能力,Nano用于设备上应用。 Gemini架构建立在Transformer解码器之上,经过训练支持32k上下文长度(通过使用有效的注意力机制)。 |

| Some of the other popular LLM frameworks (or techniques used for efficient developments of LLMs) includes InnerMonologue [100], Megatron-Turing NLG [101], LongFormer [102], OPT-IML [103], MeTaLM [104], Dromedary [105], Palmyra [106], Camel [107], Yalm [108], MPT [109], ORCA2 [110], Gorilla [67], PAL [111], Claude [112], CodeGen 2 [113], Zephyr [114], Grok [115], Qwen [116], Mamba [30], Mixtral-8x7B [117], DocLLM [118], DeepSeek-Coder [119], FuseLLM-7B [120], TinyLlama-1.1B [121], LLaMA-Pro-8B [122]. | 其他一些流行的LLM框架(或用于有效开发LLM的技术)包括InnerMonologue [100], Megatron-Turing NLG [101], LongFormer [102], OPT-IML [103], MeTaLM [104], Dromedary [105], Palmyra [106], Camel [107], Yalm [108], MPT [109], ORCA2 [110], Gorilla [67], PAL [111], Claude [112], CodeGen 2 [113], Zephyr [114], Grok [115], Qwen [116], Mamba [30], Mixtral-8x7B [117], DocLLM [118], DeepSeek-Coder [119], FuseLLM-7B [120], TinyLlama-1.1B [121], LLaMA-Pro-8B [122]. |

| Fig 24 provides an overview of some of the most representative LLM frameworks, and the relevant works that have contributed to the success of LLMs and helped to push the limits of LLMs. | 图24概述了一些最具代表性的LLM框架,以及为LLM的成功做出贡献并帮助突破LLM极限的相关工作。 |

III. HOW LLMS ARE BUILT如何构建LLMs

数据准备(收集、清理、去重等)→分词→模型预训练(以自监督的学习方式)→指令调优→对齐

| In this section, we first review the popular architectures used for LLMs, and then discuss data and modeling techniques ranging from data preparation, tokenization, to pre-training, instruction tuning, and alignment. Once the model architecture is chosen, the major steps involved in training an LLM includes: data preparation (collection, cleaning, deduping, etc.), tokenization, model pretraining (in a self-supervised learning fashion), instruction tuning, and alignment. We will explain each of them in a separate subsection below. These steps are also illustrated in Fig 25. | 在本节中,我们首先回顾用于LLM的流行架构,然后讨论从数据准备、分词到预训练、指令调优和对齐的数据和建模技术。一旦选择了模型架构,训练LLM所涉及的主要步骤包括:数据准备(收集、清理、去重等)、分词、模型预训练(以自监督的学习方式)、指令调优和对齐。我们将在下面单独的小节中解释它们。图25也说明了这些步骤。 |

Fig. 25: This figure shows different components of LLMs.

A. Dominant LLM Architectures主流LLM架构(即基于Transformer)

| The most widely used LLM architectures are encoder-only, decoder-only, and encoder-decoder. Most of them are based on Transformer (as the building block). Therefore we also review the Transformer architecture here. | 最广泛使用的LLM架构是仅编码器、仅解码器和编码器-解码器。它们中的大多数都基于Transformer(作为构建块)。因此,我们也在这里回顾一下Transformer架构。 |

Fig. 24: Timeline of some of the most representative LLM frameworks (so far). In addition to large language models with our #parameters threshold, we included a few representative works, which pushed the limits of language models, and paved the way for their success (e.g. vanilla Transformer, BERT, GPT-1), as well as some small language models. ♣ shows entities that serve not only as models but also as approaches. ♦ shows only approaches.

1) Transformer

最初是为使用GPU进行有效的并行计算而设计,核心是(自)注意机制,比递归和卷积机制更有效地捕获长期上下文