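The build and runtime environment of ORB_SLAM2_CUDA (https://github.com/thien94/ORB_SLAM2_CUDA) differs from that of stock ORB_SLAM2/ORB_SLAM3 only in OpenCV and cv_bridge, which need some changes. All other dependencies are the same.

The base OpenCV setup is the same as for vins-fusion-gpu: both need an OpenCV that is built and installed from source with the CUDA modules.

Here I deploy ORB_SLAM2_CUDA on an Orin NX that already has vins-fusion-gpu set up. The installed OpenCV is 3.4.1, built with the CUDA options enabled.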

Deploying OpenCV

Create a folder named opencv341 and download the 3.4.1 versions of opencv and opencv_contrib into it, so that opencv and opencv_contrib sit side by side inside opencv341.

git clone -b 3.4.1 https://github.com/opencv/opencv.git

git clone -b 3.4.1 https://github.com/opencv/opencv_contrib
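The OPENCV_EXTRA_MODULES_PATH value used in the cmake step is relative to opencv/build, so it only resolves when the two clones are siblings. A minimal sketch of a layout check follows; the helper name check_opencv_layout is hypothetical and not part of OpenCV.

```shell
# check_opencv_layout is a hypothetical helper, not part of OpenCV.
# It succeeds only if <root>/opencv and <root>/opencv_contrib/modules both
# exist, which is what ../../opencv_contrib/modules needs when seen from
# <root>/opencv/build.
check_opencv_layout() {
    [ -d "$1/opencv" ] && [ -d "$1/opencv_contrib/modules" ]
}

# Demo on a throwaway directory tree instead of a real checkout.
demo_root="$(mktemp -d)"
mkdir -p "$demo_root/opencv/build" "$demo_root/opencv_contrib/modules"
if check_opencv_layout "$demo_root"; then
    layout_status="ok"
else
    layout_status="broken"
fi
echo "layout: $layout_status"
rm -rf "$demo_root"
```

On a real checkout the call would be check_opencv_layout /path/to/opencv341, run before cmake.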

Then create a build folder inside the opencv folder and run the following command from it:

cmake -D CMAKE_BUILD_TYPE=RELEASE \
      -D CMAKE_INSTALL_PREFIX=/usr/local \
      -D WITH_CUDA=ON \
      -D CUDA_ARCH_BIN=7.2 \
      -D CUDA_ARCH_PTX="" \
      -D ENABLE_FAST_MATH=ON \
      -D CUDA_FAST_MATH=ON \
      -D WITH_CUBLAS=ON \
      -D WITH_LIBV4L=ON \
      -D WITH_GSTREAMER=ON \
      -D WITH_GSTREAMER_0_10=OFF \
      -D WITH_QT=ON \
      -D WITH_OPENGL=ON \
      -D CUDA_NVCC_FLAGS="--expt-relaxed-constexpr" \
      -D WITH_TBB=ON \
      -D OPENCV_EXTRA_MODULES_PATH=../../opencv_contrib/modules \
      ../

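The path given to OPENCV_EXTRA_MODULES_PATH must match the actual location of opencv_contrib.

On the NX I used CUDA_ARCH_BIN=7.2. On other platforms this value has to be changed to match the GPU.

CMAKE_INSTALL_PREFIX is the directory that sudo make install actually installs into. Changing this path changes where OpenCV gets installed.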
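As a sketch of how CUDA_ARCH_BIN could be chosen per board: the mapping below uses the compute capabilities NVIDIA lists for Xavier-class (7.2) and Orin-class (8.7) Jetson modules. That table is my addition, not something taken from the build above, and the helper name and module labels are hypothetical. Confirm the value for your own device before building.

```shell
# cuda_arch_bin_for is a hypothetical helper. The module labels it accepts are
# names chosen for this sketch, not identifiers reported by any NVIDIA tool.
cuda_arch_bin_for() {
    case "$1" in
        xavier-nx|agx-xavier) echo "7.2" ;;
        orin-nx|orin-nano|agx-orin) echo "8.7" ;;
        *) echo "unknown module: $1" >&2; return 1 ;;
    esac
}

arch="$(cuda_arch_bin_for xavier-nx)"
# Print the option instead of running cmake, so the sketch has no side effects.
echo "-D CUDA_ARCH_BIN=$arch"
```

The printed option can then be pasted into the cmake command in place of the fixed value.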

Once the command above finishes, run:

make -j8

sudo make install

After installation, open jtop. The OpenCV 3.4.1 entry shows YES after "with CUDA", which means OpenCV 3.4.1 was installed with the CUDA modules and can use GPU acceleration.

cv_bridge

Download the cv_bridge source, then build and install it from source:

mkdir -p cv_bridge_ws/src

cd cv_bridge_ws/src

git clone https://gitee.com/bingobinlw/cv_bridge

cd ..

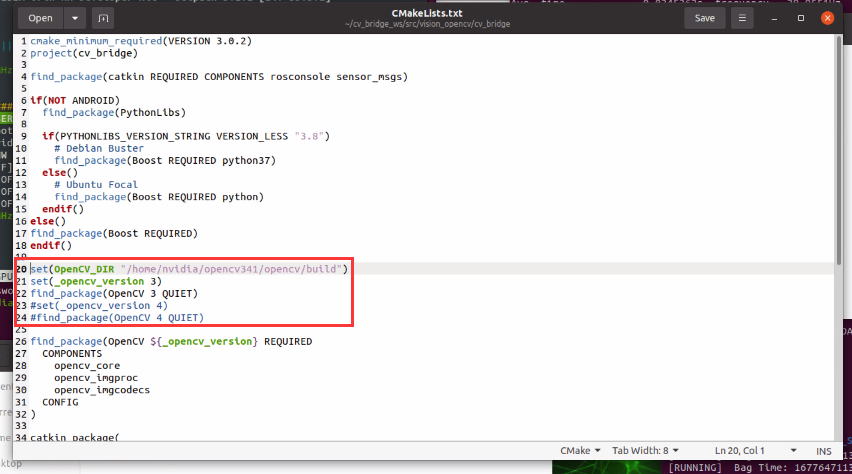

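Add the path of the build directory produced by the OpenCV build to cv_bridge's CMakeLists.txt. I suspect this step could be skipped, since the system should already find OpenCV 3.4.1 on its own, but I add it to be safe.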

set(OpenCV_DIR "your-path/opencv/build")

If the file contains find_package(OpenCV 4 QUIET), change it to find_package(OpenCV 3 QUIET). In short, make sure cv_bridge finds and links against OpenCV 3.4.1, not OpenCV 4.
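The find_package change can also be scripted. This is a minimal sketch, assuming GNU sed and a hypothetical helper name pin_opencv3. It is demonstrated on a scratch file rather than the real cv_bridge CMakeLists.txt.

```shell
# pin_opencv3 is a hypothetical helper name. It rewrites the OpenCV 4 request
# in the given CMakeLists.txt to OpenCV 3 and leaves every other line untouched.
# Assumes GNU sed (for -i without a suffix argument).
pin_opencv3() {
    sed -i 's/find_package(OpenCV 4 QUIET)/find_package(OpenCV 3 QUIET)/' "$1"
}

# Demo on a scratch file standing in for the real CMakeLists.txt.
demo_cmake="$(mktemp)"
printf '%s\n' 'find_package(OpenCV 4 QUIET)' 'find_package(Boost REQUIRED)' > "$demo_cmake"
pin_opencv3 "$demo_cmake"
cat "$demo_cmake"
rm -f "$demo_cmake"
```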

catkin_make

Add the cv_bridge workspace to .bashrc so that the system finds this cv_bridge package automatically:

echo "source ~/cv_bridge_ws/devel/setup.bash" >> ~/.bashrc

source ~/.bashrc
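One caveat with echo ... >> ~/.bashrc is that re-running it appends the same line again. A minimal sketch of an append that skips lines already present follows; append_once is a hypothetical helper name, and the demo writes to a scratch file instead of ~/.bashrc.

```shell
# append_once is a hypothetical helper: it appends line $1 to file $2 only if
# that exact line is not already present in the file.
append_once() {
    grep -qxF -- "$1" "$2" 2>/dev/null || printf '%s\n' "$1" >> "$2"
}

# Demo against a scratch file standing in for ~/.bashrc.
demo_rc="$(mktemp)"
append_once 'source ~/cv_bridge_ws/devel/setup.bash' "$demo_rc"
append_once 'source ~/cv_bridge_ws/devel/setup.bash' "$demo_rc"
# Count how many times the line ended up in the file.
rc_count="$(grep -cxF 'source ~/cv_bridge_ws/devel/setup.bash' "$demo_rc")"
echo "occurrences: $rc_count"
rm -f "$demo_rc"
```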

Deploying Pangolin

Pangolin provides the visualization.

git clone -b v0.6 https://gitee.com/maxibooksiyi/Pangolin

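Then install Pangolin's dependencies. To be safe I did not run the install script directly, in case it upgraded other software. In practice most of the packages below were already installed.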

sudo apt install libgl1-mesa-dev

sudo apt install libglew-dev

sudo apt install libpython2.7-dev

sudo apt install pkg-config

sudo apt install libegl1-mesa-dev libwayland-dev libxkbcommon-dev wayland-protocols

Then build and install:

cd Pangolin

mkdir build

cd build

cmake ..  # some guides use cmake -DCPP11_NO_BOOST=1 .. instead; I have not verified the difference

make

sudo make install

sudo ldconfig  # this step is easy to forget

Installing Eigen

Eigen can be installed from the binary package:

sudo apt-get install libeigen3-dev

Building ORB_SLAM2_CUDA

git clone https://github.com/thien94/ORB_SLAM2_CUDA

Run build.sh.

The build fails with a compile error.

Following https://blog.csdn.net/For_Air_/article/details/135707182, change this typedef in LoopClosing.h:

typedef map<KeyFrame*,g2o::Sim3,std::less<KeyFrame*>,

Eigen::aligned_allocator<std::pair<const KeyFrame*, g2o::Sim3> > > KeyFrameAndPose;

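to the following, so that the allocator's pair type matches the map's value_type (for a KeyFrame* key, the const qualifies the pointer itself):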

typedef map<KeyFrame*,g2o::Sim3,std::less<KeyFrame*>,

Eigen::aligned_allocator<std::pair<KeyFrame *const, g2o::Sim3> > > KeyFrameAndPose;
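The same edit can be applied with a guarded one-liner, which is handy when rebuilding from a fresh clone. This is a sketch only: patch_keyframe_allocator is a hypothetical helper name, it assumes GNU sed, and the location of LoopClosing.h in your checkout has to be supplied by you.

```shell
# patch_keyframe_allocator is a hypothetical helper name. It assumes GNU sed
# and takes the path to LoopClosing.h as its only argument.
patch_keyframe_allocator() {
    # Only touch the file if the old allocator pair type is still present.
    if grep -qF -- 'std::pair<const KeyFrame*, g2o::Sim3>' "$1"; then
        sed -i 's/std::pair<const KeyFrame\*, g2o::Sim3>/std::pair<KeyFrame *const, g2o::Sim3>/' "$1"
    fi
}

# Demo on a scratch copy of the two typedef lines.
demo_header="$(mktemp)"
printf '%s\n' \
    'typedef map<KeyFrame*,g2o::Sim3,std::less<KeyFrame*>,' \
    'Eigen::aligned_allocator<std::pair<const KeyFrame*, g2o::Sim3> > > KeyFrameAndPose;' \
    > "$demo_header"
patch_keyframe_allocator "$demo_header"
cat "$demo_header"
rm -f "$demo_header"
```

Because of the grep guard, running the helper a second time leaves an already patched file unchanged.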

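Next, build the ROS part.

First add the line below to .bashrc, or run it in the terminal where build_ros.sh will run. Otherwise the build reports that it cannot find the ORB_SLAM2_CUDA package.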

export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:/home/nvidia/ORB_SLAM2_CUDA/Examples/ROS
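Before building, it can help to confirm that the directory really is on ROS_PACKAGE_PATH in the current terminal. A minimal sketch, assuming a hypothetical helper name path_list_contains and the same /home/nvidia path as in the export line above:

```shell
# path_list_contains is a hypothetical helper: it succeeds if directory $1 is
# an entry of the colon-separated list $2.
path_list_contains() {
    case ":$2:" in
        *":$1:"*) return 0 ;;
        *) return 1 ;;
    esac
}

ros_dir="/home/nvidia/ORB_SLAM2_CUDA/Examples/ROS"
if path_list_contains "$ros_dir" "${ROS_PACKAGE_PATH:-}"; then
    echo "already on ROS_PACKAGE_PATH"
else
    echo "missing: export ROS_PACKAGE_PATH=\${ROS_PACKAGE_PATH}:$ros_dir"
fi
```

Wrapping the list in colons makes the match exact per entry, so /a does not match an entry /a/b.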

Then run build_ros.sh.

Following https://blog.csdn.net/weixin_44457432/article/details/130380189,

add the line ADD_COMPILE_OPTIONS(-std=c++14 ) to CMakeLists.txt.

Run build_ros.sh again and the build completes.

Running ORB_SLAM2_CUDA

Add a RealSense_D435i.yaml under ORB_SLAM2_CUDA/Examples/ROS/ORB_SLAM2_CUDA/conf.

Its content is taken from the D435i configuration file that ships with ORB_SLAM3:

%YAML:1.0

#--------------------------------------------------------------------------------------------

# Camera Parameters. Adjust them!

#--------------------------------------------------------------------------------------------

File.version: "1.0"

Camera.type: "PinHole"

# Rectified Camera calibration (OpenCV)

Camera.fx: 382.613

Camera.fy: 382.613

Camera.cx: 320.183

Camera.cy: 236.455

# Kannala-Brandt distortion parameters

Camera.k1: 0.0034823894022493434

Camera.k2: 0.0007150348452162257

Camera.p1: -0.0020532361418706202

Camera.p2: 0.00020293673591811182

# Right Camera calibration and distortion parameters (OpenCV)

Camera2.fx: 382.613

Camera2.fy: 382.613

Camera2.cx: 320.183

Camera2.cy: 236.455

# Kannala-Brandt distortion parameters

Camera2.k1: 0.0034003170790442797

Camera2.k2: 0.001766278153469831

Camera2.p1: -0.00266312569781606

Camera2.p2: 0.0003299517423931039

Stereo.b: 0.0499585

# stereo baseline times fx, i.e. Camera.fx * Stereo.b

Camera.bf: 19.13

# Camera resolution

Camera.width: 640

Camera.height: 480

# Camera frames per second

Camera.fps: 30

# Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)

Camera.RGB: 1

# Close/Far threshold. Baseline times.

Stereo.ThDepth: 40.0

# Transformation from body-frame (imu) to left camera

Tbc: !!opencv-matrix
   rows: 4
   cols: 4
   dt: f
   data: [1.0, 0.0, 0.0, -0.005,
         0.0, 1.0, 0.0, -0.005,
         0.0, 0.0, 1.0, 0.0117,
         0.0, 0.0, 0.0, 1.0]

# Do not insert KFs when recently lost

IMU.InsertKFsWhenLost: 0

# IMU noise (Use those from VINS-mono)

IMU.NoiseGyro: 1e-3 # 2.44e-4 #1e-3 # rad/s^0.5

IMU.NoiseAcc: 1e-2 # 1.47e-3 #1e-2 # m/s^1.5

IMU.GyroWalk: 1e-6 # rad/s^1.5

IMU.AccWalk: 1e-4 # m/s^2.5

IMU.Frequency: 200

#--------------------------------------------------------------------------------------------

# ORB Parameters

#--------------------------------------------------------------------------------------------

# ORB Extractor: Number of features per image

ORBextractor.nFeatures: 1250

# ORB Extractor: Scale factor between levels in the scale pyramid

ORBextractor.scaleFactor: 1.2

# ORB Extractor: Number of levels in the scale pyramid

ORBextractor.nLevels: 8

# ORB Extractor: Fast threshold

# Image is divided in a grid. At each cell FAST are extracted imposing a minimum response.

# Firstly we impose iniThFAST. If no corners are detected we impose a lower value minThFAST

# You can lower these values if your images have low contrast

ORBextractor.iniThFAST: 20

ORBextractor.minThFAST: 7

#--------------------------------------------------------------------------------------------

# Viewer Parameters

#--------------------------------------------------------------------------------------------

Viewer.KeyFrameSize: 0.05

Viewer.KeyFrameLineWidth: 1.0

Viewer.GraphLineWidth: 0.9

Viewer.PointSize: 2.0

Viewer.CameraSize: 0.08

Viewer.CameraLineWidth: 3.0

Viewer.ViewpointX: 0.0

Viewer.ViewpointY: -0.7

Viewer.ViewpointZ: -3.5

Viewer.ViewpointF: 500.0
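The comment on Camera.bf in the configuration above defines it as Camera.fx * Stereo.b. A quick check of that product with the listed values is sketched below. It comes out a little under the 19.13 written in the file, which is closer to what a rounded baseline of 0.05 would give. I have not tested whether the difference matters in practice.

```shell
fx=382.613          # Camera.fx from the configuration above
baseline=0.0499585  # Stereo.b from the configuration above

# LC_ALL=C keeps the decimal point a dot regardless of the system locale.
bf="$(LC_ALL=C awk -v fx="$fx" -v b="$baseline" 'BEGIN { printf "%.2f", fx * b }')"
echo "Camera.fx * Stereo.b = $bf"
# prints: Camera.fx * Stereo.b = 19.11
```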

Add a ros_mono_d435i.launch under ORB_SLAM2_CUDA/Examples/ROS/ORB_SLAM2_CUDA/launch:

<?xml version="1.0"?>

<launch>

<arg name="vocabularty_path" default="$(find ORB_SLAM2_CUDA)/../../../Vocabulary/ORBvoc.txt" />

<arg name="camera_setting_path" default="$(find ORB_SLAM2_CUDA)/conf/RealSense_D435i.yaml" />

<arg name="bUseViewer" default="true" />

<arg name="bEnablePublishROSTopic" default="true" />

<node name="ORB_SLAM2_CUDA" pkg="ORB_SLAM2_CUDA" type="Mono" output="screen"

args="$(arg vocabularty_path) $(arg camera_setting_path) $(arg bUseViewer) $(arg bEnablePublishROSTopic)">

</node>

</launch>

In ORB_SLAM2_CUDA/Examples/ROS/ORB_SLAM2_CUDA/src/ros_mono.cc, change the subscribed image topic to the D435i image topic. After saving the change, run build_ros.sh again to rebuild.

ros::Subscriber sub = nodeHandler.subscribe("/camera/infra1/image_rect_raw", 1, &ImageGrabber::GrabImage,&igb);

Then start ORB_SLAM2_CUDA with the following command:

roslaunch /path/to/ORB_SLAM2_CUDA/Examples/ROS/ORB_SLAM2_CUDA/launch/ros_mono_d435i.launch

Note that the line below must first be added to .bashrc, or run in the same terminal as the command above. Otherwise the ORB_SLAM2_CUDA package will not be found.

export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:/home/nvidia/ORB_SLAM2_CUDA/Examples/ROS

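Then start the D435i, or play back a D435i bag, and ORB_SLAM2_CUDA runs on the D435i data.

jtop also shows clear GPU utilization.

The terminal prints the processing time of each frame in real time.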

