CLIP

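Welcome to an open source implementation of OpenAI's CLIP (Contrastive Language-Image Pre-training).

Using this codebase, we have trained several models on a variety of data sources and compute budgets, ranging from small-scale experiments to larger runs, including models trained on datasets such as LAION-400M, LAION-2B and DataComp-1B. Many of our models and their scaling properties are studied in detail in the paper "Reproducible scaling laws for contrastive language-image learning". Some of the best models we've trained and their zero-shot ImageNet-1k accuracy are shown below, along with the ViT-L model trained by OpenAI and other state-of-the-art open source alternatives (all can be loaded via OpenCLIP). More details about our full collection of pretrained models, as well as zero-shot results on 38 datasets, are provided in the project documentation.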


| Model | Training data | Resolution | # of samples seen | ImageNet zero-shot acc. |
|---|---|---|---|---|
| ConvNext-Base | LAION-2B | 256px | 13B | 71.5% |
| ConvNext-Large | LAION-2B | 320px | 29B | 76.9% |
| ConvNext-XXLarge | LAION-2B | 256px | 34B | 79.5% |
| ViT-B/32 | DataComp-1B | 256px | 34B | 72.8% |
| ViT-B/16 | DataComp-1B | 224px | 13B | 73.5% |
| ViT-L/14 | LAION-2B | 224px | 32B | 75.3% |
| ViT-H/14 | LAION-2B | 224px | 32B | 78.0% |
| ViT-L/14 | DataComp-1B | 224px | 13B | 79.2% |
| ViT-G/14 | LAION-2B | 224px | 34B | 80.1% |
| ViT-L/14 (Original CLIP) | WIT | 224px | 13B | 75.5% |
| ViT-SO400M/14 (SigLIP) | WebLI | 224px | 45B | 82.0% |
| ViT-SO400M-14-SigLIP-384 (SigLIP) | WebLI | 384px | 45B | 83.1% |
| ViT-H/14-quickgelu (DFN) | DFN-5B | 224px | 39B | 83.4% |
| ViT-H-14-378-quickgelu (DFN) | DFN-5B | 378px | 44B | 84.4% |


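Model cards with additional model-specific details can be found on the Hugging Face Hub under the OpenCLIP library tag: https://huggingface.co/models?library=open_clip.

If you found this repository useful, please consider citing it. We welcome anyone to submit an issue or send an email if you have any other requests or suggestions.

Note that portions of the `src/open_clip/` modelling and tokenizer code are adaptations of OpenAI's official repository.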

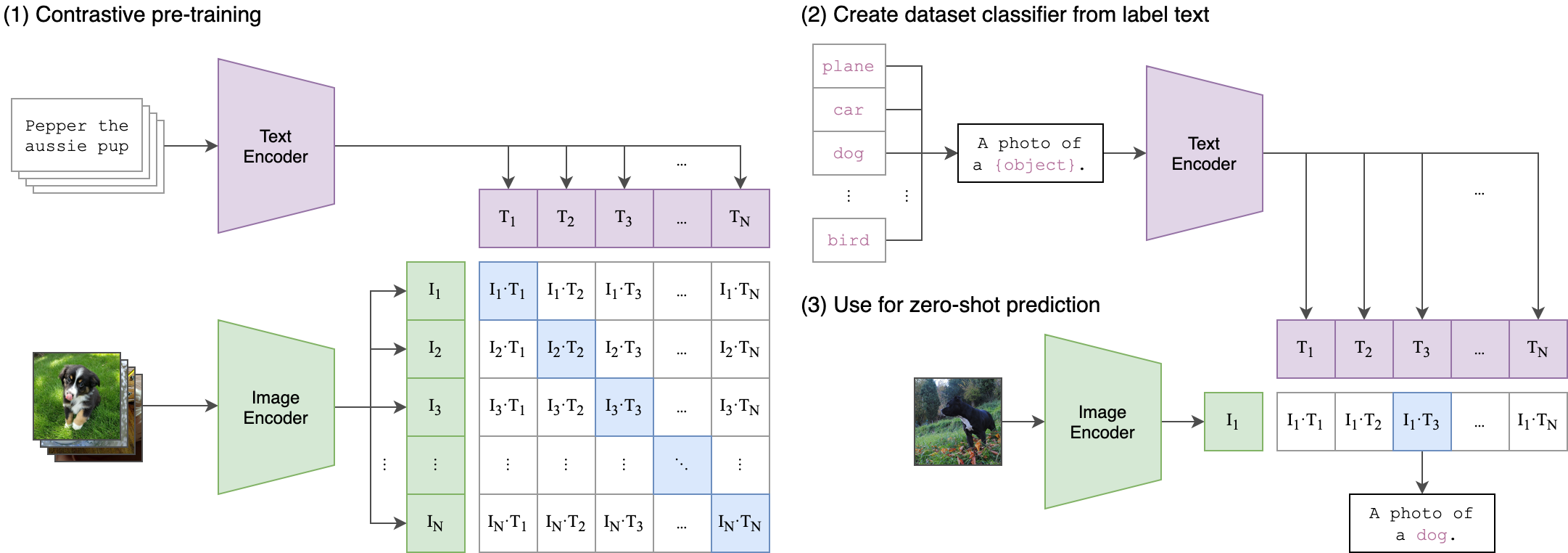

方法

| 图片来源:GitHub - openai/CLIP: CLIP (Contrastive Language-Image Pretraining), Predict the most relevant text snippet given an image |

Usage

```bash
pip install open_clip_torch
```

```python
import torch
from PIL import Image
import open_clip

model, _, preprocess = open_clip.create_model_and_transforms('ViT-B-32', pretrained='laion2b_s34b_b79k')
model.eval()  # model in train mode by default, impacts some models with BatchNorm or stochastic depth active
tokenizer = open_clip.get_tokenizer('ViT-B-32')

image = preprocess(Image.open("docs/CLIP.png")).unsqueeze(0)
text = tokenizer(["a diagram", "a dog", "a cat"])

with torch.no_grad(), torch.cuda.amp.autocast():
    image_features = model.encode_image(image)
    text_features = model.encode_text(text)
    image_features /= image_features.norm(dim=-1, keepdim=True)
    text_features /= text_features.norm(dim=-1, keepdim=True)

    text_probs = (100.0 * image_features @ text_features.T).softmax(dim=-1)

print("Label probs:", text_probs)  # prints: [[1., 0., 0.]]
```
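The scoring step in the example above L2-normalizes both embeddings, takes their dot products (cosine similarities), multiplies by a fixed scale of 100 and applies a softmax over the candidate captions. The pure-Python sketch below reproduces only that arithmetic, using made-up toy vectors in place of real `encode_image`/`encode_text` outputs; the helper names `l2_normalize`, `softmax` and `zero_shot_probs` are hypothetical and not part of OpenCLIP.

```python
import math
from typing import List, Sequence


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length, like features / features.norm(dim=-1, keepdim=True)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_probs(image_feat: Sequence[float],
                    text_feats: Sequence[Sequence[float]],
                    scale: float = 100.0) -> List[float]:
    """Cosine similarity between one image and each caption, scaled, then softmaxed."""
    img = l2_normalize(image_feat)
    logits = []
    for text_feat in text_feats:
        txt = l2_normalize(text_feat)
        cosine = sum(a * b for a, b in zip(img, txt))
        logits.append(scale * cosine)
    return softmax(logits)


# Toy 3-dimensional "embeddings" (hypothetical values, not real CLIP outputs).
image_embedding = [0.9, 0.1, 0.0]
caption_embeddings = [
    [1.0, 0.0, 0.0],  # stands in for "a diagram"
    [0.0, 1.0, 0.0],  # stands in for "a dog"
    [0.0, 0.0, 1.0],  # stands in for "a cat"
]
probs = zero_shot_probs(image_embedding, caption_embeddings)
print([round(p, 4) for p in probs])
```

The large scale factor sharpens the softmax, which is why even a modest gap in cosine similarity yields near one-hot probabilities like the `[[1., 0., 0.]]` printed by the real model.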

See also the Clip Colab notebook.

To compute billions of embeddings efficiently, you can use clip-retrieval, which has OpenCLIP support.

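Pretrained models

We offer a simple model interface to instantiate both pretrained and untrained models. To see which pretrained models are available, use the following code snippet. More details about our pretrained models are available in the project documentation.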

```python
>>> import open_clip
>>> open_clip.list_pretrained()
```
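`open_clip.list_pretrained()` returns (model name, pretrained tag) pairs, which are the two strings `create_model_and_transforms` takes. The sketch below groups such pairs by architecture; the helper `tags_by_model` is hypothetical, and the hard-coded sample pairs stand in for the real list so the snippet runs without OpenCLIP installed.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def tags_by_model(pairs: Iterable[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Group (model_name, pretrained_tag) pairs into {model_name: [tags...]}."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for model_name, tag in pairs:
        grouped[model_name].append(tag)
    return dict(grouped)


# Stand-in for open_clip.list_pretrained(); with OpenCLIP installed,
# pass the real list instead.
sample_pairs = [
    ("ViT-B-32", "openai"),
    ("ViT-B-32", "laion2b_s34b_b79k"),
    ("ViT-L-14", "openai"),
]
grouped = tags_by_model(sample_pairs)
print(grouped["ViT-B-32"])  # ['openai', 'laion2b_s34b_b79k']
```

With OpenCLIP installed, `tags_by_model(open_clip.list_pretrained())["ViT-B-32"]` would list the tags that can be passed as `pretrained=` for that architecture.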

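You can find more information about the models we support (e.g. number of parameters, FLOPs) in the model profile table in the project documentation.

NOTE: Many existing checkpoints use the QuickGELU activation from the original OpenAI models. This activation is actually less efficient than the native `torch.nn.GELU` in recent versions of PyTorch. The model defaults are now `nn.GELU`, so you should use model definitions with the `-quickgelu` suffix for OpenCLIP pretrained weights. All OpenAI pretrained weights will always default to QuickGELU. You can also use the non-`-quickgelu` model definitions with pretrained weights that were trained with QuickGELU, but accuracy will drop; when fine-tuning, this drop will likely vanish for longer runs. Future trained models will use `nn.GELU`.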
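To make the activation difference concrete: QuickGELU, as used in the original OpenAI CLIP code, computes x·σ(1.702x), while `torch.nn.GELU` with its default settings computes the exact x·Φ(x), where Φ is the standard normal CDF. The two curves are close but not identical, which is consistent with the accuracy drop described above when weights trained with one are run with the other. A pure-Python sketch comparing them (function names are illustrative):

```python
import math


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def quick_gelu(x: float) -> float:
    """QuickGELU: the sigmoid-based approximation x * sigmoid(1.702 * x)."""
    return x / (1.0 + math.exp(-1.702 * x))


# Largest absolute gap between the two activations on a grid over [-6, 6].
grid = [i / 100.0 for i in range(-600, 601)]
max_gap = max(abs(gelu(x) - quick_gelu(x)) for x in grid)
print(f"max |GELU - QuickGELU| on [-6, 6]: {max_gap:.4f}")
```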

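Loading models

Models can be loaded with `open_clip.create_model_and_transforms`, as shown in the example below. The model name and the corresponding `pretrained` keys are compatible with the output of `open_clip.list_pretrained()`.

The `pretrained` argument also accepts local paths, for example `/path/to/my/b32.pt`. You can also load checkpoints from Hugging Face this way. To do so, download the `open_clip_pytorch_model.bin` file (for example, https://huggingface.co/laion/CLIP-ViT-L-14-DataComp.XL-s13B-b90K/tree/main) and use `pretrained=/path/to/open_clip_pytorch_model.bin`.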

```python
import open_clip

# pretrained also accepts local paths
model, _, preprocess = open_clip.create_model_and_transforms('ViT-B-32', pretrained='laion2b_s34b_b79k')
```
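A minimal sketch of the local-path route, wrapped in a hypothetical helper `load_from_local_checkpoint` that checks the file exists before handing it to `create_model_and_transforms`. The architecture name must match the checkpoint; the DataComp checkpoint linked above is a ViT-L/14, so it would be loaded as `ViT-L-14`.

```python
from pathlib import Path


def load_from_local_checkpoint(model_name: str, checkpoint_path: str):
    """Hypothetical helper: fail fast on a bad path, then let OpenCLIP load the file."""
    path = Path(checkpoint_path).expanduser()
    if not path.is_file():
        # A clear error here is easier to debug than a failed lookup inside OpenCLIP.
        raise FileNotFoundError(f"checkpoint not found: {path}")
    # Imported lazily so the path check above works even without OpenCLIP installed.
    import open_clip
    model, _, preprocess = open_clip.create_model_and_transforms(model_name, pretrained=str(path))
    model.eval()  # switch from the default train mode before inference
    return model, preprocess
```

For example, `load_from_local_checkpoint('ViT-L-14', '/path/to/open_clip_pytorch_model.bin')` would load the downloaded DataComp weights.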

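Fine-tuning on classification tasks

This repository is focused on training CLIP models. To fine-tune a trained zero-shot model on a downstream classification task such as ImageNet, please see our other repository: WiSE-FT. The WiSE-FT repository contains code for our paper "Robust fine-tuning of zero-shot models", in which we introduce a technique for fine-tuning zero-shot models while preserving robustness under distribution shift.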
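The core of WiSE-FT, as described in that paper, is a weight-space ensemble: the zero-shot weights θ₀ and the fine-tuned weights θ₁ are linearly interpolated as θ_α = (1 - α)·θ₀ + α·θ₁ for a mixing coefficient α in [0, 1]. The sketch below applies that formula to plain dictionaries of floats standing in for a model's state dict; the function name `wise_ft_interpolate` is hypothetical, and the WiSE-FT repository remains the reference implementation.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]


def wise_ft_interpolate(zero_shot: Weights, fine_tuned: Weights, alpha: float) -> Weights:
    """Return (1 - alpha) * zero_shot + alpha * fine_tuned, parameter by parameter."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if zero_shot.keys() != fine_tuned.keys():
        raise ValueError("both models must have the same parameter names")
    mixed: Weights = {}
    for name, theta_0 in zero_shot.items():
        theta_1 = fine_tuned[name]
        mixed[name] = [(1.0 - alpha) * a + alpha * b for a, b in zip(theta_0, theta_1)]
    return mixed


# Toy "state dicts" with a single two-element parameter (hypothetical values).
zero_shot_weights = {"proj": [0.0, 2.0]}
fine_tuned_weights = {"proj": [1.0, 4.0]}
print(wise_ft_interpolate(zero_shot_weights, fine_tuned_weights, alpha=0.5))  # {'proj': [0.5, 3.0]}
```

α = 0 recovers the zero-shot model and α = 1 the fine-tuned one; with real PyTorch models, the same formula would be applied to each tensor in `state_dict()`.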

Data

To download datasets as webdataset, we recommend img2dataset.

Conceptual Captions

YFCC and other datasets

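In addition to specifying the training data via CSV files as mentioned above, our codebase also supports webdataset, which is recommended for larger-scale datasets. The expected format is a series of `.tar` files. Each of these `.tar` files should contain two files for each training example: one for the image and one for the corresponding text. Both files should have the same name but different extensions. For instance, `shard_001.tar` could contain files such as `abc.jpg` and `abc.txt`. You can learn more about webdataset at https://github.com/webdataset/webdataset. We use `.tar` files with 1,000 data points each, which we create using tarp.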
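To illustrate the shard layout described above, the sketch below writes a tiny shard with Python's standard `tarfile` module and reads it back, grouping members by their shared key (the file name up to its first dot, following the webdataset convention) so each image pairs with its caption. The helper names, the shard filename and the placeholder bytes are hypothetical; for real datasets, img2dataset or tarp create the shards and the `webdataset` library loads them.

```python
import io
import os
import tarfile
import tempfile
from collections import defaultdict
from typing import Dict


def write_shard(path: str, samples: Dict[str, Dict[str, bytes]]) -> None:
    """Write one tar shard; each sample becomes <key>.<ext> members."""
    with tarfile.open(path, "w") as tar:
        for key, files in samples.items():
            for ext, payload in files.items():
                info = tarfile.TarInfo(name=f"{key}.{ext}")
                info.size = len(payload)
                tar.addfile(info, io.BytesIO(payload))


def read_shard(path: str) -> Dict[str, Dict[str, bytes]]:
    """Group tar members by key so abc.jpg and abc.txt form one sample."""
    grouped: Dict[str, Dict[str, bytes]] = defaultdict(dict)
    with tarfile.open(path, "r") as tar:
        for member in tar.getmembers():
            if not member.isfile():
                continue
            dirname, base = os.path.split(member.name)
            stem, _, ext = base.partition(".")  # key = file name up to its first dot
            grouped[os.path.join(dirname, stem)][ext] = tar.extractfile(member).read()
    return dict(grouped)


with tempfile.TemporaryDirectory() as tmp:
    shard_path = os.path.join(tmp, "shard_001.tar")
    # Placeholder bytes stand in for real JPEG data.
    write_shard(shard_path, {"abc": {"jpg": b"<jpeg bytes>", "txt": b"a photo of a dog"}})
    samples = read_shard(shard_path)
    print(samples["abc"]["txt"].decode())  # a photo of a dog
```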

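You can download the YFCC dataset from Multimedia Commons. Similar to OpenAI, we used a subset of YFCC to reach the aforementioned accuracy numbers. The indices of images in this subset are in OpenAI's CLIP repository.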

Training CLIP

Install

We recommend first creating a virtual environment.
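One way to do this is Python's standard-library `venv` module, which is what `python3 -m venv <dir>` uses under the hood. The minimal sketch below wraps it in a hypothetical helper `create_env` that returns the path of the new interpreter; the directory name is up to you.

```python
import sys
import venv
from pathlib import Path


def create_env(env_dir: str, with_pip: bool = True) -> Path:
    """Create a virtual environment and return the path to its Python interpreter."""
    venv.create(env_dir, with_pip=with_pip)
    # venv places executables in Scripts/ on Windows and bin/ elsewhere.
    bin_dir = "Scripts" if sys.platform == "win32" else "bin"
    exe = "python.exe" if sys.platform == "win32" else "python"
    return Path(env_dir) / bin_dir / exe
```

For example, `create_env(".env")` creates an environment with pip installed; after activating it in your shell, OpenCLIP can be installed into it with `pip install open_clip_torch`.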
